Eye Tracking Virtual Reality

Conduct eye tracking studies in immersive environments to gauge respondents’ emotional responses.

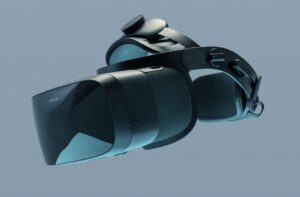

Varjo VR-3 sets a new standard for virtual reality headsets, with the industry’s highest resolution across the widest field of view (115°) and eye tracking at 200 Hz. At over 70 pixels per degree in the center of the field of view, even the smallest details and text can be read clearly in virtual reality. The display also covers 99% of the sRGB color space, so colors appear true to life.

The Varjo VR-3 eye tracking headset is compatible with the iMotions VR Eye Tracking Module.

| Specification | Details |
| --- | --- |
| Display and Resolution | Full Frame Bionic Display with human-eye resolution; focus area (27° × 27°) at 70 PPD, uOLED, 1920 × 1920 px per eye; peripheral area at over 30 PPD, LCD, 2880 × 2720 px per eye; colors: 99% sRGB, 93% DCI-P3 |
| Field of View | 115° horizontal |
| Eye Tracking | 200 Hz with sub-degree accuracy; 1-dot calibration for foveated rendering |
| Refresh Rate | 90 Hz |
| Hand Tracking | Ultraleap Gemini (v5) |
| Comfort and Wearability | 3-point precision-fit headband; replaceable, easy-to-clean polyurethane face cushions; automatic interpupillary distance adjustment (59–71 mm) |
| Weight | 558 g + 386 g headband |
| Dimensions | Width 200 mm, height 170 mm, length 300 mm |
| Connectivity | Two headset adapters (in box); two 5 m USB-C cables (in box); PC connections: 2 × DisplayPort and 2 × USB-A 3.0+ |
| Positional Tracking | SteamVR 2.0 (recommended) or 1.0 tracking system |
| Audio | 3.5 mm audio jack with microphone support |
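As a rough sanity check, a few headline figures in the table can be related with simple arithmetic. The sketch below assumes pixels are spread evenly across the 27° focus area, which real lens optics do not guarantee, so the result is an average rather than a measured value. The helper names `pixels_per_degree` and `interval_ms` are illustrative only and are not part of any Varjo or iMotions API.

```python
def pixels_per_degree(pixels: int, fov_degrees: float) -> float:
    """Average angular resolution, assuming pixels are spread evenly over the FOV."""
    return pixels / fov_degrees


def interval_ms(rate_hz: float) -> float:
    """Time between consecutive samples (or frames) in milliseconds."""
    return 1000.0 / rate_hz


# Focus area from the spec table: 1920 px across 27 degrees per eye.
focus_ppd = pixels_per_degree(1920, 27)

# Eye tracker at 200 Hz compared with the 90 Hz display refresh rate.
gaze_interval = interval_ms(200)
frame_interval = interval_ms(90)
samples_per_frame = frame_interval / gaze_interval

print(f"Focus-area resolution: {focus_ppd:.1f} PPD")
print(f"Gaze sample interval: {gaze_interval:.1f} ms")
print(f"Display frame interval: {frame_interval:.1f} ms")
print(f"Gaze samples per frame: {samples_per_frame:.2f}")
```

This gives roughly 71 PPD for the focus area, in line with the quoted 70 PPD. At 200 Hz the eye tracker delivers a new gaze sample every 5 ms, so each 90 Hz frame (about 11.1 ms) spans roughly two gaze samples, which is worth keeping in mind when aligning gaze data with what was on screen.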
