Introducing iMotions 10

We have re-imagined the way that you set up your studies to make the process more intuitive and less tedious.

Study setup

Introducing the Easy-to-Use Study Builder Wizard:

Experience an intuitive study builder tailored to your study type, ensuring you only see the options that matter to you. No more clutter – just a streamlined study design experience.

Auto-Detect Screen Size with Template Saving:

Let our software do the heavy lifting. It automatically detects screen sizes and allows you to save templates for future use, so setting up your research takes less effort.

Study Preview

True-to-life Previews:

Get peace of mind with true-to-presentation study previews, allowing you to check for appropriate screen sizes and more.

Block-by-Block Study Preview:

No more repeated full experiment runs. Preview your study block by block, saving you time.

Working with Stimuli

Easily Ensure Stimuli Consistency:

Easily achieve precision and maintain consistency across stimuli types with our “Stimuli Overview” feature.

Easy Stimuli Import:

Simply drag and drop from your file explorer, making it effortless to integrate external content into your studies.

Highlight and Hide Stimuli:

Easily locate and control stimuli within blocks. iMotions 10 provides multiple features to help you keep your experiments on track.

General

Redo and Undo Actions:

Stay in control with the ability to redo and undo actions, ensuring a smooth research process.

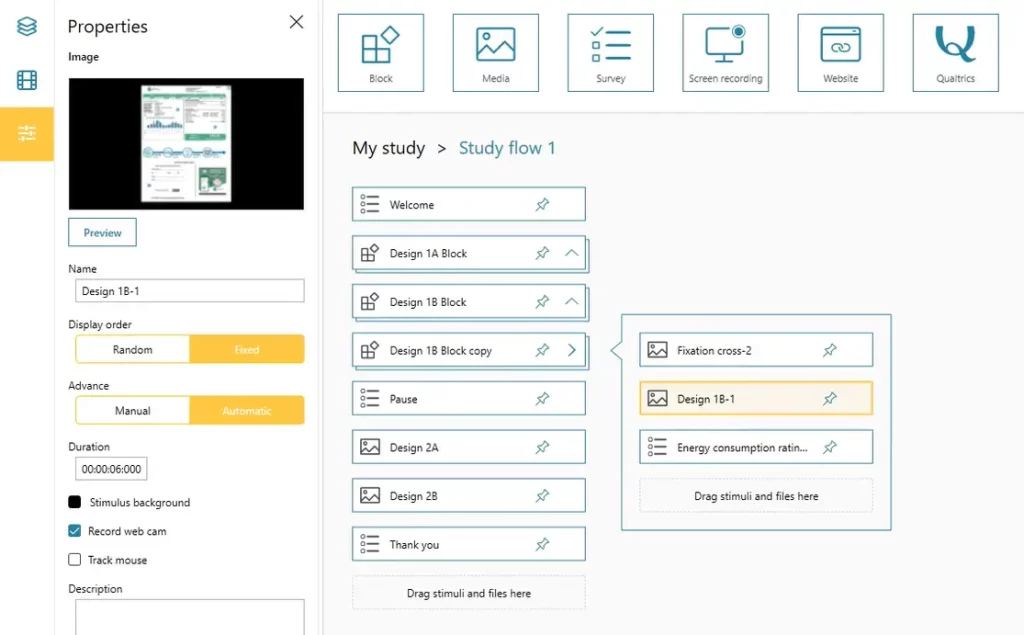

Seamless Drag-and-Drop:

Drag-and-drop functionality for stimuli and blocks provides simple and intuitive control over study setup and design.

Clean and Simple Study Settings:

Experience a simpler and cleaner study settings view, so you can more clearly view your study setup.

Bulk Editing Made Easy:

Effortlessly fine-tune time and position for multiple elements at once, saving you time and ensuring precision with your study setup.

A huge thanks to our beta testers

During the beta testing of iMotions 10, we got some great feedback from the testers, which helped us develop the new study builder and make it as user-friendly and functional as possible.

Here is what some of them had to say at the end of the beta test:

I recently had the opportunity to use iMotions 10, and I am thoroughly impressed with its newly redesigned study builder. Whether you’re an undergraduate student, a graduate student, a Ph.D. candidate, a researcher, or an industry professional, this version transforms your approach to complex studies. Its user-friendly interface simplifies the integration of multi-sensors and complex study designs, making these processes more accessible than ever. The intuitive ‘walk-up-and-use-it’ experience in iMotions 10 elegantly bridges the gap between ease of use and the complexity of data design, collection, and analysis; it brings a consumer-level ease of use to the typically intricate world of rigorous human behavior research. The redesigned study builder exemplifies how powerful human insight technologies can be seamlessly integrated and user-friendly for diverse research needs.

Dinko Bačić

Assistant Professor of Information Systems

UX & Biometrics Lab Founder & Primary Investigator

Loyola University Chicago

I have used the iMotions platforms for a variety of biometric research studies since 2019. I have always been pleased with the core value of the platform and modules, which in my view is the opportunity to create and conduct studies combining different sensors/technologies in one study. I have experienced how the platform has evolved and improved over the years. However, in my view the most recent iMotions 10 introduces the biggest and best upgrade so far – particularly in terms of a user-friendly design. The new interface is much more intuitive and easier to use. You quickly gain an overview of the study, and the processes involved with designing the study and subsequently analyzing the data is smoother. Larger studies are easier to manage with the better overviews this new interface offers.

Tine Juhl Wade

Senior Lecturer, NeuroLab Researcher & Coordinator

VIA University College, Campus Horsens

Ready to upgrade? Get the latest version of iMotions here.

Other features recently added to iMotions Lab

Voice Analysis

With iMotions 9.4 we are excited to introduce a brand new module: voice analysis. Powered by audEERING, the voice analysis module allows for analysis at every level of vocal production. Go deep into emotion analysis and collect data related to emotion detection (angry, happy, sad, neutral) and emotional dimensions (arousal, dominance, and valence). It’s also possible to explore fundamental voice features with metrics relating to prosody, including pitch, loudness, speaking rate, and intonation, as well as data regarding perceived gender and age. Any audio data, whether recorded within an experiment or simply imported into iMotions, can be processed with audEERING’s voice analysis algorithms. All data processing takes place offline on your own hardware, ensuring full control of the data.

This module can also be easily combined with the new speech-to-text feature to assess the semantic value of words alongside the valence related to their production.

Read more about voice analysis in our pocket guide here, and about the module here. You can also find documentation in our Help Center here, and information about voice analysis data here.

Speech-to-text

The integration of AssemblyAI’s speech recognition API into iMotions Lab enables users to import, transcribe, and analyze audio and video files. This feature supports multiple languages and offers capabilities like speaker detection, sentiment analysis, and speech summarization. Beneficial for diverse fields like academic research, market research, and customer experience, it allows comprehensive analysis of verbal data, aiding in deeper understanding of human communication and informed decision-making.

Read more about the feature here.

Emotional Heatmaps

We are excited to release emotional heatmaps: gaze plots that color-code which facial expressions your respondents made when looking at the different areas of an image or other static visual. Facial expression analysis is calculated and aggregated for each static stimulus, and any of those metrics can be selected to form the basis of the emotional heatmap. This can provide a quick, single overview of how participants’ facial expressions change while they view an image. You can find more information about implementing emotional heatmaps in the Help Center article here.

EEG intersubject correlation calculation

You can now calculate and export EEG intersubject correlation scores. This is available as a new R notebook, and provides insights into how well synchronized the EEG signals from multiple participants are. This metric is well established in research and is seeing increasing use within a range of new disciplines and industries. The metric is particularly valuable when assessing the shared level of engagement between participants while they watch or listen to stimuli. Examples of neural synchrony being applied in real-world research are available in the report here, and in the Help Center article here (customers only).
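To give a rough feel for what an intersubject correlation score expresses, here is a minimal Python sketch. It is not the iMotions R notebook and makes no claim about how that notebook works; it assumes a simplified definition (the mean pairwise Pearson correlation of one time-aligned signal per participant), and the function names `pearson` and `mean_pairwise_isc` are hypothetical. Published EEG intersubject correlation methods are typically more involved.

```python
from itertools import combinations
from math import sqrt
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long signals."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("signals must have the same length (at least 2 samples)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant signal")
    return cov / sqrt(var_x * var_y)


def mean_pairwise_isc(signals: Sequence[Sequence[float]]) -> float:
    """Average Pearson correlation over all participant pairs.

    Assumption for this sketch: each entry in `signals` is one participant's
    time-aligned signal (for example, a single EEG channel) recorded while
    all participants were exposed to the same stimulus.
    """
    pairs = list(combinations(signals, 2))
    if not pairs:
        raise ValueError("at least two participants are required")
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)
```

Under this simplified definition, a score near 1 means the participants' signals rise and fall together, while a score near 0 means there is little shared structure between them.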

Glasses improvements

A variety of improvements and additions have been made to several eye tracking glasses systems. This includes updated import options for Pupil Labs studies, helping to streamline the research workflow. Gyroscope, accelerometer, pitch, and roll data are now available for Neon by Pupil Labs. You can now also import both Raw Sensor Data and Timeseries Data downloaded from Pupil Cloud into iMotions, and it’s possible to re-import data from Pupil Cloud with fisheye correction. Additionally, the Viewpointsystem VPS19 glasses now support exporting .mp4 videos, and updates to the UI now provide clearer information when eye tracking glasses data is imported from the wrong folder, smoothing out study processes.

You can download our Eye Tracking Glasses Pocket Guide here.

WebET 3.0 brings big improvements to accuracy

With the update to WebET 3.0, our webcam eye tracking algorithm is now 4x more accurate than the previous version, and is also a lot more robust in different environments.

Upcoming Features and Improvements

Even though iMotions 10 marks a milestone in the development of iMotions Lab, there is still much more to come. General updates and improvements are released once or twice a month, and you can keep track of these in the release notes. Make sure to sign up for our newsletter to be alerted as soon as big new features are released.

Last updated 18 January 2024.