Similar Products

-

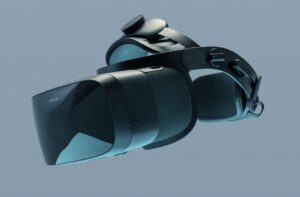

Varjo

Varjo XR-4 – Focal Edition

Eye tracking Virtual Reality

In StockCompare -

Varjo

Varjo XR-4

Eye tracking Virtual Reality

In StockCompare -

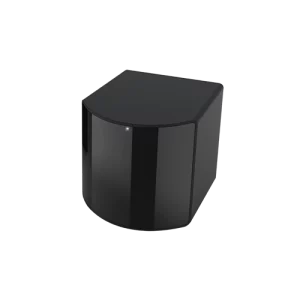

Vive

SteamVR Base Station 2.0

Eye tracking Virtual Reality

In Stock -

Varjo

Varjo XR-3

Eye tracking Virtual Reality

In StockCompare -

Varjo

Varjo VR-3

Eye tracking Virtual Reality

In StockCompare