We reached some fantastic milestones in 2022. We expanded our customer portfolio with many exciting new clients, including opening 155 academic labs this year. iMotions played a role in hundreds of peer-reviewed articles about human behavior research. We brought next-level webcam-based eye tracking to market. These, and so many other milestones, are reasons for celebration.

What underpins those successes is what excites us about 2023 – and beyond. Academic institutions are increasingly investing in human behavior research, both to give students hands-on experience with innovative technologies and to develop revenue-generating partnerships with commercial businesses, using our platform to yield a deeper understanding of the drivers behind human behavior. Market researchers are increasingly embracing online behavioral research, which allows them to study any consumer, anywhere and at any time. Meanwhile, biosensor data from smart devices and wearables brings new possibilities for longitudinal studies within healthcare.

Look a little deeper into these trends and a common thread appears: the unique capability to enable multimodal research in a single platform, designed for and with the help of academic scientists, with a mission to elevate the quantity and quality of human insights.

No single technology has a monopoly on the truth

Every single technology that we’ve integrated – and will continue to integrate – into our platform is great at revealing aspects of human behavior. EEG, skin conductance, facial expression analysis, and eye tracking all individually reveal new layers and make your data better and more nuanced. Pick any single one of these technologies, and it will provide valuable insights into psychophysiological signals that you cannot acquire through other means. However, the best research – containing insights that can be relied upon to help human-centered innovation, while supporting business, marketing, and operational decisions – includes measures from a combination of these technologies. We firmly believe that no single technology, or even research measure, has a monopoly on the truth. With one, you will get an insight, but with more, you will get the full picture.

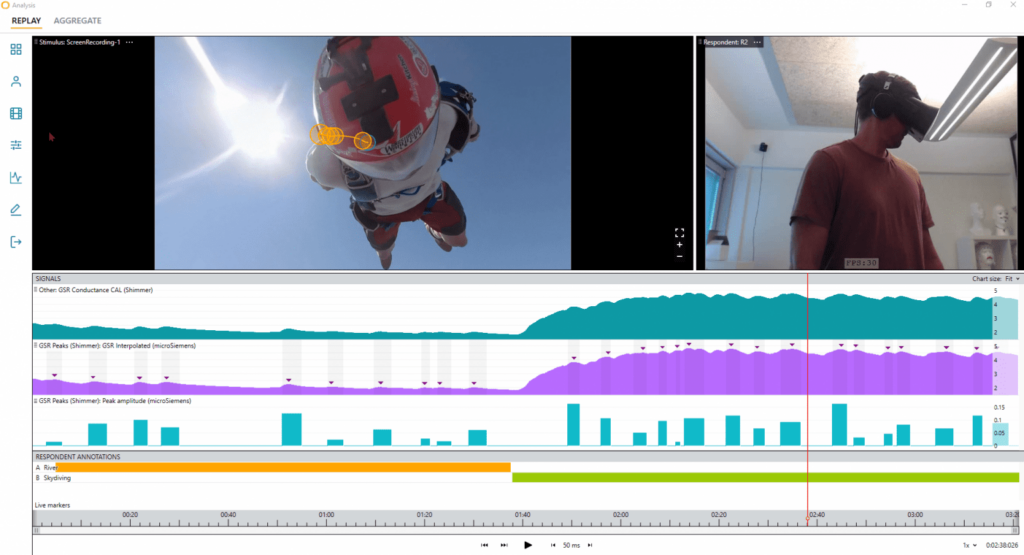

Eye tracking is fantastic at pinpointing exactly where someone is looking. Add in facial expression analysis and now you have a sense of which emotional expressions were evoked during every momentary gaze. Include skin conductance and you can understand the intensity of the physiological response they’re having in those moments. By layering the appropriate technologies into a study, you will gain deeper and more nuanced insights than you would with any single technology.
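To make the layering idea concrete, here is a minimal Python sketch of how three streams might be lined up on a shared timeline. It is an illustration only and not how the iMotions platform works internally: the `Fixation` class, the `latest_sample` and `layer_signals` helpers, the choice of the fixation midpoint as the lookup time, and all sample values are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Fixation:
    """One eye-tracking fixation (hypothetical structure for this sketch)."""
    start_ms: int
    end_ms: int
    aoi: str  # area of interest the gaze landed on


def latest_sample(samples, t_ms):
    """Return the value of the most recent (timestamp_ms, value) sample
    at or before t_ms, or None if no sample exists yet.
    Assumes samples are sorted by timestamp."""
    timestamps = [t for t, _ in samples]
    i = bisect_right(timestamps, t_ms)
    return samples[i - 1][1] if i else None


def layer_signals(fixations, expressions, conductance):
    """Attach the expression label and skin conductance reading that were
    current at the midpoint of each fixation."""
    rows = []
    for fx in fixations:
        midpoint = (fx.start_ms + fx.end_ms) // 2
        rows.append({
            "aoi": fx.aoi,
            "expression": latest_sample(expressions, midpoint),
            "conductance_uS": latest_sample(conductance, midpoint),
        })
    return rows


# Made-up example data: (timestamp in ms, value) pairs.
fixations = [Fixation(0, 400, "logo"), Fixation(400, 1000, "product")]
expressions = [(0, "neutral"), (500, "joy")]
conductance = [(0, 2.1), (250, 2.3), (750, 3.0)]

rows = layer_signals(fixations, expressions, conductance)
for row in rows:
    print(row)
```

Each row pairs where the respondent looked with what their face expressed and how strong the physiological response was at that moment. A real study would also need to handle sensor-specific sampling rates and response delays, which this sketch ignores.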

If you’re looking for in-depth explanations of each, we encourage you to explore further by reading our complete guides for Eye Tracking, Facial Expression Analysis, or Galvanic Skin Response. Or go here to download guides for all our integrated technologies.

Context is everything

Researchers know that each tool and technology has its strengths and its flaws, which reinforces not only the value of using them in combination but also the value of context. Eye tracking works under the assumption that attention correlates with where the eyes are fixated – it doesn’t take into account peripheral vision. Facial expression analysis only shows you what is expressed, which can be different from what is felt on the inside. Skin conductance responses usually occur with a time delay, making it impossible to directly pinpoint their cause, and they can fluctuate regardless of whether a reaction is positive or negative, or sometimes in the absence of external stimuli. Even EEG’s frontal alpha asymmetry, so often used in consumer neuroscience, is prone to individual differences, which must be kept in mind when designing a study. It’s essential to understand the assumptions that underlie each of the tools, and the limitations of what they can provide.

Throughout the journey towards multimodality, one thing has remained clear: humans are the central component. Data collection means nothing without the touch of human intelligence for analysis. Data is an essential part of any study, but it needs to be put into context. Data does not tell you “why”; it only tells you “that”. It will not tell you why a respondent reacts a certain way to a commercial, for example, but it will tell you that a respondent is reacting a certain way. The “why” is concluded from human-driven analysis.

One clear example lies in the difference between Facial Recognition and Facial Expression Analysis, which we detail in “Facial Recognition vs. Facial Expression Analysis – A case for responsible data collection.”

The necessity for human oversight, interpretation, and analysis is why we continue to build a team of highly educated neuroscientists, PhDs, and research experts who are able to bring scientific expertise to behavioral research. To say human behavior is complex is an understatement. It is because of this complexity that human behavior and decision-making cannot, and will likely never, be understood in a vacuum of non-contextualized data. It requires context to make accurate interpretations, analyses, and conclusions. And while emerging technologies are able to provide helpful and valid insights, they will never supersede the need for, and power of, human analysis.

It’s no surprise that it’s taken time for research to embrace the multiple sensor integration path. Developing each of these technologies and validating them as effective tools for researchers has taken time. Building understanding of, and belief in, the power of nonconscious biosensor technologies has been a hurdle to overcome when surveys for so long seemed to be good enough. The evolution of these technologies – particularly the speed, cost, and flexibility of sensors – has now allowed them to become a staple of everyday research, not just of specialized experiments.

If you want to read more about how biases create challenges in survey research alone, check out “What is bias? (a Field Guide to Scientific Research)”.

Looking towards the future, it’s an exciting time in which technologies will become even more advanced and open up unimaginable opportunities for our understanding of ourselves. This will allow us to become more adept at understanding the complexity of human behavior – at understanding the influences, emotions, and mental states that drive human behavior and decision-making. While we continue to forge this path, we must not stray from the fundamental truths that underlie multimodality and contextualization.