The face is in focus. Governments and businesses the world over are rapidly realizing that our faces tell far more than we are able to put into words. People’s reactions to stimuli can be too minute to perceive without digital assistance, and that has opened up new avenues of academic as well as commercial research. However, while most people can see the benefit of facial research, many are still unaware that there are two very distinct areas of facial research: Facial Recognition and Facial Expression Analysis. The two are very different in nature, even though both focus on the same physiological feature: the face.

It is important to note that while we at iMotions are leading experts in facial expression analysis software, we do not in any way offer or support facial recognition, for either academic or commercial use.

As is the case with all other research methodologies, there are some things to be mindful of when conducting and utilizing facial research. This blog post highlights some of the differences between Facial Recognition and Facial Expression Analysis, some of the ethical pitfalls, and best practices in data collection.

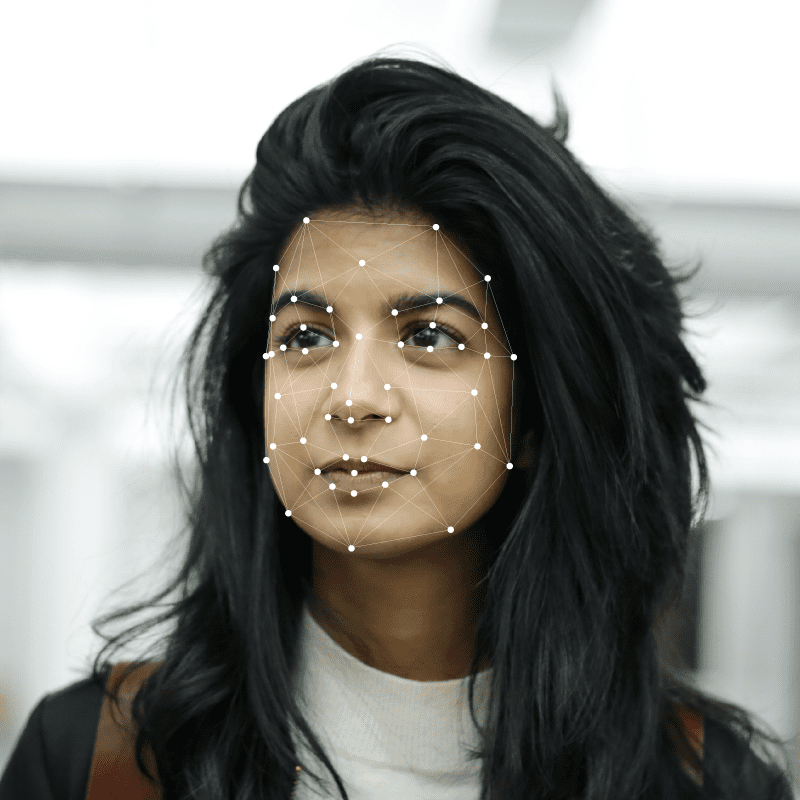

What is Facial Recognition?

Most people are familiar with the concept of facial recognition. It is one of the most widely used biometric authentication and identification methods, second only to the fingerprint, and it is integrated into a wide range of security procedures in our daily lives.

For example, unlocking a high-end smartphone may only require you to look at its camera, and many airports use facial recognition at security checkpoints. Some CCTV cameras running 24/7 in public areas even capture your unique features to ascertain your identity. Facial recognition is rapidly becoming more and more ubiquitous in society.

Without going into the intricacies of how facial recognition is coded, the software uses an algorithm to compare a person’s facial features against images stored in a large database. The technology essentially measures distinctive elements of a person’s face and matches them against the stored images in order to identify the person in question.
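Facial recognition systems typically reduce each face image to a numeric vector (an "embedding") and compare those vectors rather than raw pixels. The minimal sketch below assumes the embeddings already exist; the three-dimensional vectors, the `identify` function, and the 0.8 threshold are hypothetical values chosen for illustration, since real systems compute much longer embeddings with trained neural networks. It shows only the matching step: the probe face is compared against every stored face, and the best score either clears the threshold or it does not.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def identify(probe: list[float], gallery: dict[str, list[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Hypothetical matcher: return the stored identity most similar to the
    probe, or None if even the best score falls below the threshold."""
    best_id, best_score = None, -1.0
    for person_id, stored in gallery.items():
        score = cosine_similarity(probe, stored)
        if score > best_score:
            best_id, best_score = person_id, score
    return best_id if best_score >= threshold else None


# Hypothetical stored embeddings, one per enrolled person.
gallery = {
    "alice": [0.9, 0.1, 0.2],
    "bob": [0.1, 0.8, 0.5],
}
print(identify([0.88, 0.12, 0.21], gallery))  # alice
print(identify([0.5, 0.5, -0.7], gallery))    # None
```

Whatever the size of the database, the output is binary: an identity or no match.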

Though it is usually applied as a security measure, we are beginning to see facial recognition enabling corporations and governments to do a multitude of other things with the technology.

The Chinese company Alibaba has already made payment with facial recognition available in its stores in China. Just shoot your best smile to the cashier and be on your merry way. Until quite recently, facial recognition was mostly used as an identification tool, which you most likely know from unlocking your smartphone with your face, but that is changing rapidly, as the example above amply shows.

Ethical quandaries on the use of facial recognition

The use of facial recognition in mass surveillance raises some critical ethical issues. Key among these is privacy infringement, as it could enable unwarranted tracking and potential profiling. Potential misuse by authorities poses risks of power abuse and the targeting of specific groups, especially in the absence of strong regulatory frameworks. Additionally, weak data security and data breaches risk exposing sensitive personal information. If left unchecked, facial recognition used for mass surveillance can fundamentally alter public spaces, suppress free expression, and upset the balance between public safety and individual rights.

It is not only in surveillance that facial recognition technology can be misapplied. Facial recognition can, and often does, lend a false veneer of legitimacy to pseudoscience. By ostensibly providing ‘data-driven’ insights, it might be wrongly used to support baseless theories, such as predicting behavior or personality from facial features, echoing historical fallacies like phrenology under the guise of modern technology.

A good example of facial recognition being used for pseudoscience is the case of SciMatch.com. This dating app claims to use AI and deep-learning algorithms to match users based on facial analysis and inferred personality traits. However, its method echoes discredited theories such as physiognomy and phrenology and lacks scientific validation. Critics question its claimed 87% accuracy in personality matching, labeling it pseudoscientific and expressing concerns about its impact on genuine human connections.

These examples show some applications of facial recognition but also highlight its limits for research on human behavior. At its core, facial recognition is software that compares vast quantities of image data against a single image to produce a match. It reaches a binary conclusion: match or no match. It is not inconceivable that facial recognition could find use in research projects, but at best, its applicability will be limited. The opposite is true of facial expression analysis.

What is Facial Expression Analysis?

As with facial recognition, facial expression analysis (FEA) cares about your facial features, but not about the fact that they are attached to you specifically. FEA originates from the Facial Action Coding System (FACS), developed by psychologists Paul Ekman and Wallace V. Friesen in the 1970s. FACS works by breaking human expressions down into what are called Action Units, each of which corresponds to a specific muscle or muscle group in a person’s face. Modern incarnations of FEA use machine learning algorithms to analyze facial expressions with unprecedented accuracy and nuance.

Action Units allow researchers to classify the different expression patterns they observe in their respondents, which makes the subsequent analysis a lot easier. FEA therefore cannot, by itself, determine what a person is feeling at any given moment; it only allows researchers to categorize the activation of different Action Units, which can then be used to infer a participant’s emotional state.


At iMotions, we advise our users on how to best utilize facial expression analysis in their research. We make a point of instilling in them the importance of asking follow-up questions after the software has processed the data. We do this because no software is a perfect substitute for a human being. Thanks to our partner Affectiva, we have integrated automated Facial Action Coding System coding into our software, helping make it the industry-leading research tool it is today. However, it is ultimately a tool for researchers to strengthen their work, not to define it for them.

Emotions can be contextual

Have you ever seen a friend or a family member smile to themselves, and had to ask them why they were smiling? You could see them smile but had no idea of the connotation. Were they wistful, happy, nostalgic, or remembering a hilarious joke? It can be impossible to tell, which is why you had to follow up. The same goes for the data you get when measuring respondents with biosensors, because of the intricate ways in which we display emotion.

While it is true that computers equipped with a high-quality webcam can identify even more subtle expressions than a person might, how we display emotion is also highly context-dependent. People display emotion all the time, but when these emotions manifest on the face, we have only a finite number of muscles with which to show them, and the movements of those muscles are what the Facial Action Units described above capture.

As a result, some expressions share components. These overlapping components, along with idiosyncratic mannerisms, can create false positives in the data collection process. It is up to the researchers to weed out those false positives.

Otherwise, the research will become contaminated. If you are an animated public speaker, a computer might conclude that you are terrified when you are in fact passionately arguing a point. Wide-open eyes and an open mouth can indicate fear or shock, but they can just as well signal surprise.
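To illustrate how overlapping Action Units can produce an ambiguous reading, the sketch below scores an observed set of Action Units against a few emotion prototypes. The prototypes are simplified, illustrative combinations loosely based on commonly cited EMFACS-style patterns (for example, AU6 + AU12 for a smile of happiness); they are assumptions for this example, not the mapping used by any particular software. The `candidate_emotions` helper and its 0.5 overlap threshold are likewise hypothetical.

```python
# Simplified, illustrative emotion prototypes expressed as sets of FACS
# Action Unit (AU) numbers. These are assumptions for this sketch, loosely
# based on commonly cited EMFACS-style combinations, not a validated mapping.
#   AU1 inner brow raiser, AU2 outer brow raiser, AU4 brow lowerer,
#   AU5 upper lid raiser, AU6 cheek raiser, AU7 lid tightener,
#   AU12 lip corner puller, AU20 lip stretcher, AU26 jaw drop
PROTOTYPES: dict[str, set[int]] = {
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "happiness": {6, 12},
}


def candidate_emotions(observed_aus: set[int],
                       min_overlap: float = 0.5) -> dict[str, float]:
    """Hypothetical helper: return every prototype whose AUs are at least
    `min_overlap` present in the observation, highest overlap first."""
    scores: dict[str, float] = {}
    for emotion, prototype in PROTOTYPES.items():
        # Fraction of this prototype's AUs that were actually observed.
        overlap = len(observed_aus & prototype) / len(prototype)
        if overlap >= min_overlap:
            scores[emotion] = round(overlap, 2)
    return dict(sorted(scores.items(), key=lambda item: item[1], reverse=True))


# Raised brows (AU1, AU2), wide-open eyes (AU5) and an open mouth (AU26),
# as an animated speaker might show while arguing a point.
observed = {1, 2, 5, 26}
print(candidate_emotions(observed))  # {'surprise': 1.0, 'fear': 0.57}
```

The same four Action Units fully match the surprise prototype and also cover more than half of the fear prototype, so the coded data alone cannot say which emotion, if either, the speaker felt. That is where the researcher's follow-up becomes necessary.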

Several factors can induce false positives in your data. If a study also uses Galvanic Skin Response (GSR), which measures changes in the skin’s electrical conductance caused by sweat gland activity, then the mere fact that the sun comes out and warms up the room can register you as emotionally aroused, and this may show on the face as well. Such subtle confounds can produce false positives for specific emotions that the respondent is not actually experiencing.

As is the case with facial recognition, facial expression analysis can be, and already is being, automated with the help of machine learning into what has been dubbed “Emotion AI”. The idea of Emotion AI first came into prominence in 1995 with an article titled “Affective Computing” written by MIT scientist Rosalind Picard. The article argued that computer science was nearing the point where computers could recognize emotions and even, to an extent, “have emotions”.

One of the central points of the paper is that human emotion affects everything we do in our daily lives, from deciding what food to eat to making crucial personal and professional decisions. In order to create an AI that can best assist us, its ability to recognize affect is key. To create a sense of digital emotional understanding, emotion AI compiles and analyzes data from several different measurement sources, the most crucial of which is facial expression analysis. Together with eye tracking, physiological signals, and voice analysis, these sources theoretically allow a computer to approximate and analyze human emotion.


Automating the measurement of human emotion is, of course, not in itself a bad thing. There are, however, some things to be wary of when it comes to emotion AI, primarily when the AI is fully automated, or autonomous.

People do not all behave the same way in a given situation, both because we are all different and because we perceive situations differently, reacting with varying levels of stress, arousal, shock, happiness, or boredom that show on our faces in different ways.

We also interpret and react to situations differently depending on our cultural background. It would be a very bold claim to say that every person in the world acts the same, and one concern is that an autonomous AI might not be able to account for that. Again, it comes down to the practices involved in collecting the data. If results are not followed up on and verified by a researcher or data collector, they hold no scientific or real-world value.

The Ethical Use of Facial Data

It seems almost redundant to say that research should be conducted ethically and responsibly, but there are a couple of reasons to restate its importance here. Firstly, it is simply good research and data collection practice. Secondly, we are entering a mediascape with an increasing focus on the misuse of both Facial Recognition and Facial Expression Analysis as tools for surveillance, suppression, and the questionable collection and abuse of personal information.

Biometric research, especially research focusing on the face, is a contentious subject, and researchers conducting FEA research must be on a firm ethical footing. At iMotions, we have always maintained that data collection should be ethically sound, which means the following:

Always, always, obtain consent from the people you are measuring.

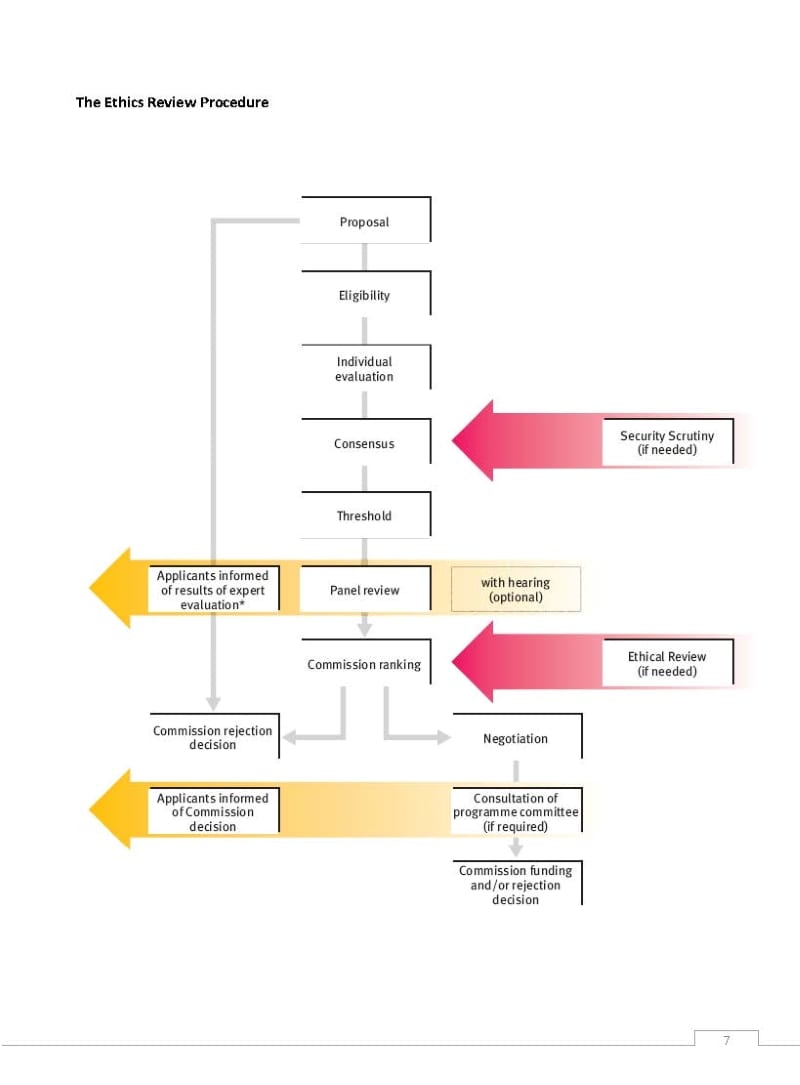

Conducting research on unwitting participants is not only a violation of an individual’s right to be informed; it also leaves the researcher with little more than qualified guesswork. As mentioned above, informed consent from all participants is central to any research involving human subjects. The European Commission’s “Ethics for Researchers” guideline states that informed consent “…implies that, prior to consenting to participation, participants should be clearly informed of the research goals, possible adverse events, possibilities to refuse participation or withdraw from the research, at any time, and without consequences.”

Source: The European Commission’s “Ethics for Researchers” guideline

This is especially important for biometric researchers now that many facial studies can be conducted through webcams, a research avenue that is opening up more and more. Due diligence should, of course, be exercised throughout the research process, which also means that whoever conducts the research or data collection must always follow up on respondents’ results to make sure that false positives are not accepted as findings.

Consent forms, NDAs, and other legal documents that help protect you and your participants from misuse of data build trust and transparency into your research. This relationship should not be ignored, as people become more aware of how their data is being collected and how their data rights may be affected in the near future.

Facial expression analysis is an incredibly powerful tool for biometric research, and many amazing studies are being conducted with it. Having an algorithm do the heavy processing reduces the time spent setting up and conducting research, thus allowing for large participant groups. The overarching thing to remember as a researcher is that you are the last stop in a long funnel of information, and it is ultimately your responsibility to collect the data ethically and draw conclusions from it responsibly for the purpose of your research, thus ensuring its scientific validity.