Welcome to the world of eye tracking, a fascinating journey into understanding how our eyes reveal much more than just what we see. Often referred to as the windows to the soul, our eyes offer a unique glimpse into human behavior, attention, and cognition. Whether you’re a student stepping into this intriguing field, a professional exploring new research methodologies, or simply curious about how eye tracking technology can unveil the unseen aspects of human perception, this guide is your starting point.

Eye tracking technology, once a tool reserved for high-end research labs, has now found its way into various aspects of our daily lives. From enhancing user experience in technology to advancing medical research, the applications of eye tracking are as diverse as they are groundbreaking. But what exactly is eye tracking? How does it work, and why is it so important in understanding human behavior?

In this comprehensive pocket guide, we’ll demystify eye tracking technology, breaking it down into simple, understandable concepts. You’ll discover the basic principles behind how we track eye movements, the different types of eye tracking devices, and the myriad ways this technology is being applied – from improving marketing strategies to developing life-changing assistive devices.

So, whether you’re writing a thesis, designing a new video game interface, or just satisfying your curiosity, join us on this eye-opening journey into the world of eye tracking. Let’s explore together how this remarkable technology is not just watching where we look, but also helping to shape the future.


Table of Contents

- The technology behind eye tracking

- Eye tracking devices

- Eye Tracking Glasses

- Gaze data accuracy

- 1. Neuroscience & psychology thrive on eye tracking

- 2. Eye tracking delivers unmatched value to market research

- 3. Simulation

- 4. Eye tracking can help gain deep insights with Human Computer Interaction (HCI)

- 5. Website testing

- 6. Learning & education can benefit from eye tracking

- 7. Eye tracking is used in medical research to study a wide variety of neurological and psychiatric conditions

- 8. Gaming and UX – why is eye tracking the big hit among web designers and developers?

- Eye tracking data

- Advanced eye tracking metrics

- The bottom line on eye tracking

- Get the most from eye tracking

- Best practices at a glance

- Best practices in eye tracking

- Choosing the right equipment

- Eye tracking software

- Eye tracking done right with iMotions

- Contact Us

- Eye Tracking

- Glossary

The technology behind eye tracking

– How exactly does eye tracking work?

Eye tracking use is on the rise. While early devices were highly intrusive and involved particularly cumbersome procedures to set up, modern eye trackers have undergone quite a technological evolution in recent years. Long gone are the rigid experimental setups and seating arrangements you might think of.

Modern eye trackers are hardly larger than smartphones and provide a very natural experience for respondents.

Remote, non-intrusive methods have made eye tracking an easy-to-use and accessible tool in human behavior research, allowing objective measurement of eye movements in real time.

The Technology

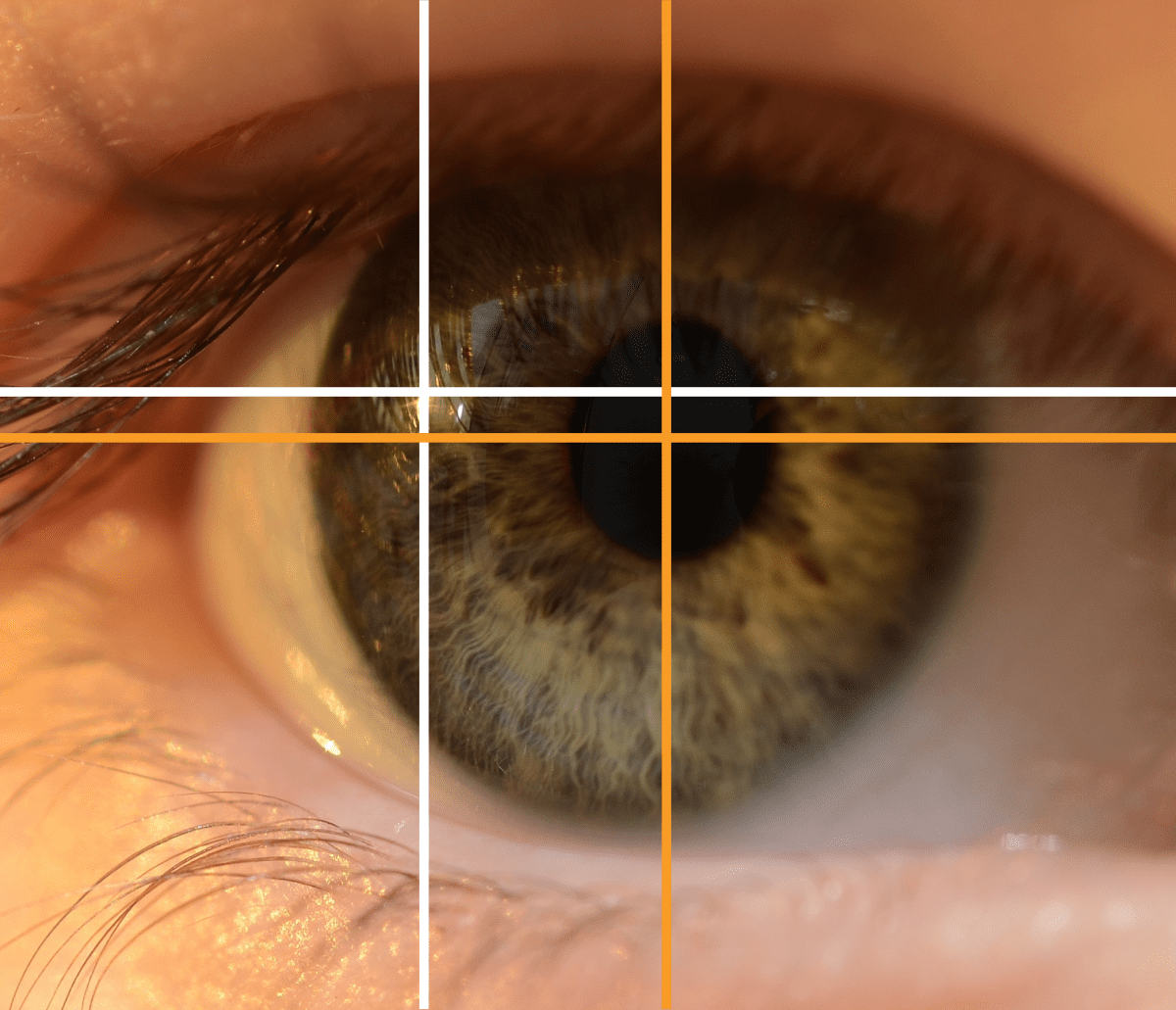

Most modern eye trackers utilize near-infrared technology along with a high-resolution camera (or other optical sensor) to track gaze direction. The underlying concept, commonly referred to as Pupil Center Corneal Reflection (PCCR), is actually rather simple.

In essence, the camera tracks the pupil center and the point where light reflects from the cornea. The image above shows what this looks like. The math behind it is … well, a bit more complex. We won’t bore you with the details of the algorithms at this point.

Image above: Pupil Center Corneal Reflection (PCCR). The light reflecting from the cornea and the center of the pupil are used to inform the eye tracker about the movement and direction of the eye.
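To make the PCCR idea a little more concrete, here is a minimal Python sketch of one common textbook approach; it is not the algorithm of any particular eye tracker. It assumes image processing has already located the pupil center and the corneal reflection (glint) in the camera image, and it maps the pupil-minus-glint vector to screen coordinates with a second-order polynomial fitted by least squares to calibration points. The function names and the polynomial form are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]

# Illustrative sketch of polynomial PCCR calibration; not a vendor algorithm.


def pccr_vector(pupil_center: Vec, glint: Vec) -> Vec:
    """Vector from the corneal reflection (glint) to the pupil center, in camera pixels."""
    return (pupil_center[0] - glint[0], pupil_center[1] - glint[1])


def _features(v: Vec) -> List[float]:
    """Second-order polynomial terms of the PCCR vector: 1, x, y, xy, x^2, y^2."""
    x, y = v
    return [1.0, x, y, x * y, x * x, y * y]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_calibration(vectors: Sequence[Vec], targets: Sequence[Vec]) -> Tuple[List[float], List[float]]:
    """Least-squares fit of screen x and y (the calibration dots) to the PCCR vectors."""
    rows = [_features(v) for v in vectors]
    k = len(rows[0])
    # Normal equations (A^T A) c = A^T t, solved once per screen axis
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    coeffs = []
    for axis in (0, 1):
        atb = [sum(r[i] * t[axis] for r, t in zip(rows, targets)) for i in range(k)]
        coeffs.append(_solve(ata, atb))
    return coeffs[0], coeffs[1]


def map_gaze(v: Vec, cx: List[float], cy: List[float]) -> Vec:
    """Map a PCCR vector to an estimated on-screen gaze point."""
    f = _features(v)
    return (sum(c * t for c, t in zip(cx, f)), sum(c * t for c, t in zip(cy, f)))
```

At least six calibration points are needed to determine the six coefficients per axis; with more points (for example a 9-point calibration grid), the least-squares fit averages out some of the measurement noise.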

Why infrared spectrum imaging?

The accuracy of eye movement measurement heavily relies on a clear demarcation of the pupil and detection of corneal reflection.

Visible light is likely to generate uncontrolled reflections. Illuminating the eye with infrared light, which the human eye cannot perceive, makes it easy to distinguish the pupil from the iris: the light enters the pupil directly but is reflected by the iris.

This produces a clear, low-noise contrast that the algorithms running inside the eye tracker can follow with ease.

Here’s the bottom line of how it works:

Near-infrared light is directed toward the center of the eyes (the pupils), causing reflections on the cornea (the outermost optical element of the eye) that the camera can detect. The camera then tracks this high-contrast image.

Eye tracking devices

There are three main types of eye trackers:

Screen-based (also called remote or desktop) eye trackers, eye tracking glasses (also called mobile eye trackers), and eye trackers integrated into VR headsets. Webcam-based eye tracking has been seen as an option, but this technology is inherently inferior to infrared-based eye tracking (a topic covered in a separate blog post).

Screen-based

- Records eye movements at a distance (nothing to attach to the respondent)

- Mounted below or placed close to a computer or screen

- Respondent is seated in front of the eye tracker

- Recommended for lab studies of screen-based stimuli such as pictures, videos, and websites, as well as offline stimuli (magazines, physical products, etc.) and other small setups (e.g., small shelf studies)

Glasses

- Records eye activity from a close range

- Mounted onto lightweight eyeglass frames

- Respondent is able to walk around freely

- Recommended for observing objects and task performance in real-life or virtual environments (usability studies, product testing, etc.)


Gaze data accuracy

– How do the trackers compare?

Measurement precision is certainly crucial in eye movement research. The quality of the collected data depends primarily on the tracking accuracy of the device you use. Choosing a low-quality system will prevent you from extracting high-precision data.

A common misconception is that researchers face an inevitable trade-off between measurement accuracy and the amount of movement the respondent can make with their head. The truth is a bit more complex than that.

Screen-based eye trackers:

Require respondents to sit in front of a screen or close to the stimulus being used in the experiment. Although screen-based systems track the eyes only within certain limits, respondents can still move a little, as long as they stay within the eye tracker’s range. This range is called the headbox. The freedom of movement is usually large enough for respondents to feel unrestricted.

Eye tracking glasses:

Are fitted near the eyes and therefore allow respondents to move around as freely as they would like – certainly a plus if your study design requires respondents to be present in various areas (e.g. a large lab setting, or a supermarket).

Does that imply that eye tracking glasses are more susceptible to measurement inaccuracies?

Not at all.

As long as the device is calibrated properly, head-mounted eye trackers are unaffected by head movements and deliver high-precision gaze data, just like screen-based devices. Also, because the eye tracking camera is locked to the head’s coordinate system, overlaying eye movements onto the scene camera video does not suffer from inaccuracies due to head movement.

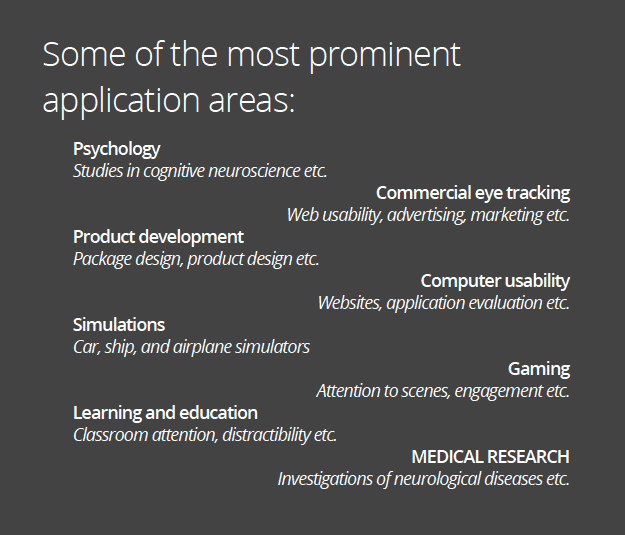

Who uses eye tracking?

– Use cases in research

You may be surprised to discover that eye tracking is not exactly a novelty – it has in fact been around for many years in psychological research. Given the well-established relationship between eye movements and human cognition, it makes intuitive sense to utilize eye tracking as an experimental method to gain insight into the workings of the mind.

If eye tracking is old news, how come it is the latest buzz in human behavior research?

First, let’s rewind a bit.

Studies of eye movements based on simple observation stretch back more than 100 years. In 1901, the first eye tracker was built, but it could only record horizontal eye movements and required a head-mount. In 1905, eye movements could be recorded using “a small white speck of material inserted into the participant’s eyes”. Not exactly the most enjoyable experimental setup.

It’s safe to say that eye tracking has come a long way. With technological advancements, modern eye trackers have become less intrusive, more affordable, and more accessible, and experimental sessions have become increasingly comfortable and easier to set up (long gone are the scary “white specks” and head-mounts).

Currently, eye tracking is being employed by psychologists, neuroscientists, human factors engineers, marketers, designers, architects – you name it, it’s happening. In the following pages, we’ll go through some of the most common application areas for eye tracking, and see how it helps guide new discoveries and insights in each.

1. Neuroscience & psychology thrive on eye tracking

Neuroscience and psychology utilize eye tracking to analyze the sequence of gaze patterns to gain deeper insights into cognitive processes underlying attention, learning, and memory.

How do expectations shape the way we see the world? For example, if you see a picture of a living room, you will have an idea of how the furniture should be arranged. If the scene doesn’t match your expectations, you might be baffled and gaze around the scene as your “scene semantics” (your “rules” of how a living room should look) are violated.

Another research area addresses how we encode and recall faces – where do we look to extract the emotional state of others? Eyes and mouth are the most important cues, but there’s definitely a lot more to it. Eye tracking can also provide insights into the processing of text, particularly how eye movements during reading are affected by the emotional content of the text. In short, eye tracking provides crucial information about how we attend to the world – what we see and how we see it.

2. Eye tracking delivers unmatched value to market research

Why is it that some products make an impression on customers while others just don’t get it right? Eye tracking has become a popular, increasingly vital tool in market research. Many leading brands actively utilize eye tracking to assess customer attention to key messages and advertising as well as to evaluate product performance, product and package design, and overall customer experience.

When applied to in-store testing, eye tracking provides information about the ease and difficulty of in-store navigation, search behavior, and purchase choices.

3. Simulation

There are various ways to investigate human behavior in simulations. One of the most common approaches is to use a driving simulator. Such research often uses eye tracking glasses combined with several other sensors to gain a better understanding of human behavior in hazardous situations.

Where do drivers look when they face obstacles on the street? How does talking on the phone affect driving behavior? How exactly does speeding compromise visual attention? Insights of this kind can help improve hazard awareness and be applied to increase future driver safety. Automotive research has long used eye tracking glasses to assess drivers’ visual attention, both with respect to navigation and dashboard layout. In the near future, automobiles might even be able to respond to drivers’ eye gaze, eye movements, or pupil dilation.

4. Eye tracking can help gain deep insights with Human Computer Interaction (HCI)

So what is Human Computer Interaction (HCI)? Essentially, HCI research is concerned with how computers are designed and how people use them. From laptops and tablets to smartphones and beyond, the use of technology can be evaluated by measuring our visual attention to the devices we use.

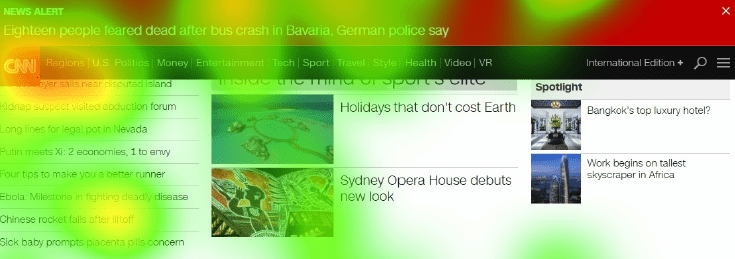

5. Website testing

A rapidly growing field that utilizes eye tracking as a methodology for assessment is usability and user experience testing. Eye tracking for website testing is a widely used approach, giving insights into how websites are viewed and experienced. How do people attend to adverts, communication, and calls to action (CTAs)?

If you’re losing out on revenue, eye tracking data can deliver valuable insights into the gaze patterns of your website visitors – how long does it take them to find a specific product on your site, what kind of visual information do they ignore (but are supposed to see)?

Cut to the chase and see exactly what goes wrong. The very same investigations can even be applied to mobile apps on tablets and smartphones.

6. Learning & education can benefit from eye tracking

What if learning could be an equally satisfying experience for all of us? What exactly does it take to make learning a success? In recent years, eye tracking technology has impressively made its way into educational science to help gain insights into learning behavior in diverse settings ranging from traditional “chalk and talk” teaching approaches to digital learning.

Analyzing visual attention of students during classroom education, for example, delivers valuable information in regard to which elements catch and hold interest, and which are distracting or go unseen.

Do students read or do they scan slides? Do they focus on the teacher or concentrate on their notes? Does their gaze move around in the classroom? Eye tracking findings like these can be effectively used to enhance instructional design and materials for an improved learning experience in the classroom and beyond.

7. Eye tracking is used in medical research to study a wide variety of neurological and psychiatric conditions

Eye tracking in combination with conventional research methods or other biosensors can help assess and potentially diagnose conditions such as Attention Deficit Hyperactivity Disorder (ADHD), Autism Spectrum Disorder (ASD), Obsessive Compulsive Disorder (OCD), schizophrenia, Parkinson’s disease, and Alzheimer’s disease.

Additionally, eye tracking technology can be used to detect drowsiness, and it supports a range of other medical, quality assurance, and monitoring applications.

8. Gaming and UX – why is eye tracking the big hit among web designers and developers?

Eye tracking has recently been introduced into the gaming industry and has since become an increasingly prominent tool, as designers are now able to assess and quantify measures such as visual attention and reactions to key moments during gameplay to improve the overall gaming experience.

When eye tracking is combined with other biometric sensors, designers can use the data to measure emotional and cognitive responses to gaming. New trends and developments may soon make it possible to control the game based on pupil dilation and eye movements.

Eye tracking data

– Understanding the results

Eye tracking makes it possible to quantify visual attention like no other metric, as it objectively monitors where, when, and what people look at.

Eye tracking metrics

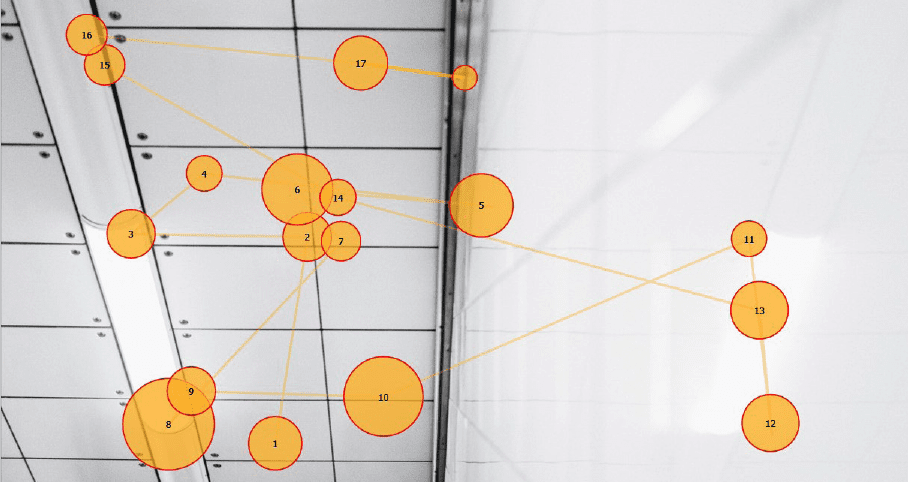

Fixation and Gaze points

Without a doubt, the terms fixation and gaze points are the most prominent metrics in eye tracking literature.

Gaze points constitute the basic unit of measure – one gaze point equals one raw sample captured by the eye tracker. The math is easy: If the eye tracker measures 60 times a second, then each gaze point represents a sixtieth of a second (or 16.67 milliseconds).

If a series of gaze points is close in both time and space, the resulting gaze cluster denotes a fixation, a period in which our eyes are locked on a specific object. Fixations typically last 100–300 milliseconds.

The eye movements between fixations are known as saccades. What are they exactly? Take reading a book, for example. While reading, your eyes don’t move smoothly across the line, even if you experience it that way. Instead, your eyes jump and pause: each pause is a fixation, and the rapid jumps between them are saccades.
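The grouping of gaze points into fixations, with the leftover fast movements treated as saccades, can be sketched with a dispersion-threshold algorithm (often called I-DT), one of several standard approaches. In this minimal Python sketch, the 1° maximum dispersion and 100 ms minimum duration are assumed example thresholds, gaze points are assumed to be equally spaced in time and already expressed in degrees of visual angle, and the names are hypothetical; eye tracking software may use different algorithms and parameters.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # assumed: gaze position in degrees of visual angle


@dataclass
class Fixation:
    start_ms: float
    duration_ms: float
    x: float  # centroid of the gaze points in the fixation
    y: float


def _dispersion(points: Sequence[Point]) -> float:
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations_idt(gaze: Sequence[Point], rate_hz: float,
                         max_dispersion_deg: float = 1.0,
                         min_duration_ms: float = 100.0) -> List[Fixation]:
    """Dispersion-threshold (I-DT) fixation detection; thresholds are example values."""
    interval_ms = 1000.0 / rate_hz  # one gaze point per interval: 60 Hz -> 16.67 ms
    window = max(1, round(min_duration_ms / interval_ms))
    fixations: List[Fixation] = []
    i, n = 0, len(gaze)
    while i + window <= n:
        j = i + window
        if _dispersion(gaze[i:j]) <= max_dispersion_deg:
            # Grow the window while the gaze points stay tightly clustered
            while j < n and _dispersion(gaze[i:j + 1]) <= max_dispersion_deg:
                j += 1
            pts = gaze[i:j]
            fixations.append(Fixation(
                start_ms=i * interval_ms,
                duration_ms=(j - i) * interval_ms,
                x=sum(p[0] for p in pts) / len(pts),
                y=sum(p[1] for p in pts) / len(pts),
            ))
            i = j
        else:
            i += 1  # this gaze point is not part of a fixation (e.g. mid-saccade)
    return fixations
```

For example, at 60 Hz, twelve clustered gaze points followed by a quick jump and another twelve clustered points yield two fixations of about 200 ms each, with the jump between them left unassigned as the saccade.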

Perceptual span and smooth pursuit

Reading involves both saccades and fixations, with each fixation involving a perceptual span. This refers to the number of characters we can recognize during a single fixation, between saccades. It is usually 17–19 letters, depending on the text. Experienced readers have a larger perceptual span than early readers and can therefore read faster.

Imagine watching clouds in the sky as you pass your time waiting at the bus stop. Now that you know about saccades, you might expect your eye movements in this scenario to behave in the same way, but the rules are a bit different for moving objects. Unlike reading, locking your eyes onto a moving object doesn’t generate saccades but a smooth pursuit trajectory. This works as you might expect: the eyes smoothly track the object. Smooth pursuit operates up to about 30°/s; at higher speeds, saccades are used to catch up to the object.
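Because smooth pursuit and saccades differ mainly in eye velocity, one simple way to tell them apart in recorded data is to compute the angular velocity between consecutive gaze points and compare it with a threshold, as velocity-threshold classifiers (often called I-VT) do. In this minimal sketch, the 30°/s default simply mirrors the pursuit limit mentioned above, gaze points are assumed to be in degrees of visual angle, and the two labels are a simplification: real data also needs noise filtering, and telling fixations apart from pursuit requires additional criteria.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # assumed: gaze position in degrees of visual angle


def angular_velocities(gaze: Sequence[Point], rate_hz: float) -> List[float]:
    """Point-to-point angular velocity in degrees per second."""
    dt = 1.0 / rate_hz
    return [math.hypot(x1 - x0, y1 - y0) / dt
            for (x0, y0), (x1, y1) in zip(gaze, gaze[1:])]


def classify_movement(gaze: Sequence[Point], rate_hz: float,
                      threshold_deg_s: float = 30.0) -> List[str]:
    """Label each step between gaze points as 'saccade' (fast) or 'slow'
    (fixation or smooth pursuit). The threshold is an assumed example value."""
    return ["saccade" if v > threshold_deg_s else "slow"
            for v in angular_velocities(gaze, rate_hz)]
```

A cloud drifting at 20°/s, sampled at 100 Hz, moves the gaze about 0.2° per sample and stays "slow"; a 3° catch-up jump within one sample (300°/s) is labeled "saccade".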

As fixations and saccades are excellent measures of visual attention and interest, they are most commonly used to fuel discoveries with eye tracking data.

Now let’s get practical and have a look at the most common metrics used in eye tracking research (that are based on fixations and gaze points) and what you can make of them.

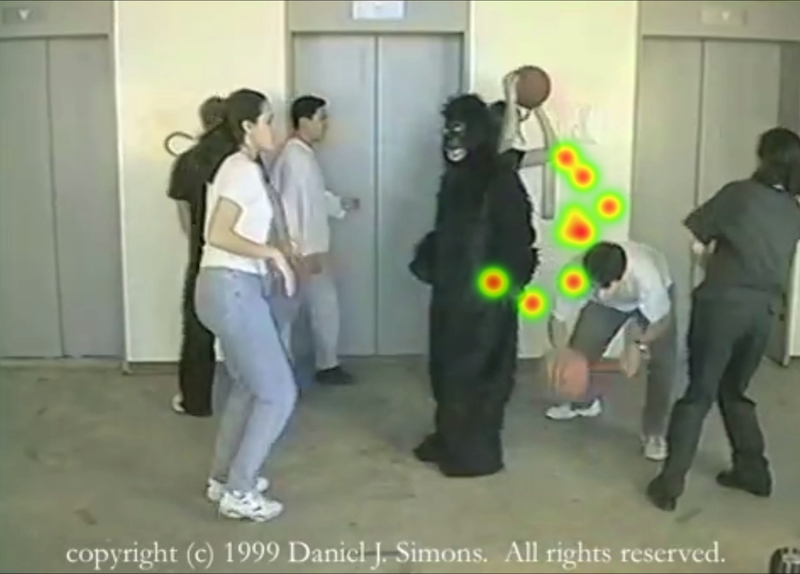

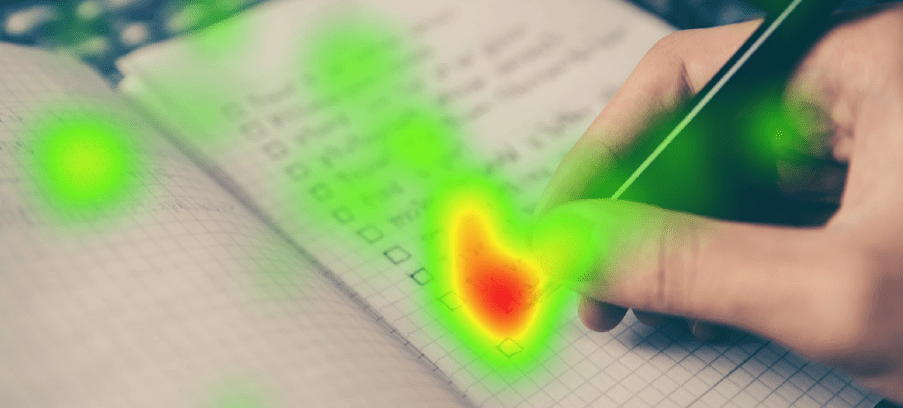

Heat maps

Heat maps are static or dynamic aggregations of gaze points and fixations that reveal the distribution of visual attention. Following an easy-to-read color-coded scheme, heat maps are an excellent way to visualize which elements of the stimulus drew attention: red areas indicate a high number of gaze points (and therefore an increased level of interest), while yellow and green areas show fewer gaze points (and therefore a less engaged visual system). Areas without coloring were likely not attended to at all.
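The computation behind a static heat map can be sketched in a few lines: each gaze point adds a Gaussian "blob" to a grid covering the stimulus, and the grid is normalized so that the hottest cell is 1.0. A plotting tool would then map high values to red, middle values to yellow and green, and leave cells below some cut-off uncolored. This is a minimal sketch; the cell size, the kernel width (`sigma_px`), and the unweighted kernel are assumptions, and real tools may, for example, weight fixations by duration.

```python
import math
from typing import List, Sequence, Tuple


def gaze_heatmap(points: Sequence[Tuple[float, float]], width: int, height: int,
                 cell_px: int = 10, sigma_px: float = 30.0) -> List[List[float]]:
    """Accumulate one Gaussian kernel per gaze point on a grid, normalized to a peak of 1.0.

    points are gaze positions in stimulus pixels; cell_px and sigma_px are example values.
    """
    cols = math.ceil(width / cell_px)
    rows = math.ceil(height / cell_px)
    grid = [[0.0] * cols for _ in range(rows)]
    two_sigma_sq = 2.0 * sigma_px ** 2
    for px, py in points:
        for r in range(rows):
            cy = (r + 0.5) * cell_px  # cell center, in stimulus pixels
            for c in range(cols):
                cx = (c + 0.5) * cell_px
                grid[r][c] += math.exp(-((cx - px) ** 2 + (cy - py) ** 2) / two_sigma_sq)
    peak = max(max(row) for row in grid)
    if peak > 0:
        grid = [[v / peak for v in row] for row in grid]
    return grid
```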

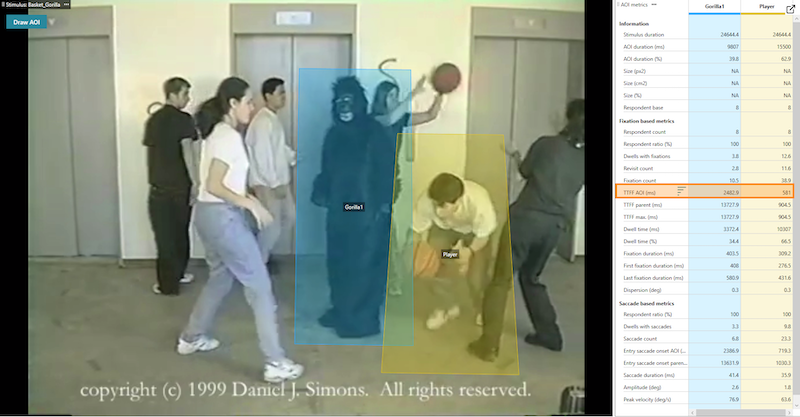

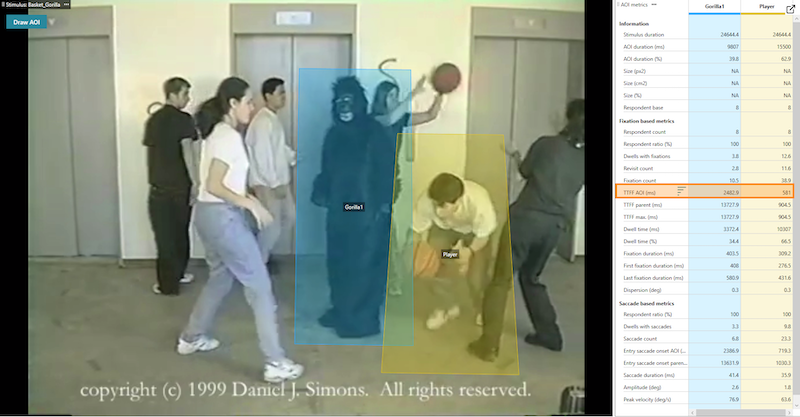

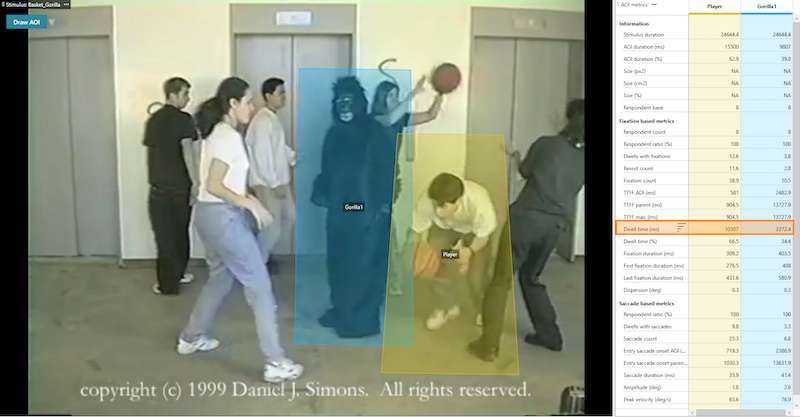

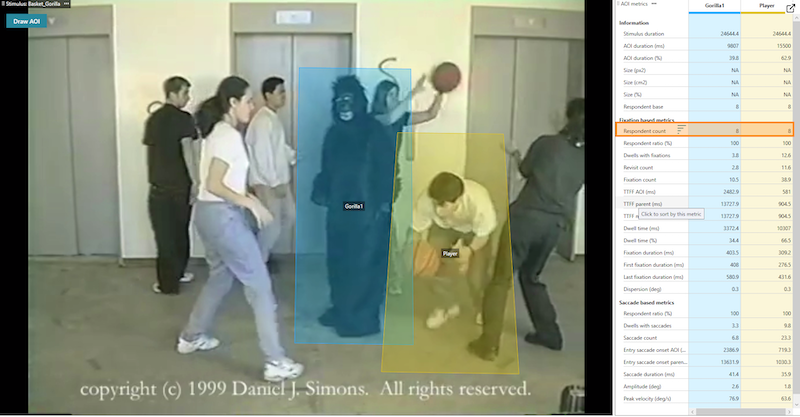

Areas of Interest (AOI)

Areas of Interest, also referred to as AOIs, are user-defined subregions of a displayed stimulus. Extracting metrics for separate AOIs comes in handy when evaluating the performance of two or more specific areas in the same video, picture, website, or program interface. This can be done to compare groups of participants, conditions, or different features within the same scene.
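In code, an AOI is often just a named region, and each fixation is assigned to an AOI with a point-in-region test. This minimal sketch assumes rectangular AOIs in screen pixels and hypothetical names (`AOI`, `aoi_of`); real tools also support polygons and AOIs that move with video content.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class AOI:
    """Hypothetical rectangular AOI in screen pixels (y grows downward)."""
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def aoi_of(x: float, y: float, aois: Sequence[AOI]) -> Optional[str]:
    """Name of the AOI containing (x, y); the first match wins if AOIs overlap."""
    for aoi in aois:
        if aoi.contains(x, y):
            return aoi.name
    return None  # fixation falls outside every AOI
```

Once every fixation carries an AOI name (or `None`), metrics such as time to first fixation, time spent, and respondent count reduce to simple filtering and counting.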

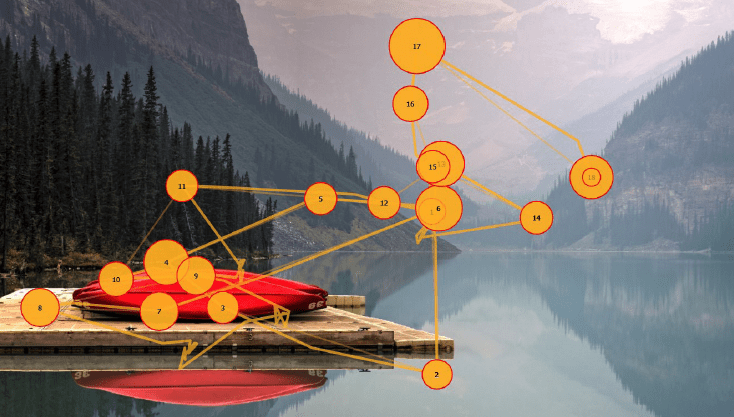

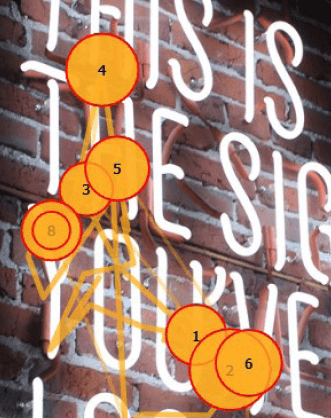

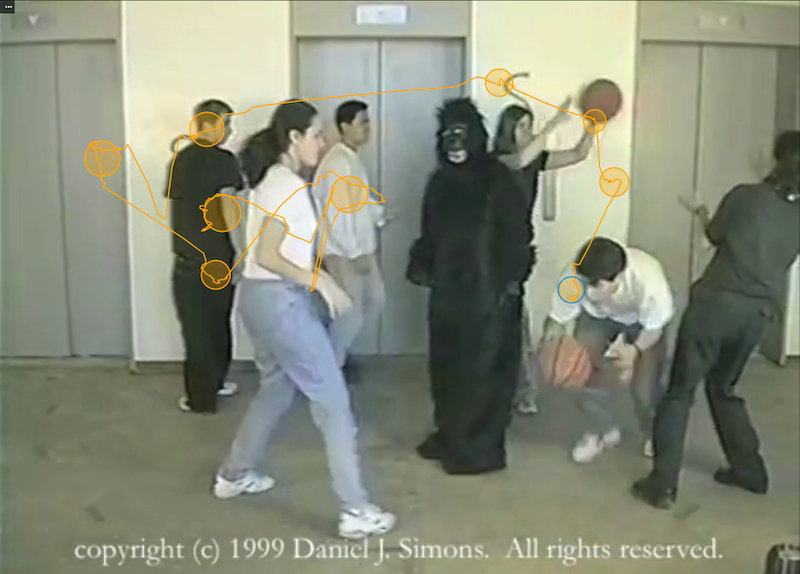

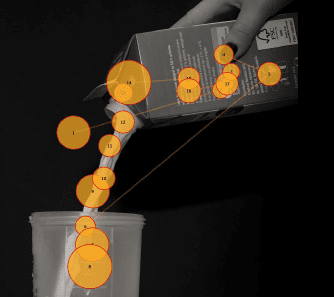

Fixation sequences

Based on fixation position (where?) and timing information (when?) you can generate a fixation sequence. This is dependent on where respondents look and how much time they spend, and provides insight into the order of attention, telling you where respondents looked first, second, third etc. This is a commonly used metric in eye tracking research as it reflects salient elements (elements that stand out in terms of brightness, hue, saturation etc.) in the display or environment that are likely to catch attention.
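A fixation sequence at the AOI level can be sketched as follows: fixations are sorted by start time, and consecutive fixations on the same AOI are merged into a single visit, so the result reads "looked at A first, then B, then C". The input format (start time plus AOI name, or `None` for fixations outside every AOI) and the rule that a fixation outside all AOIs ends the current visit are assumptions for illustration.

```python
from typing import List, Optional, Sequence, Tuple


def aoi_sequence(fixations: Sequence[Tuple[float, Optional[str]]]) -> List[str]:
    """Order in which AOIs were visited.

    fixations: (start_ms, aoi_name or None). Consecutive fixations on the same
    AOI count as one visit; a fixation outside every AOI ends the visit.
    """
    sequence: List[str] = []
    previous: Optional[str] = None
    for _, aoi in sorted(fixations, key=lambda f: f[0]):
        if aoi is not None and aoi != previous:
            sequence.append(aoi)
        previous = aoi
    return sequence
```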

Time to First Fixation (TTFF)

The time to first fixation indicates the amount of time it takes a respondent to look at a specific AOI from stimulus onset. TTFF can indicate both bottom-up stimulus driven searches (a flashy company label catching immediate attention, for example) as well as top-down attention driven searches (respondents actively decide to search for certain elements or areas on a website, for example). TTFF is a basic yet very valuable metric in eye tracking.
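Computing TTFF is straightforward once fixations have been assigned to AOIs. A minimal sketch, assuming each fixation is given as (start time in ms, AOI name or `None`) on the same clock as the stimulus onset, and that fixations starting before onset are ignored:

```python
from typing import Optional, Sequence, Tuple


def time_to_first_fixation(fixations: Sequence[Tuple[float, Optional[str]]],
                           aoi: str, stimulus_onset_ms: float) -> Optional[float]:
    """Milliseconds from stimulus onset to the first fixation that lands in `aoi`.

    fixations: (start_ms, aoi_name or None). Returns None if the AOI was never fixated.
    """
    starts = [start for start, name in fixations
              if name == aoi and start >= stimulus_onset_ms]
    return min(starts) - stimulus_onset_ms if starts else None
```

Returning `None` rather than, say, zero keeps "never looked at it" clearly separate from "looked at it immediately".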

Time spent

Time spent quantifies the amount of time that respondents spent looking at an AOI. As respondents have to filter out other stimuli in the visual periphery that could be equally interesting, the amount of time spent often indicates motivation and conscious attention: prolonged visual attention at a certain region points to a high level of interest, while shorter times indicate that other areas on screen or in the environment might be more eye-catching.
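A minimal sketch of time spent per AOI, assuming it is computed as the sum of fixation durations inside each AOI; some tools instead count all gaze samples within the AOI, so the exact definition is an assumption here.

```python
from collections import defaultdict
from typing import Dict, Optional, Sequence, Tuple


def time_spent(fixations: Sequence[Tuple[float, Optional[str]]]) -> Dict[str, float]:
    """Total fixation time per AOI in ms.

    fixations: (duration_ms, aoi_name or None); fixations outside every AOI are skipped.
    """
    totals: Dict[str, float] = defaultdict(float)
    for duration, aoi in fixations:
        if aoi is not None:
            totals[aoi] += duration
    return dict(totals)
```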

Respondent count

The respondent count describes how many of your respondents actually guided their gaze towards a specific AOI. A higher count shows that the stimulus is widely attended to, while a low count shows that little attention is paid to it.
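Respondent count only asks whether each respondent fixated an AOI at least once. A minimal sketch, assuming the data is a hypothetical mapping from respondent ID to the AOI names of that respondent’s fixations:

```python
from typing import Iterable, Mapping, Optional


def respondent_count(fixated_aois: Mapping[str, Iterable[Optional[str]]], aoi: str) -> int:
    """Number of respondents with at least one fixation on `aoi`.

    fixated_aois maps a respondent ID to the AOI name of each of their fixations
    (None for fixations outside every AOI).
    """
    return sum(1 for names in fixated_aois.values() if aoi in set(names))
```

Dividing the result by the number of respondents gives the share of the sample that noticed the AOI, which is often easier to compare across studies of different sizes.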

Advanced eye tracking metrics

– More than meets the eye

With the core tools at hand, you’re perfectly equipped to track the basics. You can now find out where, when and what people look at, and even what they fail to see. So far, so good.

Now how about pushing your insights a bit further and stepping beyond the basics of eye tracking?

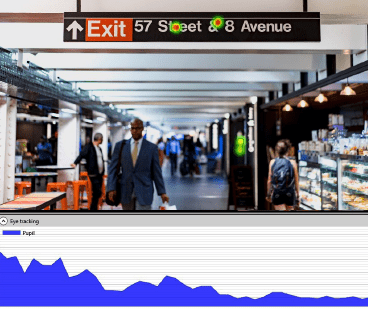

Pupil size / dilation

An increase in pupil size is referred to as pupil dilation, and a decrease in size is called pupil constriction.

Pupil size primarily responds to changes in light, whether from ambient lighting or from the stimulus material itself (e.g., a video stimulus). However, if the experiment can account for light, other attributes can be derived from changes in pupil size. Two common properties are emotional arousal (the amount of emotional engagement) and cognitive workload (how mentally taxing a stimulus is).

In most cases, pupillary responses are used as a measure of emotional arousal. However, be careful with overreaching conclusions, as pupillary responses alone don’t indicate whether arousal arises from a positive (“yay!”) or negative (“nay!”) stimulus.
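Because pupil size is dominated by light, pupillometry analyses commonly express dilation relative to a short pre-stimulus baseline. This minimal sketch applies subtractive baseline correction; the 500 ms baseline window, diameters in millimeters, and the use of `None` for samples lost (e.g. to blinks) are assumptions for illustration, and consistent lighting is still a prerequisite.

```python
from statistics import mean
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, Optional[float]]  # assumed: (time_ms, pupil diameter in mm or None)


def baseline_correct(samples: Sequence[Sample], onset_ms: float,
                     baseline_ms: float = 500.0) -> List[Sample]:
    """Subtractive baseline correction: post-onset diameter minus mean pre-onset diameter."""
    baseline = [d for t, d in samples
                if onset_ms - baseline_ms <= t < onset_ms and d is not None]
    if not baseline:
        raise ValueError("no valid pupil samples in the baseline window")
    b = mean(baseline)
    return [(t, None if d is None else d - b) for t, d in samples if t >= onset_ms]
```

A positive corrected value means the pupil dilated relative to baseline; as noted above, it says nothing about whether the stimulus was experienced as positive or negative.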

Distance to the screen

Along with pupil size, eye trackers also measure the distance to the screen and the relative position of the respondent. Leaning forwards or backwards in front of a remote device is tracked directly and can reflect approach-avoidance behavior. However, keep in mind that interpreting the data is always very specific to the application.

Ocular Vergence

Most eye trackers measure the positions of the left and right eyes independently. This allows the extraction of vergence, i.e., whether the left and right eyes rotate toward or away from each other. This is a natural consequence of focusing near and far. Divergence often happens when our mind drifts away or we lose focus or concentration. It can be picked up instantly by measuring the inter-pupil distance.
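The link between vergence and focusing distance is simple geometry: for a target straight ahead at distance d, with an inter-pupillary distance IPD, the vergence angle is 2·arctan(IPD / 2d), so the angle shrinks as focus moves farther away. A minimal sketch, assuming symmetric convergence on a target straight ahead; the 63 mm IPD used in the usage line is only an example value.

```python
import math


def vergence_angle_deg(ipd_mm: float, distance_mm: float) -> float:
    """Vergence angle for a target straight ahead (symmetric convergence)."""
    return math.degrees(2 * math.atan((ipd_mm / 2) / distance_mm))


def fixation_distance_mm(ipd_mm: float, vergence_deg: float) -> float:
    """Inverse: estimated distance of the point on which the eyes converge."""
    return (ipd_mm / 2) / math.tan(math.radians(vergence_deg) / 2)


# Example: a screen at 60 cm versus a point 3 m away, with an assumed 63 mm IPD
near, far = vergence_angle_deg(63.0, 600.0), vergence_angle_deg(63.0, 3000.0)
```

A vergence angle that drops while the respondent is supposed to be reading the screen suggests the eyes are diverging toward "infinity", the pattern described above for mind wandering.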

Blinks

Eye tracking can also provide essential information on cognitive workload by monitoring blinks. Cognitively demanding tasks can be associated with delayed or suppressed blinking. However, many other insights can be derived from blinks. A very low blink frequency, for example, is usually associated with higher levels of concentration, while a rather high frequency is indicative of drowsiness and lower levels of focus and concentration.
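Many eye trackers report missing pupil data while the eyelid is closed, so blinks are often estimated from gaps in the signal. This minimal sketch counts runs of missing samples whose duration falls within an assumed blink range (100–400 ms here; shorter gaps are treated as dropouts and longer ones as tracking loss) and converts the count to blinks per minute. The thresholds and the use of `None` for missing samples are assumptions; vendors may detect blinks differently.

```python
from typing import List, Optional, Sequence, Tuple


def detect_blinks(pupil: Sequence[Optional[float]], rate_hz: float,
                  min_ms: float = 100.0, max_ms: float = 400.0) -> List[Tuple[int, int]]:
    """(start_index, end_index_exclusive) of missing-data runs with blink-like duration."""
    interval_ms = 1000.0 / rate_hz
    blinks: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, value in enumerate(list(pupil) + [0.0]):  # sentinel closes a trailing gap
        if value is None and start is None:
            start = i
        elif value is not None and start is not None:
            duration = (i - start) * interval_ms
            if min_ms <= duration <= max_ms:
                blinks.append((start, i))
            start = None
    return blinks


def blink_rate_per_min(pupil: Sequence[Optional[float]], rate_hz: float) -> float:
    """Detected blinks per minute of recording."""
    minutes = len(pupil) / rate_hz / 60.0
    return len(detect_blinks(pupil, rate_hz)) / minutes
```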

The bottom line on eye tracking

Objective answers with eye tracking

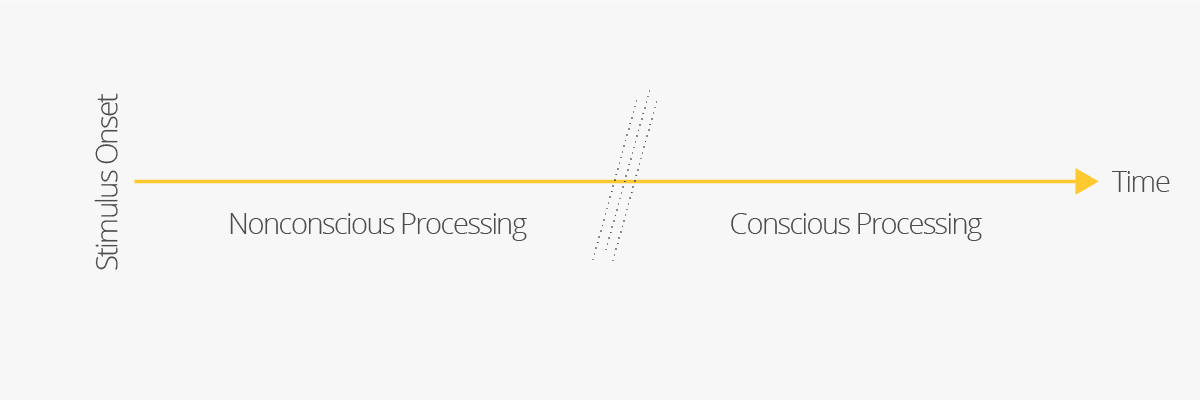

To date, eye tracking is the only method in human behavior research that makes it possible to objectively measure and quantify eye movements. With eye tracking, you can tap into nonconscious mental processing. Eye tracking can be used to assess which elements in your product design or advertisement catch attention, and it lets you gain insights into your respondents’ individual preferences by observing which elements they dwell on over time.

The limitations of eye tracking

Eye motion is tightly linked to visual attention. As a matter of fact, you can’t move your eyes without moving attention. You can, however, certainly shift attention without moving your eyes.

While eye tracking can tell us what people look at and what they see, it can’t tell us what people perceive.

Think of this classic example: You open the fridge in search of a milk carton. While it is right in front of you, you can’t seem to find it. You keep looking until you close the door empty-handed. You have to take your coffee black that day.

What exactly happened in this scenario? Even though you saw the carton (and this is what eye tracking data would tell us), you were probably not paying sufficient attention to actually perceive that it was right in front of your eyes. You simply overlooked the carton, even though the eye tracking data would suggest you had seen it.

Why is it important to keep this aspect in mind when analyzing eye tracking data?

Take a moment and transfer the milk carton problem to website testing, for example. Imagine your carefully designed call to action just doesn’t perform as hoped. While eye tracking can certainly reveal whether your website visitors actively guide their gaze toward the call to action (let’s assume they do), it won’t tell you whether they really perceive the flashy button that you want them to click.

The figure below illustrates how nonconscious processing of a stimulus occurs before our conscious processing kicks in. We can miss the milk carton in the fridge because we don’t spend long enough attending to it to become consciously aware of it.

As an objective measure, eye tracking indicates:

- which elements attract immediate attention

- which elements attract above-average attention

- if some elements are being ignored or overlooked

- in which order the elements are noticed

So what’s the bottom line?

Eye tracking gives incredible insights into where we direct our eye movements at a certain time and how those movements are modulated by visual attention and stimulus features (size, brightness, color, and location).

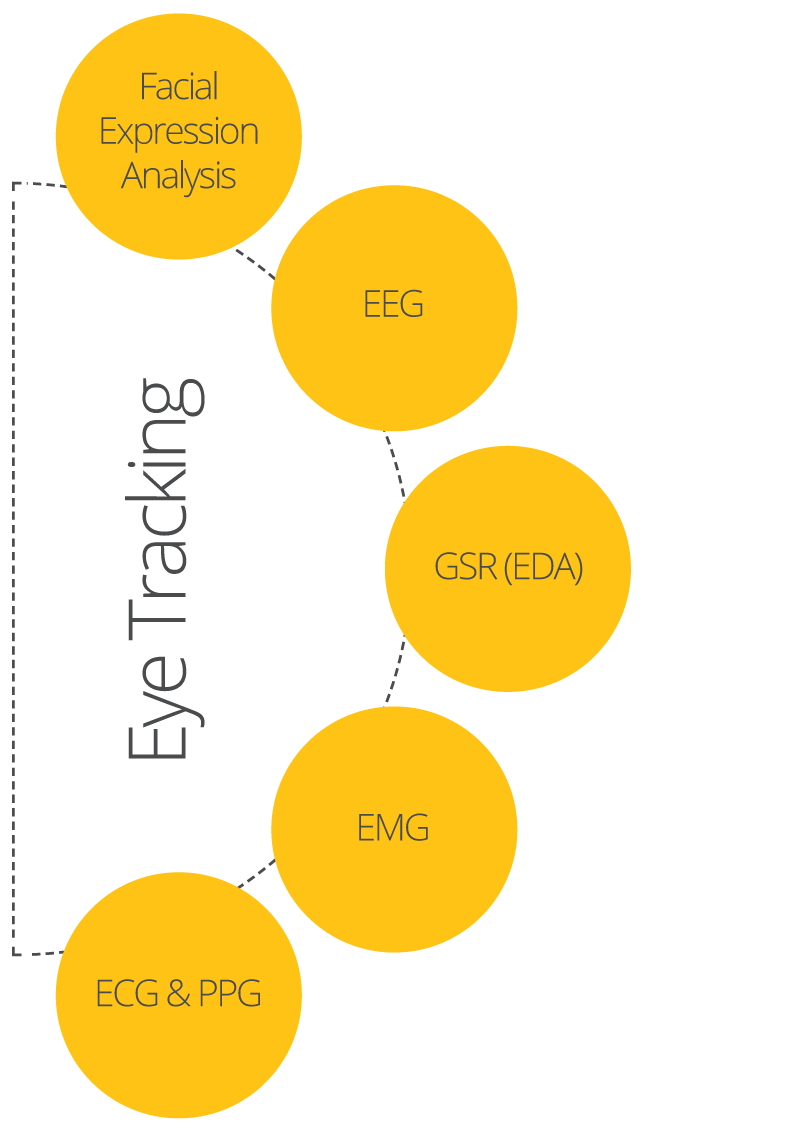

However, tracking gaze positions alone doesn’t tell us anything particular about the cognitive processes and the emotional states that drive eye movements. In these cases, eye tracking needs to be complemented by other biosensors to capture the full picture of human behavior in that very moment.

Let’s find out how.

Get the most from eye tracking

– Next level insights

Why combine eye tracking with other biosensors?

Take the milk carton situation. Although you looked straight at the carton, you didn’t realize it was standing right in front of you. Based on eye tracking alone we would have argued that, as your gaze was directed towards the item, you must have seen it.

The most intuitive way to validate our assumption might be to just ask you – “did you see the milk carton?”. Primarily due to the relatively low costs, surveys are in fact a very common research tool to consolidate gaze data with self-reports of personal feelings, thoughts, and attitudes.

Of course, people might not be aware of what they are really thinking – they weren’t consciously aware of the milk carton after all, and this extends to other stimuli too.

The power of self-reports is also limited when it comes to the disclosure of sensitive personal information (alcohol, drugs, sexual behavior etc.).

Keep in mind that when working with self-reports, any delay between action and recollection introduces artifacts – asking immediately after closing the fridge might yield a different response (“nope, I didn’t see it!”) compared to one week later (“…um, I don’t remember!”).

Take your study from ordinary to extraordinary

We could have used webcam-based facial expression analysis to monitor your emotional valence while staring into the fridge, for example. A confused expression while scanning the items in the fridge followed by a sad expression would have told us how you were feeling when realizing that the milk was missing.

On the other hand, a slight smile would tell a different story. Furthermore, we could have quantified your level of emotional arousal and stress levels based on the changes in skin conductance (measured as galvanic skin response) or your heart rate (as measured by electrocardiography).

On top of this, we could have used electroencephalography (EEG) to capture your cognitive and motivational state, as it is the ideal tool to identify fluctuations in workload (“have I looked everywhere?”), engagement (“I have to find this carton!”), or even drowsiness levels.

Of course, this example is a simplification of the interpretation of physiological data. In most research scenarios you will have to consider and control for many more factors that might have a significant impact on what you are investigating.

Each biosensor can reveal a specific aspect of human cognition, emotion, and behavior. Depending on your individual research question, consider combining eye tracking with two or more additional biosensors in order to gain meaningful insights into the dynamics of attention, emotion, and motivation.

What’s the gain?

The true power of eye tracking unfolds as it is combined with other sources of data to measure complex dependent variables.

These 5 biosensors are a perfect complement to eye tracking. Which metrics can be extracted from the different systems?

Have a look.

Facial Expression Analysis

Facial expression analysis is a non-intrusive method to assess emotional reactions throughout time. While facial expressions can measure the presence of an emotion (valence), they can’t measure the intensity of that emotion (arousal).

EEG

Electroencephalography is a technique that measures the electrical activity of the brain at the scalp. EEG provides information about brain activity during task performance or stimulus exposure. It allows for the analysis of brain dynamics that provide information about levels of engagement (arousal), motivation, frustration, and cognitive workload. Other metrics associated with stimulus processing, action preparation, and execution can also be measured.

GSR (EDA)

Galvanic skin response (or electrodermal activity) monitors the electrical activity across the skin generated by physiological or emotional arousal. Skin conductance offers insights into the respondents’ nonconscious arousal when being confronted with emotionally loaded stimulus material.

EMG

Electromyographic sensors monitor the electric activity of muscles. You can use EMG to monitor muscular responses to any type of stimulus material to extract even subtle activation patterns associated with emotional expressions (facial EMG) or consciously controlled hand/finger movements.

ECG & PPG

Electrocardiography (ECG) and photoplethysmography (PPG) allow for recording of the heart rate or pulse. You can use this data to obtain insights into respondents’ physical state, anxiety and stress levels (arousal), and how changes in physiological state relate to their actions and decisions.

Best practices at a glance

- Have optimal environment and lighting conditions

- Work with a dual screen configuration

- Clean your computer

- Make sure to properly train all staff involved

- Use protocols

- Simplify your lab setup

Best practices in eye tracking

– Perfect research every time

Failures or complications in studies most often occur due to small mistakes that could easily have been avoided. Often this happens simply because researchers and staff didn’t know the basics needed to avoid running into issues.

Conducting an eye tracking study involves juggling a lot of moving parts.

A complex experimental design, new respondents, different technologies, different hardware pieces, different operators.

It can of course be quite challenging at times. We’ve all been there.

But don’t worry, we’ve got your back. The following are our top 6 tips for a smooth lab experience in eye tracking research.

1. Environment and lighting conditions

Have a dedicated space for running your study. Find an isolated room that is not used by others so you can keep your experimental setup as consistent for each participant as possible. Make sure to place all system components on a table that doesn’t wobble or shift. For eye tracking, lighting conditions are essential. Avoid direct sunlight coming through windows (close the blinds!) as sunlight contains infrared light that can affect the quality of the eye tracking measurements.

Avoid brightly lit rooms (no overhead light); ideally, use ambient light. It’s particularly important to keep lighting levels consistent when measuring pupil dilation (pupillometry). This applies both to the luminance of the stimulus and to the brightness of the room.
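If you want a quick check that your stimuli are reasonably matched in brightness before a pupillometry study, the sketch below computes the mean relative luminance of each image from its 8-bit sRGB pixel values (standard sRGB linearization with ITU-R BT.709 weights) and flags images that deviate from the median of the set. This sketch rests on assumptions: it works in image space, not with the physical luminance your monitor actually emits (measuring that requires a photometer), and the function names, the hypothetical pixel lists, and the 0.05 tolerance are illustrative choices.

```python
from statistics import mean, median


def srgb_to_linear(c8):
    """Convert an 8-bit sRGB channel value (0-255) to linear light (0-1)."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def mean_relative_luminance(pixels):
    """Mean relative luminance (0-1) of an iterable of (R, G, B) 8-bit pixels."""
    return mean(
        0.2126 * srgb_to_linear(r) + 0.7152 * srgb_to_linear(g) + 0.0722 * srgb_to_linear(b)
        for r, g, b in pixels
    )


def flag_luminance_outliers(stimuli, tolerance=0.05):
    """Names of stimuli whose mean luminance differs from the set's median by more than tolerance."""
    lum = {name: mean_relative_luminance(px) for name, px in stimuli.items()}
    ref = median(lum.values())
    return sorted(name for name, value in lum.items() if abs(value - ref) > tolerance)


# Hypothetical pixel lists standing in for decoded stimulus images.
stimuli = {
    "grey_a": [(128, 128, 128)] * 4,
    "grey_b": [(130, 130, 130)] * 4,
    "white": [(255, 255, 255)] * 4,
}
print(flag_luminance_outliers(stimuli))  # ['white']
```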

Be aware that long experiments might cause dry eyes, resulting in drift. Try to keep noise from the surrounding environment (rooms, corridors, streets) to a minimum, as it could distract the respondent and affect measurement validity.

2. Work with a dual screen configuration

Work with a dual screen configuration – one screen for the respondent for stimulus presentation (which ideally remains black until stimulus material appears) and one screen for the operator (which the respondent should not be able to see) to control the experiment and monitor data acquisition. A dual screen setup allows you to detect any issues with the equipment during the experiment, without interfering with the respondent’s experience.

3. Clean your computer before getting started

Remove anything you don’t need from your computer, and disable anything that could interfere with the experiment. For example, turn off your antivirus software so that it doesn’t pop up or use CPU resources during the experiment. If the experiment doesn’t require an internet connection, disconnect the computer during data collection to avoid potential interruptions. It can also be a good idea to disable your screen saver.

4. Ensure all people involved are properly trained

It is essential that everyone involved in data collection is trained on the systems used, so that they know enough to run a study smoothly. Training is important for every position in the lab. Training people before testing begins also allows you to prepare for potential mishaps or missteps during the experiment.

5. Always use protocols

Always have protocols! It is essential to have all instructions and documentation associated with setting up and/or running a study in the lab readily available in written form.

Try to have templates for every step of the research process. Literally. Don’t underestimate the importance of documentation in institutions such as a university.

It’s common practice for research assistants to switch labs after a certain amount of time. Protocols are true lifesavers here because they keep track of everything from management to study execution, making sure that new lab members can jump right in and perform in line with lab routines.

6. Simplify your technology setup

Chances are that you need a couple of different biosensors to run your study.

To make sure that they interact well and are compatible with each other, use as few vendors as possible for both hardware and software. In the ideal setup, everything would be integrated into a single software platform.

Having to switch between different operating systems or different computers can cause difficulties. Keep in mind that it’s easier to train lab members on a single software platform than on several.

The equation is simple: Having a single software platform decreases the amount of training needed, simplifies the setup and reduces the risk of human error.

Also, in case of problems and support issues it is more convenient to deal with one vendor and have a direct contact person than to be shuffled around between vendors because no single point of contact is responsible.

Choosing the right equipment

– Hardware suited to your needs

At this point you might think that all eye tracking systems are pretty much the same. Their only job is to track where people are looking, so how much can they vary? Actually, quite a lot.

The use of eye tracking keeps growing, and to keep pace with demand, new systems are continually released. Amid all the manufacturer specifications, it can be difficult to stay current on the available options and evaluate which eye tracker best suits your needs.

Which eye tracker is right for you?

Begin with the obvious:

1) Will your respondents be seated in front of a computer during the session? Go for a screen-based eye tracker. Do your respondents need to move freely in a natural setting? Choose a head-mounted system that allows for head and body mobility.

2) We have covered this already, but here it is again: even though it’s a cheaper solution, it’s best to avoid webcam-based eye trackers. Yes, we know the temptation of a good bargain. However, when it comes to eye trackers, it’s absolutely worth spending a bit of extra money if you’re aiming for worthwhile measurement accuracy.

3) Make sure the eye tracker you purchase meets the specifications required to answer your research questions. Have a look at the key specifications below that can help you find a suitable eye tracker.

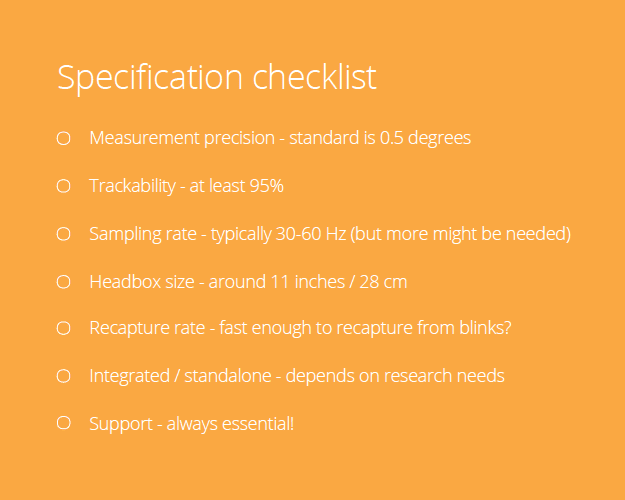

Key specifications to look for to get the ideal eye tracker:

Measurement precision: Measured in degrees of visual angle. The standard is about 0.5 degrees: low-end hardware starts at around 1.0 degree, mid-range hardware at 0.5 degrees, and high-end hardware at 0.1 degrees or even less.

Trackability: How much of the population can be tracked? The best systems track around 95% of the population; low-end systems track fewer people.

Sampling rate: How many times per second is the eye position measured? The typical range is 30 to 60 Hz, while specialized research equipment records at around 120 to 1000+ Hz.

Headbox size: How far is the respondent allowed to move relative to the eye tracker? A good system will typically allow around 11 inches (28 cm) of movement in each direction.

Recapture rate: How fast does the eye tracker detect the eye position after the eyes have been out of sight for a moment (e.g. during a blink)?

Integrated or standalone: Is the eye tracking hardware integrated into the monitor frame? Standalone eye trackers usually offer high-end specifications, but are also typically a bit more complex to set up.
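To get a feel for what the precision and sampling-rate figures mean in practice, the following sketch converts an angular error into millimeters on screen using s = 2·d·tan(θ/2), and a sampling rate into the time between samples. The 65 cm viewing distance is an assumption, not a value from this guide; substitute the distance used in your own setup.

```python
import math


def visual_angle_to_mm(angle_deg, viewing_distance_mm):
    """On-screen extent (mm) subtended by a visual angle, centered on the line of sight."""
    return 2.0 * viewing_distance_mm * math.tan(math.radians(angle_deg) / 2.0)


def sample_interval_ms(sampling_rate_hz):
    """Time between consecutive gaze samples, in milliseconds."""
    return 1000.0 / sampling_rate_hz


VIEWING_DISTANCE_MM = 650  # assumption: adjust to your own setup

for angle in (1.0, 0.5, 0.1):
    mm = visual_angle_to_mm(angle, VIEWING_DISTANCE_MM)
    print(f"{angle:.1f} deg error -> about {mm:.1f} mm on screen")

for rate in (30, 60, 120, 1000):
    print(f"{rate} Hz -> one sample every {sample_interval_ms(rate):.1f} ms")
```

At 65 cm, 0.5 degrees corresponds to roughly 5.7 mm on screen and 1.0 degree to roughly 11.3 mm, while a 60 Hz tracker delivers a sample only about every 16.7 ms. Both figures are worth comparing with the size of your smallest on-screen targets and the duration of the eye movements you want to study.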

Does your provider offer support? Most eye trackers can be run straight out of the box. To get started, however, live training is a helpful way to learn the ropes.

Later on, too, a little expert advice often comes in handy. Does your provider offer that kind of support? What about online support? And how long does it take them to reply when you need it most?

Seriously, good support is priceless.

Eye tracking software

Completing the picture


Of course, hardware is only half the battle. Before you can kick off your eye tracking research, you definitely need to think about which recording and data analysis software to use. Usually, separate software is required for data acquisition and data processing.

Although some manufacturers offer integrated solutions, you will most likely have to export the raw data to a dedicated analysis software for data inspection and further processing.

So which eye tracking software solution is the one you need?

Let’s first look at the usual struggles that are encountered with eye tracking software.

Struggle 1: Eye tracking software either records or analyzes

Usually, separate software is necessary for data recording and data processing. Despite automated procedures, proper data handling requires careful manual checks along the way. This recommended checking procedure is time-consuming and prone to error.

Struggle 2: Eye tracking software is bound to specific eye trackers

Typically, eye tracking soft- and hardware are paired.

Often, a software package is compatible with only one specific eye tracker, so if you want to mix and match devices or software, even within one brand, you could soon hit a wall.

Also, be aware that you will need separate software for remote and mobile eye trackers.

Not only does operating several software solutions require expert training beforehand, it might even prevent you from switching from one system to another.

Worst case scenario? Your lab will stick to outdated trackers and programs even though the latest generation of devices and software might offer improved usability and extended functionality.

Struggle 3: Eye tracking software is limited to certain stimulus categories

Usually, eye tracking systems don’t allow the recording of eye movements across different conditions and types of stimuli.

You will have to use one software for static images and videos, a different software for websites and screen captures, and yet another software for scenes or mobile tracking.

Struggle 4: Eye tracking software can be complex to use

Eye tracking software can be quite complex to use.

You have to be familiar with all relevant software-controlled settings, such as the eye tracker’s sampling rate, calibration, and gaze or saccade/fixation detection.
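Fixation detection is one of those settings worth understanding rather than treating as a black box. Below is a minimal sketch of one widely used approach, velocity-threshold identification (I-VT): consecutive samples moving slower than a velocity threshold are grouped into fixations, and faster steps are treated as saccades. It is not necessarily what any particular software package implements. It assumes gaze is already expressed in degrees of visual angle with strictly increasing timestamps, and the 30 degrees/s threshold and 60 ms minimum duration are illustrative defaults.

```python
import math
from dataclasses import dataclass


@dataclass
class Fixation:
    start_s: float
    end_s: float
    x_deg: float  # centroid of the fixation samples
    y_deg: float

    @property
    def duration_s(self):
        return self.end_s - self.start_s


def ivt_fixations(samples, velocity_threshold_dps=30.0, min_duration_s=0.06):
    """Velocity-threshold identification (I-VT) of fixations.

    samples: (t_s, x_deg, y_deg) tuples with strictly increasing timestamps.
    """
    fixations = []
    run = []  # consecutive samples currently classified as fixation

    def close_run():
        # Keep the run only if it lasted long enough to count as a fixation.
        if len(run) >= 2 and run[-1][0] - run[0][0] >= min_duration_s:
            n = len(run)
            fixations.append(Fixation(
                run[0][0], run[-1][0],
                sum(s[1] for s in run) / n,
                sum(s[2] for s in run) / n,
            ))
        run.clear()

    for prev, cur in zip(samples, samples[1:]):
        # Point-to-point angular velocity in degrees per second.
        velocity = math.hypot(cur[1] - prev[1], cur[2] - prev[2]) / (cur[0] - prev[0])
        if velocity < velocity_threshold_dps:
            if not run:
                run.append(prev)
            run.append(cur)
        else:
            close_run()  # a fast (saccadic) step ends the current fixation
    close_run()
    return fixations


# Hypothetical 60 Hz recording: dwell at (0, 0), jump 10 deg, dwell at (10, 0).
RATE_HZ = 60.0
samples = [(i / RATE_HZ, 0.0, 0.0) for i in range(10)]
samples += [(i / RATE_HZ, 10.0, 0.0) for i in range(10, 20)]
for f in ivt_fixations(samples):
    print(f"{f.start_s:.3f}-{f.end_s:.3f} s at ({f.x_deg:.1f}, {f.y_deg:.1f}) deg")
```

Real recordings also need noise filtering and handling of blinks and data loss, which this sketch deliberately leaves out.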

For the analysis, you have to know how to generate heat maps, define Areas of Interest (AOIs), and place markers. Statistical knowledge is recommended to analyze and interpret the final results.
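As an example of the analysis side, the following sketch computes three common AOI metrics (fixation count, total dwell time, and time to first fixation) for rectangular AOIs. The `AOI` class, the `aoi_metrics` function, and the fixation tuple layout are hypothetical; the input is assumed to be a chronological list of already detected fixations in screen pixel coordinates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AOI:
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x, y):
        # Screen coordinates: y grows downward, so top < bottom.
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def aoi_metrics(fixations, aois):
    """Per-AOI fixation count, dwell time (s) and time to first fixation (s).

    fixations: (onset_s, x_px, y_px, duration_s) tuples in chronological order.
    A fixation inside overlapping AOIs is counted for each of them.
    """
    stats = {a.name: {"fixations": 0, "dwell_s": 0.0, "ttff_s": None} for a in aois}
    for onset, x, y, duration in fixations:
        for a in aois:
            if a.contains(x, y):
                s = stats[a.name]
                s["fixations"] += 1
                s["dwell_s"] += duration
                if s["ttff_s"] is None:
                    s["ttff_s"] = onset
    return stats


aois = [AOI("logo", 0, 0, 300, 100), AOI("price", 800, 500, 1100, 600)]
fixations = [
    (0.20, 150, 50, 0.25),
    (0.50, 900, 550, 0.40),
    (1.00, 160, 60, 0.20),
    (1.30, 600, 300, 0.30),  # lands outside both AOIs
]
print(aoi_metrics(fixations, aois))
```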

Struggle 5: Eye tracking software rarely supports different biosensors

Eye tracking software is almost always just that: it tracks eyes but rarely connects to other biosensors that record emotional arousal and valence.

You will therefore need to use different recording software for your multimodal research.

As you most likely will have to set up each system individually, a considerable amount of technical skill is required.

If you happen to be a tech whiz, you might be on the safe side. If not, you could run into serious issues even before getting started.

You also have to make sure that the different data streams are synchronized. Only then can you analyze how data from different biosensors relate to each other.
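One simple way to relate two streams once they share a clock is to resample one signal at the timestamps of the other. The sketch below linearly interpolates GSR values at each gaze sample's timestamp. It assumes both streams are already timestamped on a common clock, which is usually the hard part in practice; the function names and sample values are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(times, values, t):
    """Linearly interpolate a sampled signal at time t, holding end values outside its range."""
    i = bisect_left(times, t)
    if i == 0:
        return values[0]
    if i == len(times):
        return values[-1]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def align_gsr_to_gaze(gaze, gsr_times, gsr_values):
    """Attach an interpolated GSR value to every gaze sample (t_s, x, y)."""
    return [(t, x, y, interpolate_at(gsr_times, gsr_values, t)) for t, x, y in gaze]


# Hypothetical data: GSR in microsiemens at 4 Hz, three gaze samples in pixels.
gsr_times = [0.0, 0.25, 0.5, 0.75, 1.0]
gsr_values = [2.0, 2.0, 2.4, 2.8, 2.8]
gaze = [(0.1, 512, 384), (0.6, 520, 380), (1.2, 530, 390)]
for row in align_gsr_to_gaze(gaze, gsr_times, gsr_values):
    print(row)
```

Linear interpolation is only one option; nearest-sample matching or averaging within windows are common alternatives, depending on how fast each signal changes.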

What should a picture-perfect eye tracking software hold in store for you?

Ideally, your eye tracking software:

- Connects to different eye tracking devices (mix and match, remember?)

- Is scalable to meet your research needs: It allows you to conveniently add other biosensors that capture cognitive, emotional or physiological processes

- Accommodates both data recording and data analysis

- Tracks various stimulus categories: Screen-based stimuli (video, images, websites, screen capture, mobile devices, etc.), real-life environments (mobile eye tracking, physical products, etc.) as well as survey stimuli for self-reports

- Grows with you: It can be used equally by novices that are just getting started with eye tracking and expert users that know the ropes

Eye tracking done right with iMotions

The iMotions platform is an easy-to-use software solution for study design, multisensor calibration, data collection, and analysis. Out of the box, iMotions supports over 50 leading eye trackers and biosensors including EEG, GSR, ECG, EMG, and facial expression analysis, along with surveys for multimodal human behavior research.

What’s in it for you?

From start to finish, iMotions has got you covered:

• Run your multimodal study on one single computer

• Forget about complex setups: iMotions requires minimal technical skills and effort for easy experimental setup and data acquisition

• Get real-time feedback on calibration quality for highest measurement accuracy

• Draw on unlimited resources: Plug and play any biosensor and synchronize it with any type of stimulus (images, videos, websites, screen recordings, surveys, real-life objects, you name it)

• Receive immediate feedback on data quality throughout the recording across respondents

• Worried about data synchronization? Don’t be. While you focus on what really matters, iMotions takes care of synchronizing data streams across all sensors

Contact Us

Want to learn how iMotions can help you with your eye tracking questions and research setup? Feel free to reach out and one of our solutions specialists will get right back to you.

Eye Tracking

The Complete Pocket Guide

- 32 pages of comprehensive eye tracking material

- Valuable eye tracking research insights (with examples)

- Learn how to take your research to the next level

Glossary

- Eye Tracking: The process of measuring either the point of gaze (where one is looking) or the motion of an eye relative to the head. It’s a technology that detects eye movement, eye position, and point of gaze.

- Gaze Plot: A visual representation of the sequence and duration of where a person looked in a given scene, typically indicated by dots (fixations) and lines (saccades).

- Fixation: The act of focusing the eyes on a single point. In eye tracking, it’s a period when the eyes are relatively stationary and are absorbing information from the object of focus.

- Saccade: Rapid and simultaneous movement of both eyes between two or more phases of fixation in the same direction. In simpler terms, it’s the quick, jerky movement of the eyes as they jump from one point to another.

- Heatmap: A data visualization technique used in eye tracking to show areas of different levels of gaze concentration. Warmer colors (like red and orange) typically indicate areas of higher focus or longer fixation.

- Pupil Dilation: The change in the size of the pupil, which can be indicative of cognitive and emotional responses. Eye tracking technology can measure these changes to infer user engagement or emotional state.

- Binocular Eye Tracking: Eye tracking that involves monitoring both eyes to understand gaze direction and gaze depth (from the convergence of the two eyes).

- Monocular Eye Tracking: Eye tracking that involves monitoring just one eye. It’s often used in situations where full binocular tracking isn’t necessary or feasible.

- Calibration: The process of configuring the eye tracking system to the individual characteristics of a user’s eyes. Calibration ensures accuracy in tracking the gaze.

- Stimulus: Anything in the environment that can elicit a response from the participant during an eye tracking study. This could be an image, video, or any visual element.

- Eye Tracking Metrics: Quantitative data gathered from an eye tracking study. These metrics can include fixation duration, saccade length, pupil dilation, and more.

- Usability Testing: A research method used to evaluate how easy a website, software, or device is to use. Eye tracking can be employed in usability testing to see where participants look and how they navigate.

- Field of View: The extent of the observable world seen at any given moment. In eye tracking, it refers to the area within which eye movements are recorded.

- Gaze Contingent Display: A display system that changes content based on where the user is looking. It’s used in advanced eye tracking research to study gaze behavior in dynamic situations.
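To make the heatmap entry more concrete, here is a minimal, dependency-free sketch of how a duration-weighted heatmap can be accumulated: each fixation adds a Gaussian blob scaled by its duration, and the result is normalized so the hottest cell is 1.0. The function name, the kernel width (`sigma`, in pixels), and the 3-sigma cutoff are illustrative assumptions; mapping the values to warm and cool colors is left to a plotting library.

```python
import math


def fixation_heatmap(fixations, width, height, sigma=30.0):
    """Duration-weighted Gaussian heatmap on a width x height pixel grid.

    fixations: iterable of (x_px, y_px, duration_s). Returns a list of rows
    normalized so the hottest cell is 1.0 (all zeros if nothing was added).
    """
    grid = [[0.0] * width for _ in range(height)]
    radius = int(3 * sigma)  # ignore contributions beyond about 3 standard deviations
    for fx, fy, dur in fixations:
        for y in range(max(0, int(fy) - radius), min(height, int(fy) + radius + 1)):
            for x in range(max(0, int(fx) - radius), min(width, int(fx) + radius + 1)):
                d2 = (x - fx) ** 2 + (y - fy) ** 2
                grid[y][x] += dur * math.exp(-d2 / (2 * sigma ** 2))
    peak = max(max(row) for row in grid)
    if peak > 0:
        grid = [[v / peak for v in row] for row in grid]
    return grid


# Hypothetical fixations: a long one at (20, 30) and a shorter one at (70, 30).
hm = fixation_heatmap([(20, 30, 0.5), (70, 30, 0.2)], width=100, height=60, sigma=5.0)
print(hm[30][20], round(hm[30][70], 2))  # 1.0 0.4
```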
