To answer the question “What Is Facial Expression Analysis?”, we first have to take a look at ourselves. Humans are emotional creatures. Our emotional state shapes how we behave, from the most fundamental processes to complex actions and difficult decisions [1, 2]. Because our lives are in many ways guided by our emotions, knowing more about emotions allows us to understand human behavior more broadly.

It’s clear that understanding people’s emotional states can be useful for a range of applications, from building a better understanding of human psychology to studying behavior to improve user experiences, developing more effective advertising campaigns, and beyond.

But while the importance of understanding emotions is clear, it can be difficult to obtain objective, real-time data about an individual’s emotional state.

There are questionnaires designed to measure an individual’s emotional state, although, as with any questionnaire, misleading biases can easily be introduced [3]. Furthermore, questionnaires capture only a snapshot at a single time point, so the data can give only a general picture of how someone feels.

Facial expression analysis, on the other hand, can provide objective, real-time data about how our faces express emotional content. While there is debate about exactly what emotional content facial expressions convey [4, 5], facial expression analysis remains one of the few tools that offer some insight into how someone could be feeling [6], an opportunity not to be passed up.

But before we talk more about the analysis, let’s start with the basics:

What are facial expressions?

Our face is an intricate, highly differentiated part of our body; in fact, it is one of the most complex signal systems available to us. It includes 43 structurally and functionally autonomous muscles, each of which can be triggered independently [7].

The facial musculature is also unusual in how it attaches. Most muscles in the human body connect two bones, but the face is the only place in the body where muscles attach either to a bone and facial tissue, or to facial tissue only, such as the muscles surrounding the eyes and lips.

All muscles in our body are innervated by nerves, which ultimately receive input from the spinal cord and brain. The nerve connection is bidirectional: the nerve triggers muscle contractions based on signals from the brain, and it can also carry information back to the brain.

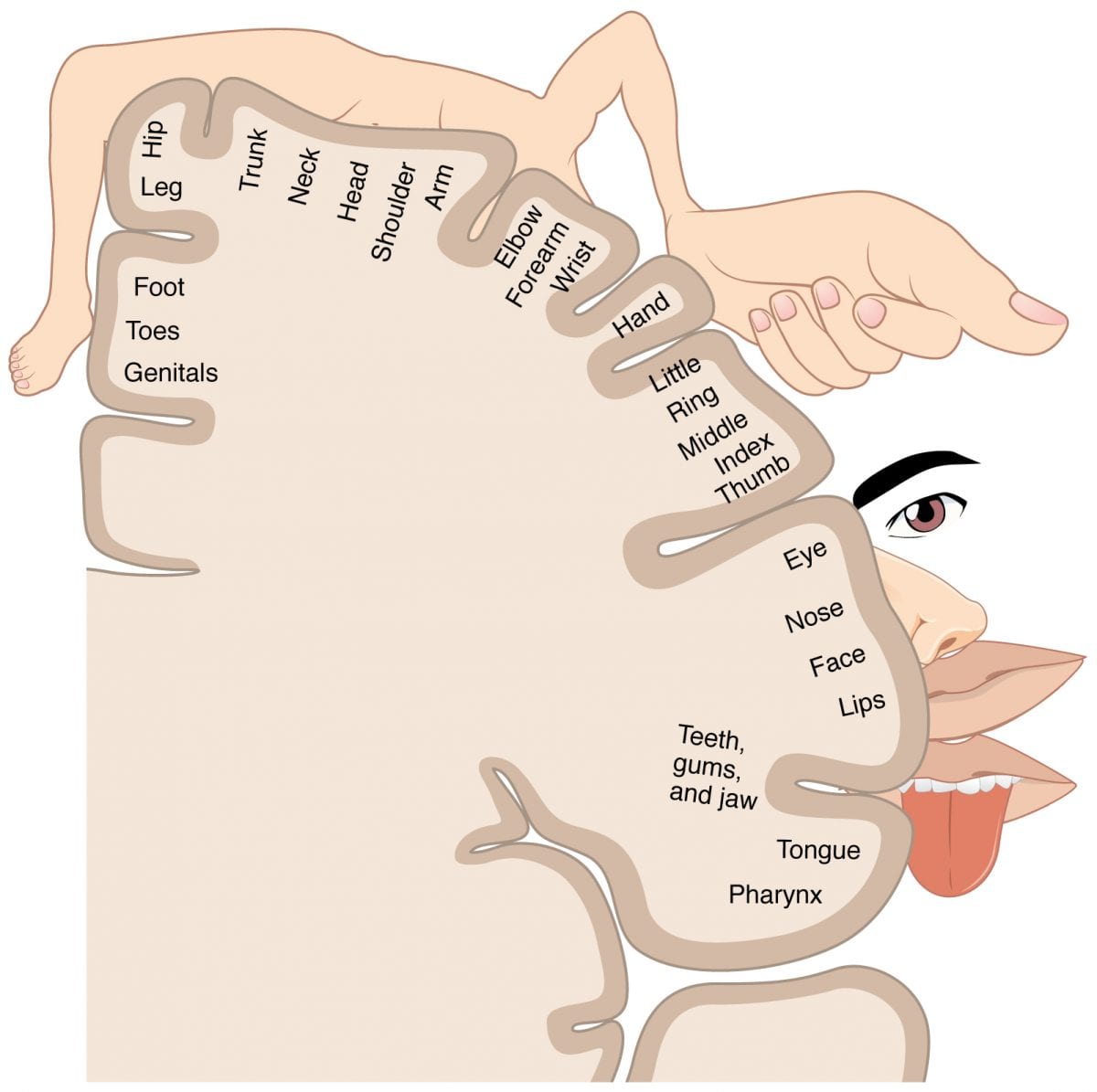

Almost all facial muscles are innervated by a single nerve, the facial nerve, which is also known as cranial nerve VII. The facial nerve emerges from deep within the brainstem (the pons), runs through the facial canal in the temporal bone, leaves the skull slightly below the ear (through the stylomastoid foramen), and branches out to the facial muscles [8]. The facial nerve is also connected to the primary motor region of our neocortex, which controls voluntary muscle movements throughout the body (a representative image of how this region is organized is shown above; [9]).

Our facial expressions therefore often originate in the primary motor region, which itself receives signals from the thalamus and the supplementary motor cortex [10, 11]. Tracing this pathway further leads to an ever-wider network of brain areas that can activate facial expressions at various stages.

When the facial nerve signals to a muscle, the muscle contracts (or relaxes). This does not necessarily produce an externally observable change in facial expression, a point discussed further below.

How does facial expression analysis work?

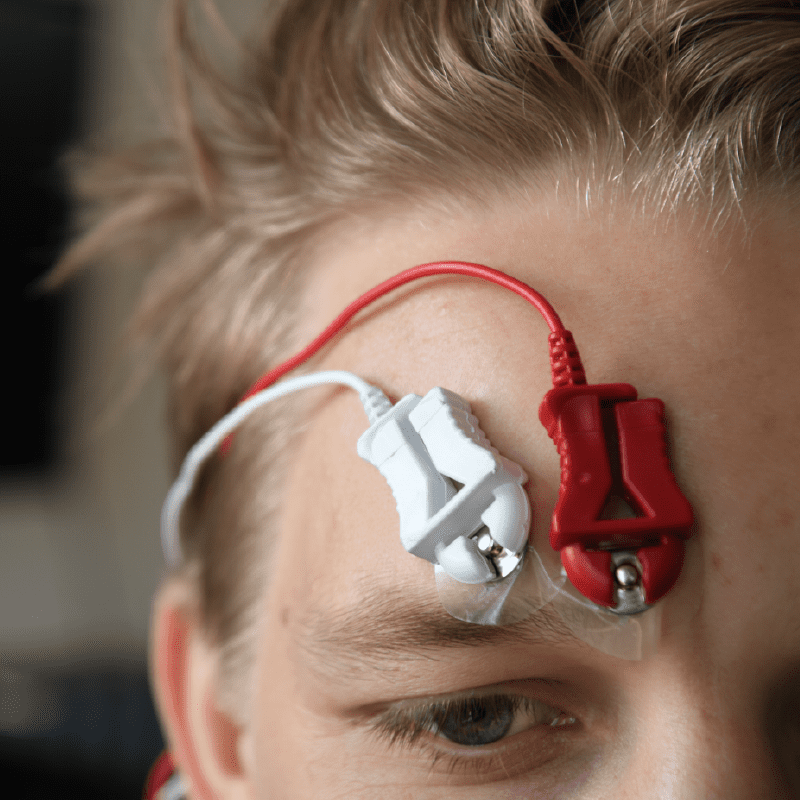

There are three principal methods of facial expression analysis, each with its own advantages and disadvantages. The oldest of the three, facial electromyography (fEMG), involves recording the electrical activity of the facial muscles.

fEMG

Recordings from fEMG have shown that muscle activity can be detected even when no external change in facial expression is visible. This activity has been proposed to reflect the individual’s emotional state (e.g., increased activity of the zygomaticus major, the muscle involved in smiling, has been proposed to be related to feelings of joy; [12]).

While fEMG can capture facial muscle activity that is impossible to detect visually, recordings are limited to the small number of electrodes that can be placed on the face. Applying the electrodes also requires knowledge of both the facial musculature and correct electrode placement.
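To make the idea of detecting activity without a visible change more concrete, here is a minimal Python sketch of one common approach: compute the root-mean-square (RMS) amplitude of short windows of an fEMG signal and flag windows that exceed a resting baseline by some number of standard deviations. The function names, the non-overlapping windows, and the threshold of three standard deviations are illustrative assumptions rather than an established standard; real pipelines typically also filter the raw signal first, which is omitted here.

```python
import math
from statistics import mean, stdev


def rms(samples):
    """Root-mean-square amplitude of one window of fEMG samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def windowed_rms(signal, window):
    """RMS of each non-overlapping window; a trailing partial window is dropped."""
    return [rms(signal[i:i + window]) for i in range(0, len(signal) - window + 1, window)]


def active_windows(signal, baseline, window, k=3.0):
    """Flag windows whose RMS exceeds the resting baseline mean by k standard deviations.

    `baseline` is a recording taken while the face is at rest. Filtering is
    omitted, and k=3.0 is an illustrative choice, not an established standard.
    """
    base = windowed_rms(baseline, window)  # needs at least two windows for stdev
    threshold = mean(base) + k * stdev(base)
    return [value > threshold for value in windowed_rms(signal, window)]


# Hypothetical data: a quiet rest period, then a task with one burst of activity.
rest = [1, -1, 1, -1, 2, -2, 2, -2]
task = [1, -1, 1, -1, 10, -10, 10, -10]
print(active_windows(task, rest, window=4))  # [False, True]
```

A threshold relative to each person’s own resting signal is used here because resting amplitude varies between individuals and electrode placements.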

Facial Action Coding System (FACS)

Shortly after fEMG began to be used to detect changes in facial expression, Paul Ekman and Wallace Friesen introduced the Facial Action Coding System (FACS) [13]. FACS built upon work by Carl-Herman Hjortsjö [14], who divided the face into discrete muscle movements.

FACS has become the foundational reference for studying facial expressions and has shaped the field of facial expression analysis since its introduction. By defining a set of facial movements tied to the underlying muscle actions, FACS allows researchers to quantify the action of the face and relate it to emotional expressions.
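As a small illustration of relating Action Units (AUs) to emotional expressions, the Python sketch below checks which prototype combinations are fully present in a set of coded AUs. The three prototypes are combinations commonly cited in the literature (for example, AU 6 + AU 12 for a smile associated with happiness), but this table is an illustrative assumption, not an official or complete FACS mapping, and it ignores intensity and timing.

```python
# Illustrative prototype Action Unit (AU) combinations; not an official or complete mapping.
PROTOTYPES = {
    "happiness": {6, 12},       # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},      # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": {1, 2, 5, 26},  # inner + outer brow raiser + upper lid raiser + jaw drop
}


def matching_expressions(observed_aus):
    """Return, alphabetically, the prototypes whose AUs are all present in observed_aus."""
    observed = set(observed_aus)
    return sorted(label for label, aus in PROTOTYPES.items() if aus <= observed)


print(matching_expressions([6, 12, 25]))  # ['happiness']
print(matching_expressions([1, 2]))       # []
```

A subset test like this is deliberately simple; in practice, additional AUs, intensities, and individual variation all complicate the picture.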

While the importance of FACS for facial expression analysis is hard to overstate, applying its methods in practice is not without difficulty. To quantify facial expressions properly with FACS, trained and certified FACS coders must determine which muscles are moving and how intense each movement is. In practical terms, this means that a video of an interaction must be reviewed essentially frame by frame, which takes a lot of time.
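A quick back-of-the-envelope calculation shows why frame-by-frame coding is so time-consuming. The sketch below counts the frames a coder would need to step through; the default of 30 frames per second and the study size in the example are hypothetical assumptions, not figures from any particular study.

```python
def frames_to_review(duration_min, fps=30, participants=1):
    """Total number of video frames a manual coder would need to step through.

    fps=30 is an assumed, typical webcam frame rate; adjust it to your recordings.
    """
    return round(duration_min * 60 * fps * participants)


# Hypothetical study: 20 participants, each recorded for 10 minutes.
print(frames_to_review(10))                   # 18000
print(frames_to_review(10, participants=20))  # 360000
```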

Facial expression analysis software

Subsequently, software has emerged that aims to reduce this processing burden by analyzing facial expressions automatically, either according to the FACS method or based on its principles. One such program was Emotient, which had Paul Ekman as an advisor. Emotient was acquired by Apple in 2016.

Other companies also provide automatic facial expression analysis, such as Affectiva, which has trained its algorithms on over seven million faces (the largest such dataset of any facial expression analysis software company) and whose technology is integrated into the iMotions platform.

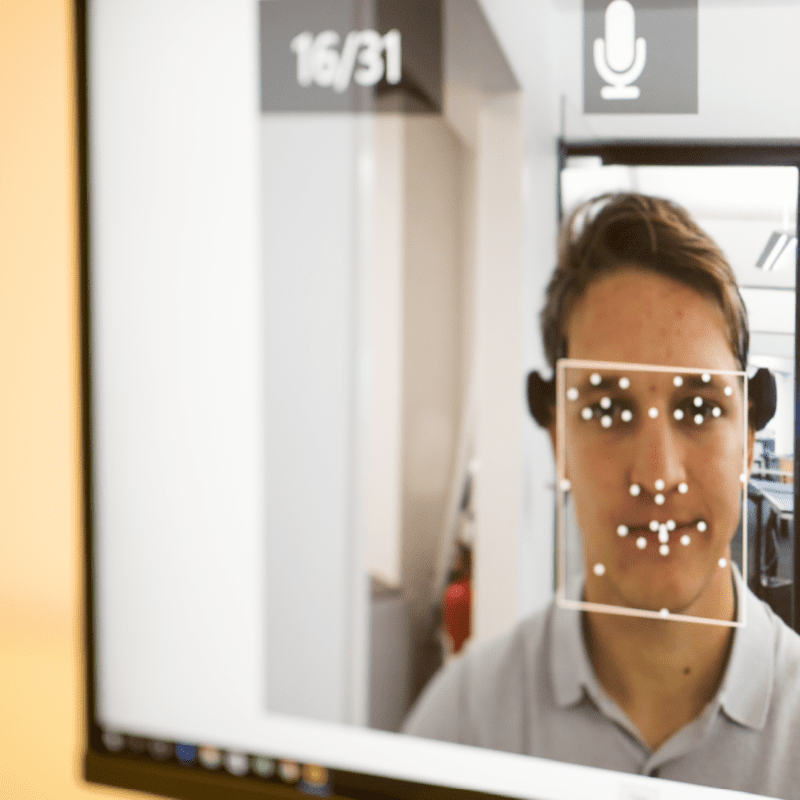

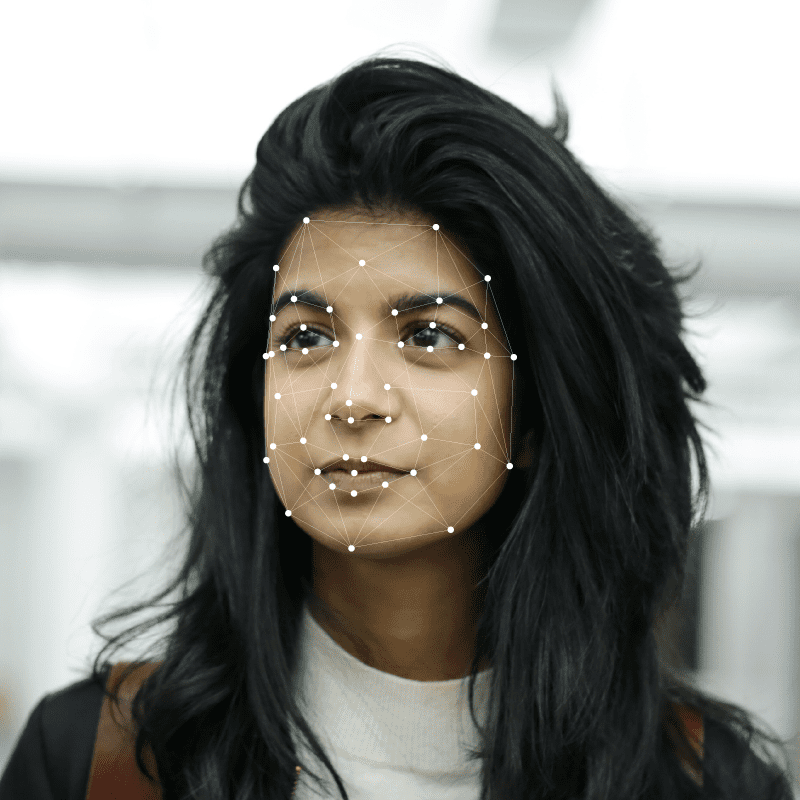

The software first detects the face and then uses computer vision algorithms to locate key landmarks, such as the corners of the eyes and mouth (similar to the FACS division of the face). Programs may differ in how the images are processed from there; Affectiva, for example, uses algorithms trained through deep learning to analyze these landmarks and predict facial expressions.
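To illustrate how landmark positions can be turned into numbers, here is a minimal Python sketch of a hand-crafted geometric feature. It is not how Affectiva or any other vendor works (Affectiva uses deep learning, as noted above); it simply measures mouth width relative to the distance between the eyes, so the value is largely unaffected by how close the face is to the camera, and compares a frame against a neutral frame. The landmark names and the use of mouth widening as a rough cue for lip corner pulling (AU 12) are assumptions made for this example.

```python
import math

Point = tuple[float, float]  # (x, y) pixel coordinates


def mouth_width_ratio(landmarks: dict[str, Point]) -> float:
    """Mouth width divided by inter-ocular distance, which removes overall face scale."""
    mouth = math.dist(landmarks["mouth_left"], landmarks["mouth_right"])
    eyes = math.dist(landmarks["eye_left"], landmarks["eye_right"])
    return mouth / eyes


def lip_corner_pull(neutral: dict[str, Point], current: dict[str, Point]) -> float:
    """Relative widening of the mouth compared with a neutral frame (0.0 = no change)."""
    return mouth_width_ratio(current) / mouth_width_ratio(neutral) - 1.0


# Hypothetical landmarks from some face-tracking step (names are illustrative).
neutral = {"eye_left": (0, 0), "eye_right": (10, 0), "mouth_left": (2, 10), "mouth_right": (8, 10)}
smiling = {"eye_left": (0, 0), "eye_right": (10, 0), "mouth_left": (1, 9), "mouth_right": (9, 9)}
print(round(lip_corner_pull(neutral, smiling), 3))  # 0.333
```

Dividing by the inter-ocular distance is what makes the feature comparable across frames in which the participant leans toward or away from the webcam.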

Software built to analyze facial expressions has a clear advantage in the time and resources required: a simple webcam is the only hardware needed. However, accuracy is generally thought to be higher with fEMG or manual FACS coding. Ultimately, those working with facial expression analysis will need to decide which method best meets their needs and requirements.

Facial expression analysis data

The type of data depends, of course, on the method used to collect it (fEMG, manual FACS coding, or automatic facial expression analysis through software). We’ll go through the data types for each below.

fEMG

As with other forms of EMG, fEMG data describe the level of electrical activity generated by the muscles relative to a reference level. This reference is determined from recordings of what is called the Maximum Voluntary Contraction (MVC): the maximum intensity of muscular contraction that the individual is capable of producing.

The voltage difference between two electrodes placed on the skin is then recorded and analyzed relative to this maximum level of activity.
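The normalization described above can be sketched in a few lines of Python. This sketch assumes the common approach of summarizing each recording by its root-mean-square (RMS) amplitude and expresses task activity as a percentage of the MVC recording; the function names and sample values are hypothetical, and filtering and artifact removal are omitted.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of voltage samples."""
    if not samples:
        raise ValueError("need at least one sample")
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def percent_mvc(task_samples, mvc_samples):
    """Task activity as a percentage of the Maximum Voluntary Contraction (MVC)."""
    return 100.0 * rms(task_samples) / rms(mvc_samples)


# Hypothetical zygomaticus major recordings (microvolts).
mvc = [40, -40, 40, -40]
task = [10, -10, 10, -10]
print(percent_mvc(task, mvc))  # 25.0
```

Expressing activity as a percentage of each person’s own MVC makes values comparable across participants whose raw signal amplitudes differ.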

Facial Action Coding System (FACS)

FACS assigns a number to each distinct muscle action (called an Action Unit, or AU), which can be further specified by the side of the face (left or right) and by the strength of the action. Strength is scored from ‘A’ (trace activity) to ‘E’ (maximum activity). For example, a strong opening of both eyes can be coded as R5C + L5C (Action Unit 5, the upper lid raiser produced by the levator palpebrae superioris, on the right and left sides, at intensity ‘C’).
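The notation described above is regular enough to parse mechanically. The following Python sketch splits a code string such as R5C + L5C into its parts and converts the intensity letters A to E into the ordinal scores 1 to 5. It is a hypothetical helper that handles only the pattern shown here (an optional L or R prefix, an Action Unit number, and an optional intensity letter); full FACS notation includes further conventions it does not cover.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Optional side (L/R), Action Unit number, optional intensity letter A-E.
CODE_PATTERN = re.compile(r"([LR])?(\d+)([A-E])?")


@dataclass(frozen=True)
class ActionCode:
    au: int                   # Action Unit number, e.g. 5 for the upper lid raiser
    side: Optional[str]       # "L", "R", or None when no side is given
    intensity: Optional[int]  # 1 (A, trace) to 5 (E, maximum); None if unscored


def parse_facs(expression: str) -> list[ActionCode]:
    """Parse a '+'-separated FACS string such as 'R5C + L5C'."""
    codes = []
    for token in expression.replace(" ", "").split("+"):
        match = CODE_PATTERN.fullmatch(token)
        if match is None:
            raise ValueError(f"unrecognized FACS code: {token!r}")
        side, au, letter = match.groups()
        intensity = "ABCDE".index(letter) + 1 if letter else None
        codes.append(ActionCode(int(au), side, intensity))
    return codes


print(parse_facs("R5C + L5C"))
# [ActionCode(au=5, side='R', intensity=3), ActionCode(au=5, side='L', intensity=3)]
```

Mapping the letters to integers keeps the ordering of the intensity scale, which makes it easy to filter or compare codes (for example, keeping only actions at ‘C’ or above).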

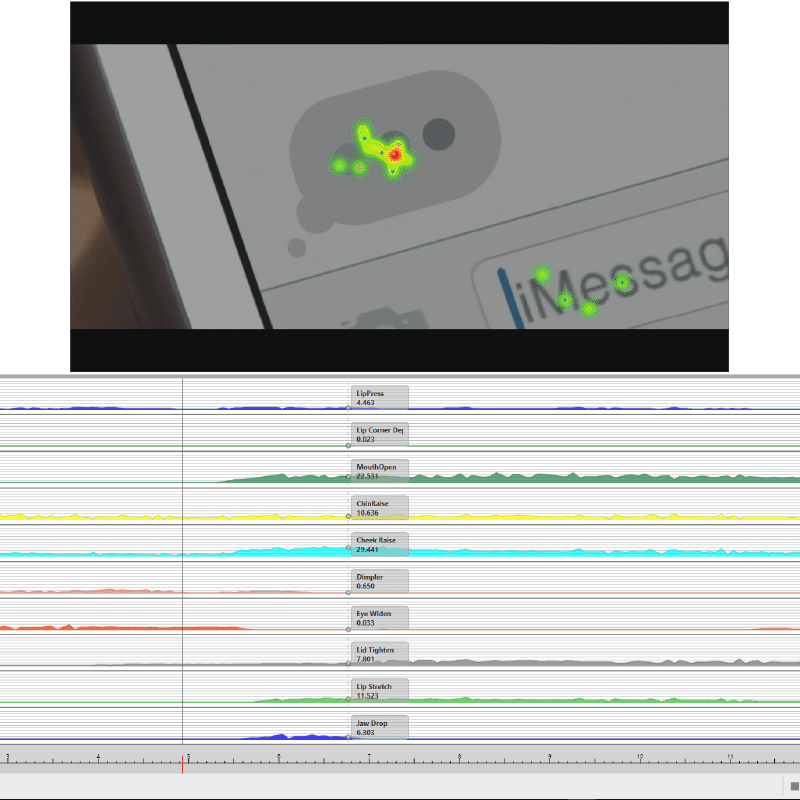

Automatic facial expression analysis

The exact data type depends on the software used for automatic facial expression analysis. Affectiva, for example, provides values called “detectors”, ranging from 0 (no expression) to 100 (a fully present expression). These values can then be analyzed just like any other data.
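For example, per-frame detector values can be summarized with ordinary descriptive statistics. The Python sketch below reports the mean, the peak, and the percentage of frames at or above a cut-off. The function name, the input format (a plain list of values from 0 to 100), and the cut-off of 50 are hypothetical choices for illustration; they are not part of Affectiva’s or iMotions’ software.

```python
def summarize_detector(values, threshold=50.0):
    """Summarize per-frame detector values on a 0-100 scale.

    threshold is an illustrative cut-off for counting an expression as present.
    """
    if not values:
        raise ValueError("no frames to summarize")
    present = sum(1 for v in values if v >= threshold)
    return {
        "mean": sum(values) / len(values),
        "peak": max(values),
        "percent_present": 100.0 * present / len(values),
    }


# Hypothetical "joy" detector values for eight video frames.
joy = [0, 0, 5, 20, 60, 85, 100, 70]
print(summarize_detector(joy))
# {'mean': 42.5, 'peak': 100, 'percent_present': 50.0}
```

The percentage of frames above a cut-off is often easier to interpret than the mean, since brief, strong expressions can be diluted in a long recording.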

Conclusion

James Russell and José-Miguel Fernández-Dols stated in 1997: “Linking faces to emotions may be common sense, but it has turned out to be the single most important idea in the psychology of emotion” [15]. While the details of that link remain a source of debate, facial expression analysis is one of the few tools available that can provide objective data connected to emotional expressions.

There are various ways to carry out facial expression analysis, and which is best for your research will depend largely on the resources you have available and how you prioritize them. The best decision you can make is an informed one. To keep learning about facial expressions, download our free guide below.

Free 42-page Facial Expression Analysis Guide

For Beginners and Intermediates

- Get a thorough understanding of all aspects

- Valuable facial expression analysis insights

- Learn how to take your research to the next level

References

[1] Sanfey, A.G., Rilling, J.K., Aronson, J.A., Nystrom, L.E., & Cohen, J.D. (2003). The neural basis of economic decision-making in the Ultimatum Game. Science, 300, 1755–1758.

[2] Angie, A. D., Connelly, S., Waples, E. P., & Kligyte, V. (2011). The influence of discrete emotions on judgement and decision-making: A meta-analytic review. Cognition and Emotion, 25, 1393–1422.

[3] Choi, B. C. K., & Pak, A. W. P. (2005). A catalog of biases in questionnaires. Preventing Chronic Disease, 2, 1-13.

[4] Carroll, J. M., & Russell, J. A. (1996). Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology, 70, 205–218.

[5] Aviezer, H., Trope, Y., & Todorov, A. (2012). Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science, 338, 1225–1229.

[6] Sebe, N., Lew, M. S., Cohen, I., Sun, Y., Gevers, T., & Huang, T. S. (2004). Authentic facial expression analysis. In Proc. International Conf. Face and Gesture Recognition, 517–522.

[7] Moore, K. L., Dalley, A. F., & Agur, A. M. R. (2010). Moore’s clinical anatomy. United States of America: Lippincott Williams & Wilkins.

[8] Gupta, S., Mends, F., Hagiwara, M., Fatterpekar, G., & Roehm, P. C. (2013). Imaging the facial nerve: A contemporary review. Radiol Res Pract, 248039, 1–14.

[9] OpenStax College, CC BY 3.0. Retrieved September 24th, 2018, from: https://commons.wikimedia.org/wiki/File:1421_Sensory_Homunculus.jpg

[10] Behrens, T., Johansen-Berg, H., Woolrich, M., Smith, S., Wheeler-Kingshott, C., Boulby, P., Barker, G., Sillery, E., Sheehan, K., Ciccarelli, O., Thompson, A., Brady, J., & Matthews, P. (2003). Noninvasive mapping of connections between human thalamus and cortex using diffusion imaging. Nat. Neurosci., 6(7), 750–757.

[11] Oliveri, M., Babiloni, C., Filippi, M. M., Caltagirone, C., Babiloni, F., Cicinelli, P., Traversa, R., Palmieri, M. G. & Rossini, P. M. (2003). Influence of the supplementary motor area on primary motor cortex excitability during movements triggered by neutral or emotionally unpleasant visual cues. Exp Brain Res, 149, 214–221.

[12] Schmidt, K. L., Ambadar, Z., Cohn, J. F., & Reed, L. I. (2006). Movement differences between deliberate and spontaneous facial expressions: Zygomaticus major action in smiling. Journal of Nonverbal Behavior, 30, 37–52.

[13] Ekman, P., and Friesen, W. V. (1978). Facial Action Coding System: A technique for the measurement of facial movement. Palo Alto, CA: Consulting Psychologists Press.

[14] Hjortsjö, C. H. (1970). Man’s face and mimic language. Malmö, Sweden: Nordens Boktryckeri.

[15] Russell, J. A., & Fernández-Dols, J. M. (1997). The psychology of facial expression. Cambridge: Cambridge University Press.