At iMotions, we often talk about the intersection between insights gleaned from biosensor data and information gathered via more conventional methods such as questionnaires and self-report. However, observation-based behavioral data is a very important and often overlooked source of information that you can also record in iMotions. In this blog post, we will cover how iMotions can be used as a tool for behavioral coding (or coding of any kind of event) as observed in videos or other types of recordings.

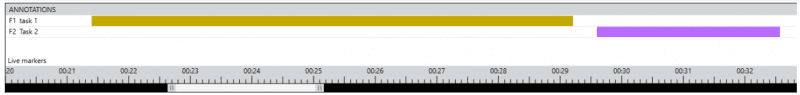

Caption: The timeline is divided into segments using annotations created after the fact, in this case to mark the onset and offset of a series of tasks performed by the participant.


What is Behavioral Coding?

Behavioral coding is defined as a set of methodical, standardized, quantifiable observations about a participant's behavior. Often, these observations are made by human coders reviewing recordings of that behavior. Behavioral codes are widely used and vary considerably depending on the use case; they are essential tools in psychology, human factors, and usability research, among many other fields.

Did you know that all you need for behavioral coding is the core iMotions software platform? This means that recordings with high-quality annotations and coding can be created without any biosensor hardware add-ons, although if you are interested in layering in physiological data, we can certainly help you there as well. If you would like to know more about biosensors, feel free to browse our extensive library of blog posts on the subject.


Using tools such as timeline annotations, behavioral coding can be combined with biometric data and self-report to produce a more comprehensive analysis and deeper insights than any single source could provide on its own.

If you are already an iMotions user, this post aims to make you more familiar with live markers, event markers, and annotation tools for coding purposes. If you are not yet a user and are looking for a tool to code your video material, this article is a quick way to understand what iMotions offers.

Where can I get my recordings from?

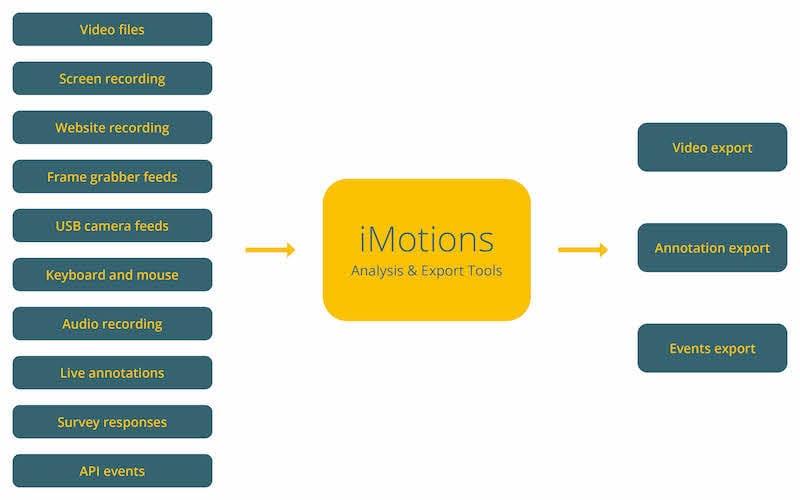

Behavioral data can be captured in iMotions in many ways. As the diagram shows, the input can be various types of video feeds streamed directly into iMotions, as well as externally recorded videos that are imported as files for analysis. Alongside video feeds, the system can also capture live annotations (also known as live markers) as well as keyboard and mouse events produced by the participant. More complex applications can also be streamed in through the iMotions API, for example game telemetry data or events from a driving simulator.

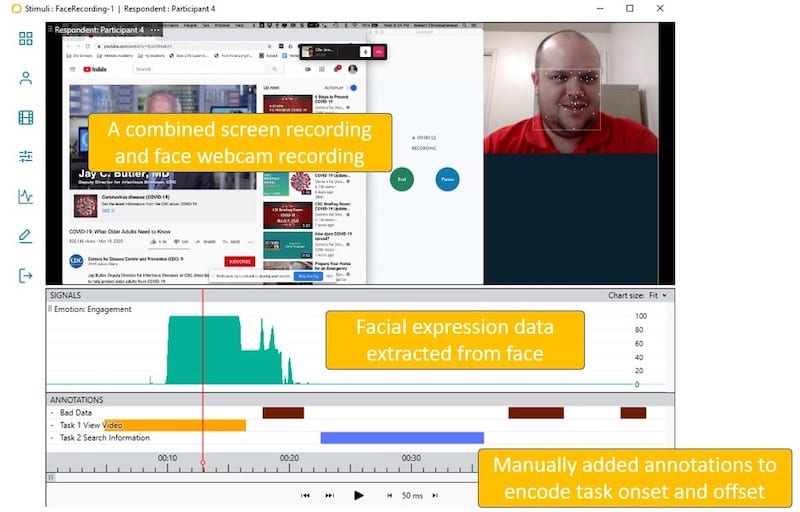

Example: website and face recordings from a remote data collection tool, imported after the session

Caption: An example of importing a screen recording obtained from the remote data collection tool Lookback.io. The video contains both a screen grab and a face recording that can be used for facial expression analysis.


You can use third-party tools to obtain a screen recording along with a face recording while the subject performs tasks on a website using their own computer. The recordings can be downloaded and imported into an iMotions study for analysis. Using annotations, it is possible to quantify, for example, task performance and time on task. Furthermore, facial expression analysis can be applied to gain insight into emotional responses associated with key events in the session.
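As a rough illustration of how onset/offset annotations turn into a time-on-task measure, the Python sketch below sums the annotated duration per task. The `Annotation` class, its field names, and the example timestamps are hypothetical; they stand in for whatever you export from iMotions and do not reflect its actual data format.

```python
from dataclasses import dataclass


# Hypothetical representation of one annotation segment: a task label plus
# onset/offset times in seconds from the start of the recording.
@dataclass
class Annotation:
    label: str
    onset_s: float
    offset_s: float

    @property
    def duration_s(self) -> float:
        return self.offset_s - self.onset_s


def time_on_task(annotations: list[Annotation]) -> dict[str, float]:
    """Sum annotated duration per task label (a task may be annotated more than once)."""
    totals: dict[str, float] = {}
    for a in annotations:
        if a.offset_s < a.onset_s:
            raise ValueError(f"offset before onset in {a.label!r}")
        totals[a.label] = totals.get(a.label, 0.0) + a.duration_s
    return totals


if __name__ == "__main__":
    # Illustrative values only, not real study data.
    session = [
        Annotation("Find product", 12.0, 47.5),
        Annotation("Add to cart", 47.5, 61.0),
        Annotation("Checkout", 61.0, 140.25),
    ]
    for task, seconds in time_on_task(session).items():
        print(f"{task}: {seconds:.2f} s")  # e.g. "Find product: 35.50 s"
```

Summing per label, rather than taking a single segment, keeps the measure correct when a participant returns to the same task later in the session.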

Example: gameplay recording and multiple video feeds recorded in iMotions

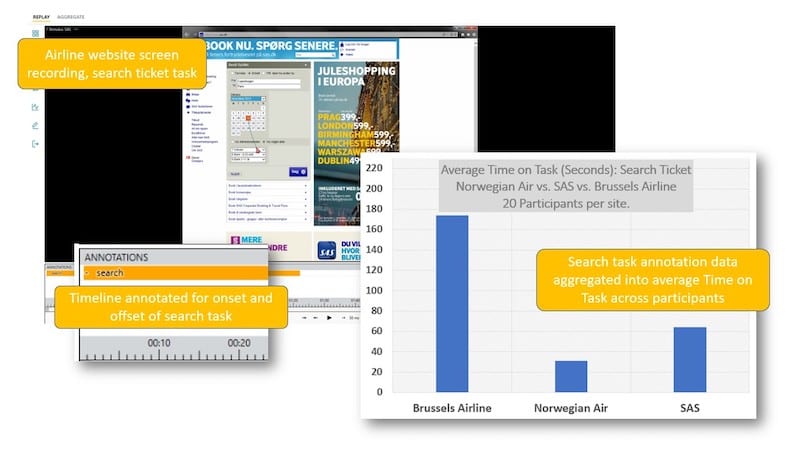

Example: Time on Task on e-commerce website

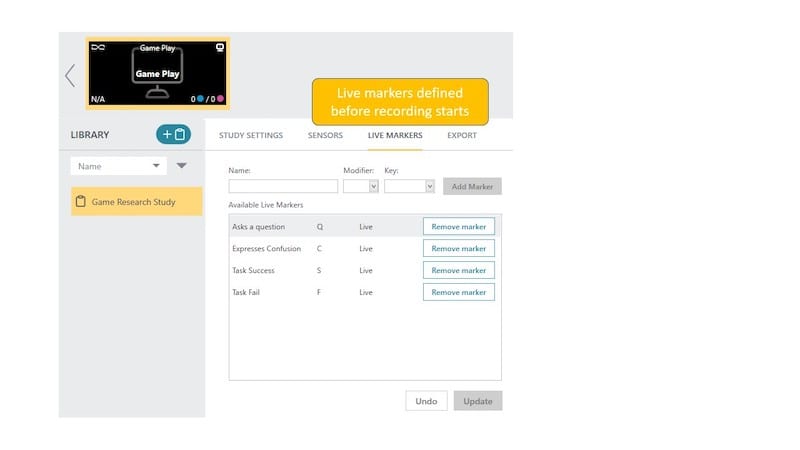

Behavior Coding Technique 1: Annotate Live with LiveMarkers

Behavioral coding is often done live, with a human coder flagging specific events as they happen. Many usability platforms support this kind of live coding; in iMotions, it is done with LiveMarkers. Here is one such schema for a very basic usability test:

Q = Subject asks a question

C = Subject expresses confusion

S = Subject successfully completes a task

F = Subject fails a task

By using the LiveMarkers feature in iMotions, you can embed all of these codes into your study and log events live by pressing the hotkeys associated with each code. These events are shown on the timeline when you review the recording in iMotions, and they are exported as user-generated events, each with its own timestamp, in Sensor Data exports.
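To make the idea of a hotkey-driven coding schema concrete, here is a minimal Python sketch of live coding: each keypress that matches the Q/C/S/F schema becomes an event timestamped relative to the session start. `LiveCoder` and its methods are hypothetical and only mimic the behavior described for LiveMarkers; they are not part of iMotions or its API.

```python
import time
from collections import Counter

# The example coding schema from the text, keyed by hotkey. Illustrative only:
# in iMotions the schema is defined in the study setup, not in code.
SCHEMA = {
    "Q": "Subject asks a question",
    "C": "Subject expresses confusion",
    "S": "Subject successfully completes a task",
    "F": "Subject fails a task",
}


class LiveCoder:
    """Hypothetical stand-in for a live-marker logger: each valid keypress
    becomes a (seconds_since_start, code) event."""

    def __init__(self, schema: dict[str, str], clock=time.monotonic):
        self.schema = schema
        self.clock = clock
        self.t0 = clock()  # session start
        self.events: list[tuple[float, str]] = []

    def press(self, key: str) -> None:
        key = key.upper()
        if key not in self.schema:
            return  # ignore keys that are not part of the coding schema
        self.events.append((self.clock() - self.t0, key))

    def counts(self) -> Counter:
        """How often each code was logged in this session."""
        return Counter(code for _, code in self.events)


if __name__ == "__main__":
    # A scripted clock keeps the example deterministic: t0 = 0.0, then one
    # reading per accepted keypress.
    ticks = iter([0.0, 3.2, 8.9, 15.4])
    coder = LiveCoder(SCHEMA, clock=lambda: next(ticks))
    for key in ["q", "c", "x", "s"]:  # "x" is not in the schema and is ignored
        coder.press(key)
    print(coder.events)  # [(3.2, 'Q'), (8.9, 'C'), (15.4, 'S')]
    print(coder.counts())
```

Injecting the clock as a parameter is a design choice for testability; in live use the default monotonic clock avoids jumps if the system time changes mid-session.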

iMotions also provides alternatives to human-generated coding. For web-based tasks specifically, iMotions can track subject-generated events like mouse clicks, scrolling and page loads.

For more complex setups involving third-party hardware, iMotions can receive discrete marker events and time series data via our advanced API. This is especially useful for simulation setups, where data from the simulator can be synchronized with your biosensor feeds.

Behavior Coding Technique 2: Annotating Post-Collection

Video can capture a wealth of information about participants and the environment they are situated in. Playing back the video to understand what happened during a session gives a researcher an impression of a wide range of aspects, from a respondent's performance or strategy in an environment to documented behavior that serves as the foundation for quantitative analysis.

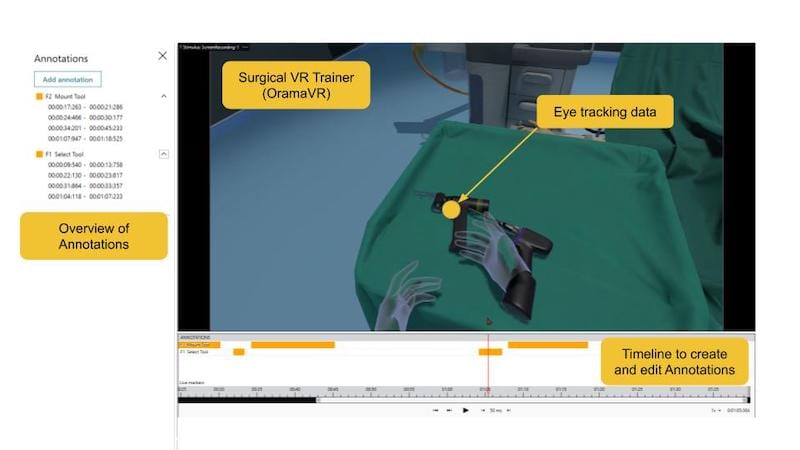

Caption: In this example, annotations are used to quantify the actions of the participant in a VR surgical training environment (provided by OramaVR). Apart from the value of reviewing the video recording of the session, each participant's actions can be coded using Annotations to establish benchmarks for task performance, such as time on task and task scores.
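Once each participant's actions have been coded, establishing a benchmark is mostly aggregation. The Python sketch below summarizes one task across participants; the per-participant dictionary layout and the sample values are hypothetical, and averaging time on task over completed attempts only is one common choice, not something iMotions prescribes.

```python
from statistics import mean, median


def benchmark(results: dict[str, dict]) -> dict:
    """Summarise one task across participants.

    Time on task is averaged over completed attempts only; failed attempts
    still count toward the completion rate.
    """
    if not results:
        raise ValueError("no participants")
    completed_times = [r["time_on_task_s"] for r in results.values() if r["completed"]]
    return {
        "n": len(results),
        "completion_rate": sum(r["completed"] for r in results.values()) / len(results),
        "mean_time_s": mean(completed_times) if completed_times else None,
        "median_time_s": median(completed_times) if completed_times else None,
    }


if __name__ == "__main__":
    # Made-up per-participant results for a single task, e.g. derived from
    # annotation onset/offset times and a success/fail code.
    results = {
        "P01": {"time_on_task_s": 182.0, "completed": True},
        "P02": {"time_on_task_s": 240.0, "completed": True},
        "P03": {"time_on_task_s": 305.0, "completed": False},
        "P04": {"time_on_task_s": 198.0, "completed": True},
    }
    print(benchmark(results))
```

Reporting the median alongside the mean is useful because time-on-task distributions are often skewed by a few slow participants.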

Annotations are extremely helpful for what we like to call a "Biometrically Informed Interview". When recalling an experience afterwards, we may miss details or events that would have been easier to articulate in the moment. That is why think-aloud protocols are so useful in web usability testing. In a Biometrically Informed Interview, you can use the iMotions biosensor replay as the focus of a retrospective, using behavioral and physiological events such as a GSR peak or a frown as cues to dig deeper into that moment for more thorough insights. For this purpose, you can right-click an Annotation fragment to add comments to it for a more nuanced, detailed markup.
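To illustrate how physiological events can serve as interview cues, the Python sketch below finds peaks in a GSR-like signal and pairs each with the annotation segment it falls in, giving the interviewer a list of moments to revisit. The peak detector is a deliberately simple local-maximum threshold, not the GSR peak detection iMotions uses, and the `Annotation` class and sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    # Hypothetical annotation segment: label plus onset/offset in seconds.
    label: str
    onset_s: float
    offset_s: float


def simple_peaks(times: list[float], values: list[float], min_height: float) -> list[float]:
    """Times of local maxima at or above min_height (first sample of a plateau)."""
    peaks = []
    for i in range(1, len(values) - 1):
        if values[i] >= min_height and values[i - 1] < values[i] >= values[i + 1]:
            peaks.append(times[i])
    return peaks


def cue_list(peaks: list[float], annotations: list[Annotation]) -> list[tuple[float, str]]:
    """Pair each peak time with every annotation segment that contains it."""
    cues = []
    for t in peaks:
        for a in annotations:
            if a.onset_s <= t < a.offset_s:
                cues.append((t, a.label))
    return cues


if __name__ == "__main__":
    times = [float(t) for t in range(9)]                   # seconds
    gsr = [0.1, 0.2, 0.9, 0.3, 0.2, 0.25, 0.8, 0.4, 0.3]  # made-up values
    segments = [Annotation("Task 1", 0.0, 4.0), Annotation("Task 2", 4.0, 9.0)]
    peaks = simple_peaks(times, gsr, min_height=0.5)
    print(cue_list(peaks, segments))  # [(2.0, 'Task 1'), (6.0, 'Task 2')]
```

Segments are treated as half-open intervals, so a peak exactly at a boundary is attributed to the segment that starts there rather than to both.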

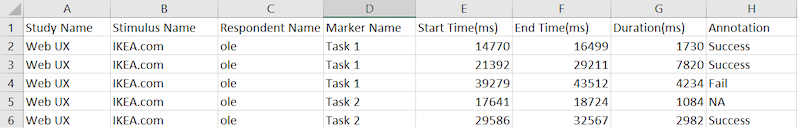

How to Analyze Behavioral Data

Behavioral data tends to be qualitative, describing a particular process or strategy used to achieve a goal. However, using coding schemes and event markers to code discrete events allows for some quantification that, combined with physiological and self-report data, can provide a well-rounded view of a participant's experience.

LiveMarkers, event markers, and annotations can be exported together, complete with timestamps, in our Sensor Data export, while the separate Annotations Summary export provides a complete log of annotations with their attached comments.
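As a sketch of the kind of quantification described above, the Python example below reads a timestamped event log and counts how often each code occurs, then normalizes by session length so sessions of different duration can be compared. The three column names and the sample rows are hypothetical; real iMotions exports have their own layout, so you would map these names onto the columns your export actually contains.

```python
import csv
import io
from collections import Counter

# Hypothetical, simplified event log for illustration only.
SAMPLE = """timestamp_s,source,label
3.2,livemarker,Q
8.9,livemarker,C
15.4,livemarker,S
21.0,mouse,click
30.7,livemarker,C
44.1,livemarker,F
"""


def code_frequencies(rows, source="livemarker") -> Counter:
    """Count how often each code occurs for one event source."""
    return Counter(r["label"] for r in rows if r["source"] == source)


def rate_per_minute(counts: Counter, session_s: float) -> dict[str, float]:
    """Normalise counts by session length (events per minute)."""
    minutes = session_s / 60.0
    return {code: n / minutes for code, n in counts.items()}


if __name__ == "__main__":
    rows = list(csv.DictReader(io.StringIO(SAMPLE)))
    freq = code_frequencies(rows)
    print(freq)
    print(rate_per_minute(freq, session_s=60.0))
```

With a real export on disk, the in-memory string would be replaced by something like `open("export.csv", newline="")` (a hypothetical filename) passed to `csv.DictReader`.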

In conclusion, behavioral data can be a hidden gold mine of information, provided the infrastructure is in place for properly coding, annotating, and quantifying it. Through the use of Annotations, iMotions allows you to easily log and code discrete events, highlight sections of data for review, and export that data for analysis. In this way, iMotions can be a valuable tool for researchers in usability and human factors, providing a solid foundation of behavioral data that can be further enriched with biosensors and surveys.

Check out our webinar on:

Remote Research with iMotions: Facial Expression Analysis & Behavioral Coding

Contributing Writers:

Jessica Wilson, PhD

Nam Nguyen