Let human behavior drive innovation

iMotions helps you examine the relationship between human beings and the systems they use, so that you can focus on improving effectiveness, optimizing ease of use, and minimizing user errors. From A/B and benchmark testing to experience measurement, we help you assess the effectiveness of any interface. Human behavior testing can save companies time and money by catching design mistakes that don’t fit a human need, response, or interaction before they reach users.

Product and Usability Testing

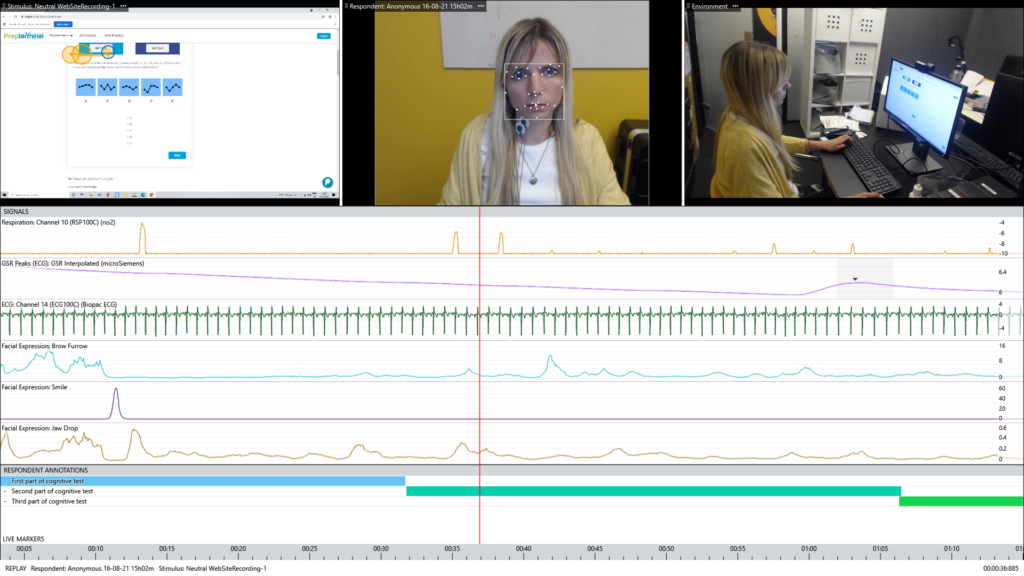

Using biosensors as part of your R&D strategy enables faster, more effective results across the product lifecycle. Methods like eye tracking and facial expression analysis can be applied at the early concept stage of a new product for initial insights on usability and ergonomics, or during the prototyping, product testing, and innovation phases. Human behavior research can even be applied to machine interfaces, software testing, brain-computer interfaces, or human-robot interaction to understand or minimize human error.

HCI and Design

Use iMotions to detect nonconscious human responses in vehicles, interfaces, or machines. Shift the focus of design toward better understanding, and training for, users' needs, reactions, and mental workload. See where users look and what they fail to see, and study how they perceive and respond to new devices and solutions.

Maximizing Eye Tracking Research for Product Development and Testing

R&D and Iterative Testing

During or after the design phase, measuring physiological and emotional responses to products or prototypes will help you unearth design elements or usability features that have gone unnoticed, need improvement, or attract attention. Biometric data help you study ergonomics and human factors to better quantify and validate real-time results, so that you save time designing products that achieve what you intend. Iterative testing and think-alouds using iMotions add valuable feedback during prototyping or R&D phases.

Human Responses in Virtual Worlds

Connecting biosensors in VR and simulations gives you comprehensive real-time data, so you can detect reactions to virtual scenarios. From measuring cognitive workload for improving driver or pilot efficiency, to detecting levels of emotional arousal during VR gaming experiences, iMotions surfaces instant data that gets you to quicker, more actionable conclusions.

Test and iterate by soliciting underlying human responses – using APIs

iMotions integrates numerous low-intrusiveness methods for measuring human responses and quantifying physiological data, such as Facial Expression Analysis, Eye Tracking, Galvanic Skin Response, and EEG. Our API and Lab Streaming Layer are also key components for passing inputs and outputs to and from almost any third-party system. iMotions customers can take advantage of the API for capabilities like creating triggers, visualizing live behavioral data feeds, using external events to control iMotions remotely, and changing the experience in simulations based on behavior.
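To make the idea of a behavior-based trigger concrete, here is a minimal sketch in plain Python. It does not use the iMotions API or Lab Streaming Layer; the function name `run_threshold_trigger`, the sample values, and the threshold are all hypothetical and chosen only for illustration. It assumes a stream of numeric samples (for example, a skin conductance signal) and fires a callback whenever the rolling mean rises above a threshold.

```python
from collections import deque
from typing import Callable, Iterable, List


def run_threshold_trigger(
    samples: Iterable[float],
    threshold: float,
    window: int,
    on_trigger: Callable[[int, float], None],
) -> None:
    """Call on_trigger(index, mean) each time the rolling mean rises above threshold.

    Hypothetical helper for illustration; not part of any iMotions interface.
    """
    recent = deque(maxlen=window)  # keeps only the last `window` samples
    above = False  # True while the rolling mean is already above the threshold
    for index, value in enumerate(samples):
        recent.append(value)
        if len(recent) < window:
            continue  # wait until the window is full
        mean = sum(recent) / window
        if mean > threshold and not above:
            on_trigger(index, mean)  # fire once per upward crossing
        above = mean > threshold


# Made-up readings in arbitrary units, not real measurement data.
readings = [0.2, 0.2, 0.3, 0.9, 1.1, 1.2, 0.4, 0.2, 0.2, 1.0, 1.3, 1.4]
events: List[int] = []
run_threshold_trigger(
    readings,
    threshold=0.8,
    window=3,
    on_trigger=lambda index, mean: events.append(index),
)
print(events)
```

In a real setup, the callback would be the place to send an event to a simulation or adjust the experience, and the samples would come from a live data feed rather than a list.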

iMotions API: What is it and how can you use it to maximize your research