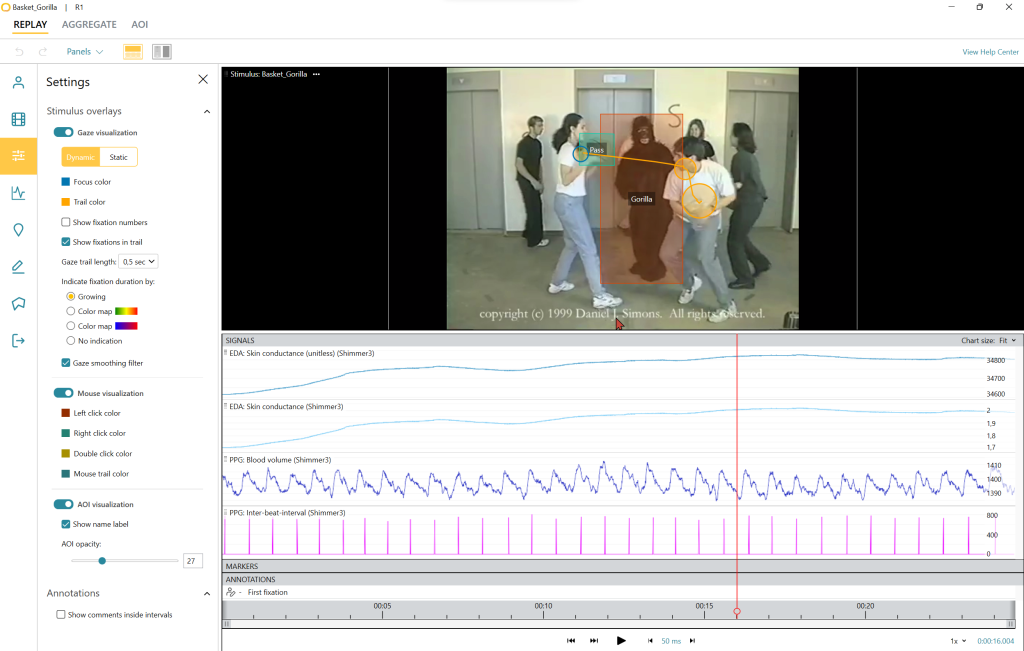

Trusted Software for Researchers

Scientific research builds knowledge, and biosensors make human behavior measurable in almost any situation. Through precise measurement and collection of data, it’s possible to create a clearer understanding of the world. iMotions has been built to make this process as streamlined as possible within human behavior research. With iMotions you can complete research within a single field or across disciplines without having to worry about software limitations. Thanks to the full and seamless integration of multiple data sources in real time, it’s possible to collect the data you need to answer your research question, no matter how complex.

iMotions is used at more than 70 of the world’s 100 top-ranking universities, and by more than 1,300 customers globally.

Communication Research

The landscape of communication, media, and education has changed dramatically in recent years. As our interactions move increasingly online, these fields face new opportunities and challenges that have never before been so important to get right. Using the best scientific methods available to understand human interactions ensures informed decisions.

Knowing how communication is received in different contexts, and how it can inform action, is a challenge for communication researchers that requires empirical backing. The iMotions platform is built for quantification of human responses in any environment, making it ideally suited for teasing apart the complexity of communication.

Education

Virtual reality (VR), augmented reality (AR), mobile technology, and other new technologies offer the possibility of education in environments where learning would otherwise be cost-prohibitive or even impossible, yet the differences between such approaches and traditional classroom methods remain largely unexplored. With e-Learning, online classes and simulations have been widely used as a replacement for traditional classroom-based teaching, yet further empirical exploration of these methods is needed. iMotions offers the ability to interrogate and understand the nuanced cognitive, emotional, and motivational processes that shape education in any learning environment.

Educational institutions aim to teach a deeper understanding of how humans behave, make decisions, and react to situations or stimuli. Many are starting to see the potential of biosensors as teaching tools. The integration of biosensor hardware into a unified software platform easily transforms real-time human reactions during an experience into accurate user insights – perfect as a method for teaching human behavior at any level.

Computer Science

The performance of machine learning models is largely dependent on the data that they’re trained on. Inaccurate or limited data won’t be able to guide algorithms in the right direction, no matter how sophisticated they might be. Detailed and direct quantification of the cognitive and physiological processes that humans perform constantly provides a clear basis from which to model and predict human action. Human-centric AI is the next frontier for technological innovation. By making devices and software responsive to human behavior and emotions, their use becomes not only more practical, but also more streamlined, smoother, and safer. With iMotions, you can build models that are not just intelligent, but emotionally intelligent.

Behavioral Economics

Predicting human behavior has never been an easy task, but with advances in neurotechnology, it has come increasingly within reach and become increasingly accurate. Understanding how heuristics, nudges, and perceptions of risk drive the rationality (and irrationality) of behaviors and decisions requires techniques and methods that are well suited to the task. Having access to detailed human behavioral data can bring clarity to complexity. iMotions provides a single platform for running behavioral experiments and for collecting biosensor or survey data in any environment.