The iMotions team has created a second series of live, recorded webinars on a broad range of topics in human behavior research. Our expert panelists included our in-house Ph.D. scientists, product specialists, customer success managers, and even some guests from our clients and hardware partners.

Our Webinar Part 1 series had over 1.5k total registrations! We wrapped up our second webinar series with another four webinars expanding on applications and real-world use cases of human behavior research.

Did you miss it? Don’t worry – we recorded the webinars and you can access them on our Webinars page if you’re interested in learning more on the topics discussed.

- Beyond the Lab: Applying Biosensors in Performance Training Environment

- Remote Research with iMotions: Facial Expression Analysis & Behavioral Coding from Your Couch

- iMotions API: What is it and how can you use it to maximize your research?

- Healthcare Training in Virtual Environments with iMotions and Varjo

Here are some of the Part 2 Webinar Series highlights:

Webinar: Beyond the Lab: Applying Biosensors in Performance Training Environment

David Schulman (Sales Executive) and Nam Nguyen (Neuroscience Product Specialist) hosted our guest panelist, Dr. Derek Mann, an expert in Kinesiology, to speak about his research at Jacksonville University, Florida.

Dr. Derek Mann has more than a decade of experience in the private sector working with high-performing athletes, military personnel, and corporate executives. He is an expert in the mental, emotional, and attentional aspects of human performance and specializes in perceptual and cognitive reactions and performance in high-stress environments.

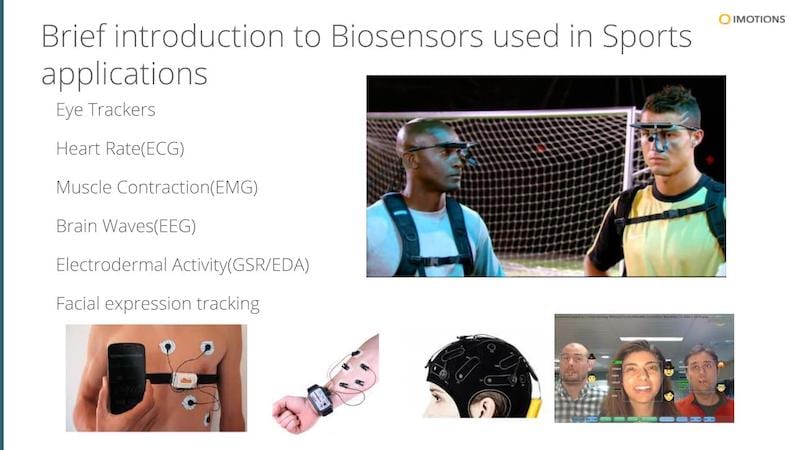

How Biosensors Are Used in Sports Applications: Improving Performance

Eye Trackers to enhance the athletes’ performance

- What are athletes looking at (fixation)?

- How is the player reading plays as they’re happening?

- Is the player focusing on the right thing?

Heart Rate (ECG) to find areas of improvement

- How fast can I recover?

- What is happening to heighten heart rate?

- Identify stress factors and address them to stay cool under pressure

Muscle Contraction – Electromyography (EMG) to understand behavior

- How to better understand muscular movements and activity

- Investigate their association with certain emotions and behavioral outcomes

Brain Waves (EEG) for cognitive workload:

- Get insight into a player’s cognitive workload

- How are athletes taking in their environment for better response time?

- How much time are they taking to make a decision?

Electrodermal Activity (EDA/GSR) to help athletes perform better:

- What is exciting athletes?

- Can we train athletes to pick up on environmental cues to perform better?

Facial Expression Analysis (FEA) for training intensity

- What emotion was expressed during a specific point in time?

- The neurodynamics of game face

- Tracking training intensity through facial expressions

Highlighted Questions

Why is the field of sports training using neuroscience to answer their questions?

Traditionally, self-reporting and observation were the only options. Now there is a need for more data to increase consistency and repeatability and to yield better insights. Biosensors add a layer of insight that participants may not be able to recall or explain themselves. This brings both subjective and objective inputs to understanding the player’s performance. You can explain at a deeper level what is actually happening and unpack the different levels of cognitive processes.

What are the biggest challenges with working with biosensor data?

First, it is important to be realistic and narrow your data collection down to the 1-3 variables that matter most. It’s imperative to pilot-test and to understand what the data is telling you in a benign environment before you take it into an applied context. Second, getting athlete and coach buy-in can be a challenge. Third, post-processing the data to a meaningful point can be time-consuming, which highlights the importance of streamlining the process and turning information around quickly. iMotions software has helped with data processing so that results can be delivered quickly and effectively.

Webinar: Remote Research with iMotions: Facial Expression Analysis & Behavioral Coding from Your Couch

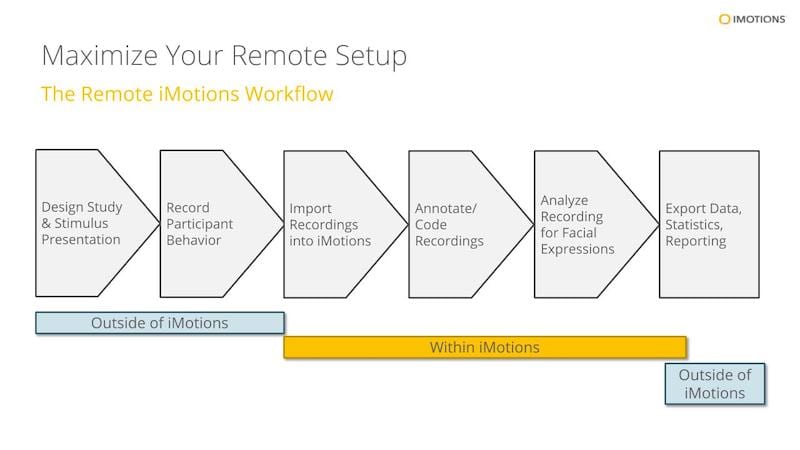

The new age of social distancing has produced new challenges for researchers studying human behavior. What can you do when it’s no longer feasible to bring participants into a lab setting? Dr. Jessica Wilson (Neuroscientist) and Kate Weir (Sales Executive) reviewed how to annotate recordings and post-process pre-recorded face videos to gain insight into emotional valence. They covered all of the remote capabilities of iMotions for behavioral coding with the annotation tool and facial expression analysis with a demo in the iMotions platform.

Behavioral Coding with the iMotions Platform

Behavioral Coding is a set of methodical, standardized, quantifiable observations about a participant, labeled by trained individuals. While surveys relate a participant’s subjective rating of an experience, behavioral data is codified by objective measures according to the researcher. Thus, behavioral coding provides a rich source of information that complements physiological data and self-report.

Behavioral coding systems are typically specific to a given study and heavily dependent on the research question; there is no one-size-fits-all! Behaviors can be coded as discrete or continuous events to give a more holistic explanation of the user’s reaction to the stimuli. Behavioral coding is used in psychology, UX, marketing, and many other fields.

Behavioral Coding using Annotations

By using the Annotations feature in iMotions, you can embed all of these codes into your study with assigned colors and hotkeys to allow for coding frame by frame. Users can also add comments for context of the events, making it easier to analyze in post-processing. These events are shown on the timeline when reviewing the recording in iMotions, and key information like duration, start/stop times and relevant comments can be exported from Marker Data exports.
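Once coded events have been exported, a common first step is to count how often each code occurred and how long it lasted in total. The sketch below illustrates that idea in Python. The event list, the code labels, and the `summarize_codes` helper are all hypothetical; they do not reflect the actual column layout of an iMotions Marker Data export, which you would need to map onto this structure yourself.

```python
from collections import defaultdict

# Hypothetical coded events: (code label, start time in s, end time in s).
# Labels and times are illustrative, not taken from an iMotions export.
annotations = [
    ("smile", 2.0, 3.5),
    ("look_away", 4.0, 6.0),
    ("smile", 10.0, 10.5),
]


def summarize_codes(events):
    """Return {label: (event_count, total_duration_seconds)}."""
    totals = defaultdict(lambda: [0, 0.0])
    for label, start, end in events:
        if end < start:
            raise ValueError(f"event '{label}' ends before it starts")
        totals[label][0] += 1
        totals[label][1] += end - start
    return {label: (count, duration) for label, (count, duration) in totals.items()}


summary = summarize_codes(annotations)
for label, (count, duration) in sorted(summary.items()):
    print(f"{label}: {count} event(s), {duration:.1f} s total")
```

The same per-code counts and durations can then be compared across participants or conditions alongside the physiological data.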

Check out: How To Do Behavioral Coding in iMotions

Facial Expressions Analysis

With iMotions, pre-recorded videos of participants’ faces can be imported into the software and processed to analyze expressed emotion using automated facial expression technology. Whether at the individual level or in a group setting, individual faces can be defined and analyzed after the fact. This capability means that researchers have the option not only to run facial expression analysis on newly recorded videos, but also to revisit pre-existing content in their databases for further analysis.

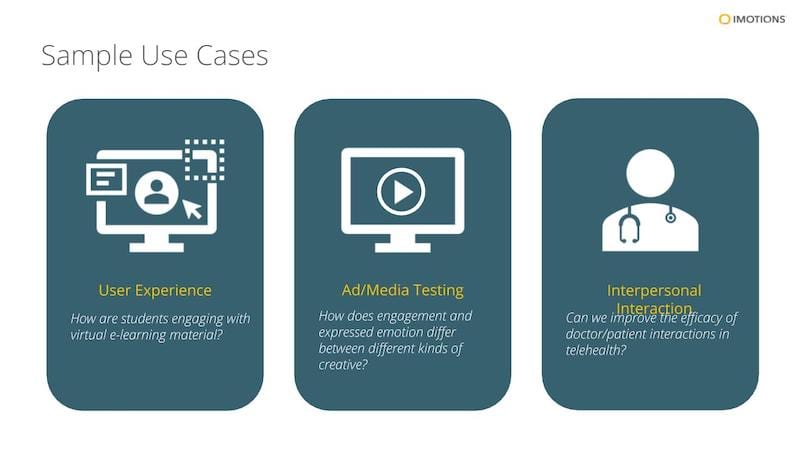

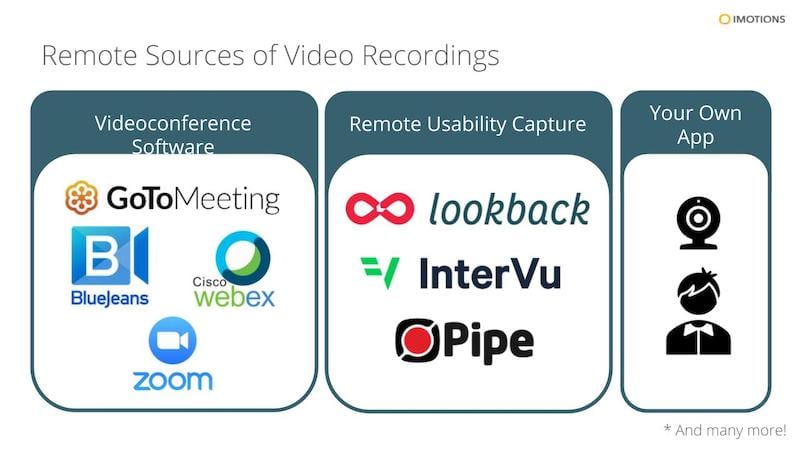

The following image shows just some of the available software researchers can use to record participants’ behavior via webcam and screen share. Examples of studies can be website layouts (UX), images, videos, games, or any other type of visual stimuli.

Check out our blog: How facial expression analysis (FEA) can be done remotely

Highlighted Questions

How does behavioral coding in Performance & Training add value?

We’ve worked with the government and universities in performance and training. Quantifiable metrics enable them to understand behavior beyond the sensor feeds. Some examples are: 1) efficiency: time on task & number of clicks, which give a sense of workflow and 2) physician training: novice vs. expert surgery, learning skill sets, etc.

What about the limitations of hardware and environment when doing remote data collection?

One of the strengths of our engine, especially when using Affectiva-Affdex, is that you can use a regular webcam or even your built-in computer camera to conduct the recording (minimum resolution of 640 x 480 pixels or 120 px face size). Lighting may pose an issue, so it’s important that participants’ faces are not over-exposed or backlit by another light source. Participants should be informed of the proper recording setup and adjust their webcams accordingly before collection.

Check out: Top Tips for High Quality Facial Expression Analysis Data

Webinar: iMotions API: What is it and how can you use it to maximize your research?

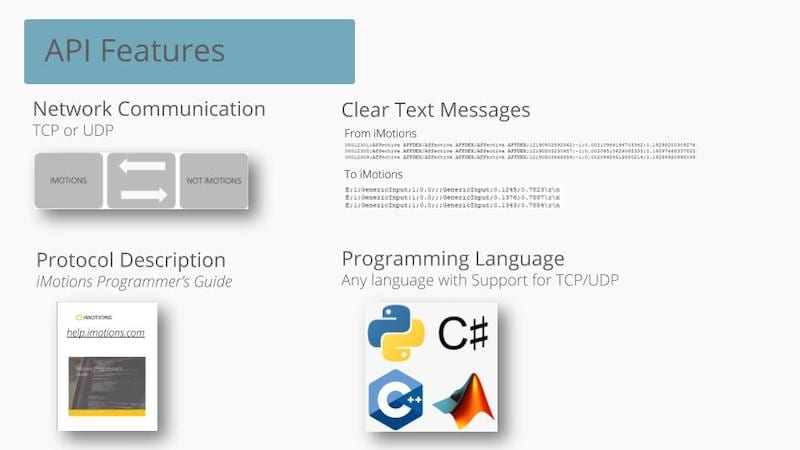

When you have a research setup that requires getting 3rd-party data into and communicating with iMotions, how can you make the iMotions API work for you? Product Director Ole Jensen and Customer Success Manager Tue Hvass, PhD demonstrated some API use cases centered on systems integration and workflow automation, virtual environments (in Unity), wearables, and standard communication protocols with Bluetooth. The demonstration included a peek into code configurations as well as a live demo in the iMotions platform.

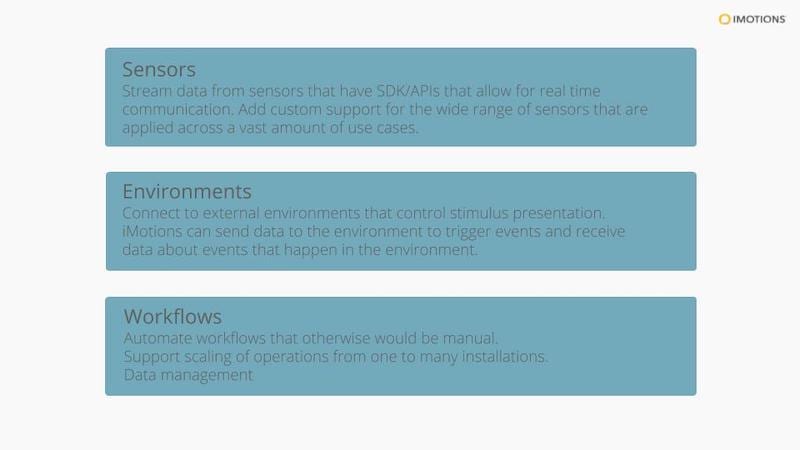

Caption: API can connect to sensors (that are not integrated by iMotions), Environment for Stimulus presentations, & Workflows, allowing for automation

iMotions API Features:

- Input API/Event Receiving: Stream data into iMotions, e.g. from 3rd party sensors.

- Output API/Event Broadcasting: Stream biosensor data out of iMotions, e.g. GSR or eye tracking data.

- Remote Control: Send commands to iMotions, e.g. start and stop recording or export data.

- Lab Streaming Layer (LSL): Stream data into iMotions using the open LSL protocol.

Check out our blog: What is an API? (And How Does it Work?)

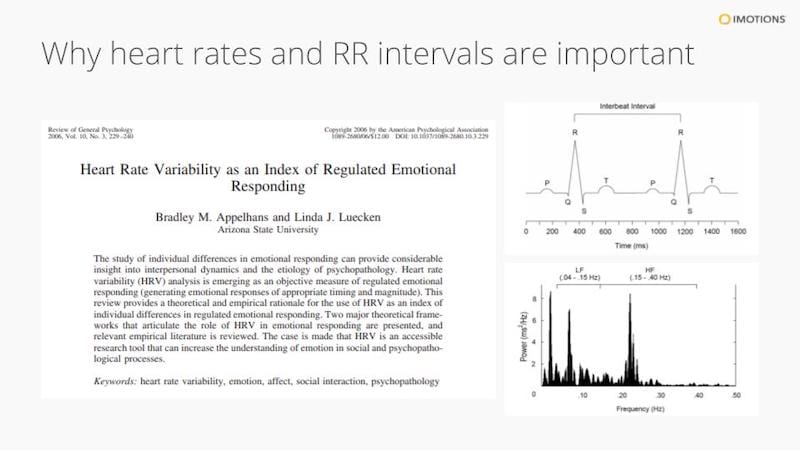

Example Bluetooth Wearable with MATLAB

Tue Hvass, PhD demonstrated an example of a heart rate monitoring wearable, the Polar Chest Strap (H10), with MATLAB. This low-energy Bluetooth wearable can gather and stream electrocardiogram (ECG) data for hours without needing to recharge. The API allows the device to stream data via Bluetooth into iMotions, which records the data and visualizes the RR intervals. Using transparent R code generated in iMotions, you can see the average BPM (beats per minute) and Heart Rate Variability (HRV). HRV is a physiological measure that is associated with emotional regulation capacity.

Why are heart rate and RR intervals important? We can use them as an index for emotional arousal and emotional regulation. Using ECG with other biosensor measurements can provide a more complete way of understanding someone’s thoughts, emotions, or behaviors. Because each sensor captures a different aspect of human responses (e.g. pairing facial expression analysis with ECG to understand both emotion and arousal), combining them makes it possible to gain a clearer picture of how someone experiences the world.
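To make the link between RR intervals, BPM, and HRV concrete, here is a minimal Python sketch. It is not the R code that iMotions generates, and the source does not say which HRV metric that code reports; RMSSD (the root mean square of successive differences) is used here only because it is one widely used time-domain HRV measure. The RR values are made up for illustration.

```python
import math


def mean_bpm(rr_ms):
    """Average heart rate (beats per minute) from RR intervals in milliseconds."""
    if not rr_ms:
        raise ValueError("need at least one RR interval")
    mean_rr = sum(rr_ms) / len(rr_ms)
    return 60000.0 / mean_rr  # 60,000 ms per minute


def rmssd(rr_ms):
    """Root mean square of successive RR differences, in milliseconds."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Made-up RR intervals (ms) standing in for data streamed from a chest strap.
rr_intervals_ms = [800, 810, 790, 805, 795]

print(f"Mean heart rate: {mean_bpm(rr_intervals_ms):.1f} BPM")
print(f"RMSSD: {rmssd(rr_intervals_ms):.1f} ms")
```

Real recordings usually need artifact handling (missed or extra beats) before metrics like these are meaningful; that step is left out here.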

Check out: Heart Rate Variability – How to Analyze ECG Data

Example of Bidirectional Communication between iMotions and Unity Environment

Ole Jensen demonstrated an example of bidirectional communication using a Shimmer GSR biosensor to control a virtual car in the Unity environment. He used the accelerometers embedded in the Shimmer sensor to control the virtual car: forward, backward, left, and right motion is achieved by tilting the sensor around two axes. The accelerometer data is forwarded from iMotions into Unity using the event-forwarding capability. Concurrently, iMotions records a visual representation along with GSR data from the Shimmer sensor. Furthermore, the Unity environment is programmed to send game telemetry data back to iMotions using the event-receiving interface.
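The tilt-to-steering idea can be sketched in a few lines of Python. This is not the code shown in the webinar (which ran in Unity), and everything specific in it is an assumption: the axis convention, the 10-degree dead zone, and the `drive_command` helper are hypothetical. It only shows how pitch and roll can be estimated from a three-axis accelerometer at rest and mapped to four directions.

```python
import math

DEAD_ZONE_DEG = 10.0  # hypothetical: ignore small tilts so the car can sit still


def tilt_angles(ax, ay, az):
    """Estimate pitch and roll (degrees) from accelerometer readings in g.

    Assumes the sensor is roughly still, so the reading is dominated by gravity,
    and assumes z points up when the sensor lies flat (axis convention is a guess).
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def drive_command(ax, ay, az, dead_zone=DEAD_ZONE_DEG):
    """Map tilt around two axes to (throttle, steering) labels."""
    pitch, roll = tilt_angles(ax, ay, az)
    if pitch > dead_zone:
        throttle = "forward"
    elif pitch < -dead_zone:
        throttle = "backward"
    else:
        throttle = "idle"
    if roll > dead_zone:
        steering = "right"
    elif roll < -dead_zone:
        steering = "left"
    else:
        steering = "straight"
    return throttle, steering


print(drive_command(0.0, 0.0, 1.0))     # sensor lying flat
print(drive_command(-0.5, 0.0, 0.866))  # tilted about 30 degrees around one axis
print(drive_command(0.0, 0.5, 0.866))   # tilted about 30 degrees around the other
```

In a setup like the one described, values of this kind would be computed on the receiving side from the forwarded accelerometer stream.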

Telemetry is one of the fundamental terms in game analytics. The collection, analysis, and reporting of user-behavior telemetry is the foundation for current analytics in game development, whether for consumer-oriented games, serious games (e.g. for learning), or simulation-based experiences in general.

Check out: How to Measure human experiences in gaming and VR

Highlighted Questions

How many Polar (ECG) belts can be tracked at the same time? If it’s more than one, how would you deal with signal interference?

People have connected multiple Polars to one phone, but this has not been tested with the demonstrated code. iMotions does have the ability to gather from multiple biosensors, so in theory, it is possible.

Has this been used in the Automotive industry?

We have some publications and case studies relating to this. There are many applications in the automotive industry, for example semi-autonomous vehicles, human-machine trust interfaces, and even the user experience of driving the car.

Check out how Stanford Center for Design is using simulators integrating biosensors and other human behavior technologies.

Webinar: Healthcare Training in Virtual Environments with iMotions and Varjo

Virtual Reality (VR) creates exciting opportunities to train professionals in fields like Healthcare, thanks to its controllable environment. In fact, combining multimodal biosensors together with VR tools provides healthcare professionals with the ability to derive fast, meaningful insights. iMotions and Varjo hosted a webinar with honored guest, Rafael Grossmann, MD, FACS, who has over 25 years of surgical experience and is a strong advocate for VR in healthcare training.

The webinar was hosted by Roxanna Salim, Director of Partnership at iMotions, who has over 15 years of psychophysiology research experience. She was joined by co-host Geoff Bund, Head of Business Development at Varjo, who has over 7 years of experience in Virtual Reality hardware development.

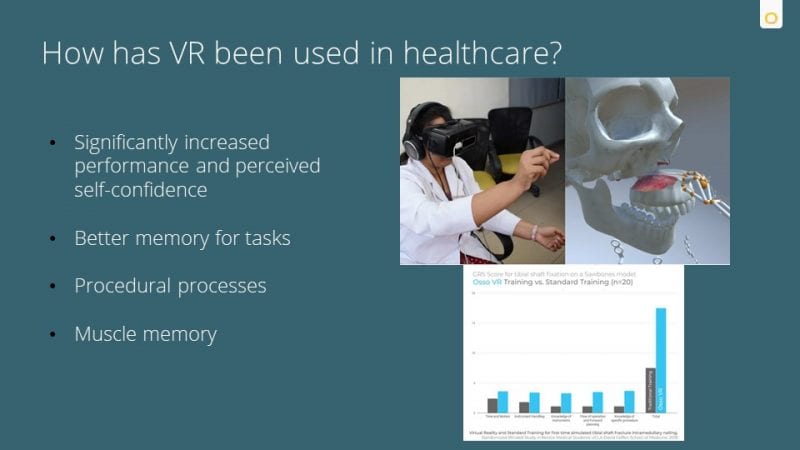

How can you leverage Virtual Reality and biosensors in the healthcare industry? VR has been put to a variety of uses, ranging from therapy for phobia reduction to, most recently, surgical training and surgical research. Advances in the underlying technology in recent years allow VR to be used widely in different settings. Since 2016, there has been a growth in publications and training around the use of VR technology.

Surgical training can go above and beyond when using virtual environments.

- Significantly increase performance and perceived self-confidence

- Better memory for the tasks

- Procedural processes

- Muscle memory

Check out: Four Inspiring Ways Biosensors are Used to Improve Healthcare Performance

Some of these studies tend to focus on the full surgical experience and are reliant on self-report to gather information about the trainee’s experience. They don’t necessarily tap into the underlying mechanisms that lead to certain behavioral outcomes. There may be certain aspects of the surgery that are more cognitively taxing or more stressful that can be captured and addressed using biosensors.

Biosensors help you ask the right question for Healthcare Professionals

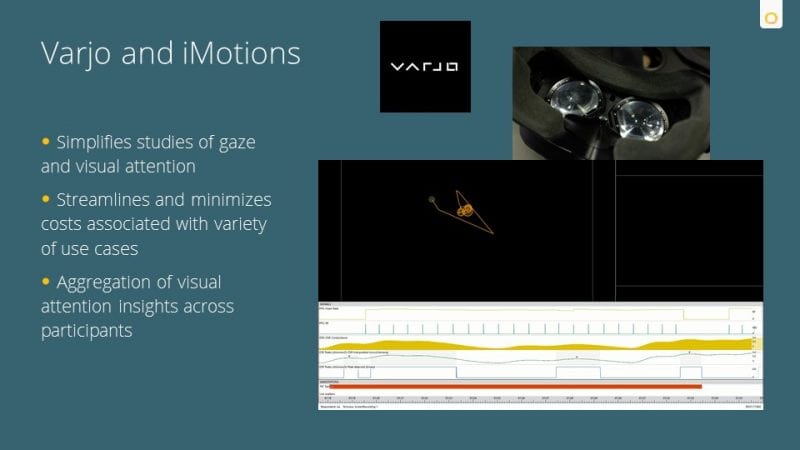

Eye Tracking: built into the Varjo headset, can provide insight into visual attention

- What elements of the surgical room are attended to?

- Are there key elements that aren’t attended to that should be?

Electrodermal Activity (EDA) and Electrocardiogram (ECG) can provide insight into emotional arousal and stress

- Can give an idea of what aspects of the training tasks lead to higher levels of stress

- Gives an overview of how novices improve over time and how they compare to experts or trainers

Surgical expert, Dr. Rafael Grossmann, MD, demonstrated how training simulations can be optimized with built-in eye tracking and other biosensors in human-eye resolution VR environments. The expert vs. novice surgical training simulation used in this webinar was made by ORamaVR.

Dr. Grossmann discussed his personal experience with the immersive, realistic environment that VR provides. It has truly helped him and his colleagues learn and continue to innovate with new technologies and techniques in surgical training.

Highlighted Questions

How do I access environments that work with Varjo?

Varjo works with a number of different VR applications such as Unreal, Unity, and UNIGINE, just to name a few. They offer consultation for building your own as well as migrating a VR environment into their headsets.

What are the data analysis options? Can we define AOIs in iMotions?

Yes, there are a number of different analysis tools available in iMotions including the ability to create static and moving AOIs and gain insights into visual attention from fixation and gaze metrics within the AOIs. You can also create and export heat maps and leverage our state-of-the-art gaze mapping technology to quickly and easily aggregate eye tracking data across participants in dynamic environments.
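As a rough illustration of what AOI-based fixation metrics mean, the Python sketch below counts fixations and sums dwell time inside a static rectangular AOI. It is a simplified stand-in, not how iMotions computes its metrics: the `Fixation` and `RectAOI` types, the pixel coordinates, and the AOI name are hypothetical, and moving AOIs and gaze mapping are not covered.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float            # horizontal gaze position in pixels
    y: float            # vertical gaze position in pixels
    duration_ms: float  # how long the fixation lasted


@dataclass
class RectAOI:
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x, y):
        """True if the point lies inside the rectangle (edges included)."""
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def aoi_metrics(fixations, aoi):
    """Fixation count and total dwell time (ms) for fixations inside the AOI."""
    hits = [f for f in fixations if aoi.contains(f.x, f.y)]
    return {
        "fixation_count": len(hits),
        "dwell_time_ms": sum(f.duration_ms for f in hits),
    }


# Hypothetical fixations and a hypothetical AOI around an instrument tray.
fixations = [Fixation(100, 100, 200), Fixation(150, 120, 300), Fixation(500, 500, 250)]
tray = RectAOI("instrument_tray", left=0, top=0, right=200, bottom=200)

print(aoi_metrics(fixations, tray))
```

Comparing numbers like these between novices and experts is one way to see which elements of a scene each group attends to.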

Download iMotions

Medical / Healthcare Brochure

iMotions is the world’s leading biosensor platform. Learn more about how iMotions can help you with your medical / healthcare research.