If you are new to VR and would like to introduce this type of interactive stimulus presentation into your research, this guide will provide you with some relevant information and a number of checkpoints to help you make the right decisions.

When we talk about VR here, we specifically mean virtual reality delivered through head-mounted displays (HMDs), such as the Varjo VR-3 and similar platforms.

As you may have realized by now, VR has been a research tool for at least the last few decades. According to a Google Scholar search, the number of papers referring to “Virtual Reality” is a whopping 200,000 for the past ten years alone. That number covers research on both the technology itself and its applications. VR is applied in many areas of human behavior research, such as therapy and rehabilitation (Gamito et al., 2015), training (Argelaguet Sanz, Multon & Lécuyer, 2015), and human performance.

Hence, there is an abundance of information that you can benefit from when introducing VR into your own research.

Current trends – Hardware

Many major tech companies have some sort of VR/AR initiative. In this space, HTC holds a leading position with its Vive and Vive Pro hardware platform, which delivers a high-fidelity experience: high resolution, precise tracking using Lighthouse base stations, and support for sophisticated controllers. The headset connects, either by cable or wirelessly, to a high-performance computer that renders the content.

Facebook recently released its Oculus Go, a low-cost standalone HMD priced at only a few hundred dollars. Oculus also offers the pioneering Rift platform, which requires a computer to render the 3D content. Many more hardware platforms are available, but these are the most popular ones and have the most resources, including active community forums.

Software for content generation

To implement an experiment in VR, you will need a software platform to create and render the 3D content. The Unity3D engine is a very popular choice. Unity uses a freemium license model, i.e. you can get started with a sophisticated rendering environment for free. Other popular 3D engines for VR content generation are Unreal and Worldviz.

So, what can you do with a “3D engine”?

- Build 3D content using geometric primitives or import advanced meshes (models) from other programs.

- Program behavior of objects.

- Process user input from controllers.

- Manipulate material properties of objects and light sources.

- Apply physics.

- And much more.
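Under the hood, engines run this kind of behavior in a per-frame update loop, which you script in C# in Unity or in C++ (or Blueprints) in Unreal. As a language-neutral illustration only, the Python sketch below mimics one such loop: it processes a controller "grab" input, applies gravity with a semi-implicit Euler step, and resolves a simple floor collision. The `Body` class, the `step` function, and the input values are hypothetical and are not part of any engine's API.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2 along the vertical (y) axis
FLOOR_Y = 0.0    # height of the floor plane in metres


@dataclass
class Body:
    """Hypothetical object; only vertical motion is modelled."""
    y: float            # height above the floor (m)
    vy: float = 0.0     # vertical velocity (m/s)
    held: bool = False  # True while the participant is grabbing it


def step(body: Body, dt: float, grab_pressed: bool, hand_y: float) -> None:
    """Advance one frame: process user input, then apply physics."""
    # Input: while the grab button is held, the object follows the hand.
    body.held = grab_pressed
    if body.held:
        body.y, body.vy = hand_y, 0.0
        return
    # Physics: semi-implicit Euler (update velocity first, then position).
    body.vy += GRAVITY * dt
    body.y += body.vy * dt
    # Collision with the floor: clamp the position and stop the object.
    if body.y < FLOOR_Y:
        body.y, body.vy = FLOOR_Y, 0.0


ball = Body(y=1.5)
dt = 1.0 / 90.0  # 90 Hz, a common refresh rate for PC VR headsets
for frame in range(180):  # two seconds of simulated frames
    holding = frame < 45  # hypothetical input: grabbed for the first half second
    step(ball, dt, grab_pressed=holding, hand_y=1.2)
print(round(ball.y, 3), ball.held)
```

Real engines separate rendering from a fixed physics timestep and handle full 3D rigid bodies; the sketch only shows the order of work inside one frame.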

Resources for learning

Unity, Unreal, and Worldviz are rich programming environments originally built to support game development.

Building 3D worlds is a discipline in its own right, so chances are you will need someone with experience to help you. Thousands of developers work on the Unity platform, and many of them can be reached through online forums or meetups (e.g. the Boston VR meetup currently has 5,000+ members).

If you intend to learn the basics of building 3D worlds from scratch, there is an abundance of resources online. For example, YouTube channels such as Brackeys are primarily aimed at game developers, but they are an excellent resource for anyone getting started with Unity. Resources like these will let you quickly reach a level where you can design your own worlds and program interactions between the participant and objects in the world.

Applying Biosensors

At iMotions, we are developing tools that let you use biosensors to quantify the human experience, whether that experience is delivered through a computer monitor, the real world, or a virtual world. With iMotions, you can start recording a VR experience synchronized with biosensor data streams relatively quickly.
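Synchronization means placing every data stream on a shared clock so that samples recorded at different rates can be matched up. As a sketch, assuming each stream is already a sorted list of timestamps in seconds on the same clock, the Python below pairs each video frame with the nearest GSR sample using binary search. The function name and the sample rates are illustrative assumptions, not a description of how iMotions implements synchronization internally.

```python
from bisect import bisect_left


def nearest_indices(sample_times, query_times):
    """For each query time, return the index of the closest sample time.

    Both lists hold timestamps in seconds on a shared clock;
    sample_times must be sorted in ascending order.
    """
    result = []
    for t in query_times:
        i = bisect_left(sample_times, t)
        if i == 0:
            result.append(0)  # before (or at) the first sample
        elif i == len(sample_times):
            result.append(len(sample_times) - 1)  # after the last sample
        else:
            # Pick whichever neighbour is closer to t.
            before, after = sample_times[i - 1], sample_times[i]
            result.append(i if after - t < t - before else i - 1)
    return result


# Illustrative rates: GSR sampled at 128 Hz, screen recording at 30 frames/s.
gsr_times = [n / 128 for n in range(128 * 2)]  # two seconds of GSR timestamps
frame_times = [n / 30 for n in range(30 * 2)]  # two seconds of video frames
frame_to_gsr = nearest_indices(gsr_times, frame_times)
print(frame_to_gsr[:5])
```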

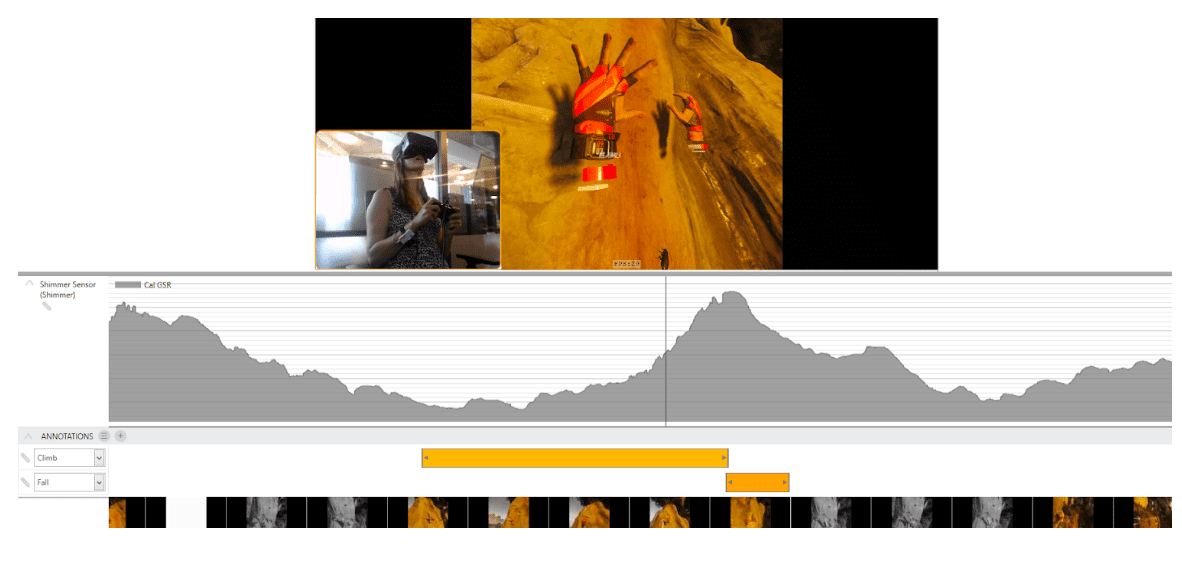

The picture is a screenshot from iMotions showing:

- Capture of the VR content (“The Climb” for Oculus) using a Screen Recording stimulus.

- Respondent Camera feed to capture behavior.

- GSR trace to measure emotional arousal.

- Annotations of events on the timeline (“Climb” and “Fall” coded).

With these simple tools, you can start analyzing behavioral and physiological responses to VR content. By recording everything through iMotions, you can:

- Make qualitative observations from the video.

- Export video snippets of interesting events.

- Mark up the timeline with Annotations to code for certain events. The annotations can be used to segment the GSR data and build summary scores for those events across respondents, or simply to calculate task performance scores such as Time On Task.
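As a minimal sketch of that kind of post-processing, assume GSR has been exported as (timestamp, microsiemens) pairs and each annotation as a (label, start, end) interval in seconds. The Python below averages GSR within each annotated segment and reports the total duration of each label as a simple time-on-task measure. The data layout, the `summarize_by_annotation` function, and the values are hypothetical and do not reflect the actual iMotions export format.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_annotation(gsr, annotations):
    """Return {label: {"mean_gsr": ..., "time_on_task": ...}}.

    gsr: list of (timestamp_s, value_uS) tuples.
    annotations: list of (label, start_s, end_s) tuples; if a label occurs
    several times, its segments are pooled.
    """
    values = defaultdict(list)
    durations = defaultdict(float)
    for label, start, end in annotations:
        durations[label] += end - start
        # Keep the GSR samples that fall inside this annotated segment.
        values[label].extend(v for t, v in gsr if start <= t < end)
    return {
        label: {
            "mean_gsr": mean(values[label]) if values[label] else None,
            "time_on_task": durations[label],
        }
        for label in durations
    }


# Hypothetical data for one respondent: 1 Hz GSR and two coded events.
gsr = [(t, 2.0 + 0.1 * t) for t in range(10)]
annotations = [("Climb", 0.0, 6.0), ("Fall", 6.0, 8.0)]
print(summarize_by_annotation(gsr, annotations))
```

To compare respondents, one option is to run the function once per respondent and then average each label's scores across the group.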

Advanced options to support VR research:

- Add eye tracking in VR. This lets you understand visual attention within the immersive experience.

- Stream events from the 3D environment into iMotions using the API. For example, send information about the participant's location in the 3D world, or about specific events and behaviors, and have it synchronized with the biosensor data streams in real time.
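On the 3D-engine side, streaming events usually comes down to formatting a short, timestamped message and sending it over a network socket whenever something of interest happens. The Python sketch below shows that pattern over UDP, using a local listener as a stand-in for the receiving application. The semicolon-separated field layout, the `format_event` and `send_event` helpers, and the host/port are placeholders chosen for illustration; they are not the iMotions API message format, so check the iMotions API documentation for the real message structure and connection settings.

```python
import socket
import time


def format_event(name: str, position: tuple[float, float, float], timestamp: float) -> bytes:
    """Encode an event as one text line.

    PLACEHOLDER layout: name;timestamp;x;y;z. The real iMotions API defines
    its own message format, which this does not reproduce.
    """
    x, y, z = position
    return f"{name};{timestamp:.3f};{x:.3f};{y:.3f};{z:.3f}\r\n".encode("utf-8")


def send_event(sock: socket.socket, address: tuple[str, int], name: str,
               position: tuple[float, float, float]) -> None:
    """Send one event, stamped with the sender's current wall-clock time."""
    sock.sendto(format_event(name, position, time.time()), address)


# Local listener standing in for the receiving application.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
receiver.settimeout(2.0)
address = receiver.getsockname()

sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
send_event(sender, address, "ReachedSummit", (1.0, 12.5, -3.25))
data, _ = receiver.recvfrom(1024)
print(data.decode("utf-8").strip())
sender.close()
receiver.close()
```

UDP keeps the sending side non-blocking, which matters inside a frame loop, but it does not guarantee delivery; whether the real API expects UDP or TCP is something to confirm in its documentation.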

If you’re curious about the future of therapy using VR and biosensors, we recently wrote a blog post discussing just that. Otherwise, I hope you’ve enjoyed reading about how to get started with head-mounted VR displays in research. If you would like to know more, don’t hesitate to contact us.


References

[1] Gamito, P., Oliveira, J., Coelho, C., Morais, D., Lopes, P., Pacheco, J., … & Barata, A. F. (2015). Cognitive training on stroke patients via virtual reality-based serious games. Disability and Rehabilitation, 39(4), 385-388.

[2] Argelaguet Sanz, F., Multon, F., & Lécuyer, A. (2015). A methodology for introducing competitive anxiety and pressure in VR sports training. Frontiers in Robotics and AI, 2, 10.
