We are living through an uncertain and unprecedented period. In the current climate, researchers are coming together to create innovative solutions for conducting research remotely, while video chat services like Zoom and Google Meet are booming with new users as companies move operations online.

Although most in-person testing is temporarily on hold, one viable option for continuing biosensor research is post-processing of facial expression data. iMotions integrates the leading facial expression analysis (FEA) tools available. In this post, we highlight the use of Affectiva’s engine, AFFDEX. Affectiva unobtrusively measures unfiltered and unbiased facial expressions of emotion using just a standard webcam. This technology enables researchers to view facial expressivity during live testing or to run analytics in post-processing.


What Is Post-Processing of Facial Expressions?

With iMotions, pre-recorded videos of participants’ faces can be imported into the software and processed with automated facial expression technology to analyze expressed emotion. Whether participants are recorded individually or in a group setting, individual faces can be defined and analyzed after the fact. This means researchers can run facial expression analysis not only on newly recorded videos but also on pre-existing content in their databases. This data stream can add value to many research initiatives by providing information on the emotional impact of a given piece of content, product, or service.

Examples of Facial Expression Analysis: Use Cases

Facial expression analysis (FEA) can be used to support a range of research efforts. Some methodologies include:

- Usability tests (UX research)

- Focus groups

- One-on-one interviews


Incorporating FEA into remote interviews and focus groups can provide valuable information about individuals’ opinions, expressed emotions, and behaviors in relation to a product or content of interest. We have seen these research techniques leveraged across various industries and application areas, such as:

User Experience Testing: How do users respond to a particular digital experience?

- What workflows or areas of an app may create more expressed frustration or confusion?

- How are students engaging with course material and lectures virtually?

- Does the learning pedagogy align with the student’s expectations? Is the instructional design easy to navigate?

Media/Ad Research: How do engagement and expressed emotion differ across pieces of creative?

- Is the new creative received more positively by audiences than the old creative?

- Are key moments of a piece of content connecting with viewers?

Interpersonal Interactions: How do individuals respond to particular virtual interactions?

- Are there ways to improve the efficacy of patient and doctor interactions in telehealth?

- How does the tone of the doctor affect the emotion of the patient during a consultation?

To learn more about remote research options and use cases, watch our free webinar, “Remote Research with iMotions: Facial Expression Analysis & Behavioral Coding.”

How to Conduct Facial Expression Analysis Using Videos

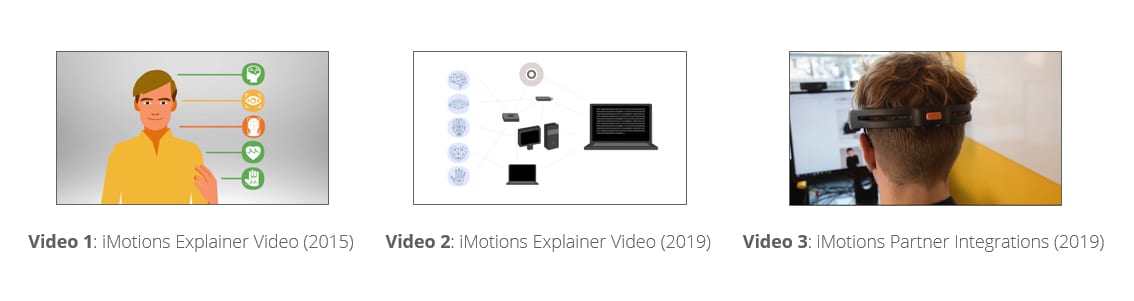

Collecting and processing facial expression data remotely can be done quite easily. For our example, we presented marketing content videos to internal iMotions employees to test which would engage customers more. While some veterans of the company were eager to move away from the old introduction video, the team wanted to test all of the videos before launching the campaign.

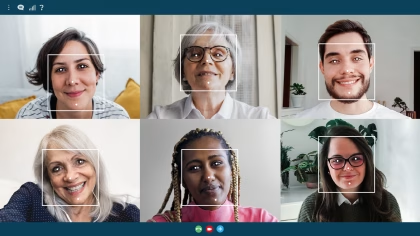

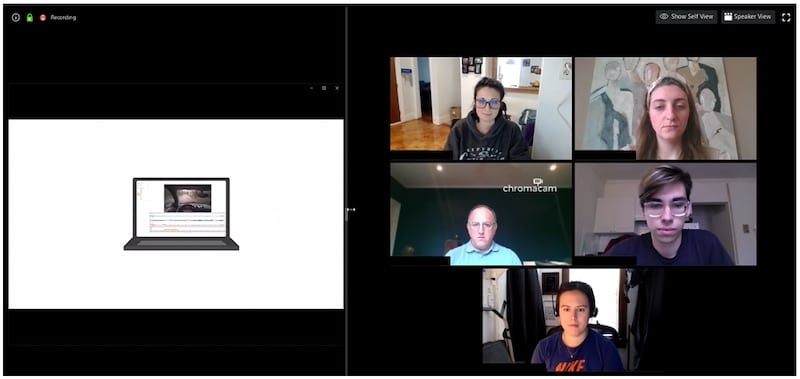

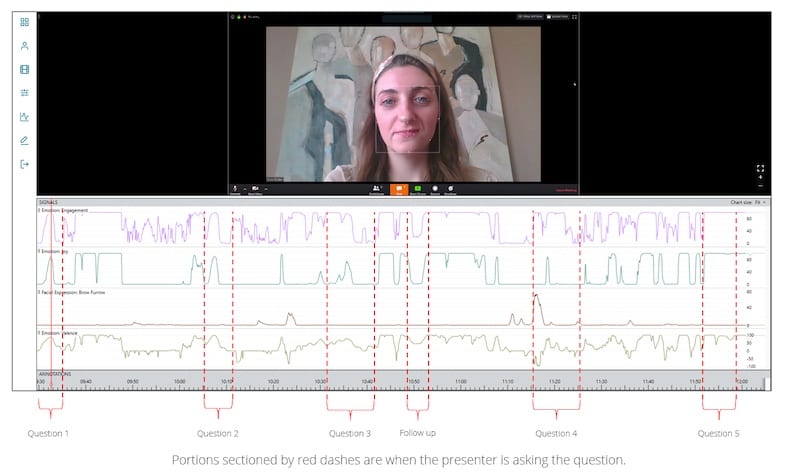

A mix of internal iMotions employees was recruited. Participants joined a remote video call where they viewed all three videos and were asked follow-up questions about the content. At the end of the showing, a 1:1 interview was conducted with a volunteer from the sample.

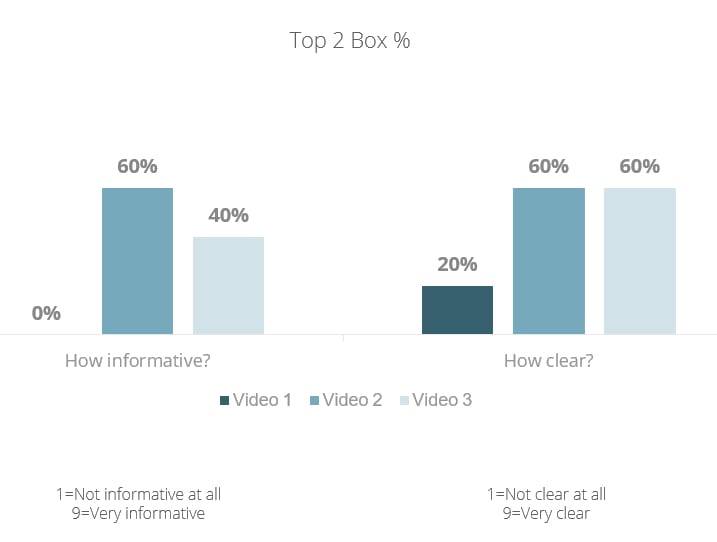

Out of all three videos, the new explainer video (2) stood out as top-rated in self-report data for quality of information and clarity, with the partner integrations video (3) a close second. The old explainer video (1), by contrast, was rated relatively lower. These data are in line with the hypothesis that consumers would receive the new explainer video better than the old one.

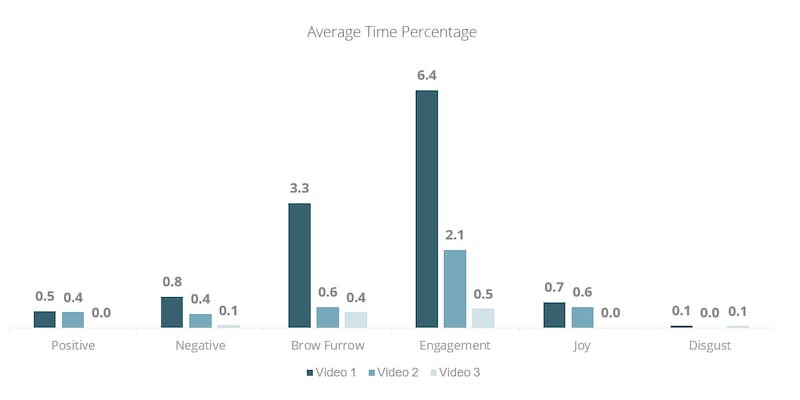

Facial expression data add a unique element to the story. Despite its low ratings, the old explainer video elicits the most facial engagement from viewers. However, viewers spend much of this time displaying negative expressions, furrowing their brows more than five times as frequently while watching the old explainer video as while watching the new one. These data indicate that the old video may have some challenges. By comparison, viewers show low expressivity across the board for the partner integrations video. These two videos (video 1 and video 3) may not be positively engaging for viewers.
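A comparison like “more than five times as frequently” is a ratio of how often an expression occurs during each video. The sketch below shows one way to compute such a ratio, assuming per-frame brow furrow scores on a 0-100 scale have already been exported for each video and that a frame counts as “furrowed” at or above a threshold of 50. The export format, the threshold, and the helper names `time_percent` and `frequency_ratio` are hypothetical illustrations, not the iMotions or Affectiva method.

```python
from typing import Sequence


def time_percent(scores: Sequence[float], threshold: float = 50.0) -> float:
    """Percentage of frames whose score is at or above `threshold`.

    Assumes per-frame scores on a 0-100 scale; the default threshold of
    50 is a hypothetical choice, not an iMotions or Affectiva default.
    """
    if len(scores) == 0:
        raise ValueError("no frames to score")
    above = sum(1 for s in scores if s >= threshold)
    return 100.0 * above / len(scores)


def frequency_ratio(
    scores_a: Sequence[float],
    scores_b: Sequence[float],
    threshold: float = 50.0,
) -> float:
    """How many times more often the expression occurs in A than in B."""
    pct_a = time_percent(scores_a, threshold)
    pct_b = time_percent(scores_b, threshold)
    if pct_b == 0.0:
        # Undefined ratio: infinite if A shows the expression at all, else NaN.
        return float("inf") if pct_a > 0.0 else float("nan")
    return pct_a / pct_b


# Toy per-frame brow furrow scores (synthetic, not data from this study).
old_video = [10, 60, 70, 80, 55, 20, 65, 90, 5, 75]
new_video = [10, 20, 60, 5, 15, 30, 25, 40, 45, 35]
print(frequency_ratio(old_video, new_video))  # 7.0
```

With real recordings, you would more likely compute `time_percent` per participant and average across the sample rather than pooling everyone’s frames, so that one highly expressive viewer does not dominate the result.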

When asked whether she would have watched all of the videos in their entirety had she come across them naturally, our volunteer indicated a preference for the new video. She explained, “I would have exited some of them…like video 1 and 3.”

“I liked the second video because…it showed more about the platform than video 3 and it showed the actual software rather than video 1. It was a bit more detailed.”

The new explainer video strikes a good balance between favorable self-report and expressed emotion. Viewers rate the content as clear and informative, and facial expression data show that viewers are engaged while displaying low levels of brow furrow and other negative expressions that may indicate frustration or confusion. By contrast, the old, animated explainer video is unlikely to resonate with an audience for whom the product and messaging are personally relevant, and the partner integrations video is not emotionally engaging and may not drive a strong reaction. Overall, these data indicate that the new explainer video, with its higher production quality and clearer messaging, is best received by the audience.

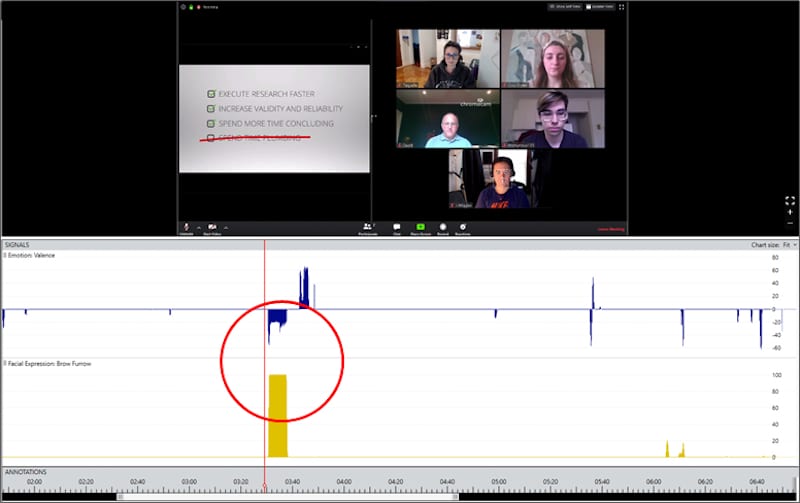

Indeed, this sentiment is reflected in our group data, and viewers’ facial expressions can help us understand why. Our viewers reported not finding the old explainer video particularly informative, and we see greatly elevated in-the-moment brow furrow from the audience while they watch it. This suggests that the old explainer video may be confusing to viewers, particularly where it relies on cheeky jargon, as in the moment illustrated below. Here, the narrator states, “spend more time concluding, rather than plumbing.” The intended value proposition may be unclear to viewers, as the “plumbing” analogy may not translate well.

Indications of brow furrow at this moment suggest that the video may be confusing to viewers

On the other hand, both the new explainer video and the integration video receive favorable self-report ratings for being clear and informative. Again, facial expressivity can add nuance to our decision: although both videos are perceived as effective, the new explainer video elicits higher overall facial engagement, potentially suggesting that the audience is more “tuned in” while watching it. In cases where different stimuli receive similarly favorable (or unfavorable!) self-report ratings, facial expressions can be a helpful way to break the “tie” and determine which video captured viewers’ attention more.


Note: For quantitative insights, we would recommend a larger sample size to account for issues like poor in-home lighting, bad video angles, and technical difficulties. Remote collection does offer the researcher some convenience, as it allows for a geographically distributed sample. These tools may also be applied qualitatively. For the purposes of this demonstration, however, we are using our five employees to represent a full sample of viewers.


7 Tips for High-Quality Facial Expression Analysis Data

While collecting and analyzing facial expression data remotely, it is important to follow a few key steps to ensure a quality setup.

1. Informed consent

As with any collection of personal data, ensure that your participants are informed about the research and consent to participation before collecting.

2. Participant’s face box must be adequately sized

In any recording setting, the face in view must be large enough for the algorithm to properly recognize and process it. If using a video platform like Zoom, we recommend a maximum of six participants on the same video recording.

3. Participant’s face must be well-lit

Participants’ faces should not be overexposed or backlit by another light source. Participants should be informed of the proper recording setup and adjust their webcams accordingly before collection.

4. Participant’s face must be centered and in view

Participants should sit close to the webcam, looking at the main screen (not a secondary monitor). They should also ensure that their background is simple, e.g., no moving elements or green-screen filters.

5. Sound is clear and audible

If presenting stimuli with audio, administrators should ensure that all participants are muted and can hear the content’s sound before beginning the showing.

6. For video stimuli, create a reel for a more seamless presentation

Showing multiple video stimuli works best when the stimuli have been edited together into one long “reel,” which can be done with any video editing software. We recommend leaving a blank lead-in of 5-10 seconds at the beginning of the reel and a short gap (~3 seconds) between videos to allow participants to adjust.

7. Recording remote video on a phone is not advised

When recording remote video, ensure participants opt for a computer setup rather than a mobile device. When participants call in and record on a phone, lighting and angles can vary considerably, which may affect the ability to properly record and analyze the face.
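Returning to tip 6: once the stimuli are stitched into one reel, the facial expression data for the whole recording has to be split back into per-video segments before the videos can be compared. As a minimal sketch, assuming you know each clip’s duration in seconds, every video’s start and end time in the reel follows from the lead-in and gap lengths. The `reel_timeline` helper, the `Segment` type, and the clip durations below are hypothetical illustrations, not part of iMotions.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Segment:
    name: str
    start: float  # seconds from the start of the reel
    end: float    # seconds from the start of the reel


def reel_timeline(
    clips: Sequence[Tuple[str, float]],
    lead_in: float = 5.0,
    gap: float = 3.0,
) -> List[Segment]:
    """Return where each clip starts and ends within a single reel.

    clips: (name, duration in seconds) pairs in playback order.
    lead_in: blank time before the first clip (5-10 s suggested).
    gap: blank time between consecutive clips (~3 s suggested).
    """
    segments: List[Segment] = []
    t = lead_in
    for i, (name, duration) in enumerate(clips):
        if i > 0:
            t += gap  # blank pause before every clip after the first
        segments.append(Segment(name, t, t + duration))
        t += duration
    return segments


# Hypothetical clip durations, for illustration only.
for seg in reel_timeline([("old explainer", 90.0),
                          ("new explainer", 120.0),
                          ("partner integrations", 60.0)]):
    print(f"{seg.name}: {seg.start:.0f}-{seg.end:.0f} s")
```

With a 5-second lead-in and 3-second gaps, these example durations place the clips at 5-95 s, 98-218 s, and 221-281 s. The same segment boundaries can then be used to slice per-frame expression data into one block per stimulus.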

We look forward to hearing about the unique ways in which you have leveraged remote technologies for your research. If you are interested in learning more, please feel free to contact us.
