Comparing eye-tracking methods for human factors research

This comparative analysis explores the advantages and limitations of different eye-tracking methods used in human factors research, to help you choose the approach best suited to your study.

Understanding Human Limitations: The Role of Human Factors Research

We are only human.

This phrase captures many ideas we know intuitively: that we as humans are not purely rational beings. That our attention span and workload capacity are limited. That we are prone to making mistakes and getting distracted.

The field of human factors research focuses on this premise and seeks to understand the capabilities and limitations of humans within a system, and to explore ways in which we can optimize human performance, health, or safety, depending on the context.

Human factors research can address questions as simple as how to optimize a computer interface to streamline a work process, or as complex as the dynamics of human-robot pairs working in a factory or the decision-making of fighter pilots in combat.

Human factors researchers use a wide range of tools to address these questions. Standardized questionnaires are common; one example assesses perceived mental workload in teams [1]. There are also standard research paradigms, such as NASA's Multi-Attribute Task Battery (MATB-II), which provides a set of tasks for assessing performance and workload [2]. But as we know here at iMotions, self-report can only take one so far.

The use of physiological measures and biosensors is not new to human factors: many studies in the literature leverage tools such as eye tracking, facial expression analysis, EEG, EMG, EDA, and ECG to supplement traditional research approaches [3-6].

Eye tracking in particular is a popular choice, because understanding a person's visual attention is essential for evaluating how effectively they process vital visual information around them. But there are many different kinds of eye trackers available. How do you choose which one to use?

To answer this question, we conducted an in-house feasibility study comparing three eye-tracking modalities: screen-based eye tracking, eye-tracking glasses, and remote webcam-based eye tracking. We used a simple, easily transferable task, building with blocks, to evaluate the strengths and weaknesses of each setup.

Methods

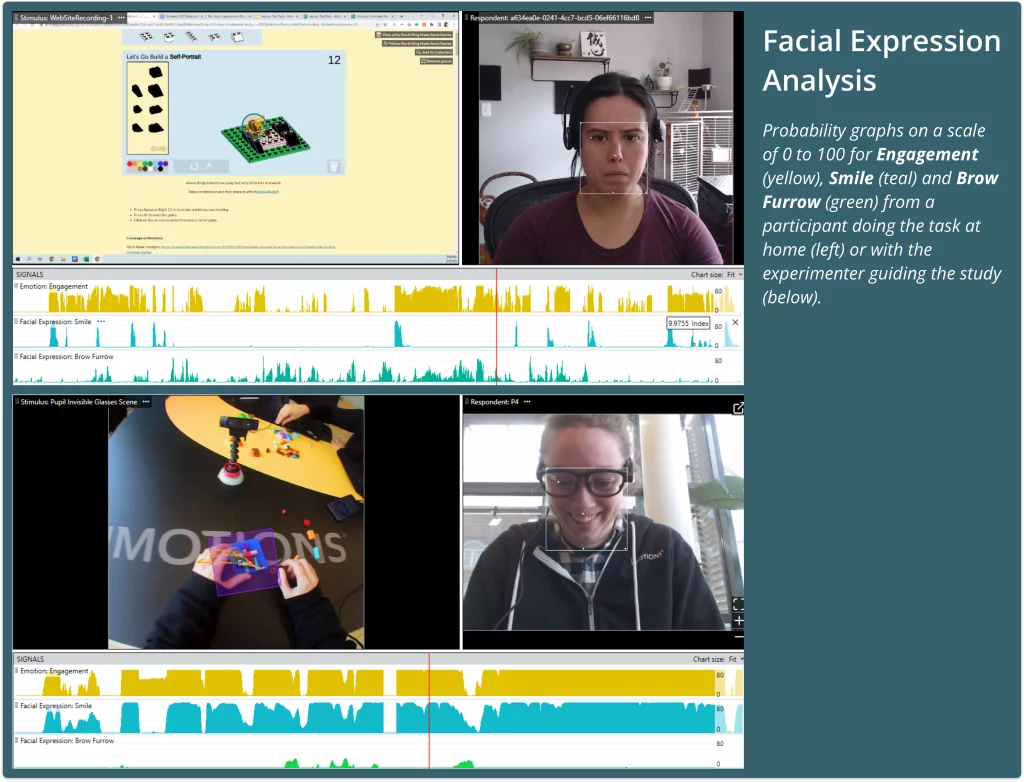

Twelve participants were recruited for this study and divided into three conditions, one for each eye-tracking setup (four participants per group). In the screen-based condition, participants played a freely available brick-building game while their eyes were recorded with a 60 Hz Smart Eye AI-X. Participants in the webcam-based condition played the same game, but their eyes were recorded via webcam and processed with iMotions' webcam-based eye-tracking algorithm. Participants in the eye-tracking glasses condition were given physical building blocks as a real-life analog of the screen-based game. We also used webcams to record participants' facial expressions as they did the task.

We asked three questions in this feasibility study, all of which are summarized in an overview table at the end of this article. First, how do the three setups compare in ease of use and data accuracy? Second, how comparable are the data from the different setups? Third, how comparable are facial expressions across the three setups?

Accuracy, Scalability, and Ecological Validity

The screen-based and webcam-based setups showed a clear accuracy-scalability tradeoff. Screen-based eye tracking provides accurate data but requires a resource-intensive lab; webcam-based eye tracking scales easily but with considerably lower accuracy. The eye-tracking glasses, owing to their larger field of view, also had good perceived accuracy.
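Eye-tracker accuracy is commonly expressed in degrees of visual angle. To make such a figure concrete, here is a minimal Python sketch that converts an angular offset into a distance and a pixel count on the screen. It assumes the true gaze point lies straight ahead of the eye, so the on-screen offset is d·tan(θ). The viewing distance, display size, and both accuracy values are hypothetical illustrations, not specifications of the trackers used in this study.

```python
import math


def angular_offset_to_cm(error_deg: float, viewing_distance_cm: float) -> float:
    """On-screen distance (cm) between true and measured gaze for an angular error.

    Assumes the true gaze point lies straight ahead of the eye and the screen
    is perpendicular to that line of sight, so the offset is d * tan(theta).
    """
    return viewing_distance_cm * math.tan(math.radians(error_deg))


def cm_to_px(size_cm: float, screen_width_cm: float, screen_width_px: int) -> float:
    """Convert a physical distance on the screen to pixels for a given display."""
    return size_cm * screen_width_px / screen_width_cm


# Hypothetical setup and accuracy values, for illustration only;
# these are NOT specifications of the trackers used in this study.
VIEWING_DISTANCE_CM = 65.0
SCREEN_WIDTH_CM, SCREEN_WIDTH_PX = 53.0, 1920

if __name__ == "__main__":
    for label, err_deg in [("assumed 0.5 deg", 0.5), ("assumed 3.0 deg", 3.0)]:
        cm = angular_offset_to_cm(err_deg, VIEWING_DISTANCE_CM)
        px = cm_to_px(cm, SCREEN_WIDTH_CM, SCREEN_WIDTH_PX)
        print(f"{label}: about {cm:.2f} cm, or {px:.0f} px, on screen")
```

Because the offset grows with both angular error and viewing distance, the same nominal accuracy can translate into very different pixel errors across home webcam setups.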

In terms of ecological validity, webcam-based eye tracking allows people to take part in screen-based studies from the comfort of their homes. The glasses setup, by contrast, lets participants handle physical building blocks, offering a different and arguably higher ecological validity. Both screen-based studies are easy to analyze, whereas giving participants complete freedom to carry out a motor task as they normally would considerably increases analysis time.

Comparability of Metrics

A common concern for researchers is whether the metrics they want to measure are comparable across setups. In our case, this meant asking: "Do people spend the same amount of dwell time on the board they are building on, regardless of whether the task is on a screen or with physical blocks?"

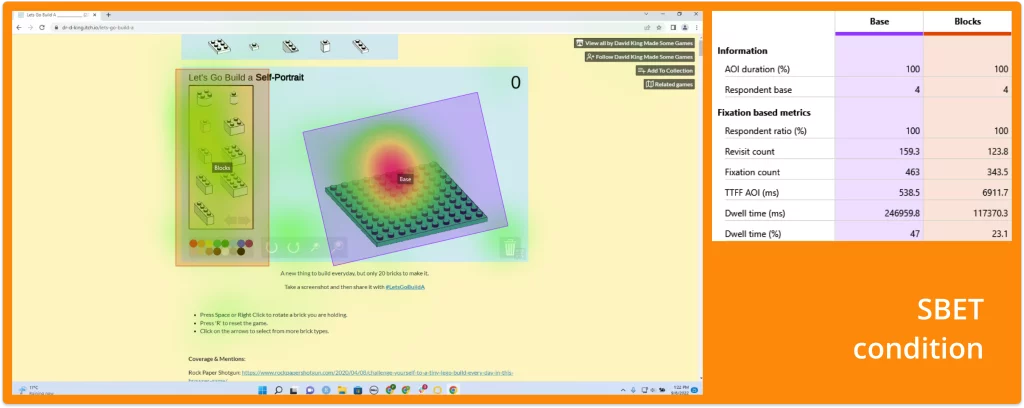

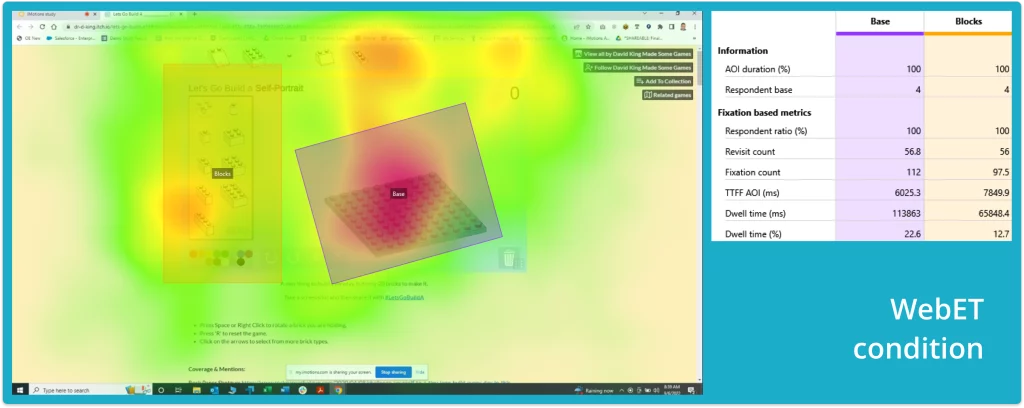

We defined an area of interest (AOI) around the base of the building board in all three studies. The glasses and screen-based conditions showed comparable aggregate dwell time on the board, about 47% of the time. In other words, in our small study, people spent roughly 47% of their time looking at the base of the board, whether they built on a screen or with physical blocks in the more ecologically valid glasses condition.
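Dwell time as a percentage of time on an AOI can be illustrated with a minimal Python sketch. It is not the iMotions implementation: it assumes a rectangular AOI in screen pixels, a constant sampling rate (so each sample stands for the same duration), and that samples with lost tracking are excluded. `RectAOI`, `dwell_percentage`, and `aggregate_dwell` are hypothetical names, and averaging per-participant percentages is only one possible way to aggregate.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple

# (x, y) in screen pixels; None marks a sample where tracking was lost.
GazeSample = Tuple[Optional[float], Optional[float]]


@dataclass(frozen=True)
class RectAOI:
    """Hypothetical axis-aligned rectangular AOI in screen pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def dwell_percentage(samples: Iterable[GazeSample], aoi: RectAOI) -> float:
    """Share of valid gaze samples inside the AOI, as a percentage.

    Assumes a constant sampling rate (e.g. 60 Hz), so the share of samples
    equals the share of time. Samples with missing coordinates are excluded
    from the denominator.
    """
    valid = [(x, y) for x, y in samples if x is not None and y is not None]
    if not valid:
        return 0.0
    hits = sum(1 for x, y in valid if aoi.contains(x, y))
    return 100.0 * hits / len(valid)


def aggregate_dwell(per_participant: Iterable[float]) -> float:
    """Mean of per-participant dwell percentages (one possible aggregation)."""
    values = list(per_participant)
    return sum(values) / len(values) if values else 0.0


# Hypothetical board AOI and a handful of made-up gaze samples.
board = RectAOI(left=400, top=300, right=1500, bottom=900)
samples = [(500, 400), (1600, 200), (800, 850), (None, None)]
print(dwell_percentage(samples, board))
```

Whether lost samples belong in the denominator is a design choice: counting them as "not on the AOI" would lower the percentage, particularly for noisier setups.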

The webcam-based condition showed a much smaller dwell time (about 22%). The heatmaps reflect the variability inherent to webcam-based eye tracking (WebET) data, suggesting that noise may have pushed many genuine fixations on the building board outside the defined area of interest.

Heatmaps and areas of interest from screen-based (above) versus webcam-based (below) eye tracking, showing the accuracy-scalability tradeoff.
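Why noisier gaze data lowers measured dwell time, and why larger AOIs are advisable for webcam-based tracking, can be sketched with a simple probability model. This Python example assumes the true gaze point sits at the center of a rectangular AOI and that measurement error is zero-mean Gaussian with the same standard deviation in x and y. It models random scatter only, not systematic offsets, and the AOI size and noise levels are hypothetical.

```python
import math


def expected_hit_rate(half_width: float, half_height: float, sigma: float) -> float:
    """Probability that one noisy gaze sample lands inside a rectangular AOI.

    Assumptions: the true gaze point is at the AOI center, and measurement
    error is zero-mean Gaussian with standard deviation `sigma` (same units as
    the AOI), independent in x and y. Systematic offsets are not modeled.
    Per axis, P(|error| <= w) = erf(w / (sigma * sqrt(2))).
    """
    if sigma <= 0:
        return 1.0  # noise-free: every sample lands on the true point
    scale = sigma * math.sqrt(2.0)
    return math.erf(half_width / scale) * math.erf(half_height / scale)


# Hypothetical AOI of 200 x 150 px, evaluated at two assumed noise levels.
for sigma in (20.0, 100.0):
    print(f"sigma={sigma:.0f}px: {expected_hit_rate(100, 75, sigma):.2f}")

# Padding the AOI (here doubling each half-extent) recovers part of the loss.
print(f"padded AOI, sigma=100px: {expected_hit_rate(200, 150, 100.0):.2f}")
```

Under this model, even if a participant looks only at the board, the measured dwell time shrinks as noise grows relative to the AOI. Enlarging the AOI trades spatial specificity for robustness.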

Facial Expressions in Different Setups

Participants showed the most facial engagement in the glasses condition, where the experimenter interacted with them. People were also more likely to smile when the experimenter was present, and more likely to furrow their brow (likely a sign of concentration) when doing the task on screen, whether in the lab or online from home. This highlights the importance of choosing the right biosensors for your research question to complement your eye-tracking study.

A comparison of the different setups:

| | Screen-based (SBET) | Eye-tracking glasses | Webcam-based (WebET) |
|---|---|---|---|
| Accuracy | High | Lower accuracy, larger field of view | Lowest |
| Granularity of analysis | Smaller AOIs (e.g., on blocks) are possible | Increased analysis time to track all AOIs dynamically | Larger AOIs recommended to account for accuracy errors |
| Ecological validity | Controlled environment | High | Higher, but restricted to a screen |
| Scalability | Time-consuming to collect data | Time-consuming to collect and analyze data | Easily scalable |
| Facial expression analysis (FEA) | Integrates easily | Affected by movement | Integrates easily |
| Integration with other sensors | Possible with GSR, EMG, ECG, and EEG | Easy with GSR, ECG, and EMG | Not possible with the online platform |
| Cost-effectiveness | Requires dedicated hardware and study-specific resources | Requires dedicated hardware and study-specific resources | Webcam-based; minimal human resources |

To summarize, each setup has its pros and cons. Some offer higher ecological validity, some let you scale your research, others offer higher accuracy, and they vary in ease of use. Depending on your research design and the scope of your study, iMotions offers a number of flexible solutions to optimize your workflow. Get in touch with us to find the solution that is right for you.

References

[1] Sellers et al., Proc Hum Factors Ergon Soc (2014)

[2] Santiago-Espada et al., The Multi-Attribute Task Battery II (MATB-II) Software for Human Performance and Workload Research: A User's Guide (2011)

[3] Mele & Federici, Cogn Process (2012)

[4] Hazlett, CHI '06 (2006)

[5] Hardy et al., Proc Hum Factors Ergon Soc (2013)

[6] Hankins & Wilson, Aviat Space Environ Med (1998)

[7] iMotions, "Webcam ET Accuracy Whitepaper v2" (2022)

[8] Bishay et al., arXiv (2022)