The International Affective Picture System (IAPS) is a widely used tool in psychological research, known for its standardized set of affective stimuli. This article explains how the IAPS was built, what research it has supported, and which alternative affective picture databases are available.


The collection of 700 pictures was over ten years in the making. Image number 420: “woman at beach”. Image number 701: “basket”. Image number 310: “a burn victim”. The list goes on. Despite how it sounds, these aren’t photographs from a photojournalism project, but material for psychological research.

The International Affective Picture System (IAPS, pronounced in exactly the same way you just pronounced it in your head – “eye-apps”) is a database of photos that have been validated as consistently eliciting a specific emotional response in viewers.

The goal of the database is to standardize what is otherwise a nebulous, subjective experience: emotional response. A standard, validated set of stimuli gives researchers a common baseline against which emotional responses can be compared. Using the same stimuli also lets different researchers compare results even when their experiments are not otherwise identical, and makes the work easier to test and replicate, all of which helps build a stronger understanding of emotions.

How the IAPS Was Made

Emotions are difficult to pin down, which leads some to prefer open-ended, unrestricted language when describing these states. Emotions are said to be personal, and restricting the vocabulary can strip away the nuances of each emotional experience. At the same time, gathering consistent, comparable, and standardized information is central to the scientific approach [1].

For researchers, this means deciding where to draw the line in the trade-off between complexity and simplicity. The creators of the IAPS compromised by using three scales related to emotional responses, each with a limited number of choices. This three-dimensional approach captures some of the depth of emotions while keeping the options to a practical number for analysis.

They use a valence scale (ranging from pleasant to unpleasant), an arousal scale (ranging from calm to excited), and a dominance / control scale (ranging from “in control” to “dominated”). We’ll go through each of these below.

- The valence scale: This scale covers the direction of the feeling or emotion. It establishes whether the feeling evoked by the image is positive or negative, without indicating how intense that feeling is.

- The arousal scale: Refers to the intensity of the emotion experienced in response to the image. It captures information about whether the material is calming or exciting, without reference to the positive or negative content of the image.

- The dominance / control scale: This scale gathers data about whether the viewer feels in control or controlled when viewing the image. It is less commonly used in research, yet provides another important dimension to the data.

These ratings were collected using the Self-Assessment Manikin (SAM) [2]. For each dimension, the SAM shows five cartoon-like figures, each a visual representation of the physical signs commonly associated with that aspect of emotional experience.

[Figure: an example SAM-style scale. Note that this is not the SAM version used by the IAPS.]

For example, the SAM scale for valence shows a frowning character for the most negative response, and a widely smiling character for the most positive response. The level of arousal is represented essentially by an increasingly large explosion in the chest, while the dominance / control scale shows a character that grows in size (and features an increasingly “aggressive look to his eyebrows and arms”).

Participants marked which of the five figures on each scale best represented their reaction (e.g. moderately happy, low arousal, feeling in control). They could also mark the space between two figures, which turns each scale into a nine-point scale. The photos were shown to participants for 26 seconds each, in sets of 12, for a total of 60 photos in each session. Over ten years, that adds up to a lot of data.
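To make the nine-point coding concrete, here is a minimal Python sketch of how a single SAM mark could be turned into a score. It is an illustration under stated assumptions, not the IAPS authors' procedure: a mark is recorded as a 0-based figure index plus a flag for "in the gap to the right of this figure", 1 is the leftmost figure and 9 the rightmost, and each scale is assumed to run from unpleasant/calm/dominated to pleasant/excited/in control (reverse-code if a form is laid out the other way). The names `sam_score` and `SamRating` are hypothetical.

```python
from dataclasses import dataclass

N_FIGURES = 5  # SAM shows five figures per dimension


def sam_score(figure: int, between_next: bool = False) -> int:
    """Convert one SAM mark into a 1-9 score (hypothetical coding scheme).

    figure: 0-based index of the chosen figure (0 = leftmost).
    between_next: True if the mark sits in the gap right of that figure.
    """
    if not 0 <= figure < N_FIGURES:
        raise ValueError("figure index must be between 0 and 4")
    if between_next and figure == N_FIGURES - 1:
        raise ValueError("there is no gap to the right of the last figure")
    # Figures land on the odd scores 1, 3, 5, 7, 9; gaps land on the even scores.
    return 2 * figure + 1 + (1 if between_next else 0)


@dataclass(frozen=True)
class SamRating:
    """One participant's ratings of one picture on the three SAM dimensions."""

    valence: int    # assumed direction: 1 = unpleasant, 9 = pleasant
    arousal: int    # assumed direction: 1 = calm, 9 = excited
    dominance: int  # assumed direction: 1 = dominated, 9 = in control

    def __post_init__(self) -> None:
        # Reject anything outside the nine-point range.
        for name in ("valence", "arousal", "dominance"):
            value = getattr(self, name)
            if not 1 <= value <= 9:
                raise ValueError(f"{name} must be between 1 and 9, got {value}")


# A valence mark between the 4th and 5th figures, the 2nd arousal figure,
# and the 4th dominance figure.
rating = SamRating(
    valence=sam_score(3, between_next=True),
    arousal=sam_score(1),
    dominance=sam_score(3),
)
print(rating)  # SamRating(valence=8, arousal=3, dominance=7)
```

Coding figures as odd numbers and gaps as even numbers keeps every response on a single 1-9 integer scale, so the between-figure option needs no special handling at analysis time.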

Access to the IAPS

You might be wondering at this point what the photos actually look like, since seeing them would make the contents easier to understand. However, access to the images is restricted to academic researchers, and they may “be used only in basic and health research projects”.

The reasoning is that prior exposure to these images would impact your responses to them – by restricting the possibility of participants seeing the images before being tested with them, researchers can hope to capture true in-the-moment reactions that aren’t dampened by familiarity. Below we will go through what that research looks like.

Research Carried Out With IAPS

Testing these images on groups of participants who are naive to the images (not necessarily naive in general) has revealed a high level of agreement in their responses. This validation process was essential for creating the dataset. Splitting the participant groups in half and comparing their ratings yields a split-half correlation of about 0.94, meaning the ratings are highly consistent, which was one of the central goals of the dataset.
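The split-half check can be sketched in a few lines of Python. This is a minimal illustration under assumed data, not the IAPS authors' exact procedure: ratings are stored in a dict mapping each hypothetical picture ID to one rating per participant (participants in the same order for every picture). The sketch randomly splits participants into two halves, averages each half's ratings per picture, and correlates the two sets of picture means with Pearson's r. The toy ratings are invented purely to exercise the code.

```python
import math
import random


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def split_half_r(ratings: dict[str, list[int]], seed: int = 0) -> float:
    """Correlate per-picture mean ratings from two random halves of the sample.

    ratings maps a picture ID to one rating per participant, with participants
    listed in the same order for every picture.
    """
    n_participants = len(next(iter(ratings.values())))
    order = list(range(n_participants))
    random.Random(seed).shuffle(order)  # random assignment to halves
    half_a = order[: n_participants // 2]
    half_b = order[n_participants // 2 :]

    means_a, means_b = [], []
    for scores in ratings.values():
        means_a.append(sum(scores[i] for i in half_a) / len(half_a))
        means_b.append(sum(scores[i] for i in half_b) / len(half_b))
    return pearson_r(means_a, means_b)


# Invented valence ratings (1-9) from six participants for four pictures.
toy_ratings = {
    "pic_a": [8, 9, 8, 7, 9, 8],
    "pic_b": [2, 1, 2, 3, 1, 2],
    "pic_c": [5, 5, 4, 6, 5, 5],
    "pic_d": [7, 6, 7, 7, 8, 6],
}
print(round(split_half_r(toy_ratings), 2))
```

Because the split is random, repeating it with several seeds and averaging the resulting coefficients gives a more stable estimate than any single split.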

Of course, this is only the data that is collected from the subjective reports of emotional states – how well do these correlate with the objective physiology related to emotions? There are certain biomarkers that indicate aspects of the emotional experience – at least for the valence and the level of arousal (“dominance” is a bit more tricky).

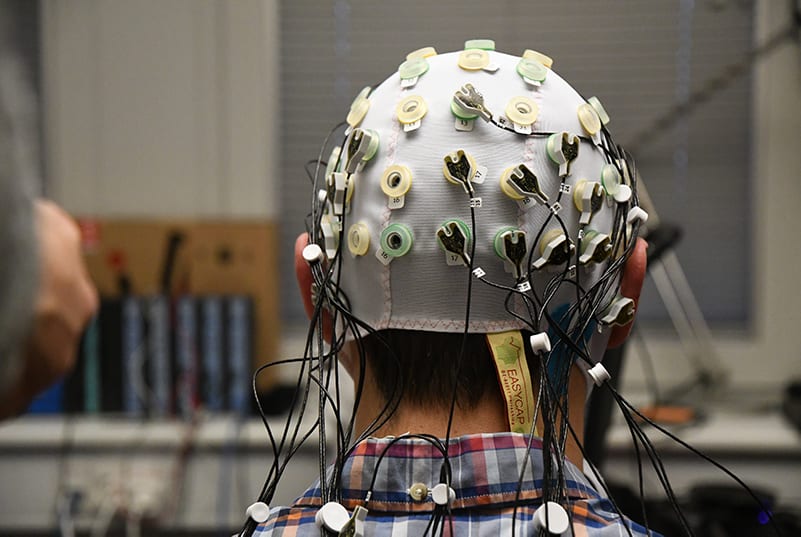

Researchers have used EEG to detect the large-scale neural changes that occur in response to IAPS photos differing in emotional content [3]. By using “20 pleasant, 20 neutral, and 20 unpleasant pictures, based on pleasure and arousal ratings”, the researchers were able to reliably compare brain-based changes in response to the emotional content of the stimuli.
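Choosing stimuli “based on pleasure and arousal ratings” usually means filtering pictures by their normative mean ratings. The Python sketch below shows one possible way to do that. The cut-offs (pleasant: valence at least 6 and arousal at least 5; unpleasant: valence at most 4 and arousal at least 5; neutral: valence between 4 and 6 with arousal below 4), the `PictureNorm` record, and the picture IDs are all hypothetical, not the criteria used in the cited study.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PictureNorm:
    picture_id: str
    valence_mean: float  # 1 (unpleasant) .. 9 (pleasant)
    arousal_mean: float  # 1 (calm) .. 9 (excited)


def categorize(p: PictureNorm) -> Optional[str]:
    """Assign a picture to a condition using hypothetical cut-offs."""
    if p.valence_mean >= 6.0 and p.arousal_mean >= 5.0:
        return "pleasant"
    if p.valence_mean <= 4.0 and p.arousal_mean >= 5.0:
        return "unpleasant"
    if 4.0 < p.valence_mean < 6.0 and p.arousal_mean < 4.0:
        return "neutral"
    return None  # ambiguous pictures are left out of every condition


def select_stimuli(norms: list[PictureNorm], per_condition: int) -> dict[str, list[str]]:
    """Pick up to `per_condition` picture IDs for each condition.

    Emotional conditions take the most arousing pictures first;
    the neutral condition takes the least arousing pictures first.
    """
    pools: dict[str, list[PictureNorm]] = {"pleasant": [], "neutral": [], "unpleasant": []}
    for p in norms:
        category = categorize(p)
        if category is not None:
            pools[category].append(p)

    selected = {}
    for category, pool in pools.items():
        pool.sort(key=lambda item: item.arousal_mean, reverse=(category != "neutral"))
        selected[category] = [item.picture_id for item in pool[:per_condition]]
    return selected


norms = [
    PictureNorm("p1", 7.8, 6.5),
    PictureNorm("p2", 7.1, 5.2),
    PictureNorm("p3", 5.0, 2.8),
    PictureNorm("p4", 2.1, 6.9),
    PictureNorm("p5", 3.5, 3.0),  # unpleasant but calm: excluded by these cut-offs
    PictureNorm("p6", 5.3, 3.5),
]
print(select_stimuli(norms, per_condition=1))
# {'pleasant': ['p1'], 'neutral': ['p3'], 'unpleasant': ['p4']}
```

Leaving ambiguous pictures out, rather than forcing them into the nearest category, keeps the conditions clearly separated on the rating dimensions, at the cost of a smaller pool to choose from.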

They found increased slow-wave neural modulation within the occipital cortex (the brain region central to visual processing) and within the posterior parietal cortex (an area involved in planned movements and visual working memory [4]). Both areas showed right-hemisphere dominance, in line with previous research suggesting that the right hemisphere is more involved in semantic or symbolic visual processing [5].

Other researchers have used multimodal methods to investigate emotional responses. Facial electromyography (fEMG) and heart rate data were collected from participants with and without insomnia while they viewed images from the IAPS [6]. Participants were shown sleep-related photos of people, either negatively valenced (people lying awake at night) or positively valenced (people sleeping soundly).

The researchers found that the insomniacs not only rated the negative sleep stimuli as more distressing, but also showed physiological responses that mirrored those ratings. Increased heart rate was found in response to the stimuli, while inhibition of the corrugator (the muscle just above the eye, whose activity is often used as a proxy for a negatively valenced response) was found for the insomniacs when viewing the sleep-positive stimuli, which was interpreted as an indication of the desire for sleep.
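“Inhibition” here means that corrugator activity fell below its pre-picture level. A common way to express this is a baseline-corrected change score: mean rectified EMG amplitude while the picture is shown, minus the mean over a short window just before picture onset. The Python sketch below assumes already-rectified EMG samples at a fixed sampling rate; the function name, the 1-second baseline, the 6-second default response window, and the toy trace are illustrative assumptions, not the parameters of the cited study.

```python
def baseline_corrected_change(
    samples: list[float],
    onset_index: int,
    sampling_rate_hz: int,
    baseline_s: float = 1.0,  # assumed pre-stimulus baseline length
    window_s: float = 6.0,    # assumed response window length
) -> float:
    """Mean activity after stimulus onset minus mean activity in the baseline.

    samples: rectified EMG amplitude (e.g. in microvolts), one value per sample.
    onset_index: index of the first sample at which the picture appears.
    A negative result indicates inhibition relative to baseline.
    """
    n_baseline = int(baseline_s * sampling_rate_hz)
    n_window = int(window_s * sampling_rate_hz)
    if onset_index < n_baseline or onset_index + n_window > len(samples):
        raise ValueError("recording too short for the requested windows")
    baseline = samples[onset_index - n_baseline : onset_index]
    response = samples[onset_index : onset_index + n_window]
    return sum(response) / len(response) - sum(baseline) / len(baseline)


# Toy trace at 10 Hz: 1 s of baseline at 4.0 uV, then 2 s of lower activity.
trace = [4.0] * 10 + [3.0] * 20
change = baseline_corrected_change(trace, onset_index=10, sampling_rate_hz=10, window_s=2.0)
print(change)  # -1.0 (activity dropped below baseline, i.e. inhibition)
```

Subtracting each trial's own baseline removes slow drifts and between-person differences in resting muscle tone, so change scores can be averaged across trials and participants.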

Other researchers have taken this further, combining measurements of heart rate, skin conductance (EDA), and fEMG (recorded from several facial muscles, including the zygomaticus major) with the three nine-point scales of valence, arousal, and dominance in response to IAPS photos [7].

The findings showed that self-reported emotional arousal was corroborated by the biosensor-based measures: skin conductance, cardiac deceleration, and the startle reflex (as measured by fEMG) were all amplified by “pictures depicting threat, violent death, and erotica”. The results showed a clear link between the emotional content of the highly validated IAPS pictures and physiological responses; in other words, a clear link between mind and body.

Criticism of IAPS

While the IAPS has been strongly and thoroughly validated, it has largely been tested on one type of participant: those known as WEIRD. An acronym for Western, Educated, Industrialized, Rich, Democratic, WEIRD describes the demographic that has primarily been tested with the IAPS, which leads some to question the true universality of the findings (this is also true of psychology more broadly, a fact that is problematic in several ways).

Alternatives to IAPS

In response to this, several other affect-based photo databases have been formed, in an attempt to provide a more generalizable (or at least more transparent) database. Other databases have also been created to open up access, or widen the choice of material available for affective testing. Some of the main alternatives are found in the list below.

- Nencki Affective Picture System (NAPS)

This database “consists of 1,356 realistic, high-quality photographs that are divided into five categories (people, faces, animals, objects, and landscapes)” and has been rated for both valence and arousal, as IAPS was, but with a measurement of “approach-avoidance” rather than dominance / control. The database also provides measurements of physical properties of the photographs – the luminance, contrast, and entropy, which can be important when these need to be controlled for (such as with pupillometry).
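Physical image properties like these can be computed in several ways, and the NAPS authors' own definitions may differ from the ones used here. As a minimal sketch under stated assumptions: a picture is treated as a grayscale grid of 0-255 pixel values, luminance is the mean pixel value, contrast is RMS contrast (the standard deviation of pixel values), and entropy is the Shannon entropy, in bits, of the intensity histogram. The function name `image_stats` and the toy image are hypothetical.

```python
import math
from collections import Counter


def image_stats(pixels: list[list[int]]) -> dict[str, float]:
    """Luminance, RMS contrast, and Shannon entropy of a grayscale image.

    pixels: rows of integer intensities from 0 (black) to 255 (white).
    """
    flat = [value for row in pixels for value in row]
    n = len(flat)
    mean = sum(flat) / n
    # RMS contrast: population standard deviation of pixel intensities.
    rms_contrast = math.sqrt(sum((v - mean) ** 2 for v in flat) / n)
    # Shannon entropy (bits) of the intensity histogram; only occupied bins appear.
    counts = Counter(flat)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return {"luminance": mean, "rms_contrast": rms_contrast, "entropy": entropy}


# A toy 2x2 "image": half black, half white.
print(image_stats([[0, 255], [255, 0]]))
# {'luminance': 127.5, 'rms_contrast': 127.5, 'entropy': 1.0}
```

Matching stimuli on measures like these matters most for methods such as pupillometry, where pupil size responds to brightness as well as to emotional arousal.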

Subsets of the database have also been created with more detailed ratings, for example for discrete emotional categories, erotic content, and fear-inducing material.

- Open Affective Standardized Image Set (OASIS)

OASIS is an “open-access online stimulus set containing 900 color images depicting a broad spectrum of themes, including humans, animals, objects, and scenes, along with normative ratings on two affective dimensions – valence… and arousal”. The database is also “not subject to the copyright restrictions that apply to the International Affective Picture System”, which widens the range of possible uses.

- Geneva Affective Picture Database (GAPED)

GAPED is a database consisting of 730 photos, “rated according to valence, arousal, and the congruence of the represented scene with internal (moral) and external (legal) norms”. The negatively-valenced photos include “spiders, snakes, and scenes that induce emotions related to the violation of moral and legal norms”, while the positively-valenced photos include “mainly human and animal babies as well as nature sceneries”. Neutral pictures primarily show inanimate objects.

- Emotional Picture Set (EmoPicS)

As research into emotions often requires a large number of stimulus presentations, EmoPicS has been built to add to the material available. The database “comprises a total of 378 standardized color photographs with different semantic content (diverse social situations, animals and plants) as well as different emotional intensity and valence”. The database is only available for academic research or clinical work.

- EmoMadrid

EmoMadrid is a database of over 800 photos with varied affective content. The data includes information about the “affective valence, arousal, spatial frequency, luminosity and physical complexity”.

- Military Affective Picture System (MAPS)

MAPS is an image database that “provides pictures normed for both civilian and military populations to be used in research on the processing of emotionally-relevant scenes common among military populations”. It consists of “240 images depicting scenes common among military populations”. The images were rated in the same way as the IAPS, with measures of valence, arousal, and dominance.

- Image Stimuli for Emotion Elicitation (ISEE)

The ISEE was built as a “set of reliable pictorial stimuli, which elicited target emotions stably over time”. In contrast to IAPS, GAPED, and other databases, the ISEE has been tested for the stability of emotional elicitation across repeated presentations. The database consists of 356 photos, selected from an initial pool of over 10,000.

- Open Library of Affective Foods (OLAF)

OLAF is an image database that “has the specific purpose of studying emotions toward food” and “depicts high-calorie sweet and savory foods and low-calorie fruits and vegetables, portraying foods within natural scenes matching the IAPS features”. The images can be downloaded directly from the project's website.

- DIsgust-RelaTed-Images (DIRTI)

Built specifically for eliciting feelings of disgust, the DIRTI database “consists of 240 disgust-inducing pictures divided into six categories (food, animals, body products, injuries/infections, death, and hygiene)” as well as 60 neutral pictures. The photos were rated on scales measuring disgust, fear, valence, and arousal, and can be downloaded directly.

Other databases also exist for auditory stimuli, for words, and even for sections of text, providing a range of options for research into emotional states.

I hope you’ve enjoyed learning about the International Affective Picture System.

References

[1] Lang, P.J., Bradley, M.M., & Cuthbert, B.N. (2008). International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Technical Report A-8. University of Florida, Gainesville, FL.

[2] Bradley, M. M., & Lang, P. J. (1994). Measuring emotion: The self-assessment manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry, 25(1), 49–59.

[3] Keil, A., Bradley, M. M., Hauk, O., Rockstroh, B., Elbert, T., & Lang, P. J. (2002). Large-scale neural correlates of affective picture processing. Psychophysiology, 39, 641–649. doi:10.1017/S0048577202394162

[4] Berryhill, M. E., & Olson, I. R. (2008). Is the posterior parietal lobe involved in working memory retrieval? Evidence from patients with bilateral parietal lobe damage. Neuropsychologia, 46(7), 1767-1774. doi:10.1016/j.neuropsychologia.2008.01.009

[5] Gazzaniga, M. S. (2005). Forty-five years of split-brain research and still going strong. Nature Reviews Neuroscience, 6(8), 653–659. PMID: 16062172

[6] Baglioni, C., Lombardo, C., Bux, E., Hansen, S., Salveta, C., Biello, S., … Espie, C. A. (2010). Psychophysiological reactivity to sleep-related emotional stimuli in primary insomnia. Behaviour Research and Therapy, 48(6), 467–475. doi:10.1016/j.brat.2010.01.008

[7] Bradley, M. M., Codispoti, M., Cuthbert, B. N., & Lang, P. J. (2001). Emotion and motivation I: Defensive and appetitive reactions in picture processing. Emotion, 1(3), 276–298. doi:10.1037/1528-3542.1.3.276