Galvanic Skin Response (GSR) sensors, which measure electrodermal activity (EDA), have helped researchers detect emotional responses in a variety of ways [1]. Typically, GSR data is interpreted shortly after an event or exposure to a stimulus, as the physiological signals begin to react. Event-Related Skin Conductance Responses (ER-SCRs) have shown high accuracy for emotion detection when combined with other modalities, such as facial electromyography (fEMG), facial expression analysis, and heart rate.

However, speech-triggered ER-SCRs had not previously been explored as a method for real-time emotion detection. Pairing Galvanic Skin Response with speech for real-time processing has recently been investigated by Michigan State University’s Human Augmentation Technologies Lab (HAT Lab).

We had the honor of interviewing one of the authors of this method, Ph.D. candidate Sylmarie Dávila-Montero, about how GSR and speech can expand our understanding of human emotions.

Introduction to Sylmarie Dávila-Montero

My name is Sylmarie Dávila-Montero. I am a Ph.D. candidate in the Electrical Engineering program at Michigan State University working in the Human Augmentation Technologies (HAT) Lab under the supervision of Dr. Andrew J. Mason.

What does the Human Augmentation Technologies Lab do?

The overall mission of the Human Augmentation Technologies Lab, part of Michigan State University’s College of Engineering, is to bridge the gap between sensor technologies and high-impact biomedical research and environmental applications. Our research group has traditionally contributed to the design and fabrication of sensor systems featuring nanostructured biological and chemical interfaces, and to hardware-efficient real-time signal processing of sensor and sensor array responses. In recent years, we have focused on developing approaches to improve brain-machine interfaces and human-computer interfaces for biomedical and social applications.

What does your work involve?

My central research question is how we can best process sensor signals in real time to better understand human behaviors and how they affect social interaction. My research therefore employs real-time social signal processing and machine learning techniques to draw relationships between the collected sensor signals and the human behaviors of interest. This work involves collecting and processing a variety of sensor signals, including audio signals and physiological signals. The goal is to develop systems capable of understanding human behaviors in real time and in real-life scenarios, to improve awareness of human behavior and the quality of social interactions. Improving the quality of our social interactions improves our mental and physical health, positively impacting our lives.

How are you using iMotions, and what kind of biosensors do you use in your research?

For my research, we are using biosensors to better understand human emotions and how they change during social interactions. We are specifically using Galvanic Skin Response (GSR), photoplethysmography (PPG), and electroencephalography (EEG) sensors combined with microphones to collect data on physiological indicators of emotion. All these biosensors capture signals from physiological processes known to be affected by changes in emotional state. Thus, changes in the characteristics of these signals can be traced back to an emotional state.

iMotions helped me get started in my research because I did not have to design a sensor data collection infrastructure; I could start right away collecting the data needed to design my algorithms. In addition, iMotions’ onboarding training and consulting services helped me prepare to perform research in the area of human behavior.

Why is the use of biometric sensors important in your research?

Understanding the emotional state of an individual is important when trying to find ways to improve social interactions. Social interactions are highly complex. They are constructed from the social behaviors of two or more individuals. At the same time, our social behaviors are driven by internal and external stimuli. Internal stimuli come from our mind and include things like our emotions, moods, attitudes, and personality, whereas external stimuli include things related to our environment and the way in which we communicate with each other. We use biosensors to capture physiological signals linked to changes in our emotional states, providing a window into some of the internal stimuli that affect our social behaviors.

In one of our recent publications, we explored the relationship between audio signals and electrodermal activity (EDA) reactions, and analyzed a new approach for classifying skin conductance reactions elicited by emotional arousal, using speech signals as the triggering event. EDA reactions are captured using GSR sensors, whose measurements reflect changes in the electrical properties of the skin driven by sweat gland secretion. Sweat secretion, controlled by the sympathetic nervous system, increases as emotional arousal increases. Because EDA is considered a good indicator of emotional arousal, numerous works have used EDA signals to predict emotional states.

How do these tools help you to answer your research questions?

Biosensors help us capture changes in emotional state that we can then associate with events during a social interaction. We make this association with the help of microphones, which provide information on external stimuli, i.e., the communication dynamics observed during the interaction. By combining biosensors with microphones, we can extract the information needed to monitor elements of social interactions.

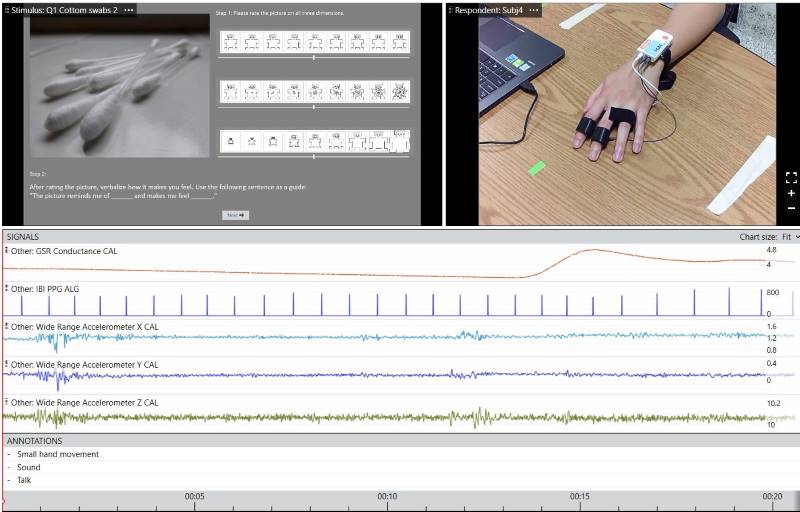

To determine how we can best process sensor signals in real time to better understand human behaviors and how they affect social interaction, we first designed our algorithms using pre-recorded data. In our recent publication, we used the iMotions software to simultaneously record audio signals and EDA signals. The EDA signals were obtained using the Shimmer3 GSR+ sensor device attached to the middle phalanx of the index and middle fingers on the non-dominant hand.

We recorded audio and EDA signals while individuals viewed different images and described aloud how each image made them feel. Analysis of the collected data suggested that speech could be used to decide which EDA reactions to consider when performing emotion recognition, which could help improve real-time emotion recognition algorithms.
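To make the idea of using speech to select EDA reactions more concrete, here is a minimal Python sketch. It is not the HAT Lab's published algorithm; it only illustrates the general principle under stated assumptions. SCRs are found with a simple trough-to-peak rule on an already-smoothed EDA trace in microsiemens, speech onsets are assumed to have been extracted from the audio beforehand (for example by a voice activity detector), and the 0.05 µS amplitude threshold and the 1 to 4 s latency window are illustrative choices, not values from the study. All function names (`detect_scrs`, `speech_triggered_scrs`, `synthetic_eda`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class SCR:
    """One skin conductance response (times in seconds, amplitude in microsiemens)."""
    onset_s: float
    peak_s: float
    amplitude_us: float


def detect_scrs(eda: Sequence[float], fs: float, min_amplitude_us: float = 0.05) -> List[SCR]:
    """Hypothetical trough-to-peak SCR detector for an already-smoothed EDA signal (uS).

    A trough is a sample that ends a fall or flat stretch and is followed by a rise;
    the response lasts until the signal stops rising. Small rises are ignored.
    """
    scrs: List[SCR] = []
    n = len(eda)
    i = 1
    while i < n - 1:
        if eda[i] <= eda[i - 1] and eda[i] < eda[i + 1]:
            trough = i
            peak = i + 1
            # Follow the rise until the next sample is lower than the current one.
            while peak < n - 1 and eda[peak + 1] >= eda[peak]:
                peak += 1
            amplitude = eda[peak] - eda[trough]
            if amplitude >= min_amplitude_us:
                scrs.append(SCR(trough / fs, peak / fs, amplitude))
            i = peak  # resume scanning from the end of this response
        else:
            i += 1
    return scrs


def speech_triggered_scrs(
    scrs: Sequence[SCR],
    speech_onsets_s: Sequence[float],
    latency_s: Tuple[float, float] = (1.0, 4.0),  # assumed window, for illustration
) -> List[SCR]:
    """Keep only SCRs whose onset falls within the latency window after some speech onset."""
    lo, hi = latency_s
    return [s for s in scrs if any(lo <= s.onset_s - t <= hi for t in speech_onsets_s)]


def synthetic_eda(fs: int, duration_s: float, rises: Sequence[Tuple[float, float]]) -> List[float]:
    """Toy EDA trace: flat 2.0 uS baseline plus a 2 s linear rise and 4 s linear
    recovery for each (onset_s, amplitude_us) pair."""
    n = int(duration_s * fs)
    eda = [2.0] * n
    rise, fall = 2 * fs, 4 * fs
    for onset_s, amp in rises:
        start = int(onset_s * fs)
        for k in range(1, rise + 1):
            if start + k < n:
                eda[start + k] += amp * k / rise
        for k in range(1, fall + 1):
            if start + rise + k < n:
                eda[start + rise + k] += amp * (1 - k / fall)
    return eda


if __name__ == "__main__":
    fs = 4  # samples per second (illustrative only)
    eda = synthetic_eda(fs, 30, [(6.0, 0.3), (20.0, 0.3)])
    all_scrs = detect_scrs(eda, fs)
    # Speech onset at 4.5 s, assumed to come from a separate voice activity detector.
    selected = speech_triggered_scrs(all_scrs, [4.5])
    print([(s.onset_s, s.peak_s) for s in selected])  # → [(6.0, 8.0)]
```

In this toy example both simulated responses are detected, but only the one starting 1.5 s after the speech onset is kept. Gating on speech in this way is one plausible way to discard reactions unrelated to what the person is saying; the features and classifier used in the actual publication are not reproduced here.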


Where do you see future research in your area?

I believe that with the increasing desire to understand human behaviors in natural environments, wearable sensors will become increasingly popular for collecting research data about our bodies and our environments. Wearable sensors capable of accurately detecting emotional states in real time could greatly increase their utility in healthcare and workplace interaction applications, among others. At the commercial level, wearable devices such as Fitbit and Amazon Halo [2] already measure stress levels using physiological signals and detect emotions using microphones, respectively, in natural environments. However, how can we best use this information to improve our social interactions with others? Answering this question will likely require significant future research.

Why did you choose iMotions?

I learned about iMotions while looking into sensor devices to use in my research and trying to figure out how to collect all the data of interest in a synchronized manner. In this process, I learned that iMotions software can synchronize video, audio, and biosensor signals in a user-friendly environment.

In addition, iMotions software makes it possible to connect devices beyond its standard supported biosensors through its Advance API module. This was attractive to me because I wanted the freedom to use any kind of sensor that could benefit my research, including custom sensors designed in our lab. Thus, iMotions offered the tools I needed to get my research started.

As speech and audio are examined both as stimuli and as a way to understand emotional responses, this method of coding speech-triggered events opens new possibilities for human behavior research.

iMotions has recognized the need to support audio stimuli for a multitude of applications. From iMotions 8.1.6 onward, you can import sound files as stimuli, optionally accompanied by an image. You can choose an image to be shown while the sound plays, or present the audio as the only stimulus.

We look forward to following future research from our clients at Michigan State University’s Human Augmentation Technologies Lab in wearable technology and biomedical research. Their findings could contribute to future innovations in machine learning and human-machine interaction.

References:

[1] GSR and Emotions: What Our Skin Can Tell Us About How We Feel

[2] https://www.bloomberg.com/news/newsletters/2020-08-31/amazon-s-halo-wearable-can-read-emotions-is-that-too-weird