Our visual attention and eye movements are shaped by the environment, influencing how we perceive and interact with the world. Explore the differences between screen-based and real-world eye-tracking studies, and learn how fixation patterns, depth cues, and gaze behavior impact perception, decision-making, and attention in natural and controlled settings.

Table of Contents

- Understanding Eye-Tracking Studies: Screen-Based vs. Real-Life Analysis

- How Do Natural Settings Differ For Eye Tracking?

- Screen-Based vs. Real-World Eye-Tracking: Key Differences

- What Drives Our Gaze Guidance? Bottom-Up vs. Top-Down Attention

- Goal-Oriented Fixations in Eye-Tracking Studies

- Conclusion: Generalizing Eye-Tracking Findings

- References

Understanding Eye-Tracking Studies: Screen-Based vs. Real-Life Analysis

In eye-tracking research, presenting stimuli on a screen is often more practical than studying real-world viewing behavior. While eye-tracking glasses allow researchers to conduct studies in natural environments, they require more resources and introduce complexities that screen-based setups do not. However, the choice between screen-based and real-life eye-tracking studies significantly influences visual attention and gaze behavior.

How Do Natural Settings Differ For Eye Tracking?

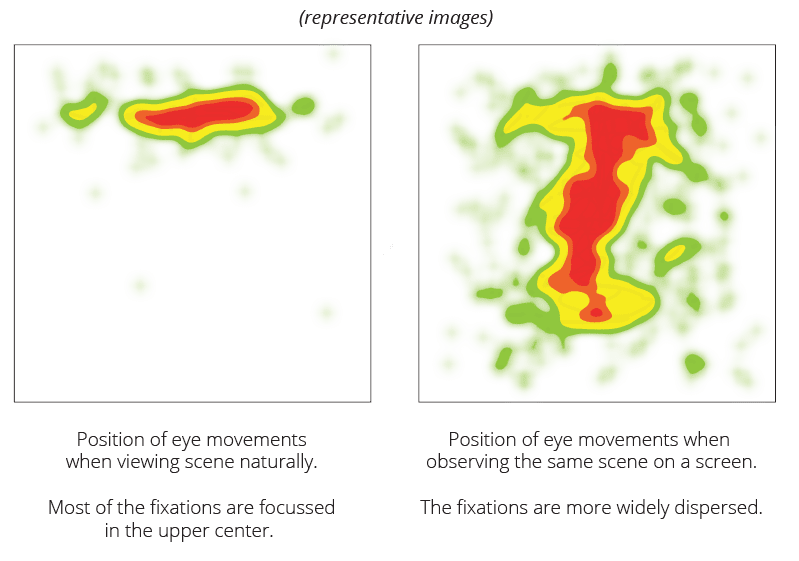

One prominent source of bias in screen-based eye-tracking studies is that viewers tend to look at the center of the screen regardless of the task or the distribution of features in the scene [1].

This may be a simple response to center the eye in its orbit, or the center may just be a convenient location from which to explore the scene efficiently. In contrast, a study using eye-tracking glasses in a real-life supermarket found that the tendency to center the eyes is much less robust [2].
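One simple way to put a number on central fixation bias is the average distance of fixations from the screen center. The sketch below is illustrative only and is not the method used in the cited studies: the function name `mean_center_distance`, the pixel-coordinate input, and the normalization by the half-diagonal are all assumptions made for this example.

```python
import math


def mean_center_distance(fixations, width, height):
    """Mean distance of fixations from the screen center, scaled to 0..1.

    fixations: list of (x, y) positions in pixels (hypothetical input format).
    Returns 0.0 when every fixation sits at the center and 1.0 when every
    fixation sits in a corner of the screen.
    """
    if not fixations:
        raise ValueError("at least one fixation is required")
    cx, cy = width / 2, height / 2
    half_diagonal = math.hypot(cx, cy)  # largest possible distance from center
    total = sum(math.hypot(x - cx, y - cy) for x, y in fixations)
    return total / (len(fixations) * half_diagonal)


# Example values are made up for illustration.
centered = [(960, 540), (970, 530), (950, 545)]
spread = [(100, 100), (1800, 200), (960, 1000)]
print(mean_center_distance(centered, 1920, 1080))
print(mean_center_distance(spread, 1920, 1080))
```

A lower score indicates that gaze clusters near the center. Comparing the score across tasks or setups gives a rough indication of how strong the bias is, although it does not separate a true central bias from scenes whose content happens to be centered.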

It has been shown that in natural viewing environments, saccade amplitudes (how far each saccade moves) are much greater than in screen-based, laboratory settings [3]. Similarly, it has also been found that large gaze shifts are more likely to be made with head movements in natural settings, in contrast to a screen-based setup [4].
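As a rough illustration of what saccade amplitude means in practice, the sketch below computes it as the straight-line distance between consecutive fixation positions. It assumes the positions are already expressed in degrees of visual angle and that each pair of consecutive fixations is separated by a single saccade; the function name `saccade_amplitudes` and the sample data are hypothetical.

```python
import math


def saccade_amplitudes(fixations):
    """Distances between consecutive fixations, in the units of the input.

    fixations: ordered list of (x, y) positions, assumed here to be in
    degrees of visual angle.
    """
    return [
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(fixations, fixations[1:])
    ]


# Made-up fixation sequence for illustration.
fixations_deg = [(0.0, 0.0), (3.0, 4.0), (3.0, 10.0)]
print(saccade_amplitudes(fixations_deg))  # [5.0, 6.0]
```

With head-mounted recordings, gaze positions are usually given relative to the head, so a comparison with screen-based data would also need to account for head movement, which this sketch ignores.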

A study that involved showing participants a video taken from the perspective of a person walking outdoors found that eye movement distributions from the laboratory setting only matched the real gaze patterns about 60% of the time.

When participants walked in the real world, they selected objects with head movements, and their eyes tended to stay fixated on a ‘heading point’ slightly above the center of the head-centered frame of reference. In contrast, when the same video sequence was shown on a screen, participants shifted their gaze more often to the edge of the visual field [5].

Real-world behaviors differ from screen-based setups in that the viewer is also an agent within the environment and can partly control the dynamics of the scene by moving around and interacting with objects [6].

Studies of eye movements during natural behavior show a strong link between behavioral goals and overt visual attention. For example, it has been shown that when people carry out real-life tasks, all fixations fall on task-relevant objects and the range of fixation durations is wider, reflecting the acquisition of information required for the task at hand [7].

This means that in natural tasks, attention serves the purpose of extracting information and coordinating motor actions. Screen-based setups, in contrast, rarely involve any active manipulation of objects [8], and attention may therefore be allocated differently.

In addition, when stimuli are displayed on a screen, many depth and motion cues are absent. Because the observer’s viewpoint in a still image is fixed, the stimulus context is limited, and the dynamic, task-driven nature of vision is not represented.

The size of the stimulus display has also been shown to impact information processing. As an example, a study that compared visual search on large and small shopping displays found that larger and more realistic displays tend to promote faster search times.

Even though a small screen display requires less time for complete coverage, large displays make better use of peripheral vision and are closer to physical reality [9]. Peripheral vision provides a basic overview of the scene and therefore also supports search behavior [10].

Screen-Based vs. Real-World Eye-Tracking: Key Differences

- Fixation Patterns – Real-world settings encourage fixations based on task relevance, while screens often promote central fixation bias.

- Peripheral Vision Usage – Large, realistic displays improve peripheral vision engagement, leading to faster and more natural visual search behavior.

- Depth & Motion Cues – Screen-based studies lack the depth perception and motion dynamics present in real-life environments.

- Behavioral Goals & Attention – In real-world tasks, visual attention is strongly guided by goal-oriented behavior, while screen-based studies limit interactive engagement.

What Drives Our Gaze Guidance? Bottom-Up vs. Top-Down Attention

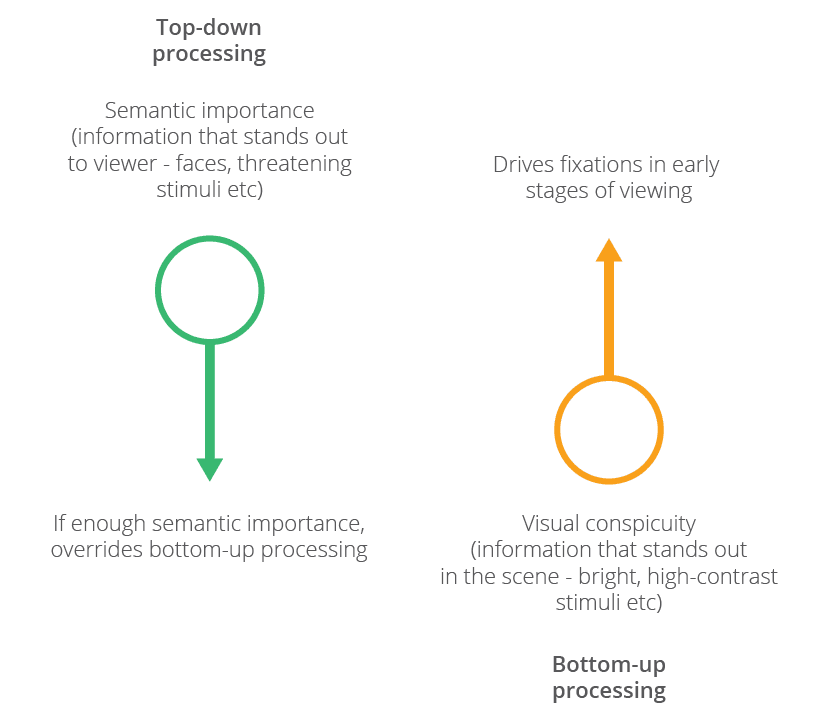

While some studies suggest that stimulus-driven, bottom-up attention dominates during the early phases of viewing [11] [12], other studies have shown that top-down cognitive processes guide fixation selection throughout the course of viewing.

For example, viewers’ initial fixations still tend to land on meaningful visual areas, such as faces, even when they are reduced in contrast and do not stand out [13]. Both visual conspicuity (how apparent or obvious something is) and semantic importance (how meaningful something is) play a crucial role in terms of where people look.

Beyond this, it has been shown that when the relationship between conspicuity and meaning is controlled, only the semantic importance accounts for the unique variation in attention [14]. This may suggest that the semantic importance – the top-down processing – is a stronger determinant of fixation selection.

Neuroscientific findings also support the notion that visual attention depends on the distribution of semantic content in a scene and, more specifically, that salience is encoded based on novelty and reward (the more novel and/or rewarding a stimulus is, the more likely it is to be attended to).

From that perspective, the two central factors that guide attention can be summarized as value considerations (reward) and uncertainty, or the need to acquire new information (novelty) [15].

Goal-Oriented Fixations in Eye-Tracking Studies

In eye-tracking studies that involve a search task, the viewer’s goal is to reduce spatial uncertainty, as the object of interest needs to be distinguished from other components of the scene (distractors). Similarly, in a choice task the goal is to reduce preference uncertainty [16].

Both conditions also entail some value considerations and imply that fixations are essentially goal-oriented. This also applies to free-viewing conditions where no task or goal is defined. In such situations, viewers choose their own internal agendas that guide their information intake [17].

It is especially evident in real-life environments and during natural behavior that there is an intimate link between where viewers look and the information they need for the immediate task goals [8]. For that reason, to understand the principles that underlie fixation selection, the eye movements must be considered in the context of behavioral goals.

Conclusion: Generalizing Eye-Tracking Findings

The setting of an eye-tracking study significantly influences visual attention patterns. To accurately interpret gaze behavior, researchers must account for factors such as stimulus presentation, task relevance, and real-world interaction. While screen-based eye-tracking provides controlled, repeatable conditions, real-life studies offer greater ecological validity, ensuring findings better reflect natural human vision and decision-making processes.

References

[1] Tatler, B. W. (2007). The central fixation bias in scene viewing: Selecting an optimal viewing position independently of motor biases and image feature distributions. Journal of vision, 7(14), 4-4.

[2] Gidlöf, K., Wallin, A., & Holmqvist, K. (2012). Central fixation bias in the real world?: evidence from the supermarket. In Scandinavian Workshop on Applied Eye Tracking, 2012.

[3] Land, M., Mennie, N., & Rusted, J. (1999). The roles of vision and eye movements in the control of activities of daily living. Perception, 28(11), 1311-1328.

[4] Stahl, J. S. (1999). Amplitude of human head movements associated with horizontal saccades. Experimental brain research, 126(1), 41-54.

[5] Foulsham, T., Walker, E., & Kingstone, A. (2011). The where, what and when of gaze allocation in the lab and the natural environment. Vision research, 51(17), 1920-1931.

[6] Smith, T. J., & Mital, P. K. (2013). Attentional synchrony and the influence of viewing task on gaze behavior in static and dynamic scenes. Journal of vision, 13(8), 16-16.

[7] Hayhoe, M. M., Shrivastava, A., Mruczek, R., & Pelz, J. B. (2003). Visual memory and motor planning in a natural task. Journal of vision, 3(1), 6-6.

[8] Tatler, B. W., Hayhoe, M. M., Land, M. F., & Ballard, D. H. (2011). Eye guidance in natural vision: Reinterpreting salience. Journal of vision, 11(5), 5-5.

[9] Tonkin, C., Duchowski, A. T., Kahue, J., Schiffgens, P., & Rischner, F. (2011, September). Eye tracking over small and large shopping displays. In Proceedings of the 1st international workshop on pervasive eye tracking & mobile eye-based interaction (pp. 49-52). ACM.

[10] Clement, J. (2007). Visual influence on in-store buying decisions: an eye-track experiment on the visual influence of packaging design. Journal of marketing management, 23(9-10), 917-928.

[11] Parkhurst, D., Law, K., & Niebur, E. (2002). Modeling the role of salience in the allocation of overt visual attention. Vision research, 42(1), 107-123.

[12] van Zoest, W., Donk, M., & Theeuwes, J. (2004). The role of stimulus-driven and goal-driven control in saccadic visual selection. Journal of Experimental Psychology: Human perception and performance, 30(4), 746.

[13] Nyström, M., & Holmqvist, K. (2008). Semantic override of low-level features in image viewing–both initially and overall. Journal of Eye Movement Research, 2(2).

[14] Henderson, J. M., & Hayes, T. R. (2017). Meaning-based guidance of attention in scenes as revealed by meaning maps. Nature Human Behaviour, 1(10), 743.

[15] Gottlieb, J., Hayhoe, M., Hikosaka, O., & Rangel, A. (2014). Attention, reward, and information seeking. Journal of Neuroscience, 34(46), 15497-15504.

[16] Wedel, M., & Pieters, R. (2008). A review of eye-tracking research in marketing. In Review of marketing research (pp. 123-147). Emerald Group Publishing Limited.

[17] Tatler, B. W., Baddeley, R. J., & Gilchrist, I. D. (2005). Visual correlates of fixation selection: Effects of scale and time. Vision research, 45(5), 643-659.

[18] Ballard, D. H., & Hayhoe, M. M. (2009). Modelling the role of task in the control of gaze. Visual cognition, 17(6-7), 1185-1204.
