The modern study of facial expressions started with a med-school dropout who traveled the world for most of his early twenties. After coming back to land, he wrote a book. While one reviewer at the time said that “some parts are a little tedious”, it laid the foundation for how we largely think about facial expressions today. That med-school dropout was Charles Darwin, who shaped the study of both evolution and emotion.

As Darwin wrote the book, he used engravings, illustrations, diagrams, and photographs to study the muscular movements of the face when displaying emotion. He claimed that the face could produce sixty distinct facial expressions that could be boiled down to just six emotions: anger, fear, surprise, disgust, happiness, and sadness [1].

Much has changed in the study of facial expressions since the time of Darwin. While his core concepts still inform how we think about the relationship between the face and emotions, this understanding has become more nuanced, and the methods more advanced. Rather than relating facial expressions directly to emotions, we now know that the relationship can be more subtle and complicated [2]. And rather than illustrations and diagrams, we use video recordings that can be automatically analyzed.

The iMotions Facial Expression Analysis module has been built to make the analysis of facial expressions both impactful and streamlined. Facial expressions are automatically analyzed from video, either live or recorded. Results are then calculated, giving you an understanding of the facial movements and how they relate to emotional expressions. Below, we walk through the functions that iMotions offers to support your research.

FEA data collection: live or pre-recorded data

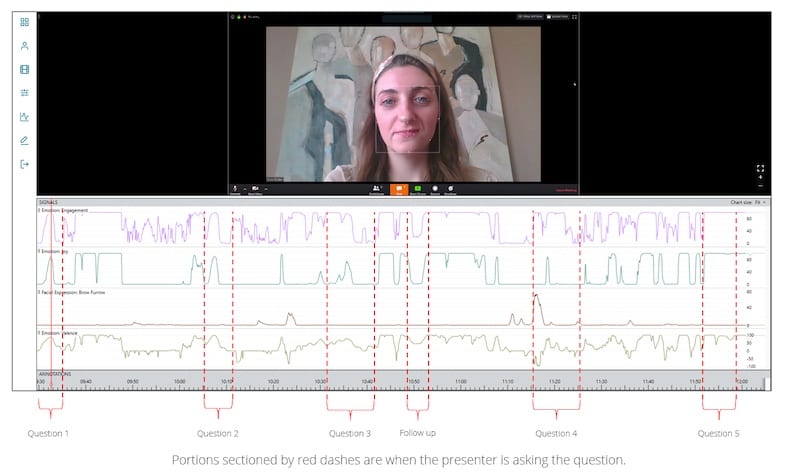

Facial expression analysis can be carried out virtually anywhere: all that’s needed is a video feed or a video recording. You can bring participants to the lab, or you can gather videos that are recorded externally. It’s possible to use recordings from TV interviews, livestreams, or self-recorded footage, or any other source in which facial expressions are shown. This flexibility also means that multiple data collections can happen simultaneously, since recordings need not be limited to one person at a time sitting at a data collection computer. Post-processing can then be queued to run in the background, so you’re never slowed down and can instead focus on understanding the data.

Read more: How Facial Expressions Analysis can be done remotely

Real-world behavioral science

In addition to post-processing any previous recording, it’s also possible to analyze data live as it comes in from mobile devices, such as phones or tablets. Live analysis can be easily coupled with other biosensors, opening up a wealth of opportunities for understanding interaction on the fly, and with timeline annotations and LiveMarkers for advanced behavioral coding.

Data export and visualization capabilities

iMotions allows data to be exported easily to a range of different formats for further analysis if required. Annotation and markup of the data allow specific segments to be selected and exported as desired.

iMotions allows you to choose exactly which sensor data (or combination of sensor data) to export, meaning that you can focus on what you need. The wide range of data that’s collected can remain in iMotions if it’s needed later, or for exporting again.
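To make the idea of exporting only selected data for an annotated segment concrete, here is a minimal sketch of the same selection done on an exported file after the fact. The CSV layout, the column names (`Timestamp`, `Annotation`, `Joy`, `Anger`, `GSR`), and the values are all invented for illustration and are not the actual iMotions export format.

```python
import csv
import io

# Hypothetical export: column names and values are invented for illustration
# and do not reflect the actual iMotions export layout.
SAMPLE_EXPORT = """Timestamp,Annotation,Joy,Anger,GSR
0,baseline,1.2,0.3,0.51
33,baseline,1.5,0.2,0.52
66,ad_clip,42.0,0.4,0.60
100,ad_clip,55.5,0.1,0.63
133,,3.0,0.2,0.55
"""


def select_segment(csv_text, annotation, columns):
    """Return rows tagged with `annotation`, keeping only `columns`."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {name: row[name] for name in columns}
        for row in reader
        if row["Annotation"] == annotation
    ]


# Keep only the timestamp and one expression measure for one annotated segment.
rows = select_segment(SAMPLE_EXPORT, "ad_clip", ["Timestamp", "Joy"])
for row in rows:
    print(row)
```

With a real export, the same pattern would apply once the sketch’s column names are replaced by those actually present in the file.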

iMotions: A complete experimental platform

Almost every step in the experimental process can be carried out in iMotions – from experiment design (stimuli order, participant details, grouping), to stimuli presentation, to data collection, processing (or just post-processing), and data export.

There is therefore no need for separate software to run stimulus presentation, face tracking, or face coding, or to keep track of which participant is in which group. This makes experiments streamlined and easy to run.

20+ facial expression measures

The facial expression analysis module provides 20+ facial expression measures (otherwise known as action units), data regarding 7 core emotions (joy, anger, fear, disgust, contempt, sadness, and surprise), facial landmarks, and behavioral indices such as head orientation, rotation, and even the level of participant attention.

The sensitivity of the measures can also be altered depending on the experimental context, giving you full control over the data collection process. Insight into emotions can be a challenging pursuit, but facial expression analysis with iMotions brings you as close to this as possible.
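One way to picture what changing sensitivity does is as a detection threshold applied to per-frame scores. The sketch below assumes that interpretation, along with a 0 to 100 score scale and invented values. It is an illustration of the general idea, not a description of how iMotions implements the setting.

```python
def detect_frames(scores, threshold):
    """Return indices of frames whose score meets or exceeds the threshold."""
    return [i for i, score in enumerate(scores) if score >= threshold]


# Invented per-frame scores for one expression on an assumed 0-100 scale.
joy_scores = [2.0, 12.5, 48.0, 51.0, 75.5, 30.0, 8.0]

# A higher threshold is less sensitive: fewer frames count as showing joy.
strict = detect_frames(joy_scores, threshold=50.0)
# A lower threshold is more sensitive: weaker expressions are also counted.
lenient = detect_frames(joy_scores, threshold=25.0)

print(strict)
print(lenient)
```

A stricter threshold trades missed subtle expressions for fewer false detections, so the right value depends on the experimental context.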

Check out: Facial Action Coding System (FACS) – A Visual Guidebook

Multi-modality integrations

iMotions excels at integrations – allowing the connection of multiple, different biosensors in order to create a deeper analysis of human behavior. Biosensors such as eye trackers (screen-based, glasses, and in VR), EEG, EDA, ECG, and EMG (among others) can be seamlessly included in the experiment.

These sensors complement facial expression data with information about attentional processes, cognitive changes, and physiological arousal levels, none of which is available when considering facial expression data alone.

It’s also possible to connect a variety of other sensors that aren’t natively integrated by using the Lab Streaming Layer (LSL) protocol. This allows data from other sensors to be sent into iMotions and synchronized with other data sources (see the list of LSL-supported devices and tools).

Disclaimer: Make sure you inspect the data quality when connecting via third-party LSL applications before running your final study. Some LSL apps may not have been recently tested and may contain bugs that need fixing.
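Synchronizing data sources matters because sensors rarely sample at the same rate. As a minimal sketch of the concept, and assuming both streams already carry timestamps on a shared clock, the example below pairs each facial expression sample with the nearest sample from a slower stream. The data, the stream names, and the nearest-neighbor strategy are assumptions chosen for illustration. This is not how iMotions or LSL performs synchronization internally.

```python
from bisect import bisect_left


def nearest_sample(timestamps, values, t):
    """Return the value whose timestamp is closest to t (timestamps sorted)."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return values[0]
    if i == len(timestamps):
        return values[-1]
    before, after = timestamps[i - 1], timestamps[i]
    # Ties go to the earlier sample.
    return values[i] if after - t < t - before else values[i - 1]


# Invented data: facial scores at roughly 30 Hz, skin conductance at 10 Hz,
# with timestamps in milliseconds on an assumed shared clock.
face_t = [0, 33, 66, 100, 133]
face_joy = [1.0, 2.0, 40.0, 55.0, 3.0]
eda_t = [0, 100, 200]
eda_v = [0.50, 0.62, 0.58]

# One row per facial sample: (timestamp, joy score, nearest EDA value).
aligned = [
    (t, joy, nearest_sample(eda_t, eda_v, t))
    for t, joy in zip(face_t, face_joy)
]
for row in aligned:
    print(row)
```

Nearest-sample matching is the simplest option. Interpolation or resampling to a common rate are alternatives when the slower signal changes quickly.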

Beyond this, it’s also possible to use our open API to connect essentially any other data stream. This can be used, for example, to build brain-computer interfaces or biofeedback setups. Virtually any data-producing device can be connected to iMotions, creating new research possibilities.

Read more: What is an API and how does it work?

Facial expression analysis has a surprisingly long history, yet has only recently stepped into the modern era. The facial expression analysis module from iMotions helps you carry out research at the forefront of these changes – to assess emotions like never before.

References

[1] Darwin, C. (1872). The Expression of the Emotions in Man and Animals. London: J. Murray.

[2] Russell, J. A., & Barrett, L. F. (1999). Core affect, prototypical emotional episodes, and other things called emotion: Dissecting the elephant. Journal of Personality and Social Psychology, 76(5), 805-819.