What is New

iMotions Lab 11

iMotions 11 is packed with new features and optimizations that give you the edge in your research. It brings significant improvements to features such as Advanced Surveys and Auto AOI, along with brand-new features like the Data Visualization Dashboard.

On top of this, we have added a number of new hardware integrations, expanding the possibilities for your research even further.

Watch iMotions’ VP of Engineering Jacob Ulmert present highlights from iMotions 11.

New Features in iMotions Lab 11

fNIRS Module

We partnered with Artinis to bring you top-quality fNIRS. You can now record fNIRS data and export it in SNIRF format.

Data Visualization Dashboard

This analysis tool enhances your data visualization capabilities. You can create multiple types of visualizations (bar, scatter, and circumplex plots) and customize their appearance. You can import other multimodal summary metrics or aggregated AOI metrics and select which specific intervals or AOIs you want to compare. To learn more, check out the Help Center Article!

Surveys

Our surveys have gotten smarter, too. Improved skip logic, rich styling, and AOI creation are all built in for advanced survey questions. You can add images or videos, even for remote data collection, and exports clearly tag every stimulus for easier analysis.

Areas of interest are automatically added to track survey questions. This makes it easy to use eye tracking with surveys without having to manually draw where each question is located.

Webcam Respiration

Since iMotions 10, we have added a brand-new module that allows contact-free breathing measurements in remote (online) research.

Find out more about the Webcam respiration module.

R Notebooks

R Notebooks translate hardware signals into the metrics you use for your research. As technology advances and research evolves, we add metrics so that our Notebooks remain relevant for the numerous fields of research we support. The following metrics for facial expression, voice analysis and ECG have been added.

- Facial Expression Analysis: Speaking, Adaptive Engagement, and Adaptive Valence.

- Voice Analysis: Average Anger, Happiness, Neutrality, Sadness, Activation, Dominance, and Valence.

- ECG:

  - Photoplethysmography (PPG, only for Plux). PPG allows you to use light to measure changes in blood volume and vasculature. For more information about PPG, visit our blog.

  - The Missed Peaks Count metric gives you a better idea of the quality of your data.

- Blink support added for HTC Focus Vision and Meta Quest Pro

R Notebooks now also process significantly faster for a number of metrics, as a result of more efficient calculations.

Accelerometry Notebook

A new Accelerometry Notebook detects movement. The notebook supports Shimmer, Neon eye tracking glasses, and Pupil Invisible glasses. The tri-axial accelerometry signals are filtered and thresholded to detect when movement is occurring. This is great for understanding anomalies in your data or determining precisely when a movement occurred.
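To make the filter-and-threshold idea concrete, here is a minimal Python sketch. It is not the algorithm used by the iMotions notebook: the moving-average filter, the median baseline, the unit (g), and the `window` and `threshold` values are all assumptions chosen for illustration.

```python
import math


def detect_movement(samples, window=5, threshold=0.15):
    """Flag movement per sample from tri-axial accelerometer readings.

    samples   : list of (x, y, z) tuples, assumed to be in g
    window    : moving-average length in samples (assumed value)
    threshold : smoothed deviation, in g, above which a sample is
                flagged as movement (assumed value)
    """
    if not samples:
        return []

    # 1. Collapse the three axes into one orientation-independent magnitude.
    magnitude = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]

    # 2. Remove the static component (mostly gravity) using the median
    #    magnitude as a baseline, and keep the absolute deviation.
    baseline = sorted(magnitude)[len(magnitude) // 2]
    deviation = [abs(m - baseline) for m in magnitude]

    # 3. Filter: smooth the deviation with a trailing moving average.
    smoothed = []
    for i in range(len(deviation)):
        chunk = deviation[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))

    # 4. Threshold: a sample counts as movement when the smoothed
    #    deviation exceeds the threshold.
    return [value > threshold for value in smoothed]


# Synthetic recording: still, a burst of movement, then still again.
still = [(0.0, 0.0, 1.0)] * 20
burst = [(0.5, 0.5, 1.5)] * 10
flags = detect_movement(still + burst + still)
```

The resulting list of booleans lines up with the input samples, so the first and last flagged indices give an approximate onset and offset for the movement.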

Multi-face tracking and emotion analysis processing with Affectiva

With the new Facial Expression add-on it is now possible to easily track and analyze multiple faces in videos. This is ideal for video conferences and calls.

Multi-stimulus Replay

The new multi-stimulus replay timeline lets you visualize a respondent’s full exposure, including signals, annotations, and events, in one continuous flow.

Updates to video workflows

Video Segment Detection

Automatically segment videos based on scene detection, and use a built-in vision language model to generate scene descriptions.

Face blurring

When conducting studies that include a scene recording, such as eye tracking glasses studies, you often need to anonymize the people in the recording. With the new face blurring feature you can now do this completely automatically, saving you countless hours and ensuring proper handling of privacy.

Auto AOI

Although this feature was introduced in iMotions 10, many significant updates have been introduced since then, including support for ellipse and polygon dynamic AOIs, support for static stimuli, and general improvements.

Aggregated Multimodal Exports

You can combine data from all study participants and all notebook metrics into one export file. This data is in a “long” format, making it easy to use with filters, pivot tables, and other analysis tools.
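As an illustration of why a long format works well with filters and pivot tables, the Python sketch below pivots and averages a small table of that shape. The column names (`respondent`, `stimulus`, `metric`, `value`) and the sample rows are hypothetical and do not reflect the actual layout of the iMotions export file.

```python
import csv
import io
from collections import defaultdict

# Hypothetical long-format data: one row per respondent/stimulus/metric.
LONG_CSV = """respondent,stimulus,metric,value
R01,Ad_A,GSR Peak Count,4
R01,Ad_A,Mean Heart Rate,72.5
R02,Ad_A,GSR Peak Count,6
R02,Ad_A,Mean Heart Rate,80.0
"""


def pivot_long_to_wide(csv_text):
    """Pivot long rows into {(respondent, stimulus): {metric: value}}."""
    wide = defaultdict(dict)
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = (row["respondent"], row["stimulus"])
        wide[key][row["metric"]] = float(row["value"])
    return dict(wide)


def mean_by_metric(csv_text):
    """Average each metric across all rows (a simple filter + aggregate)."""
    values = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        values[row["metric"]].append(float(row["value"]))
    return {metric: sum(v) / len(v) for metric, v in values.items()}


wide = pivot_long_to_wide(LONG_CSV)
means = mean_by_metric(LONG_CSV)
```

Because every row carries its own respondent, stimulus, and metric labels, adding a new metric or participant only adds rows, so the same pivot and aggregation code keeps working unchanged.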

UI and Usability Improvements

- Search for your study by name.

- Switch between EU and non-EU AssemblyAI endpoints.

- Dynamic device information over the iMotions API.

- Improved context menus.

And much more!

Other

We’ve also expanded analysis features. Gaze mapping now includes mouse-event tracking and exportable calibration results.

Studies exported from iMotions Online can now be imported.

For detailed release notes on all releases see our release page.

Release notes for Remote Data Collection module and iMotions Online can be found here.

New Hardware Integrations in iMotions Lab 11

We always strive to give you access to the best hardware on the market for human behavior research. With iMotions 11 we have added a number of new devices that will complement your research. In addition, we continuously maintain and update our existing integrations to bring you the latest features and metrics from your device straight into iMotions Lab.

We now support Smart Eye Pro v12 with new drowsiness and profile-ID signals, plus raw-video sampling for Smart Eye AI-X and Aurora.

Pupil Labs Neon gets full blink, fixation, and eye-state imports. And we’ve added support for Viewpointsystem 3.0 and Biosignalsplux respiBAN, so running multimodal studies is easier than ever.

iMotions now supports hardware from over 20 partners, and don’t forget that you can also connect any device that supports LSL (Lab Streaming Layer).

Neurable MW75 Neuro (EEG)

With the Neurable MW75 Research Kit you not only get a great pair of headphones, you also get a unique, high-quality EEG headset in the form factor of over-ear headphones. We partnered with Neurable to bring their EEG data directly into iMotions Lab, allowing you to conduct completely new types of research.

Plux Blood Volume Pulse

The biosignalsplux Blood Volume Pulse (BVP) sensor is an optical, non-invasive sensor that measures changes in arterial translucency using the light emitter and detector built into its finger-clip housing.

Plux respiBAN

Plux respiBAN gives you mobility and durability in your respiration research at an attractive price point.

Artinis fNIRS

It is now possible to use fNIRS devices with iMotions Lab. We partnered with Artinis to bring you top-quality fNIRS devices.

New VR Device Support

iMotions Lab now supports Meta Quest Pro and HTC Vive Focus via a Unity Plugin.

iMotions Lab in Action

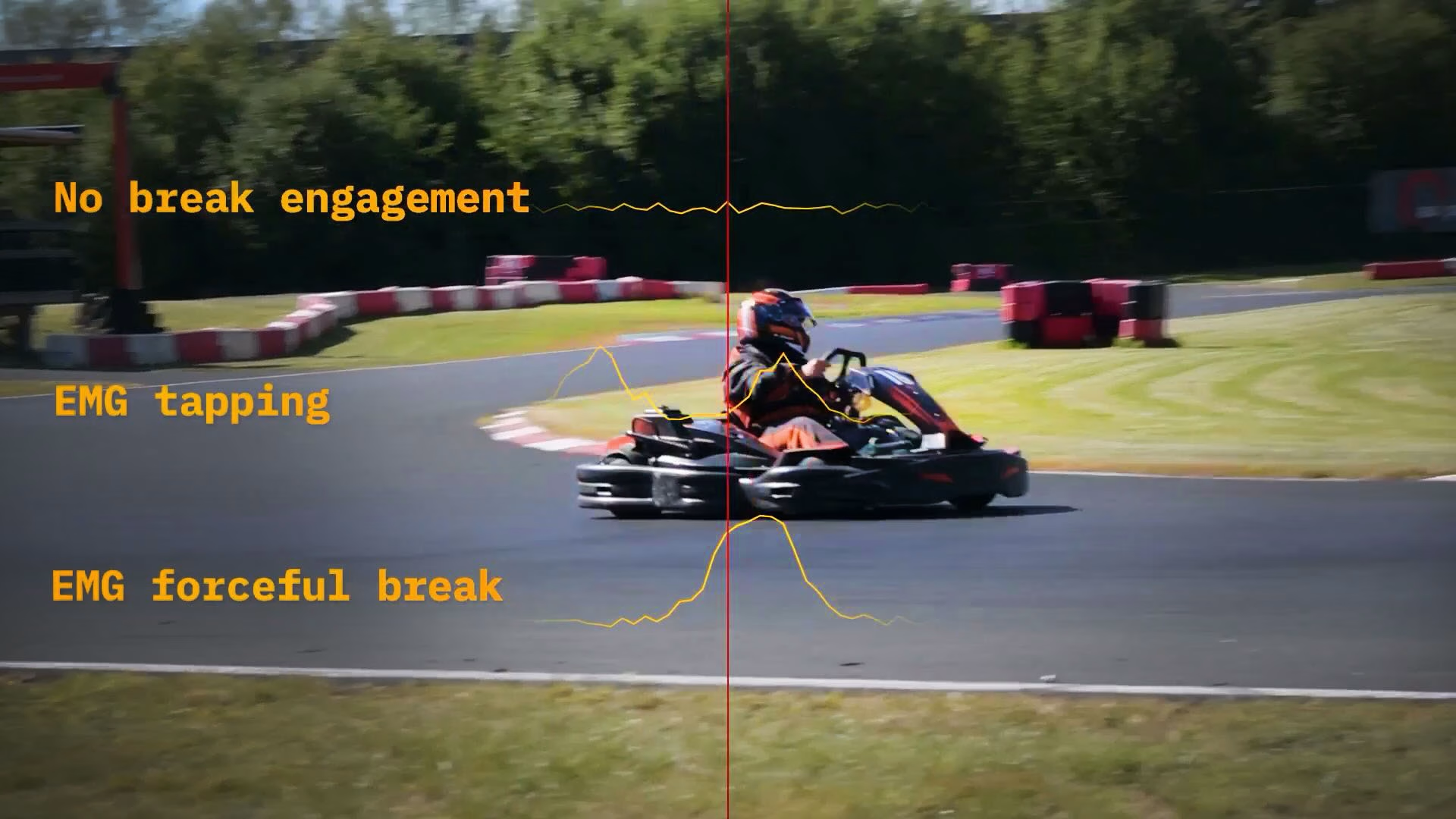

GoKart Racing: Research Outside The Lab

We took eye-tracking glasses, EMG sensors, a respiration belt, and ECG monitoring to the go-kart track to measure driver attention, stress, arousal, and braking behavior in a real driving scenario.

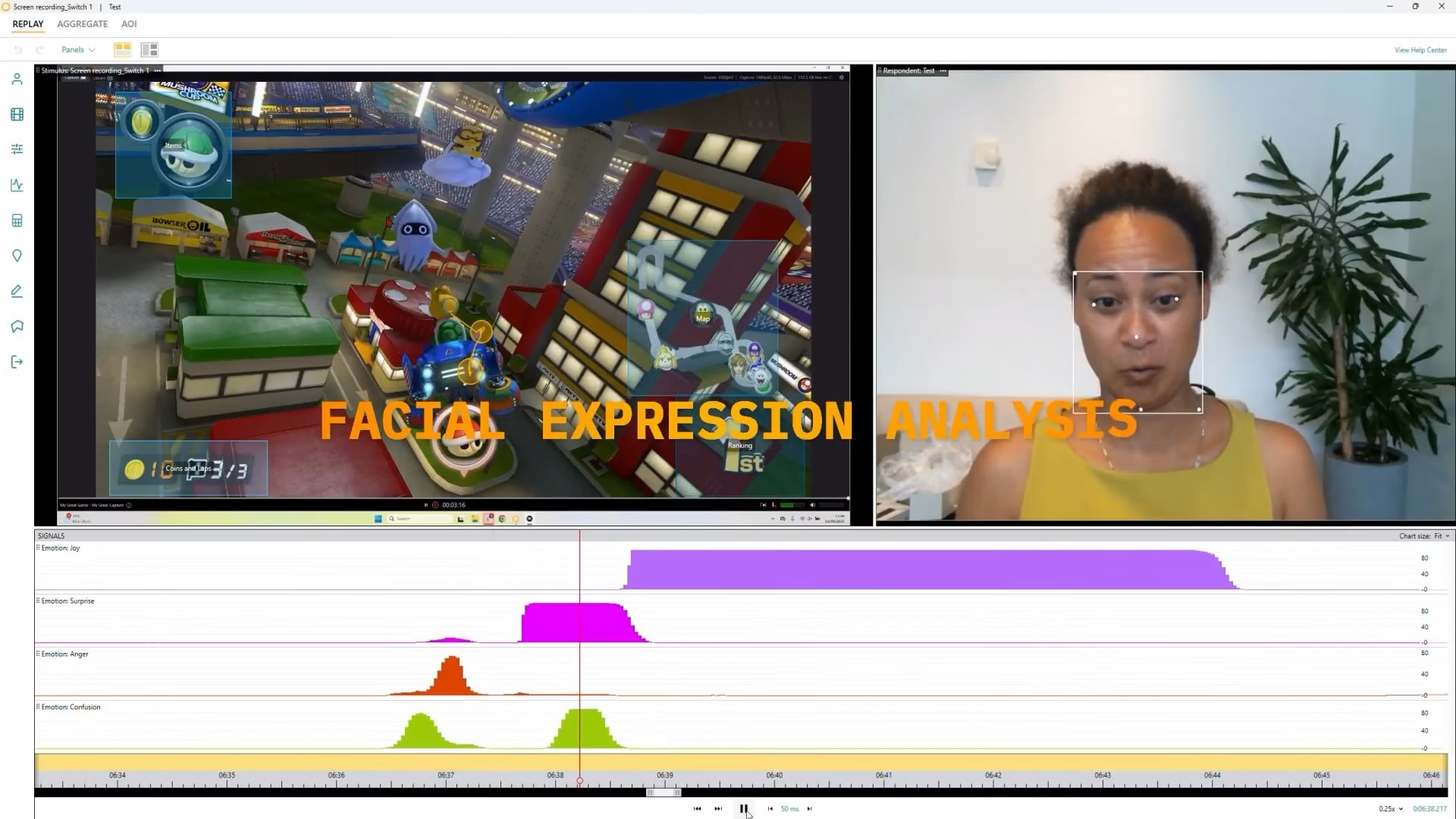

Mario Kart: More sensors, more insights

A more complex multimodal analysis of engagement around crash events, using ECG, respiration, screen-based eye tracking, voice analysis, and facial expression analysis (FEA).

Mario Kart: From data to insights

Look at engagement through facial expression analysis and blinks.

Ready to upgrade?

iMotions 11 is a free update for all users with an active license. Head over to my.imotions.com and grab your copy now.