So, what is an API? An API (Application Programming Interface) is essentially a gateway that allows one piece of software to talk to another, and it also defines how that conversation takes place. This lets a programmer write code that asks existing software to carry out certain actions, without having to write that software from scratch.

For example, think of a mobile app that uses your phone’s camera. The creators of the app didn’t have to program how the camera takes images, or how those images are represented on your phone; they just used an API that can access the pre-existing framework.

In the abstract, it works a bit like a power socket: it’s a standardized way for a device to access and use pre-existing infrastructure. Your hairdryer doesn’t need a portable generator to work; you just plug it in. In the same way, a developer can “plug” their software into existing software.

An API in essence provides the key and the map for how other software can be accessed. This is obviously good for reducing the amount of programming work needed to carry out certain actions – you don’t need to reinvent the wheel every time – but also for protecting the software being communicated with.

Because an API limits the actions that one piece of software can request from another to predefined behaviors, it reduces the risk of something malicious being carried out (a request for the software to reveal passwords, for example).
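To make the idea of predefined behaviors concrete, here is a minimal sketch in Python. The `CameraService` class and its action names are hypothetical, invented purely for illustration; they do not come from any real camera framework. The point is only that outside code can ask for the actions on the list, and anything else is refused.

```python
class CameraService:
    """Hypothetical stand-in for software that exposes an API."""

    def __init__(self):
        # Internal state that outside code should never reach directly.
        self._secrets = {"password": "not-for-callers"}
        # The API: the only actions that outside code may request.
        self._actions = {
            "take_photo": self._take_photo,
            "get_resolution": self._get_resolution,
        }

    def _take_photo(self):
        return "photo.jpg"

    def _get_resolution(self):
        return (1920, 1080)

    def request(self, action):
        """Run a predefined action and refuse anything else."""
        if action not in self._actions:
            raise PermissionError(f"'{action}' is not part of the API")
        return self._actions[action]()


camera = CameraService()
print(camera.request("take_photo"))  # photo.jpg

try:
    camera.request("reveal_passwords")
except PermissionError as error:
    print(error)  # 'reveal_passwords' is not part of the API
```

The app calling `request` never needs to know how a photo is taken; it only needs to know which actions exist.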

This is all explained in further detail below, but first:

What does this have to do with human behavior research?

The vast majority of contemporary human behavior research uses software in some form. Because an API can extend what software does, understanding why and how this is done helps researchers make the most of the software they already use.

For example, iMotions doesn’t automatically collect data from devices that it doesn’t have an integration with, such as a car or a simulation device. It can, however, receive and react to data from them through its API.

This means that it’s possible to measure, for example, how an individual’s GSR (galvanic skin response) changes in response to known car speeds, or how the movement of a simulator affects visual behavior as measured through eye tracking. These are just some examples, but there are abundant possibilities.

But how does an API actually work?

APIs unlock a door to software (or web-based data), in a way that is controlled and safe for the program. Code can then be written that sends requests to the receiving software, which returns data in response.

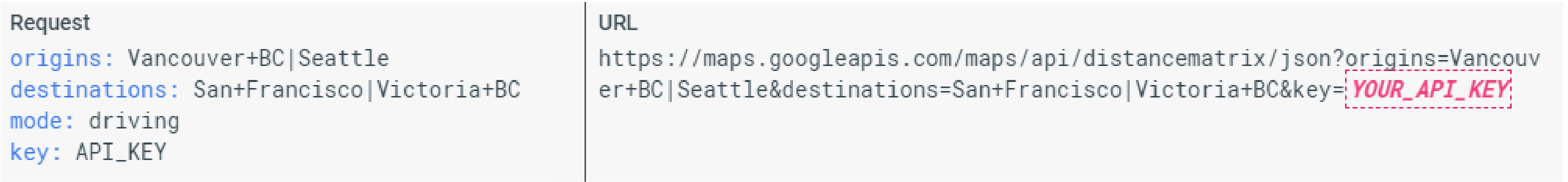

A clear example of this in action is the Google Maps API. Developers first have to sign up to receive an API key; once they have it, their website can retrieve information from Google Maps.

There is a predefined list of requests that the user can enter. In the Google Maps example below, the origin is listed to the left, and is entered in the URL to the right. Once the URL is used on a web page (or entered into a web browser), Google processes the request and returns the desired values, in this case driving directions from Vancouver to San Francisco.

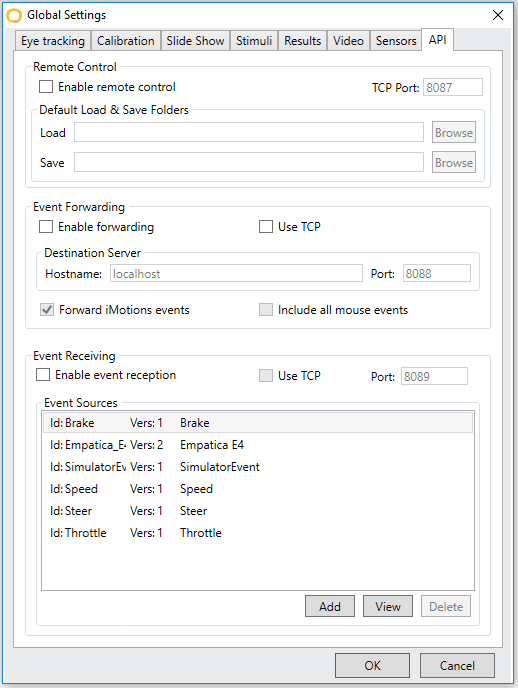
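As a rough sketch of how such a request URL is put together, the Python snippet below builds one for the Google Maps Directions web service using its `origin`, `destination`, and `key` parameters. `YOUR_API_KEY` is a placeholder, and the helper name `build_directions_url` is our own. The snippet only constructs the URL and does not send it; check Google’s current documentation before relying on the endpoint.

```python
from urllib.parse import urlencode

# Base URL of the Google Maps Directions web service (JSON output).
BASE_URL = "https://maps.googleapis.com/maps/api/directions/json"


def build_directions_url(origin, destination, api_key):
    """Return a request URL asking for directions between two places."""
    params = {
        "origin": origin,
        "destination": destination,
        "key": api_key,
    }
    # urlencode escapes spaces and other special characters.
    return f"{BASE_URL}?{urlencode(params)}"


url = build_directions_url("Vancouver", "San Francisco", "YOUR_API_KEY")
print(url)
```

With a valid key in place of the placeholder, pasting the printed URL into a browser would return the route as JSON text.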

Another example with iMotions is shown below, in which the API can be enabled (or disabled of course). This is shown through a GUI (graphical user interface), which allows the user to choose which device can send or receive information, and where that information should go, or where it should be saved.

Making the most of the API in iMotions

As long as data can be sent out digitally from a device, it can in all likelihood be integrated into iMotions alongside other data streams. The API is agnostic to the programming language, and therefore to the device: as long as the device can send data (through UDP or TCP), iMotions can receive it.
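To give a feel for what “sending data through UDP” looks like, here is a minimal Python sketch. The semicolon-separated message layout, the `CarSimulator` name, and the two readings are all made up for the example; they are not the message format that iMotions expects, which is described in the iMotions API documentation. A stand-in receiver on the same machine takes the place of iMotions so that the sketch runs on its own.

```python
import socket


def format_sample(source, values):
    """Join a source name and its readings into one line of text.

    This layout is a made-up example, not the iMotions message format.
    """
    fields = [source] + [str(value) for value in values]
    return ";".join(fields) + "\r\n"


def send_sample(host, port, source, values):
    """Send one sample as a single UDP datagram."""
    message = format_sample(source, values).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


# Stand-in receiver on this machine, playing the role of the listener.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
receiver.settimeout(2.0)
port = receiver.getsockname()[1]

# A hypothetical car simulator reporting speed and steering angle.
send_sample("127.0.0.1", port, "CarSimulator", [88.5, 0.12])

data, _ = receiver.recvfrom(1024)
receiver.close()
print(data.decode("utf-8").strip())  # CarSimulator;88.5;0.12
```

Because the sender only needs a network socket, the same idea carries over to any language or device that can open one.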

This greatly expands the possibilities for human behavior research, as previously distinct data, from previously distinct environments, can be examined in new ways. Situations that were once confined to the lab can now be studied in the wild (and vice versa).

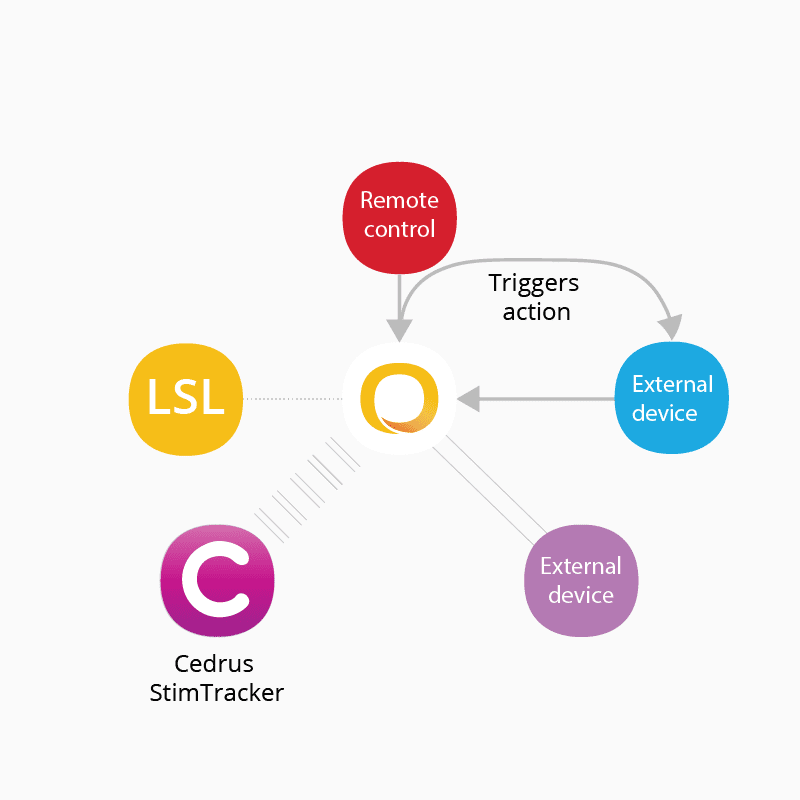

It’s possible to carry out a range of actions with the use of the API and iMotions, some of which are illustrated below.

Data can be forwarded from iMotions, meaning that real-time analysis, customized visualizations, or other processes can be carried out alongside data collection (depending on where the data is being sent, of course).
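As an illustration of what a simple real-time analysis on forwarded data might look like, the Python sketch below smooths incoming GSR values with a rolling mean. The `timestamp;value` line layout is hypothetical, and the `incoming` list stands in for lines that would arrive over a network connection during data collection.

```python
from collections import deque


class RollingMean:
    """Mean of the most recent `size` values."""

    def __init__(self, size):
        # A deque with maxlen drops the oldest value automatically.
        self._window = deque(maxlen=size)

    def update(self, value):
        """Add a new value and return the mean of the current window."""
        self._window.append(value)
        return sum(self._window) / len(self._window)


def parse_gsr(line):
    """Extract the GSR value from a 'timestamp;value' line.

    This two-field layout is hypothetical, chosen for the example.
    """
    _timestamp, value = line.strip().split(";")
    return float(value)


# Stand-in for lines arriving from a socket during data collection.
incoming = ["0;1.0", "10;2.0", "20;3.0", "30;4.0"]

smoother = RollingMean(size=3)
means = [smoother.update(parse_gsr(line)) for line in incoming]
print(means)  # [1.0, 1.5, 2.0, 3.0]
```

The same loop could just as easily feed a live plot or a custom visualization instead of printing.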

In addition to simply synchronizing the data between disparate devices, it’s also possible to use the data to trigger events in iMotions, and even loop the data back to the original device.

For example, commands can be built that tell iMotions to present a new stimulus once a certain facial expression has been exhibited, a number of GSR peaks have been detected, or a participant has looked in a certain direction.
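The GSR case can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: a “peak” here is just an upward crossing of a fixed threshold, which is far cruder than real GSR peak detection, and `"NEXT_STIMULUS"` is a placeholder string rather than an actual iMotions command.

```python
def count_peaks(signal, threshold):
    """Count upward crossings of `threshold` in a list of readings."""
    peaks = 0
    above = False
    for value in signal:
        # A new peak starts when the signal rises above the threshold.
        if value > threshold and not above:
            peaks += 1
        above = value > threshold
    return peaks


def next_command(signal, threshold, peaks_needed):
    """Return a command once enough peaks have occurred, else None.

    "NEXT_STIMULUS" is a placeholder, not a real iMotions command.
    """
    if count_peaks(signal, threshold) >= peaks_needed:
        return "NEXT_STIMULUS"
    return None


gsr = [0.2, 0.9, 0.3, 0.4, 1.1, 1.2, 0.1, 0.8]
print(count_peaks(gsr, threshold=0.7))  # 3
print(next_command(gsr, threshold=0.7, peaks_needed=3))  # NEXT_STIMULUS
print(next_command(gsr[:4], threshold=0.7, peaks_needed=3))  # None
```

In a live setup, the rule would be re-evaluated as each new reading arrives, and the resulting command would be sent on to iMotions.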

As iMotions responds with the next part of the experiment (a stimulus, or instructions for example), it also continuously feeds the information back to the connected device (or software), which can then continue to tell iMotions how to respond. With just a little technical know-how, there is a huge amount of flexibility that can be readily explored with APIs in human behavior research.
