Virtual Reality (VR) is transforming research across fields like healthcare, education, and design by offering immersive environments to study human behavior, emotions, and performance. This detailed guide explores VR’s history, hardware, software, applications, and integration with biosensors, emphasizing its role in enhancing user engagement, realism, and research methodologies.


Introduction

Virtual Reality (VR) denotes an immersive computer-generated environment accessed through specialized devices such as VR headsets, enabling users to engage with and explore lifelike digital surroundings. VR can be experienced through computer monitors or head-mounted displays (HMDs). While this guide focuses on the latter, many of the concepts apply to both mediums.

Although the roots of virtual reality trace back to the 1950s, significant progress has occurred over the decades. In the mid-1950s, Morton Heilig introduced the Sensorama, an early attempt at a multisensory cinematic experience. In the 1960s, computer scientists Ivan Sutherland and David Evans developed the first head-mounted display (HMD), a rudimentary precursor to modern VR headsets.

The integration of VR into the gaming industry began in the 1980s, but it was the critical technological advancements in the 2010s that catapulted VR into mainstream markets. Over the intervening 30 years, VR gained traction in academia, notably in military applications, eventually expanding into diverse research domains. Today, VR plays a crucial role in fields such as healthcare, architecture, professional training, and education.

This document covers the application of VR in research, including use cases, hardware, software, and data analysis. We explore recent advances in virtual reality and how they strengthen research methodologies that employ VR technology. To get started, we review historical milestones in the VR research timeline and examine the roles of immersion and embodiment as key factors in the success of VR in research.

Virtual Reality (VR) has permeated diverse research domains, ranging from military training simulations to honing public speaking skills. Despite the vast applications, common challenges persist across these fields, primarily revolving around technological barriers. Recent strides in VR headset technology, such as wireless capabilities, easy access to realistic environments, and seamless data recording methods, position VR to further integrate into various research practices.

Clinical treatment centers now harness VR for mental health interventions, while medical schools leverage it for surgical training. Pilots and military professionals undergo immersive training in safe environments to develop the skills needed for complex and hazardous tasks. Compared to conventional approaches, VR offers an unparalleled opportunity to merge individuals with simulated environments, fostering the development of real-world skills, a key factor contributing to the success of VR research.

In contrast to traditional methods, VR excels in fostering immersion and presence (a state where users are fully absorbed in a simulated environment) as well as embodiment, the sensation of having a physical presence within that environment [1]. These features are largely absent in traditional methods and appear to be among the driving factors in motivating user compliance, leading to higher retention rates in the clinical realm.

Immersion and presence are often, though incorrectly, used interchangeably. Immersion refers to the objective technological quality and sensory experience of the virtual environment, while presence refers to the psychological experience of how much you feel present in the virtual world [2]. Immersion can be enhanced by including features such as haptic feedback, and a greater level of immersion typically correlates with higher subjective presence.

Interestingly, while presence and immersion appear important for sustaining user motivation [3], high immersion or presence is not necessary for triggering emotional responses [4]. Nor are they necessary for users to benefit from virtual exposure therapy, suggesting that presence and immersion are not always critical in the context of VR [5].

While it remains challenging to quantify immersion and presence, researchers have successfully quantified embodiment. For example, one study demonstrated that participants have the same physiological response, including in the brain and the autonomic nervous system, when they experience being attacked with a knife [6].

The physiological similarity indicates that they have ‘adopted’ their virtual body part as their own. As an extension, the emotional intensity of a virtual experience is modified by the degree of embodiment, with higher levels of embodiment correlating with higher emotional intensity [7]. In other words: when people feel embodied, the virtual environment is capable of triggering real-life brain and body reactions.

It is therefore perhaps not surprising that a greater sense of embodiment in the virtual environment correlates with better treatment outcomes and performance [8]. The attentive reader may be puzzled by the opposite effects of embodiment and presence on a user’s physiological and emotional experience [9]. While embodiment includes a sense of presence, it also encompasses a sense of agency and location [10]. While presence alone may not improve user experience, the broader experience of embodiment may. We encourage curious readers to explore this topic elsewhere [11].

In summary, while virtual reality research is still advancing, it is clear that its success depends largely on its ability to induce an immersive experience previously unknown. VR has not only started changing the therapeutic landscape, but is also changing education, professional training and marketing practices. Next, we will dive into six different research areas in which VR is being applied.

Virtual Reality Research Use Cases

Virtual reality has become an integral tool across a myriad of research domains focusing on human behavior and performance. Its applications span diverse fields, from marketing and public speaking to military training and surgical interventions. In the subsequent sections, we will delve into a selection of these exciting use cases.

Mental Health & Illness

Mental illness is highly heterogeneous, both within and across diseases and individuals. While people rarely present exactly the same set of symptoms in the same way at the same time, each illness has defining and typical characteristics that can be treated through standardized therapeutic programs. VR is heavily explored as a means of improving therapy outcomes, but is also increasingly used for identifying new biomarkers and risk factors.

Phobia

VR enhances standardized therapy for phobias by facilitating graduated exposure, starting from a manageable fear level (e.g., viewing a picture of a spider) and progressing to more intense stimuli (e.g., the spider comes closer). Traditional exposure methods have challenges (for example, how do you induce a fear of flying in a therapy office?) that VR can overcome with its immersive and accessible technology. While VR may seem novel, it has been used to treat phobias for decades.

In fact, a paper from 1998 describes how VR was utilized for fear of heights, fear of public speaking and fear of flying (North et al., 1998). Since then, the field of VR therapy has exploded with papers demonstrating the effects of VR therapy on treating agoraphobia (fear of places or situations) [12], arachnophobia (fear of spiders) [13], driving phobia [14], fear of heights [15], and blood-injection-injury (BII) phobia [16].

Other mental illnesses

While VR therapy has been most extensively demonstrated in addressing phobias, it also exhibits efficacy in reducing depression [17], anxiety [18], post-traumatic stress disorder (PTSD) [19], addiction [20] and stress levels [21]. Additionally, VR therapy has the potential to predict depression and anxiety levels [22], with emerging studies suggesting its capacity to enhance the quality of life for individuals with schizophrenia [23] and psychotic disorders [24]. There is no doubt that VR presents a revolutionary approach to understanding and treating mental illness.

Pain Management

A large number of publications address the positive effects of VR therapy on acute and chronic pain management [25]. While several commercial solutions have emerged, the research findings are still conflicting and there is an urgent need for more randomized controlled studies (Brady et al., 2021; Smith et al., 2020; Wittkopf et al., 2019). Factors such as individual differences, the specific pain condition, pain severity and age may influence whether VR therapy for pain is effective.

Physical Rehabilitation

Physical rehabilitation is one of the most well-studied areas within VR research. According to the search engine PubMed, 1,473 articles have been published on “virtual reality therapy AND rehabilitation” since 2000. Researchers at the University of South Carolina have pioneered a transformative approach to stroke rehabilitation, integrating virtual reality (VR) and Brain-Computer Interface (BCI) principles [26].

This innovative method utilizes VR to display avatars of upper limbs, employing brain (EEG) and muscle (EMG) sensors for real-time visualization of attempted movements. This multimodal approach significantly enhances motor imagery, re-engages motor circuits, and accelerates the recovery of upper limb motor functions in chronic stroke survivors. Moreover, studies led by Wittman and researchers from University Hospital Zurich reveal that implementing VR systems in stroke rehabilitation shows promising outcomes, with increased usage correlating with improved motor functions [27].

The potential for VR to serve as a valuable addition or partial replacement for physical therapy in clinical settings becomes evident. Additionally, VR-based interventions have demonstrated notable success in improving motor function in children with cerebral palsy, surpassing non-VR training methods [28]. These advancements underscore the broad applicability and efficacy of VR in diverse rehabilitation contexts, from stroke survivors to pediatric populations.

Education and Training in VR

Virtual reality is transforming education and skills training by providing immersive, practical learning experiences. It breaks geographical barriers, making education accessible globally. In the corporate sector, VR reduces training costs and enhances real-world readiness. This evolving technology is reshaping the future of learning and professional development.

Surgical and Medical Training

Early on in the use of virtual reality (VR) for medical training, it became evident that it not only posed no risk to patients but also served as a valuable adjunct for surgical residents. A study by Seymour and colleagues revealed that VR-trained individuals dissected gallbladders 29% faster and were less likely to cause harm to non-target tissue compared to those with only standard training [29]. Similar benefits were found in laparoscopic surgery [30], where prior VR training equated to substantial performance improvement, as confirmed by a meta-analysis [31].

A recent study in 2019 demonstrated transferable skills from VR to real-world hip replacement surgery, indicating the potential for VR training to significantly enhance surgical skills [32]. These findings suggest the possibility of a transformative shift in operating room education and improved surgical outcomes. One review paper emphasized the strengthening of surgeons’ skill sets and the potential for cost reduction and enhanced patient outcomes through structured VR-based curricula [33].

Employee Training

In professions such as power line engineering, where safety is paramount, researchers have leveraged virtual learning environments to enhance training effectiveness [34]. A study highlighted the cost-effectiveness of this approach, emphasizing its ability to efficiently transfer skills and knowledge to new workers while minimizing time and financial investments in training [35].

This supports the broader application of virtual reality (VR) in training scenarios. To further enrich research insights, the integration of biosensors can provide valuable data on physiological arousal and cognitive load during the learning process. This data can offer nuanced understanding, identifying critical moments where accidents may be more likely and informing the refinement of training methodologies.

Product Design

VR transforms product design by enabling 3D prototyping, collaborative design sessions, and user experience testing in a virtual environment [36]. It facilitates real-time design iteration, supports remote collaboration, and aids in market research by providing immersive product experiences that can capture both the physical and emotional aspects of product interaction [37].

While many companies have started to adopt VR, researchers have also demonstrated its efficacy in fields ranging from food truck design [38] to street light design [39]. In addition, VR reduces time and costs by catching design flaws early, making the design process more efficient and cost-effective. An article published in Forbes dives deeper into the positive aspects of utilizing VR in product design.

Marketing

Virtual Reality (VR) is revolutionizing marketing strategies, particularly in the tourism and real estate sectors. In tourism, VR offers a game-changing approach by not only showcasing destinations visually but also providing an immersive experience, boosting motivation and intent to visit [40].

The real estate industry has also embraced VR, with studies highlighting its positive impact on attitudes and purchase intentions [41]. Furthermore, VR has proven to enhance positive brand attitudes compared to traditional marketing methods [42]. In summary, VR’s dynamic and immersive nature captivates audiences, resulting in increased engagement and favorable perceptions for marketers.

VR Technology Overview

Components of head mounted display (HMD) VR systems

Virtual Reality (VR) systems typically consist of several key components that work together to create an immersive virtual experience. The main components include:

- Headsets:

  - Display: The headset incorporates one or more high-resolution displays positioned close to the user’s eyes. This provides a stereoscopic view, creating a 3D effect.

  - Lenses: Lenses focus and shape the images shown on the display, enhancing the user’s field of view and depth perception. Because the screens inside VR headsets sit very close to the user’s eyes, the images would appear blurry without lenses. Lenses focus the light from the display, making the virtual content clear and sharp. They also magnify the images, providing a wider and more immersive field of view, and they contribute to the 3D effect. Proper lens choice is important for comfort during the VR experience. While lenses are a standard component in VR headsets, their type, shape, and optical properties vary between devices. VR headsets employ either Fresnel lenses, known for their lightweight design, or traditional convex lenses [43]. Fresnel lenses are popular among vendors because they suit smaller headsets and are easy to produce. However, this convenience comes at the expense of optical artifacts that can distract users. Traditional convex lenses, though pricier to produce for wearable displays, are used by a select few vendors, such as Varjo, that prioritize optical quality. Ultimately, the choice of lens design is influenced by factors such as weight, size, and the desired optical characteristics of the VR experience.

- Controllers:

  - Hand Controllers: These are handheld devices that users interact with to manipulate objects in the virtual world. They often have buttons, triggers, and touch-sensitive surfaces to enable various interactions.

  - Gesture Controllers: Some newer VR systems use cameras or sensors to track the user’s hand movements and gestures without the need for physical controllers.

- Sensors:

  - Positional Tracking Sensors: These sensors track the position and rotation of the VR headset, and thus the user’s head movements and orientation, within a defined space so that the virtual display can be updated accordingly. They can be external sensors placed around the room or built into the VR headset itself.

  - Room-scale Sensors: In room-scale VR setups, sensors are strategically placed in the physical space to track the user’s movements accurately across a larger area. This enables users to walk around, crouch, and interact more freely within the designated area.

  - Inside-out Tracking: Some modern VR headsets feature inside-out tracking, using onboard cameras and sensors to monitor the user’s position and movement without the need for external sensors.

- Cables and Connectors:

  - Cables: VR headsets are often tethered to a computer or console by cables that transmit data and power. Wireless VR solutions, also known as “standalone” headsets, are becoming more common to enhance freedom of movement. We will talk more about these in the next section.

  - Connectors: The cables are connected from the computer to the VR headset and, in some cases, to external sensors or processing units.

- Computing Device:

  - PC, Console, or Standalone Device: VR experiences require significant computing power. VR systems are connected to a compatible computing device, which can be a powerful PC, a gaming console, or a standalone device with built-in processing capabilities.

- Software:

  - VR Applications: While this section is focused on hardware, it cannot be overstated how important software is to successfully run a VR experience. There are various software applications and games developed for VR platforms, providing users with immersive experiences. We cover these in the sections below.

- Haptic Feedback:

  - Some VR systems incorporate haptic feedback devices to simulate the sense of touch. This can include vibration or force feedback in controllers to enhance the feeling of interaction with virtual objects.

Under ideal circumstances, these components work together seamlessly to create a compelling virtual experience, allowing users to engage with and explore digital environments in a more immersive way. Your precise hardware needs will always depend on the research study at hand.

Wired vs. standalone headsets: a comparative analysis

Wired and standalone VR headsets each have their own set of advantages and limitations when used for research purposes. The choice between the two depends on the specific requirements of the research project. The table below provides an overview of what we consider the major differences.

| | Tethered VR Headset | Standalone VR Headset |
| --- | --- | --- |
| Graphics Quality | Wired headsets can support more advanced graphics and visual effects due to the direct connection to a high-performance computing device. | Processing power is limited compared to wired setups, potentially impacting the graphics quality and complexity of the virtual environment. |
| Mobility | The tethering cable limits the user’s movement and can be a potential tripping hazard. However, some wired headsets now offer longer cables or cable management solutions. | Standalone headsets offer complete freedom of movement since they are not tethered to an external device. This is advantageous for research scenarios where mobility is crucial. |
| Tracking Precision | Tethered VR headsets may use external sensors or base stations, or inside-out tracking. External sensors and base stations often provide precise tracking of head and controller movements. Inside-out tracking, described in the Standalone VR Headset column, is becoming increasingly accurate. | Standalone VR headsets typically use inside-out tracking, which relies on built-in sensors and cameras on the headset itself to track the user’s movements and the position of the controllers. Inside-out tracking in standalone headsets has evolved, and many modern devices offer relatively accurate tracking for head movements and controller interactions. |
| Costs | Typically, wired VR setups can be more expensive due to the need for a powerful computer or console to drive the VR experience. | Standalone headsets can be more cost-effective, as they eliminate the need for an external computing device. This can be advantageous for research projects with budget constraints. |
| Ease of Use | The setup process for external sensors may be more involved, and the user needs to be within the sensor’s line of sight. | Standalone headsets are generally easier to set up and use, as there are no external sensors or cables to manage. |
| Power | Tethered headsets can run continuously due to constant power supply. | Standalone headsets are battery powered and have limited usage time before needing to be recharged. |
| Content Availability | Wired headsets often have robust support for content development with access to powerful development environments and tools. | The standalone VR ecosystem may have limitations in terms of available content and development tools compared to more established wired VR platforms. |

In summary, the choice between wired and standalone VR headsets for research depends on the specific needs of the project, considering factors such as performance requirements, mobility, ease of use, and budget constraints. Researchers should carefully assess these factors to determine which type of VR headset aligns best with their research objectives.

Varjo XR-4 is a high-end mixed reality headset with built-in eye tracking. It is a tethered headset and needs to be connected to a computer.

Apple Vision Pro is also a high-end mixed reality headset; however, it has onboard computing power, so it does not need to be connected to an external computer.

Building and Leveraging the VR environment for Research Purposes

In order to perform VR research, it’s not enough to have a VR headset. You also have to think about your virtual environment: Will you build it yourself or can you purchase it online? Should it be gamified? Does it need to be interactive or is passive viewing enough? Does it need to be monoscopic or stereoscopic? And what challenges are you willing to face? This section will cover the most essential aspects of building a VR environment for research purposes.

Building the VR environment

Developing a virtual reality (VR) environment for research encompasses essential stages, ranging from selecting an appropriate development environment to navigating challenges unique to VR content creation. It’s crucial to note that even if you aren’t directly involved in building the VR environment, you may still need to leverage a development platform to execute a pre-designed environment for your research purposes. Below, we provide an overview of what you will have to consider and navigate.

3D Development Environments

3D development environments are specialized software platforms designed for the creation and manipulation of three-dimensional digital content. These environments provide tools and features that empower developers, designers, and artists to build immersive and interactive 3D experiences. Within these environments, users can model, animate, simulate, and render virtual objects and scenes.

Two prominent examples of 3D development environments are Unity and Unreal Engine, both widely used in various industries, including gaming, simulation, virtual reality (VR), and augmented reality (AR). These environments play a crucial role in shaping the digital landscape, enabling the creation of realistic and engaging content for a wide range of applications. Below we have provided a comparison of Unity and Unreal to help guide you in deciding which platform is best for you.

Keep in mind that various industry-specific tools for VR content creation are available, and it is beneficial to explore these alternatives. For instance, Revit is widely utilized in the field of architecture, while Prepar3D is specifically designed for military applications.

| | Unreal Engine | Unity |
| --- | --- | --- |
| Programming Language | Utilizes C++ as its primary programming language, offering a more performance-centric approach but requiring a deeper understanding of programming. | Primarily uses C# for scripting, making it accessible to developers with varying levels of experience. |
| Ease of Use | Has a steeper learning curve due to its more complex interface and the use of C++. However, Blueprints in Unreal provide a visual scripting system, simplifying development for those without extensive programming skills. | Known for its user-friendly interface and ease of use, making it a popular choice for beginners and indie developers. |
| Graphics and Rendering | Renowned for its advanced graphics capabilities, offering realistic rendering, high-quality lighting, and sophisticated visual effects out of the box. Has more advanced tools for procedural generation of content. | Provides good graphics capabilities, and with the introduction of the High Definition Render Pipeline (HDRP), it can achieve high-quality visuals. |
| Asset Store and Marketplace | Offers the Unreal Marketplace, providing a variety of assets, including 3D models, textures, and plugins. | Features a large Asset Store with a vast library of assets, plugins, and tools that developers can purchase or use for free. |
| Community and Support | Has a strong community, with comprehensive documentation and support forums. Epic Games, the company behind Unreal Engine, provides support and resources. | Boasts a large and active community, with extensive documentation and a wealth of tutorials available. |
| Platforms | Supports multiple platforms, with a strong emphasis on high-end gaming platforms, but its mobile and VR/AR support has grown over time. | Known for its excellent cross-platform capabilities, allowing developers to deploy games and applications on a wide range of platforms, including mobile, desktop, consoles, and VR/AR devices. |

Ultimately, the choice between Unity and Unreal Engine often depends on the specific needs of a project, the preferences and expertise of the development team, and the desired platform for deployment. Both engines are capable of creating high-quality applications, and the decision is often subjective based on the context of the project.

3D Virtual World vs. 360 Video

When building or choosing a virtual environment, you will also have to decide whether it should be a 3D virtual world or a 360-degree video. A 3D virtual world is a computer-generated, interactive environment that enables users to navigate and engage with digital objects. It is particularly well suited to research scenarios emphasizing user interaction, spatial comprehension, and dynamic content, and it requires skills in 3D modeling and scripting to implement interactive elements and behaviors.

In contrast, 360-degree video captures real-world environments, delivering an immersive yet passive experience for viewers. Ideal for research projects that prioritize real-world observation or storytelling, it involves the use of specialized 360-degree cameras and post-production editing to ensure optimal visual quality. The choice between them depends on the desired level of interactivity and the nature of the content or experience being created.

Monoscopic vs. Stereoscopic

The primary difference between monoscopic and stereoscopic virtual reality (VR) lies in how they present depth perception: while monoscopic VR provides a single, flat image to both eyes, stereoscopic VR delivers a more immersive experience by presenting separate images to each eye, enabling a more realistic perception of depth and dimensionality.

Stereoscopic VR is ideal for scenarios where accurate spatial perception is crucial, such as training simulations, architectural visualization, or any application where a realistic 3D environment is desired. In contrast, monoscopic VR is suitable for experiences where depth perception is less critical, and the emphasis is on simplicity or where 3D effects are not a primary concern.
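To make the distinction concrete, the following minimal Python sketch computes where the two virtual cameras would sit in each mode. It is an illustration rather than any engine's API: the `Vec3` type, the `eye_positions` function, and the 63 mm default interpupillary distance (IPD) are assumptions introduced here, and real headsets expose the user's IPD through their own SDKs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def eye_positions(head: Vec3, right_axis: Vec3, ipd_m: float = 0.063,
                  stereoscopic: bool = True) -> tuple[Vec3, Vec3]:
    """Return (left_eye, right_eye) camera positions in meters.

    head: head position; right_axis: unit vector pointing to the user's right.
    ipd_m: assumed interpupillary distance (hypothetical default of 63 mm).
    """
    # Monoscopic rendering uses one viewpoint for both eyes, so the offset is zero.
    half = ipd_m / 2.0 if stereoscopic else 0.0
    left = Vec3(head.x - right_axis.x * half,
                head.y - right_axis.y * half,
                head.z - right_axis.z * half)
    right = Vec3(head.x + right_axis.x * half,
                 head.y + right_axis.y * half,
                 head.z + right_axis.z * half)
    return left, right


head = Vec3(0.0, 1.7, 0.0)
right_axis = Vec3(1.0, 0.0, 0.0)
print(eye_positions(head, right_axis))                      # two viewpoints, one IPD apart
print(eye_positions(head, right_axis, stereoscopic=False))  # both viewpoints at the head position
```

In the stereoscopic case, each eye's image is rendered from its own offset viewpoint, which is what produces the perception of depth; in the monoscopic case, both eyes receive an image rendered from the same point.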

Challenges in Producing VR Environments

Developing VR environments is challenging, requiring optimization for performance, hardware compatibility, intuitive user interfaces, and attention to ethical considerations. Collaboration among programmers, researchers, designers, and even artists is essential to balance technical intricacies and user experience. The table below lists six common challenges when developing a virtual environment.

| Challenge | Description |
| --- | --- |
| Hardware Compatibility | Ensuring compatibility across diverse VR headsets and devices is challenging, given variations in tracking systems, input methods, and performance capabilities. Open standards such as OpenXR, which allow virtual environments to run across many VR headsets, are becoming increasingly available, but technical restrictions still exist, especially with older hardware. |
| Optimization for Performance | VR environments need optimization for smooth performance, preventing motion sickness and ensuring user comfort. This includes managing polygon counts, textures, and rendering techniques. |
| User Interface (UI) Design | Designing user-friendly VR interfaces is challenging, as traditional 2D UI principles may not directly apply. Depending on the hardware, readability of text on the head-mounted display can be a challenge. Additionally, traditional input methods like mouse and keyboard are not typically available in VR. |
| Motion Sickness Mitigation | Addressing motion sickness is crucial, considering factors like comfortable locomotion, reduced latency, and minimized vestibular-ocular mismatch. |
| Content Creation Expertise | Off-the-shelf assets often do not exactly fit your needs, and creating new 3D models, textures, and animations demands expertise. Research teams may require skilled 3D artists or designers. |
| Ethical Considerations | VR research may pose ethical challenges regarding user safety, privacy, and the impact of immersive experiences on participants. |

Building a VR environment for research involves a multidisciplinary approach, combining technical skills, creativity, and an understanding of the specific research goals. Researchers should carefully choose the development environment and consider the unique challenges associated with VR content creation.
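As a rough illustration of the performance optimization challenge, the sketch below computes the per-frame rendering budget for a given display refresh rate and the share of frames that miss it. The 90 Hz refresh rate and the sample frame times are assumed values chosen for illustration, not measurements or vendor specifications, and the function names are hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def missed_frame_ratio(frame_times_ms: list[float], refresh_hz: float) -> float:
    """Fraction of frames whose render time exceeded the per-frame budget."""
    if not frame_times_ms:
        return 0.0
    budget = frame_budget_ms(refresh_hz)
    missed = sum(1 for t in frame_times_ms if t > budget)
    return missed / len(frame_times_ms)


# Hypothetical frame times (ms) recorded while a participant explores a scene.
frame_times = [10.2, 10.8, 12.5, 11.0, 15.1, 10.9]
print(f"Budget at 90 Hz: {frame_budget_ms(90):.2f} ms")
print(f"Frames over budget: {missed_frame_ratio(frame_times, 90):.0%}")
```

A check like this can be run on logged frame times during piloting to see whether polygon counts, textures, or rendering settings need to be reduced before data collection begins.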

Leveraging the VR environment

Researchers are keenly interested in understanding the human experience: identifying points of visual focus, discerning favored sections within the virtual environment, and gauging interaction with virtual objects. In virtual reality (VR), these experiences can be quantified through game telemetry.

Whether employing an event-based or continuous approach, we gain insights into users’ visual attention, movement patterns, and interactions with virtual elements. By meticulously analyzing game telemetry, researchers glean valuable information regarding participants’ experiences, preferences, and reactions. This analytical process contributes to a nuanced comprehension of the psychological and emotional dimensions inherent in the VR experience, enabling a more comprehensive evaluation of how virtual environments influence users’ perceptions and behaviors.

In essence, game telemetry is a quantitative instrument for measuring and scrutinizing the human experience in VR. To go further, researchers may synchronize game telemetry data with eye tracking or other biosensor modalities for a deeper understanding, as elaborated in subsequent sections.
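As a rough illustration of event-based and continuous telemetry, the sketch below keeps both kinds of records in one in-memory log. The class name `TelemetryLog`, its field names, and the JSON Lines export are assumptions made for this example; a real game engine would typically write telemetry from its own scripting layer.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class TelemetryLog:
    """Hypothetical in-memory store for VR telemetry records."""
    records: list = field(default_factory=list)

    def _now(self, timestamp):
        # Use the caller's timestamp if given, else a monotonic clock.
        return time.monotonic() if timestamp is None else timestamp

    def log_event(self, name, timestamp=None, **details):
        # Event-based: one record each time something discrete happens.
        self.records.append({
            "kind": "event",
            "t": self._now(timestamp),
            "name": name,
            "details": details,
        })

    def log_sample(self, position, gaze_target, timestamp=None):
        # Continuous: one record per frame or per fixed interval.
        self.records.append({
            "kind": "sample",
            "t": self._now(timestamp),
            "position": list(position),
            "gaze_target": gaze_target,
        })

    def events(self, name):
        # Return all event records with the given name.
        return [r for r in self.records
                if r["kind"] == "event" and r["name"] == name]

    def to_json_lines(self):
        # One JSON object per line, convenient for later analysis.
        return "\n".join(json.dumps(r) for r in self.records)
```

Event records tend to be sparse and easy to aggregate, while continuous samples grow quickly with session length, so the sampling interval is worth choosing deliberately.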

Biosensor Integration in VR

Integrating biosensors into virtual reality (VR) research enhances our understanding of the human experience. By measuring physiological responses like heart rate and brain activity, researchers can analyze real-time data within immersive VR environments. This combination provides nuanced insights into emotional and cognitive states during simulated experiences, offering valuable applications in psychology, neuroscience, and human-computer interaction.

Nowadays, almost any biosensor can be combined with VR headsets. In fact, some VR headsets even include integrated biosensors. The following table offers a concise overview illustrating how various biosensors contribute to the improvement of insights in VR research.

Sensors

| Eye Tracking | |

| Benefit to VR research | Enables you to measure people’s visual attention patterns in the VR environment. |

| Considerations | Increasingly common as an integrated feature of higher-quality VR headsets. |

| Relevant use cases | – Performance evaluation (Makransky et al., 2017) – Road safety with autonomous driving (Brown et al., 2018) – Architectural design (Zou and Ergan, 2019) |

| Heart Rate (ECG/PPG) | |

| Benefit to VR research | Enables you to measure people’s physiological and psychological response to a virtual event |

| Considerations | An easy addition, in particular when using chestbands. Some more advanced headsets may have an integrated PPG sensor. |

| Relevant use cases | – Stress reduction effects of VR therapies (Kim et al., 2021) – Stress level classification (Ham et al., 2017) – Monitoring emotional affect and immersion (Marin-Morales et al., 2021) |

| Respiration | |

| Benefit to VR research | Enables you to measure people’s physiological and psychological response to a virtual event, in a non-invasive and even contactless approach. |

| Considerations | An easy addition, in particular when using chestbands. Your respiration sensor can be contactless by using a remote video feed, but keep in mind that in this setup your participant has to sit still. |

| Relevant use cases | – Motion sickness intervention (Russell et al., 2014) – Stress classification (Ishaque et al., 2020) – Breath skill training (Lan et al., 2021) |

| Electrodermal activity (EDA/GSR) | |

| Benefit to VR research | Enables you to measure people’s psychological response to a virtual event |

| Considerations | While easy to add, it’s important to consider the location of the sensor. VR studies that require hand movements will have to consider the best placement for the EDA sensor to minimize movement artifacts. |

| Relevant use cases | – Detection of Autism Spectrum Disorders in children (Alcaniz Raya et al., 2020) – Embodiment of a virtual prosthetic limb (Rodrigues et al., 2022) – Motor skill training (Radhakrishnan et al., 2022) |

| Voice analysis | |

| Benefit to VR research | Enables you to measure people’s psychological response to a virtual event through a completely contactless setup. |

| Considerations | VR headsets increasingly include high-quality internal microphones that are sufficient for voice analysis data collection. |

| Relevant use cases | – Screening for mild cognitive impairment in VR (Wu et al., 2023) – Real time detection of stress during VR gaming (Brambilla et al., 2023) – Public speaking training (Arushi et al., 2021) |

| EEG | |

| Benefit to VR research | Enables you to measure people’s cognitive response to a virtual event. |

| Considerations | Adding EEG to a VR setup has numerous methodological drawbacks, but advanced headsets are starting to circumvent these. For example, Galea has integrated EEG into the headset, and Wearable Sensing offers a VR headset that is designed to accommodate an EEG headset. It is important to keep in mind that adding EEG to your VR research will significantly increase the setup time. |

| Relevant use cases | – Measuring cognitive workload in the VR environment (Tremmel et al., 2019) – Monitoring meditative states during VR (Lan et al., 2021) – Measuring presence and immersion in VR (Dey et al., 2020) |

| Motion sensors via EMG or accelerometers. | |

| Benefit to VR research | Enables you to measure people’s movements in the VR environment. |

| Considerations | An easy addition, in particular when you use wireless sensors. |

| Relevant use cases | – Rehabilitation with a virtual prosthetic limb (Rodrigues et al., 2022) – Comparing muscle movements to natural motion in sports (Ida et al., 2022) – Decoding muscle movements in VR (Dwivedi et al., 2020) |

| Facial expression analysis (FEA). | |

| Benefit to VR research | Enables you to measure people’s facial expressions in the VR environment. |

| Considerations | Facial Expression Analysis (FEA) significantly contributes to understanding users’ emotional experiences in VR scenarios, traditionally measured through facial Electromyography (fEMG). fEMG, a well-published and established approach, captures electrical activity in facial muscles unaffected by VR headsets. However, fEMG has limitations, such as the inability to compare facial expression intensity and challenges posed by VR headset obstruction for electrode placement. Integrating EMG sensors into VR research does increase setup time. An emerging alternative involves computer-based FEA during VR, utilizing cameras to capture unobstructed facial muscle movements. Products like the VIVE offer headset add-ons, like small cameras, to track lower facial movements during virtual experiences. Yet, the scientific community is still in the early stages of testing the reliability of this form of tracking for high-quality facial expression data. |

| Relevant use cases | – User affect engagement in a virtual cognitive training program (Reidy et al., 2020) – Facial palsy rehabilitation in VR (Quidwai and Ajimsha, 2015) – Social affect in VR (Philipp et al., 2012) |

In addition to using biosensors for monitoring, identifying and predicting behavioral, emotional and physiological states, biosensors are also leveraged for biofeedback. Biofeedback is a technique that involves monitoring and providing individuals with real-time information about their physiological functions, such as heart rate, muscle tension, or skin conductance.

The goal of biofeedback is to raise awareness and control over these bodily processes, typically through visual or auditory cues. By observing the immediate effects of their thoughts, emotions, and behaviors on physiological responses, individuals can learn to self-regulate and achieve desired changes in their health and well-being. Biofeedback is commonly used in various settings, including stress management, performance enhancement, and rehabilitation, offering a non-invasive and empowering way for individuals to influence their own physiological responses.

Biofeedback is increasingly implemented as part of VR research and has been shown to improve the outcomes of physical therapy [44], enhance stress reduction techniques [45], improve sports performance [46], and even increase the human capacity for empathy [47]. Implementing biofeedback as a part of your research requires bidirectional communication between your biosensor and your virtual environment. In iMotions, this can be accomplished through our API interface.

Leveraging APIs and LSL to synchronize and control VR data

Virtual Reality (VR) unfolds as an invaluable tool in research, delivering immersive experiences beyond the reach of traditional stimuli. To fully exploit VR’s potential, it’s crucial to explore how participants can engage more organically and meaningfully within these virtual landscapes.

For instance, envision a theatrical setting where VR assists actors in stress reduction during performances by providing real-time feedback on their elevated heart rates and encouraging therapeutic interventions like deep breaths. In the realm of military training, the synchronization of game telemetry with biometric signals becomes a powerful tool, offering precise insights into how virtual events impact a military member’s physiology and performance.

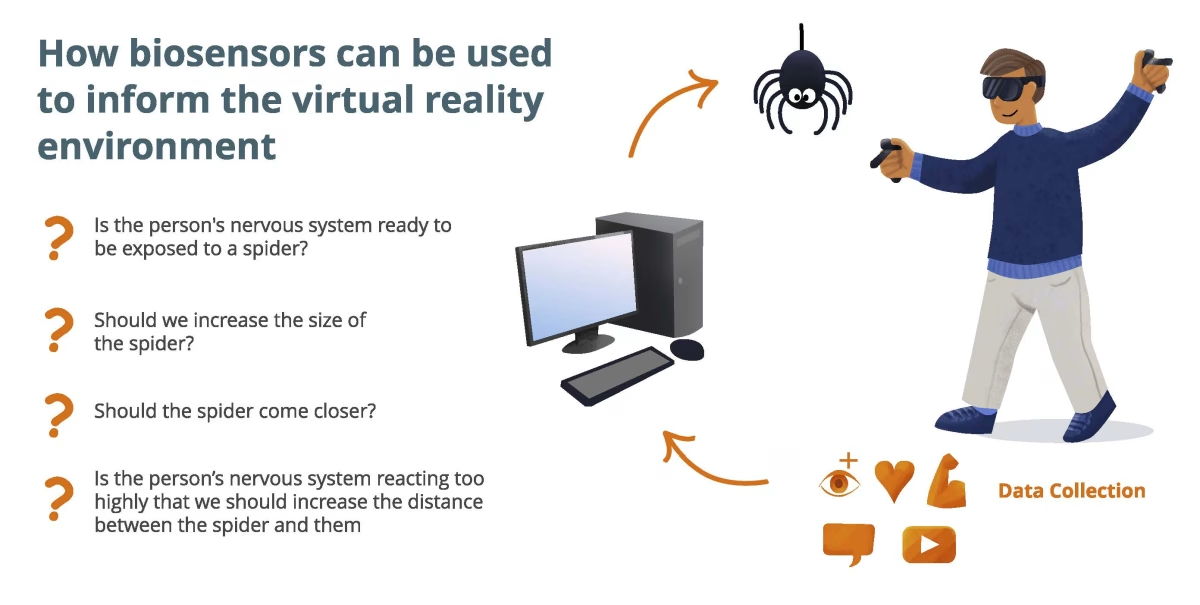

Introducing an additional layer, biofeedback and multimodal biometrics emerge as potent instruments for behavior modification and enhancement. Imagine a therapeutic VR session targeting someone with spider phobia. By integrating biofeedback, the virtual environment could adapt exposure therapy content based on the patient’s heart rate. As the patient becomes more physiologically comfortable with the virtual spiders, the environment dynamically adjusts, bringing the spiders closer.
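The adaptive exposure idea described above can be sketched as a small update rule. This is a minimal illustration only: the function name `next_spider_distance`, the heart-rate margins, the step size, and the distance limits are all hypothetical values chosen for the example, not clinical recommendations or an iMotions API.

```python
def next_spider_distance(current_m, heart_rate_bpm, baseline_bpm,
                         calm_margin_bpm=5.0, stress_margin_bpm=15.0,
                         step_m=0.25, min_m=0.5, max_m=5.0):
    """Return the spider's distance (in meters) for the next update.

    All thresholds are illustrative assumptions, not validated values.
    """
    elevation = heart_rate_bpm - baseline_bpm
    if elevation <= calm_margin_bpm:
        # Heart rate near baseline: bring the spider one step closer.
        proposed = current_m - step_m
    elif elevation >= stress_margin_bpm:
        # Heart rate clearly elevated: move the spider one step away.
        proposed = current_m + step_m
    else:
        # In between: hold position to avoid rapid back-and-forth changes.
        proposed = current_m
    # Keep the distance inside the allowed range.
    return max(min_m, min(max_m, proposed))
```

Calling this once per update interval with the latest heart rate yields a gradual approach while the participant stays calm. The dead band between the two margins is one simple way to keep the scene from oscillating on small heart-rate fluctuations.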

This intricate integration of biofeedback and multimodal biometrics into the VR experience has demonstrated a remarkable increase in the effectiveness of VR research. Making this integration seamless are Application Programming Interfaces (APIs) and the Lab Streaming Layer (LSL) (https://labstreaminglayer.org/#/).

APIs serve as indispensable bridges, defining protocols that facilitate seamless communication between different software applications, exchanging data and functionalities. Meanwhile, LSL operates as a sophisticated framework for real-time data interchange, ensuring smooth communication and synchronization between diverse research devices and software applications in laboratory environments.

In summary, the strategic use of APIs and LSL empowers researchers to establish a bidirectional flow of information, unlocking innovative ways to synchronize and control VR data. This not only enriches the depth of VR research but also opens avenues for groundbreaking applications in fields ranging from performance arts to therapeutic interventions.

Feeding Biosensor Data into VR Environments:

APIs play a pivotal role in integrating biosensor data into VR environments. By establishing a connection between biosensor systems and VR platforms, developers can utilize APIs to seamlessly feed real-time physiological data, such as heart rate or EEG, directly into the virtual experience. This integration enriches VR interactions by allowing adaptive responses based on users’ physiological states.

Extracting Telemetry Data from the VR Environment:

LSL, a real-time data synchronization system, proves valuable for extracting telemetry data from the VR environment. By incorporating LSL into the VR setup, researchers can create data streams capturing various aspects of user interactions, gaze patterns, and navigation. These data streams, facilitated by LSL, enable the extraction of detailed telemetry information for comprehensive analysis, aiding in refining VR content and understanding user behavior.
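One way to publish VR events as an LSL stream is sketched below using the third-party `pylsl` Python bindings. The stream name, source id, and the JSON encoding of each marker are assumptions for this example; many VR projects would instead send markers from the game engine through an LSL plugin.

```python
import json


def encode_marker(event_name, position):
    """Pack one telemetry event into a single string sample."""
    x, y, z = position
    return json.dumps({"event": event_name, "pos": [x, y, z]},
                      sort_keys=True)


def open_marker_outlet(name="VRTelemetry", source_id="vr-telemetry-demo"):
    """Create an LSL outlet for irregularly timed string markers.

    Requires the `pylsl` package. It is imported lazily so that the
    encoding helper above can be used without it installed.
    """
    from pylsl import StreamInfo, StreamOutlet
    # One channel, nominal rate 0 (irregular), string-valued samples.
    info = StreamInfo(name, "Markers", 1, 0, "string", source_id)
    return StreamOutlet(info)


def send_event(outlet, event_name, position):
    # push_sample takes a list with one value per channel. LSL stamps
    # the sample at push time unless a timestamp is passed explicitly.
    outlet.push_sample([encode_marker(event_name, position)])
```

A recorder on the same network can then store this marker stream alongside biosensor streams on a shared time base.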

Controlling the Virtual Experience Using Biosensor Data:

The bidirectional capabilities of APIs enable the dynamic control of the virtual experience using biosensor data. By integrating biosensor information through APIs, developers can implement real-time adjustments to the VR environment based on users’ physiological responses. For example, changes in stress levels or engagement detected by biosensors can trigger adaptive modifications in the VR content, enhancing personalization and immersion.

Challenges in Analyzing VR Data

VR generates vast amounts of multidimensional data, including sensory inputs, interactions, and physiological responses. Analyzing and making sense of this complex dataset can be challenging. In the following we focus on three of the challenges that researchers often encounter:

- The challenge of aggregating data from unique VR experiences

Each VR user embarks on a distinctive journey, interacting with diverse objects, exploring different zones, and reacting uniquely within the virtual environment. Regardless of the biosensors employed in VR research, aggregating this individualized data is essential for drawing meaningful conclusions. A strategic approach involves assigning participants specific tasks, like following a designated path or performing a particular action.

Although users may deviate slightly, this helps align their experiences, minimizing unique differences between datasets. Another effective method is pinpointing specific areas or activities of interest where all participants converge, such as landing a plane on an airport’s runway or encountering an approaching spider. This allows researchers to compare individual behaviors in the same virtual scene, offering insights despite the diverse paths each user may have taken.

In iMotions, our gaze-mapping feature allows users to aggregate eye tracking and other biosensor data from distinct user experiences. This feature enables researchers to overlay data from specific scenes, irrespective of the timing when each user engaged with those scenes. This functionality ultimately empowers scientists to derive overarching conclusions from a multitude of diverse virtual journeys.
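Outside of any particular software, the idea of aggregating around a shared event can be sketched as follows: each participant's signal is cut into a window around the moment they personally reached the event, and the change from before to after is averaged across participants. The function names, the window lengths, and the `(time, value)` data layout are assumptions for this example and do not describe the iMotions feature.

```python
from statistics import mean


def epoch(samples, event_t, pre_s, post_s):
    """Return (time relative to event, value) pairs inside the window."""
    return [(t - event_t, v) for t, v in samples
            if event_t - pre_s <= t <= event_t + post_s]


def mean_response(participants, pre_s=2.0, post_s=5.0):
    """Average the post-event minus pre-event change across participants.

    `participants` maps an id to (samples, event_t), where samples is a
    list of (time, value) pairs and event_t is that participant's own
    event time. Participants lacking data on either side are skipped.
    """
    changes = []
    for samples, event_t in participants.values():
        window = epoch(samples, event_t, pre_s, post_s)
        before = [v for rel, v in window if rel < 0]
        after = [v for rel, v in window if rel >= 0]
        if before and after:
            changes.append(mean(after) - mean(before))
    return mean(changes) if changes else None
```

Because every window is expressed relative to the participant's own event time, it does not matter that one person met the spider after 20 seconds and another after two minutes.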

- The challenge of real-time synchronization of biosensor data and virtual environments

Achieving real-time precision in synchronizing biosensor data with the user’s virtual reality (VR) environment is crucial for accurately linking physiological responses to specific moments in the VR experience. Delays or inaccuracies in this synchronization can lead to misinterpretations of the relationship between the user’s physiological responses and virtual events.

Simultaneously, addressing the challenge of hardware and software compatibility involves ensuring seamless integration between different biosensors and VR systems with varying technical specifications and protocols. Compatibility issues, arising from differences in data formats, communication speeds, or calibration methods, must be overcome to establish a standardized and interoperable framework for effective synchronization. While APIs and LSL can often address this issue, they do not resolve every compatibility problem.
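To make the synchronization problem concrete, the sketch below attaches the nearest biosensor sample to each VR event after correcting for a known clock offset. It assumes the offset between the two clocks has already been measured and that sample times are sorted. The function names and the 50 ms tolerance are illustrative choices, not a standard.

```python
from bisect import bisect_left


def nearest_sample(sample_times, sample_values, t):
    """Return (time, value) of the sample closest in time to t.

    sample_times must be sorted in ascending order and non-empty.
    """
    i = bisect_left(sample_times, t)
    if i == 0:
        return sample_times[0], sample_values[0]
    if i == len(sample_times):
        return sample_times[-1], sample_values[-1]
    before, after = sample_times[i - 1], sample_times[i]
    j = i if (after - t) < (t - before) else i - 1
    return sample_times[j], sample_values[j]


def align_events(events, sample_times, sample_values,
                 clock_offset_s=0.0, max_gap_s=0.05):
    """Attach the nearest biosensor value to each VR event.

    events is a list of (vr_time, name). clock_offset_s is added to VR
    times to express them on the sensor clock. Events with no sample
    within max_gap_s are paired with None instead of a stale value.
    """
    aligned = []
    for vr_t, name in events:
        t = vr_t + clock_offset_s
        s_t, s_v = nearest_sample(sample_times, sample_values, t)
        aligned.append((name, s_v if abs(s_t - t) <= max_gap_s else None))
    return aligned
```

Returning `None` for events without a nearby sample makes gaps visible during analysis rather than silently linking an event to a physiological value recorded at a different moment.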

- The challenge of analyzing eye tracking data from VR headsets: transitioning from 3D to 2D

The challenge of analyzing eye tracking data from VR headsets involves the transition from a three-dimensional (3D) virtual environment to a two-dimensional (2D) representation for analysis. Eye tracking captures gaze data in the immersive 3D space of VR, but to interpret and analyze this information effectively, researchers often need to convert it into a 2D format.

This transition poses challenges as it requires careful consideration of depth perception, spatial relationships, and the dynamic nature of gaze points within the VR environment. Accurately representing and interpreting eye tracking data in a 2D context while preserving the nuances of users’ visual interactions in the 3D virtual space is a complex task that researchers and analysts need to address for meaningful insights.
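One common way to move from 3D to 2D is a pinhole-camera projection of the gazed-at point onto an image plane. The sketch below assumes the gaze point is already expressed in camera coordinates (x right, y up, z forward, in meters) and that pixel coordinates start at the top-left corner. Both conventions, and the function name, are assumptions for this example and differ between engines and headsets.

```python
import math


def project_gaze_point(point_cam, width_px, height_px, horizontal_fov_deg):
    """Project a 3D gaze point in camera coordinates to 2D pixels.

    Returns (u, v) in pixels, or None when the point is behind the
    camera or falls outside the image frame.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    # Focal length in pixels, derived from the horizontal field of view.
    half_fov = math.radians(horizontal_fov_deg) / 2
    focal_px = (width_px / 2) / math.tan(half_fov)
    u = width_px / 2 + focal_px * x / z
    # Image y grows downward, so the vertical axis is flipped.
    v = height_px / 2 - focal_px * y / z
    if 0 <= u < width_px and 0 <= v < height_px:
        return (u, v)
    return None
```

The projection discards depth, so two objects along the same line of sight land on the same pixel. Storing the original z value or the identifier of the gazed-at object next to each 2D point is one way to keep that information available.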

Advancements in VR Technology

Recent technological enhancements have brought significant advancements in Augmented Reality (AR), Hand Tracking, Eye Tracking, and Social Virtual Reality (VR). As these technologies continue to mature, they open up substantial room for innovation in research and in society more broadly.

Augmented Reality (AR)

Augmented Reality (AR) is a technology that overlays computer-generated content onto the real-world environment in real-time, enhancing the user’s perception of their surroundings. It integrates digital information, such as images and 3D models, with the physical world using devices like smartphones, smart glasses, or AR headsets. AR applications vary from informational overlays to interactive experiences, offering users a more enriched and immersive interaction with their surroundings.

Augmented Reality, with its seamless integration of digital information into the real world, has found applications in fields ranging from gaming to healthcare, offering users an enriched and interactive experience.

Hand Tracking Technology

Hand tracking for VR is a technology that allows users to interact with virtual environments using their natural hand movements, eliminating the need for physical controllers. Sensors or cameras capture real-time hand movements, translating them into virtual actions within the VR space. This provides a more intuitive and immersive experience, enhancing user engagement without requiring handheld controllers.

Social Virtual Reality

Social Virtual Reality (VR) refers to the use of virtual reality technology to create digital spaces where users can interact with each other in real-time. In these shared virtual environments, participants can communicate, collaborate, and engage with one another as avatars, even if they are physically located in different places. Social VR platforms often provide features like voice chat, hand gestures, and customizable avatars to enhance the sense of presence and social interaction.

This technology is employed for various purposes, including virtual meetings, collaborative workspaces, social events, and multiplayer gaming, allowing users to feel a sense of shared presence and connection within the virtual world. While still a new technology, several research studies have been conducted to measure the impact of social VR on remote education learning outcomes (Mystakidis et al., 2021), socialization [48], and loneliness and social anxiety [49].

Advancing User Engagement

While more research is needed, studies are starting to demonstrate how these advances in VR research increase user engagement. For example, one group found that AR increases user engagement during the purchase journey [50]. On the other hand, a surprising finding is that hand tracking does not seem to increase user engagement or experience in the virtual environment (Masurovsky et al., 2020).

However, despite the lack of improvement in user engagement, one study found that motor rehabilitation patients preferred hand tracking over using controllers [51]. Moreover, hand tracking offers an easier approach to tele-rehabilitation by reducing the amount of equipment a patient needs to understand and use. Compared to traditional social media platforms, social VR scores higher on presence and relatedness [52].

These findings suggest that while some VR advancements may not universally enhance user engagement, they carve out niches in specific applications, showcasing the diverse impact of technology in different contexts and expanding VR’s relevance in new research fields.

Implications for Research

Advances in VR technology, including features such as hand tracking, social VR, and augmented reality, have profound implications for scientific research across various disciplines. Hand tracking allows researchers to explore more natural and intuitive human-computer interactions, providing insights into cognitive processes and ergonomic considerations. Social VR opens new avenues for studying social dynamics and human behavior in immersive digital environments, offering a unique perspective. As these technologies continue to mature, the landscape of research and user engagement is undergoing a profound transformation, opening up new possibilities for innovation and exploration.

Best Practices in VR and Multimodal Research

Embarking on multimodal VR research introduces numerous considerations, but with careful planning, you can yield insightful data with high ecological validity and real-world impact. To guide your approach, here are key questions and steps to consider:

Questions Before Starting Research:

- Research Question Suitability: Does your research question benefit from VR, and is your test group suitable for VR research? What are the key moments that need to be measured, and how will you measure them? How will you track when those key moments happen?

- Virtual Environment Design: Will you design the virtual environment or use a pre-made one? Are there specific expectations in your research area regarding the virtual environment’s appearance or functionality?

- Biosensor Selection: Which biosensors are most relevant to your VR study, considering any restrictions imposed by your setup or task?

- Data Collection Software: How will you quantify the human experience in a way that facilitates aggregation, and does your data collection software support this?

- User Experience and Side Effects: How will users acclimate to the virtual environment without compromising research goals, and how will you assess cybersickness (Kim et al., 2018)?

Steps to Take During Research:

- Pilot Study: Conduct a pilot study to test the virtual environment’s effects on users, addressing issues like cybersickness, task coherence, and user comfort.

- Biosensor-Synchronization Check: Verify that biosensors synchronize effectively with the virtual environment, ensuring a seamless integration that supports meaningful data analysis.

- Analysis of Pilot Data: Analyze pilot data to confirm your ability to capture expected physiological effects from the virtual environment.

This guidance serves as a starting point, recognizing that the complexity of multimodal VR research demands ongoing consideration and adaptation throughout the research process.

iMotions’ Commitment to VR Research in Healthcare

As a pioneering force in multimodal biosensor software, iMotions is dedicated to the relentless pursuit of cutting-edge software solutions and research platforms. Comprising a team of biosensor experts and researchers, we acknowledge that true breakthroughs emerge through collaboration with the academic community.

In a significant milestone in 2019, iMotions initiated a collaborative venture with Syddansk Universitets Hospital in Denmark, aiming to revolutionize the treatment of social anxiety. This collaboration brought together iMotions’ expertise and Syddansk Universitets Hospital’s psychologists and research team to refine the collection, visualization, and exportation of data obtained through virtual reality and multimodal biosensors, encompassing variables like heart rate and electrodermal activity. In these peer-reviewed articles, you can learn more about the ongoing progress of this project: Quintana et al., 2023 and Ørskov et al., 2022.

Our direct involvement in research endeavors positions us at the forefront of understanding the evolving needs of the research community. Importantly, this engagement allows us to contribute substantially to the progress in delivering effective treatments to those who need them most. iMotions remains unwavering in our commitment to advancing the field and fostering impactful collaborations that drive innovation in biosensor technology and its applications.

You can read more about the foundation grant supporting this collaborative effort here: https://vr8.dk/en/virtual-reality-for-social-anxiety/

Summary: What you need to consider as a VR researcher

Virtual Reality (VR) represents a significant leap forward in enhancing realism within controlled research environments. This cutting-edge technology enables researchers to assess both the psychological and physiological impacts of typically hazardous or hard-to-reach scenarios. Virtually no field of research remains untouched by the transformative potential of VR. Whether it involves refining construction site protocols, instructing novice pilots, or assisting individuals in overcoming their fear of heights, virtual environments provide a revolutionary tool to propel research endeavors to new heights.

Upon choosing to integrate VR into your research, a set of crucial questions arises that merit careful consideration:

- Hardware: Which headset type best suits your needs? What features are essential for your research? Any preferences on lens or headset weight? Do you require extras like hand tracking or AR tools?

- Virtual environment: How do you plan to design the virtual environment? If utilizing a pre-designed game, can you access its telemetry data? Is the virtual environment compatible with various platforms, or is it limited to a specific game engine?

- Biosensors: What data do you aim to gather from your VR research? Is embedded eye tracking necessary for your study? Are you considering recording facial movements with an add-on camera? Do you intend to measure autonomic nervous system activity using sensors for heart rate or electrodermal activity?

- Software: Where will you gather your data? Does the software platform support synchronization of all data streams? Can it assist in analyzing telemetry and biosensor data? Is it capable of visualizing the virtual reality experience? Can you aggregate data from multiple respondents using the software?

- Professional expertise and training: Is there sufficient professional expertise and training available? Do you have researchers familiar with setting up, running, and collecting data from VR headsets? Is there a team member proficient in analyzing and interpreting the collected data? If building your own virtual environment, do you possess the requisite expertise in coding and UX design?

Embarking on VR research presents distinctive advantages and challenges. It is crucial to invest time in pinpointing solutions tailored to your specific use case. While each researcher has individual requirements, the following encapsulates common patterns observed among our clients.

The inexperienced VR researcher often opts for:

- Research-grade VR headset with eye-tracking capabilities

- Previously published, pre-designed virtual environment/game

- Few additional biosensors such as heart rate and electrodermal activity

- Software that can easily help them visualize, synchronize and analyze their data

- Expert training for their team to cultivate academic and practical expertise in VR research

Experienced VR researchers generally opt for:

- Research-grade VR headset with eye-tracking, hand-tracking and AR capabilities

- A customized virtual environment designed and tested by their own team

- Several additional biosensors, ranging from EMG to EEG to heart rate sensors, depending on the nature of their research question

- Software that can help them synchronize all of their data while providing them with the flexibility to stream data and commands back and forth between the data collection platform and virtual environment using API and LSL

- Expert training and consultation on an “as needed” basis

References

Alaker, M., Wynn, G. R., & Arulampalam, T. (2016). Virtual reality training in laparoscopic surgery: A systematic review & meta-analysis. International journal of surgery (London, England), 29, 85–94. https://doi.org/10.1016/j.ijsu.2016.03.034

Alcañiz Raya, M., Chicchi Giglioli, I. A., Marín-Morales, J., Higuera-Trujillo, J. L., Olmos, E., Minissi, M. E., Teruel Garcia, G., Sirera, M., & Abad, L. (2020). Application of Supervised Machine Learning for Behavioral Biomarkers of Autism Spectrum Disorder Based on Electrodermal Activity and Virtual Reality. Frontiers in human neuroscience, 14, 90. https://doi.org/10.3389/fnhum.2020.00090

Arushi, Dillon, R., & Teoh, A. N. (2021). Real-time Stress Detection Model and Voice Analysis: An Integrated VR-based Game for Training Public Speaking Skills. 2021 IEEE Conference on Games (CoG), Copenhagen, Denmark, 1–4. https://doi.org/10.1109/CoG52621.2021.9618989

Badash, I., Burtt, K., Solorzano, C. A., & Carey, J. N. (2016). Innovations in surgery simulation: a review of past, current and future techniques. Annals of translational medicine, 4(23), 453. https://doi.org/10.21037/atm.2016.12.24

Baker, N. A., Polhemus, A. H., Haan Ospina, E., Feller, H., Zenni, M., Deacon, M., DeGrado, G., Basnet, S., & Driscoll, M. (2022). The State of Science in the Use of Virtual Reality in the Treatment of Acute and Chronic Pain: A Systematic Scoping Review. The Clinical journal of pain, 38(6), 424–441. https://doi.org/10.1097/AJP.0000000000001029

Barreda-Ángeles, M., & Hartmann, T. (2022). Psychological benefits of using social virtual reality platforms during the covid-19 pandemic: The role of social and spatial presence. Computers in human behavior, 127, 107047. https://doi.org/10.1016/j.chb.2021.107047

Berg, L. P., & Vance, J. M. (2017). Industry use of virtual reality in product design and manufacturing: a survey. Virtual Reality, 21, 1–17. https://doi.org/10.1007/s10055-016-0293-9

Berni, A., & Borgianni, Y. (2020). Applications of Virtual Reality in Engineering and Product Design: Why, What, How, When and Where. Electronics, 9(7), 1064. https://doi.org/10.3390/electronics9071064

Blum, J., Rockstroh, C., & Göritz, A. S. (2020). Development and Pilot Test of a Virtual Reality Respiratory Biofeedback Approach. Applied psychophysiology and biofeedback, 45(3), 153–163. https://doi.org/10.1007/s10484-020-09468-x

Brambilla, S., Boccignone, G., Borghese, N.A., Chitti, E., Lombardi, R., Ripamonti, L.A. (2023). Tracing Stress and Arousal in Virtual Reality Games Using Players’ Motor and Vocal Behaviour. In: da Silva, H.P., Cipresso, P. (eds) Computer-Human Interaction Research and Applications. CHIRA 2023. Communications in Computer and Information Science, vol 1996. Springer, Cham. https://doi.org/10.1007/978-3-031-49425-3_10

Brown, B., Park, D., Sheehan, B., Shikoff, S., Solomon, J., Yang, J., Kim, I. (2018). Assessment of human driver safety at Dilemma Zones with automated vehicles through a virtual reality environment. Systems and Information Engineering Design Symposium (SIEDS), pp. 185-190

Cho, C., Hwang, W., Hwang, S., & Chung, Y. (2016). Treadmill Training with Virtual Reality Improves Gait, Balance, and Muscle Strength in Children with Cerebral Palsy. The Tohoku Journal of Experimental Medicine, 238(3), 213–218.

Dellazizzo, L., Potvin, S., Phraxayavong, K., & Dumais, A. (2021). One-year randomized trial comparing virtual reality-assisted therapy to cognitive-behavioral therapy for patients with treatment-resistant schizophrenia. NPJ schizophrenia, 7(1), 9. https://doi.org/10.1038/s41537-021-00139-2

Deo, N., Khan, K. S., Mak, J., Allotey, J., Gonzalez Carreras, F. J., Fusari, G., & Benn, J. (2021). Virtual reality for acute pain in outpatient hysteroscopy: a randomised controlled trial. BJOG : an international journal of obstetrics and gynaecology, 128(1), 87–95. https://doi.org/10.1111/1471-0528.16377

Dey, A., Phoon, J., Saha, S., Dobbins, C., & Billinghurst, M. (2020). A Neurophysiological Approach for Measuring Presence in Immersive Virtual Environments. 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Porto de Galinhas, Brazil, 474–485. https://doi.org/10.1109/ISMAR50242.2020.00072

Dreesmann, N. J., Su, H., & Thompson, H. J. (2022). A Systematic Review of Virtual Reality Therapeutics for Acute Pain Management. Pain management nursing : official journal of the American Society of Pain Management Nurses, 23(5), 672–681. https://doi.org/10.1016/j.pmn.2022.05.004

Dwivedi, A., Kwon, Y., & Liarokapis, M. (2020). EMG-Based Decoding of Manipulation Motions in Virtual Reality: Towards Immersive Interfaces. 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 3296–3303. https://doi.org/10.1109/SMC42975.2020.9283270

Freeman, D., Lambe, S., Kabir, T., Petit, A., Rosebrock, L., Yu, L. M., Dudley, R., Chapman, K., Morrison, A., O’Regan, E., Aynsworth, C., Jones, J., Murphy, E., Powling, R., Galal, U., Grabey, J., Rovira, A., Martin, J., Hollis, C., Clark, D. M., … gameChange Trial Group (2022). Automated virtual reality therapy to treat agoraphobic avoidance and distress in patients with psychosis (gameChange): a multicentre, parallel-group, single-blind, randomised, controlled trial in England with mediation and moderation analyses. The lancet. Psychiatry, 9(5), 375–388. https://doi.org/10.1016/S2215-0366(22)00060-8

Gall, D., Roth, D., Stauffert, J. P., Zarges, J., & Latoschik, M. E. (2021). Embodiment in Virtual Reality Intensifies Emotional Responses to Virtual Stimuli. Frontiers in psychology, 12, 674179. https://doi.org/10.3389/fpsyg.2021.674179

García, A. A., Bobadilla, I. G., Figueroa, G. A., Ramírez, M. P., & Román, J. M. (2016). Virtual reality training system for maintenance and operation of high-voltage overhead power lines. Virtual Reality, 20(1), 27–40. https://doi.org/10.1007/s10055-015-0280-6

Geraets, C. N. W., Snippe, E., van Beilen, M., Pot-Kolder, R. M. C. A., Wichers, M., van der Gaag, M., & Veling, W. (2020). Virtual reality based cognitive behavioral therapy for paranoia: Effects on mental states and the dynamics among them. Schizophrenia research, 222, 227–234. https://doi.org/10.1016/j.schres.2020.05.047

González-Franco, M., Peck, T. C., Rodríguez-Fornells, A., & Slater, M. (2014). A threat to a virtual hand elicits motor cortex activation. Experimental brain research, 232(3), 875–887. https://doi.org/10.1007/s00221-013-3800-1

Goudman, L., Jansen, J., Billot, M., Vets, N., De Smedt, A., Roulaud, M., Rigoard, P., & Moens, M. (2022). Virtual Reality Applications in Chronic Pain Management: Systematic Review and Meta-analysis. JMIR serious games, 10(2), e34402. https://doi.org/10.2196/34402

Gromer, D., Reinke, M., Christner, I., & Pauli, P. (2019). Causal Interactive Links Between Presence and Fear in Virtual Reality Height Exposure. Frontiers in psychology, 10, 141. https://doi.org/10.3389/fpsyg.2019.00141

Grudzewski, F., Awdziej, M., Mazurek, G., & Piotrowska, K. (2018). Virtual reality in marketing communication – the impact on the message, technology and offer perception – empirical study. Economics and Business Review, 4(3), 36–50. https://doi.org/10.18559/ebr.2018.3.4

Ham, J., Cho, D., Oh, J., & Lee, J. (2017). Discrimination of multiple stress levels in virtual reality environments using heart rate variability. Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Annual International Conference, 2017, 3989–3992. https://doi.org/10.1109/EMBC.2017.8037730

Hooper, J., Tsiridis, E., Feng, J. E., Schwarzkopf, R., Waren, D., Long, W. J., Poultsides, L., Macaulay, W., & NYU Virtual Reality Consortium (2019). Virtual Reality Simulation Facilitates Resident Training in Total Hip Arthroplasty: A Randomized Controlled Trial. The Journal of arthroplasty, 34(10), 2278–2283. https://doi.org/10.1016/j.arth.2019.04.002

Ida, H., Fukuhara, K., & Ogata, T. (2022). Virtual reality modulates the control of upper limb motion in one-handed ball catching. Frontiers in sports and active living, 4, 926542. https://doi.org/10.3389/fspor.2022.926542

Ishaque, S., Rueda, A., Nguyen, B., Khan, N., & Krishnan, S. (2020). Physiological Signal Analysis and Classification of Stress from Virtual Reality Video Game. Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Annual International Conference, 2020, 867–870. https://doi.org/10.1109/EMBC44109.2020.9176110

Jiang, M. Y. W., Upton, E., & Newby, J. M. (2020). A randomised wait-list controlled pilot trial of one-session virtual reality exposure therapy for blood-injection-injury phobias. Journal of affective disorders, 276, 636–645. https://doi.org/10.1016/j.jad.2020.07.076

Juan, M. C., Elexpuru, J., Dias, P., Santos, B. S., & Amorim, P. (2023). Immersive virtual reality for upper limb rehabilitation: comparing hand and controller interaction. Virtual reality, 27(2), 1157–1171. https://doi.org/10.1007/s10055-022-00722-7

Juliano, J. M., Spicer, R. P., Vourvopoulos, A., Lefebvre, S., Jann, K., Ard, T., Santarnecchi, E., Krum, D. M., & Liew, S. L. (2020). Embodiment Is Related to Better Performance on a Brain-Computer Interface in Immersive Virtual Reality: A Pilot Study. Sensors (Basel, Switzerland), 20(4), 1204. https://doi.org/10.3390/s20041204

Kenyon, K., Kinakh, V., & Harrison, J. (2023). Social virtual reality helps to reduce feelings of loneliness and social anxiety during the Covid-19 pandemic. Scientific reports, 13(1), 19282. https://doi.org/10.1038/s41598-023-46494-1

Kim, H., Kim, D. J., Kim, S., Chung, W. H., Park, K. A., Kim, J. D. K., Kim, D., Kim, M. J., Kim, K., & Jeon, H. J. (2021). Effect of Virtual Reality on Stress Reduction and Change of Physiological Parameters Including Heart Rate Variability in People With High Stress: An Open Randomized Crossover Trial. Frontiers in psychiatry, 12, 614539. https://doi.org/10.3389/fpsyt.2021.614539

Kim, H. K., Park, J., Choi, Y., & Choe, M. (2018). Virtual reality sickness questionnaire (VRSQ): Motion sickness measurement index in a virtual reality environment. Applied ergonomics, 69, 66–73. https://doi.org/10.1016/j.apergo.2017.12.016

Lagos, L., Vaschillo, E., Vaschillo, B., Lehrer, P., Bates, M., & Pandina, R. (2011). Virtual Reality–Assisted Heart Rate Variability Biofeedback as a Strategy to Improve Golf Performance: A Case Study. Biofeedback, 39(1), 15–20. https://doi.org/10.5298/1081-5937-39.1.11

Lan, K. C., Li, C. W., & Cheung, Y. (2021). Slow Breathing Exercise with Multimodal Virtual Reality: A Feasibility Study. Sensors (Basel, Switzerland), 21(16), 5462. https://doi.org/10.3390/s21165462

Larsen, C. R., Soerensen, J. L., Grantcharov, T. P., Dalsgaard, T., Schouenborg, L., Ottosen, C., Schroeder, T. V., & Ottesen, B. S. (2009). Effect of virtual reality training on laparoscopic surgery: randomised controlled trial. BMJ (Clinical research ed.), 338, b1802. https://doi.org/10.1136/bmj.b1802

Leveau, P.-H., & Camus, S. (2023). Embodiment, immersion, and enjoyment in virtual reality marketing experiences. Psychology & Marketing, 40, 1329–1343. https://doi.org/10.1002/mar.21822

Lin, M., Huang, J., Fu, J., Sun, Y., & Fang, Q. (2023). A VR-Based Motor Imagery Training System With EMG-Based Real-Time Feedback for Post-Stroke Rehabilitation. IEEE transactions on neural systems and rehabilitation engineering : a publication of the IEEE Engineering in Medicine and Biology Society, 31, 1–10. https://doi.org/10.1109/TNSRE.2022.3210258

Lindner, P., Miloff, A., Bergman, C., Andersson, G., Hamilton, W., & Carlbring, P. (2020). Gamified, Automated Virtual Reality Exposure Therapy for Fear of Spiders: A Single-Subject Trial Under Simulated Real-World Conditions. Frontiers in psychiatry, 11, 116. https://doi.org/10.3389/fpsyt.2020.00116

Makransky, G., Terkildsen, T. S., & Mayer, R. E. (2017). Adding immersive virtual reality to a science lab simulation causes more presence but less learning. Learning and Instruction. https://doi.org/10.1016/j.learninstruc.2017.12.007

Marín-Morales, J., Higuera-Trujillo, J. L., Guixeres, J., Llinares, C., Alcañiz, M., & Valenza, G. (2021). Heart rate variability analysis for the assessment of immersive emotional arousal using virtual reality: Comparing real and virtual scenarios. PloS one, 16(7), e0254098. https://doi.org/10.1371/journal.pone.0254098

Masurovsky, A., Chojecki, P., Runde, D., Lafci, M., Przewozny, D., & Gaebler, M. (2020). Controller-Free Hand Tracking for Grab-and-Place Tasks in Immersive Virtual Reality: Design Elements and Their Empirical Study. Multimodal Technologies and Interaction, 4(4), 91. https://doi.org/10.3390/mti4040091

Matamala-Gomez, M., Donegan, T., Bottiroli, S., Sandrini, G., Sanchez-Vives, M. V., & Tassorelli, C. (2019). Immersive Virtual Reality and Virtual Embodiment for Pain Relief. Frontiers in human neuroscience, 13, 279. https://doi.org/10.3389/fnhum.2019.00279

Matamala-Gomez, M., Slater, M., & Sanchez-Vives, M. V. (2022). Impact of virtual embodiment and exercises on functional ability and range of motion in orthopedic rehabilitation. Scientific reports, 12(1), 5046. https://doi.org/10.1038/s41598-022-08917-3

Michela, A., van Peer, J. M., Brammer, J. C., Nies, A., van Rooij, M. M. J. W., Oostenveld, R., Dorrestijn, W., Smit, A. S., Roelofs, K., Klumpers, F., & Granic, I. (2022). Deep-Breathing Biofeedback Trainability in a Virtual-Reality Action Game: A Single-Case Design Study With Police Trainers. Frontiers in psychology, 13, 806163. https://doi.org/10.3389/fpsyg.2022.806163

Modrego-Alarcón, M., López-Del-Hoyo, Y., García-Campayo, J., Pérez-Aranda, A., Navarro-Gil, M., Beltrán-Ruiz, M., Morillo, H., Delgado-Suarez, I., Oliván-Arévalo, R., & Montero-Marin, J. (2021). Efficacy of a mindfulness-based programme with and without virtual reality support to reduce stress in university students: A randomized controlled trial. Behaviour research and therapy, 142, 103866. https://doi.org/10.1016/j.brat.2021.103866

Mystakidis, S., Berki, E., & Valtanen, J.-P. (2021). Deep and Meaningful E-Learning with Social Virtual Reality Environments in Higher Education: A Systematic Literature Review. Applied Sciences, 11(5), 2412. https://doi.org/10.3390/app11052412

North, M. M., North, S. M., & Coble, J. R. (1998). Virtual reality therapy: an effective treatment for phobias. Studies in health technology and informatics, 58, 112–119.

Park, C., Jang, G., & Chai, Y. (2006). Development of a Virtual Reality Training System for Live-Line Workers. International Journal of Human-Computer Interaction, 20(3), 285–303. https://doi.org/10.1207/s15327590ijhc2003_7

Philipp, M. C., Storrs, K. R., & Vanman, E. J. (2012). Sociality of facial expressions in immersive virtual environments: a facial EMG study. Biological psychology, 91(1), 17–21. https://doi.org/10.1016/j.biopsycho.2012.05.008

Qidwai, U., & Ajimsha, M. S. (2015). Can immersive type of Virtual Reality bring EMG pattern changes post facial palsy? 2015 Science and Information Conference (SAI), London, UK, 756–760. https://doi.org/10.1109/SAI.2015.7237227

Quintana, P., Bouchard, S., Botella, C., Robillard, G., Serrano, B., Rodriguez-Ortega, A., Torp Ernst, M., Rey, B., Berthiaume, M., & Corno, G. (2023). Engaging in Awkward Social Interactions in a Virtual Environment Designed for Exposure-Based Psychotherapy for People with Generalized Social Anxiety Disorder: An International Multisite Study. Journal of clinical medicine, 12(13), 4525. https://doi.org/10.3390/jcm12134525

Radhakrishnan, U., Chinello, F., & Koumaditis, K. (2023). Investigating the effectiveness of immersive VR skill training and its link to physiological arousal. Virtual reality, 27(2), 1091–1115. https://doi.org/10.1007/s10055-022-00699-3

Rimer, E., Husby, L. V., & Solem, S. (2021). Virtual Reality Exposure Therapy for Fear of Heights: Clinicians’ Attitudes Become More Positive After Trying VRET. Frontiers in psychology, 12, 671871. https://doi.org/10.3389/fpsyg.2021.671871

Rizzo, A. S., Difede, J., Rothbaum, B. O., Reger, G., Spitalnick, J., Cukor, J., & McLay, R. (2010). Development and early evaluation of the Virtual Iraq/Afghanistan exposure therapy system for combat-related PTSD. Annals of the New York Academy of Sciences, 1208, 114–125. https://doi.org/10.1111/j.1749-6632.2010.05755.x

Rodrigues, K. A., Moreira, J. V. D. S., Pinheiro, D. J. L. L., Dantas, R. L. M., Santos, T. C., Nepomuceno, J. L. V., Nogueira, M. A. R. J., Cavalheiro, E. A., & Faber, J. (2022). Embodiment of a virtual prosthesis through training using an EMG-based human-machine interface: Case series. Frontiers in human neuroscience, 16, 870103. https://doi.org/10.3389/fnhum.2022.870103

Russell, M. E., Hoffman, B., Stromberg, S., & Carlson, C. R. (2014). Use of controlled diaphragmatic breathing for the management of motion sickness in a virtual reality environment. Applied psychophysiology and biofeedback, 39(3-4), 269–277. https://doi.org/10.1007/s10484-014-9265-6

Schoeller, F., Bertrand, P., Gerry, L. J., Jain, A., Horowitz, A. H., & Zenasni, F. (2019). Combining Virtual Reality and Biofeedback to Foster Empathic Abilities in Humans. Frontiers in psychology, 9, 2741. https://doi.org/10.3389/fpsyg.2018.02741

Scorpio, M., Laffi, R., Masullo, M., Ciampi, G., Rosato, A., Maffei, L., & Sibilio, S. (2020). Virtual Reality for Smart Urban Lighting Design: Review, Applications and Opportunities. Energies, 13(15), 3809. https://doi.org/10.3390/en13153809

Seymour, N. E., Gallagher, A. G., Roman, S. A., O’Brien, M. K., Bansal, V. K., Andersen, D. K., & Satava, R. M. (2002). Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Annals of surgery, 236(4), 458–464. https://doi.org/10.1097/00000658-200210000-00008

Smith, V., Warty, R. R., Sursas, J. A., Payne, O., Nair, A., Krishnan, S., da Silva Costa, F., Wallace, E. M., & Vollenhoven, B. (2020). The Effectiveness of Virtual Reality in Managing Acute Pain and Anxiety for Medical Inpatients: Systematic Review. Journal of Medical Internet Research, 22(11), e17980. https://doi.org/10.2196/17980

Song, H., Chen, F., Peng, Q., Zhang, J., & Gu, P. (2018). Improvement of user experience using virtual reality in open-architecture product design. Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture, 232(13), 2264–2275. https://doi.org/10.1177/0954405417711736

Szczepańska-Gieracha, J., Cieślik, B., Serweta, A., & Klajs, K. (2021). Virtual Therapeutic Garden: A Promising Method Supporting the Treatment of Depressive Symptoms in Late-Life: A Randomized Pilot Study. Journal of clinical medicine, 10(9), 1942. https://doi.org/10.3390/jcm10091942