Learn how eye tracking accuracy is measured and which key metrics are used to assess it. Understanding these metrics is crucial for ensuring the reliability and validity of your eye tracking research.


The single most important parameter in eye tracking is accuracy. If the system isn’t capturing where your respondents are actually looking, then the rest of your data is on shaky ground – and you might as well head out for an early lunch.

Because every setup is different – from lab-based monitors to mobile eye tracking glasses – there are a couple of ways to ascertain the accuracy of an eye tracking system. In this article, we’ll explore some of the most widely used methods for measuring accuracy. Each has its own strengths and weaknesses, and understanding these trade-offs will help you choose the right approach for your research environment and hardware.

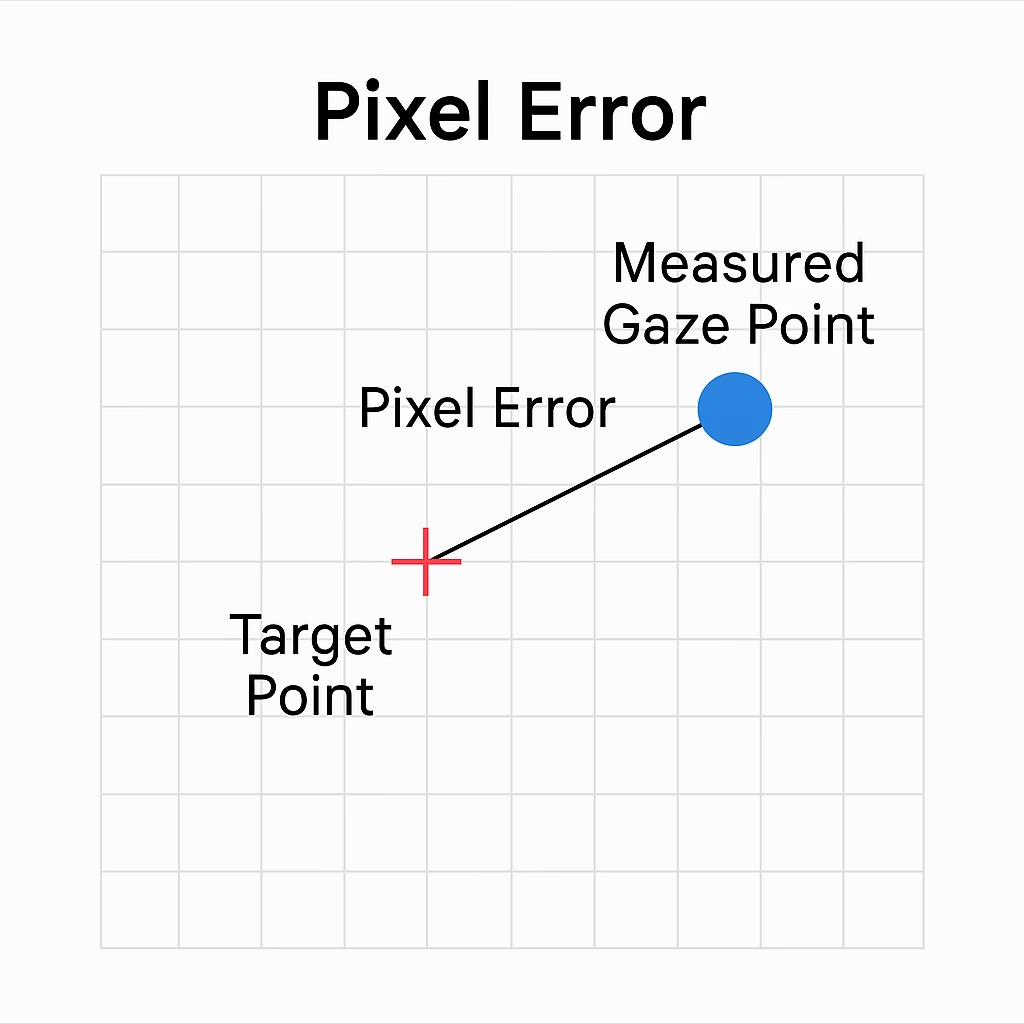

Pixel Error

Pixel error is one of the most popular ways to measure eye tracking accuracy. It shows how accurate the calibration was by expressing, in pixels, the difference between the actual calibration point (e.g., a dot on the screen) and where the eye tracker thinks the person was looking. The result is usually reported as the average (or maximum) deviation across all calibration points.

- A lower pixel error means more accurate calibration.

- For example, if the calibration point is at (500, 400) pixels on the screen but the tracker detects gaze at (505, 398), the error is the straight-line distance between the two points: √(5² + 2²) ≈ 5.4 pixels.
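The calculation above can be sketched in a few lines of Python. This is a minimal illustration, assuming target and gaze coordinates are in the same screen-pixel coordinate system and that each calibration target has one measured gaze point (for example, the mean of the samples collected while that dot was shown). The function names and the two extra calibration points are hypothetical, not taken from any particular eye tracking software.

```python
import math

def pixel_errors(targets, gazes):
    """Euclidean distance, in pixels, between each calibration target and its measured gaze point."""
    if len(targets) != len(gazes):
        raise ValueError("targets and gazes must have the same length")
    return [math.dist(t, g) for t, g in zip(targets, gazes)]

def summarize_pixel_error(targets, gazes):
    """Average and maximum pixel error across all calibration points."""
    errors = pixel_errors(targets, gazes)
    return {"mean": sum(errors) / len(errors), "max": max(errors)}

# The worked example from the text, plus two hypothetical extra calibration points.
targets = [(500, 400), (100, 100), (900, 700)]
gazes = [(505, 398), (103, 104), (890, 700)]

print(round(pixel_errors(targets[:1], gazes[:1])[0], 1))  # 5.4
summary = summarize_pixel_error(targets, gazes)
print(round(summary["mean"], 2), summary["max"])  # 6.8 10.0
```

Reporting both the mean and the maximum is useful: a good average can hide one poorly calibrated corner of the screen.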

Reporting calibration accuracy as pixel error can make good data look worse than it is on smaller phones. The cause is pixel density, measured in pixels per inch (PPI), which differs between desktop monitors and phone or tablet screens: the smaller the device, the higher the PPI. For reference, a modern iPhone has a resolution of 2556×1179, while a standard desktop monitor has a similar resolution spread over a much larger area.

This means that smartphones and tablets often show a higher pixel error than desktop screens, simply because a similar number of pixels is packed into a smaller frame, so the same physical offset spans more pixels.
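To make the density effect concrete, the sketch below holds a physical on-screen offset fixed and converts it to pixels on two displays. The display sizes (a 6.1-inch phone at 2556×1179 and a 24-inch monitor at 1920×1080) are assumptions chosen for illustration, and the sketch deliberately ignores the fact that phones are usually held closer to the eyes than monitors.

```python
import math

def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch, from native resolution and diagonal screen size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

def mm_to_px(offset_mm, pixels_per_inch):
    """Convert a physical on-screen offset in millimetres to pixels (25.4 mm per inch)."""
    return offset_mm / 25.4 * pixels_per_inch

# Assumed display specs, for illustration only.
phone_ppi = ppi(2556, 1179, 6.1)     # 6.1-inch phone
monitor_ppi = ppi(1920, 1080, 24.0)  # 24-inch desktop monitor

# The same 5 mm physical offset on both screens.
for name, density in [("phone", phone_ppi), ("monitor", monitor_ppi)]:
    print(f"{name}: {density:.0f} PPI, 5 mm offset = {mm_to_px(5, density):.1f} px")
```

With these assumed specs the phone has roughly five times the monitor's pixel density, so the same 5 mm offset is reported as roughly five times as many pixels.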

Why measure accuracy in pixels?

For screen-based eye tracking systems, reporting accuracy in pixels can be the most practical choice. Since the participant’s gaze is always directed at a defined display, pixel error directly reflects how far the measured gaze point is from the target on that same screen.

This makes interpretation straightforward: if the error is 20 pixels, researchers immediately know how far the gaze estimate deviates within the digital content itself, whether that’s a webpage, a video, or an interface. Because areas of interest (AOIs) are also defined in pixels, the error can be compared directly with AOI dimensions, giving researchers a direct sense of how accuracy affects AOI-based analyses. Pixel error ties accuracy to the exact medium the participant is interacting with, making it an intuitive and useful measure for screen-based experiments.
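One way to put pixel error to work is to compare it with the AOIs in a study. The sketch below is a hypothetical example: the `AOI` structure, the AOI coordinates, and the idea of growing each AOI by the measured error as a tolerance margin are illustrative choices, not a prescribed method.

```python
from dataclasses import dataclass

@dataclass
class AOI:
    """Rectangular area of interest in screen pixels (hypothetical structure)."""
    name: str
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

def error_fraction(aoi: AOI, pixel_error: float) -> float:
    """Pixel error as a fraction of the AOI's shortest side."""
    return pixel_error / min(aoi.width, aoi.height)

def hits_aoi(aoi: AOI, gx: float, gy: float, margin: float = 0.0) -> bool:
    """True if gaze point (gx, gy) lies inside the AOI grown by `margin` pixels on every side."""
    return (aoi.x - margin <= gx <= aoi.x + aoi.width + margin
            and aoi.y - margin <= gy <= aoi.y + aoi.height + margin)

aois = [AOI("buy_button", 820, 600, 160, 48), AOI("hero_image", 0, 0, 1280, 400)]
measured_error = 20.0  # px, e.g. the mean pixel error from calibration

for aoi in aois:
    print(f"{aoi.name}: error = {error_fraction(aoi, measured_error):.0%} of shortest side")

# A gaze sample 5 px left of the button misses it strictly, but hits it within the error margin.
print(hits_aoi(aois[0], 815, 610), hits_aoi(aois[0], 815, 610, margin=measured_error))
```

A 20-pixel error is a large fraction of a 48-pixel-tall button but small relative to a 400-pixel-tall image, which is why small AOIs deserve extra caution.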

Multi-camera world model accuracy

If you’re working with a system that builds a world model using multiple cameras, such as the Smart Eye Pro system, then you’re no longer limited to a flat screen reference. In these setups, gaze accuracy must be defined relative to objects and distances in physical space. Instead of pixels, deviations can be expressed directly in real-world units such as centimeters or meters. Conceptually, this is the same measure of accuracy – the offset between true and estimated gaze – but adapted to the spatial scale of the environment rather than a screen.
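In a world-model setup the same idea applies in three dimensions. The sketch below assumes the true target and the estimated gaze point (for example, where the gaze ray intersects a modelled surface) are both given in metres in one shared world coordinate frame. The coordinates are made up, and this is a generic calculation, not the Smart Eye Pro API.

```python
import math

def world_error_cm(target_xyz_m, gaze_xyz_m):
    """Straight-line distance between the true target and the estimated gaze point.

    Both points are assumed to be in metres in one shared world frame;
    the result is reported in centimetres.
    """
    return math.dist(target_xyz_m, gaze_xyz_m) * 100.0

# Hypothetical example: a target on a dashboard and the estimated gaze hit point.
target = (0.40, -0.25, 0.80)
gaze_hit = (0.42, -0.23, 0.80)
print(f"{world_error_cm(target, gaze_hit):.1f} cm")  # 2.8 cm
```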

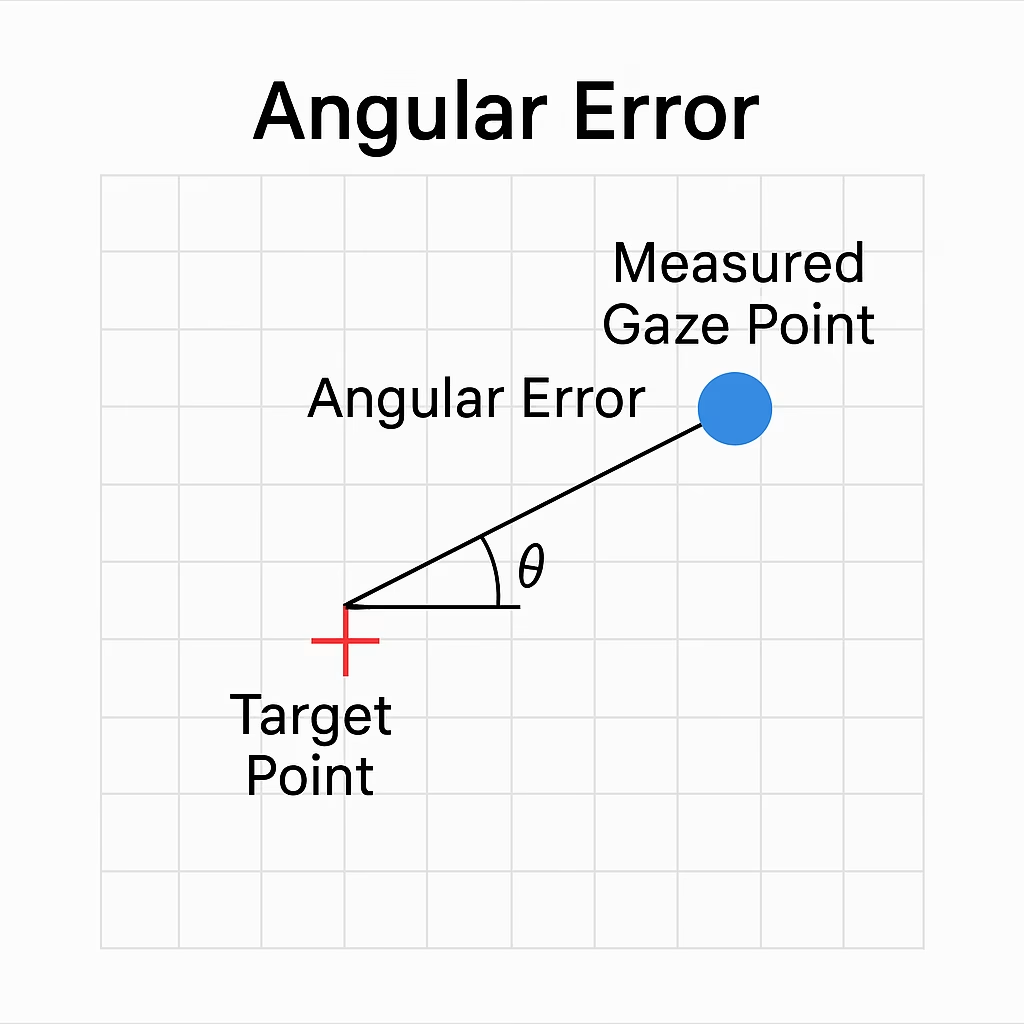

Angular Error

Angular error is another popular measure of eye tracker accuracy. It describes the angular distance, in degrees of visual angle, between where a person was actually looking (the calibration target) and where the eye tracker estimated their gaze to be.

Here’s how it works:

- When you calibrate an eye tracker, the participant looks at specific points on a screen or in the environment.

- The system then estimates the gaze position.

- Angular error is the angular distance between:

  - The true stimulus point (where the participant was supposed to look).

  - The measured gaze point (where the eye tracker thinks they were looking).
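Given the eye position, the target, and the estimated gaze point in one 3D coordinate frame, angular error is the angle between the two viewing directions. This Python sketch assumes such coordinates are available (real systems derive them from their own eye and head models) and uses `atan2` of the cross and dot products, which stays numerically stable for the small angles typical of eye tracking. The setup values are hypothetical.

```python
import math

def angular_error_deg(eye, target, gaze):
    """Angle in degrees between the eye->target and eye->gaze directions.

    All three points must be in the same 3D coordinate frame and unit.
    """
    v1 = [t - e for t, e in zip(target, eye)]
    v2 = [g - e for g, e in zip(gaze, eye)]
    dot = sum(a * b for a, b in zip(v1, v2))
    cross = (
        v1[1] * v2[2] - v1[2] * v2[1],
        v1[2] * v2[0] - v1[0] * v2[2],
        v1[0] * v2[1] - v1[1] * v2[0],
    )
    # atan2(|v1 x v2|, v1 . v2) avoids the precision loss of acos near 0 degrees.
    return math.degrees(math.atan2(math.hypot(*cross), dot))

# Hypothetical setup in cm: eye at the origin, screen plane 57 cm away,
# measured gaze 1 cm to the right of the target.
eye = (0.0, 0.0, 0.0)
target = (0.0, 0.0, 57.0)
gaze = (1.0, 0.0, 57.0)
print(f"{angular_error_deg(eye, target, gaze):.2f} deg")  # 1.01 deg
```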

Why measure in angles instead of pixels?

If accuracy were only reported in pixels, the results would be tied to a specific screen resolution, size, and viewing distance. That means the same error could look very different depending on whether someone was sitting close to a small laptop screen or farther away from a large monitor.

By expressing accuracy in degrees of visual angle, the measure becomes independent of screen size, resolution, and viewing distance. An angular error of 1° represents the same visual offset for the participant’s eye no matter the screen: in practice, 1° corresponds to about 1 cm at a viewing distance of 57 cm, or about 2 cm at 114 cm. This makes angular error a consistent standard that allows researchers to compare accuracy across different devices, labs, and study conditions.
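The conversion between visual angle and on-screen size follows from basic trigonometry: an extent s viewed at distance d subtends θ = 2·atan(s / 2d), so s = 2·d·tan(θ/2). The sketch below implements both directions, plus a conversion to pixels that assumes you know the display’s pixels per centimetre. The 24-inch 1920×1080 monitor and the 65 cm viewing distance in the example are assumptions.

```python
import math

def angle_to_size(deg: float, distance: float) -> float:
    """Extent subtended by `deg` degrees at `distance` (result in the same unit as distance)."""
    return 2 * distance * math.tan(math.radians(deg) / 2)

def size_to_angle(size: float, distance: float) -> float:
    """Visual angle in degrees subtended by `size` at `distance` (same units)."""
    return math.degrees(2 * math.atan(size / (2 * distance)))

def angle_to_pixels(deg: float, distance_cm: float, px_per_cm: float) -> float:
    """Angular error converted to pixels for a given viewing distance and pixel density."""
    return angle_to_size(deg, distance_cm) * px_per_cm

for d in (57, 114):
    print(f"1 deg at {d} cm -> {angle_to_size(1.0, d):.2f} cm")

# Assumed display: 24-inch 1920x1080 monitor, about 91.8 PPI, i.e. about 36.1 px/cm.
px_per_cm = math.hypot(1920, 1080) / 24.0 / 2.54
print(f"0.5 deg at 65 cm -> {angle_to_pixels(0.5, 65, px_per_cm):.1f} px")
```

Under these assumptions, a 0.5° error works out to roughly 20 pixels, which links the angular and pixel views of the same error.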

Typical values

- High-quality desktop eye trackers: ~0.3°–0.5° angular error.

- Mobile or glasses-based systems: often 0.5°–1.0° or higher.

Relation to precision

- Angular error = accuracy (systematic deviation from the target).

- Precision = consistency (how tightly repeated gaze points cluster).

Both matter: a tracker can be precise but inaccurate (consistently off-target), or accurate but imprecise (scattered points around the target).
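The distinction can be made concrete with two common summary measures. The sketch below assumes gaze samples recorded while the participant fixates a single target, in any consistent 2D unit (pixels, or horizontal and vertical degrees). It takes accuracy as the offset of the samples’ centroid from the target; note that some tools instead average the per-sample offsets. Precision is shown both as the RMS of sample-to-sample distances and as the spread around the centroid. The sample data is invented to reproduce the two cases described above.

```python
import math
from statistics import fmean

def centroid(samples):
    """Mean (x, y) position of the gaze samples."""
    return fmean(x for x, _ in samples), fmean(y for _, y in samples)

def accuracy(samples, target):
    """Offset of the sample centroid from the target: the systematic error."""
    return math.dist(centroid(samples), target)

def precision_rms_s2s(samples):
    """Root mean square of distances between successive samples."""
    sq = [math.dist(a, b) ** 2 for a, b in zip(samples, samples[1:])]
    return math.sqrt(fmean(sq))

def precision_sd(samples):
    """Spread around the centroid: sqrt(var_x + var_y), population variance."""
    cx, cy = centroid(samples)
    return math.sqrt(fmean((x - cx) ** 2 + (y - cy) ** 2 for x, y in samples))

target = (0.0, 0.0)
# Invented data: a tight cluster 30 units right of the target vs. a wide scatter centred on it.
precise_but_inaccurate = [(30.0, 0.0), (30.5, 0.0), (30.0, 0.5), (29.5, 0.0)]
accurate_but_imprecise = [(10.0, 0.0), (-10.0, 0.0), (0.0, 10.0), (0.0, -10.0)]

for name, s in [("precise/inaccurate", precise_but_inaccurate),
                ("accurate/imprecise", accurate_but_imprecise)]:
    print(f"{name}: accuracy={accuracy(s, target):.2f} "
          f"rms_s2s={precision_rms_s2s(s):.2f} sd={precision_sd(s):.2f}")
```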

What Can Affect Eye-Tracking Accuracy

When working with eye tracking, it’s important to understand what “accuracy” really means. As mentioned earlier, on smaller screens such as phones you may notice what looks like reduced accuracy. This is a side effect of measuring offsets in pixels on a high-density display; it doesn’t mean the eye tracker itself is less accurate.

That said, some real-world factors can affect how accurately an eye tracker measures gaze. Common issues include:

- Glasses with strong prescriptions or progressive lenses – These can distort light, making it harder for the tracker to interpret gaze correctly.

- Glasses with IR-blocking coatings – Since most eye trackers use infrared light, these lenses can reduce accuracy or even prevent tracking entirely.

- Dirty or reflective glasses – Smudges, grease, or strong glare can interfere with the sensors.

- Eye conditions – Participants with nystagmus or similar conditions may not calibrate well.

- Face and head coverings – Hats, masks, and similar items worn during a session can obstruct the eyes and disrupt tracking.

Best Practices for Researchers

To ensure the most accurate results, researchers can take a few practical steps:

- When using smaller screens – Manually verify results in the verification tab. Ask participants to fixate on specific points to confirm accuracy before starting the study.

- Participants with glasses

  - Exclude participants wearing IR-blocking or progressive glasses.

  - Other glasses wearers can be included or excluded at the researcher’s discretion, depending on calibration quality.

- Participants with certain conditions – People who have had eye surgery, or who experience nystagmus or similar issues, may not calibrate well and should often be excluded.

- Room setup – Use controlled lighting that avoids infrared sources. Overhead or frontal lighting is best, but avoid glare on participants’ glasses.

- Ergonomics – Use a fixed chair to minimize head movement. Combine it with an adjustable-height table so the setup fits each participant comfortably.
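The verification step can also be scripted once participants’ verification fixations have been recorded. The sketch below is hypothetical: the `Participant` record, the 1.0° mean and 2.0° worst-point thresholds, and the sample errors are assumptions to adapt to your own study, and it does not reproduce any particular software’s verification tab.

```python
from dataclasses import dataclass, field
from statistics import fmean

# Hypothetical, study-specific thresholds; tune them to your hardware and stimuli.
MAX_MEAN_ERROR_DEG = 1.0
MAX_POINT_ERROR_DEG = 2.0

@dataclass
class Participant:
    pid: str
    validation_errors_deg: list[float] = field(default_factory=list)  # one per fixation point
    ir_blocking_or_progressive_glasses: bool = False

def screen(p: Participant) -> tuple[bool, str]:
    """Return (accepted, reason) for one participant's verification data."""
    if p.ir_blocking_or_progressive_glasses:
        return False, "exclude: IR-blocking or progressive glasses"
    if not p.validation_errors_deg:
        return False, "recalibrate: no verification data"
    mean_err = fmean(p.validation_errors_deg)
    worst = max(p.validation_errors_deg)
    if mean_err > MAX_MEAN_ERROR_DEG or worst > MAX_POINT_ERROR_DEG:
        return False, f"recalibrate: mean {mean_err:.2f} deg, worst {worst:.2f} deg"
    return True, f"ok: mean {mean_err:.2f} deg, worst {worst:.2f} deg"

participants = [
    Participant("P01", [0.4, 0.6, 0.5, 0.7]),
    Participant("P02", [0.8, 1.4, 2.6, 0.9]),
    Participant("P03", [0.5, 0.5], ir_blocking_or_progressive_glasses=True),
]
for p in participants:
    print(p.pid, *screen(p))
```

Checking the worst point as well as the mean catches cases where one region of the screen calibrated poorly, which matters most when small AOIs sit in that region.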
