Facial coding and fEMG are two leading methods for analyzing facial expressions in biometric research. This article compares their strengths in breadth, depth, application, and output to help researchers choose the right tool for their needs. Discover the differences between facial coding and fEMG today!

Ladies and Gentlemen! On the iMotions blog today we will pit two popular sensors against each other, head-to-head (or is it face-to-face?)!

But first, some background.

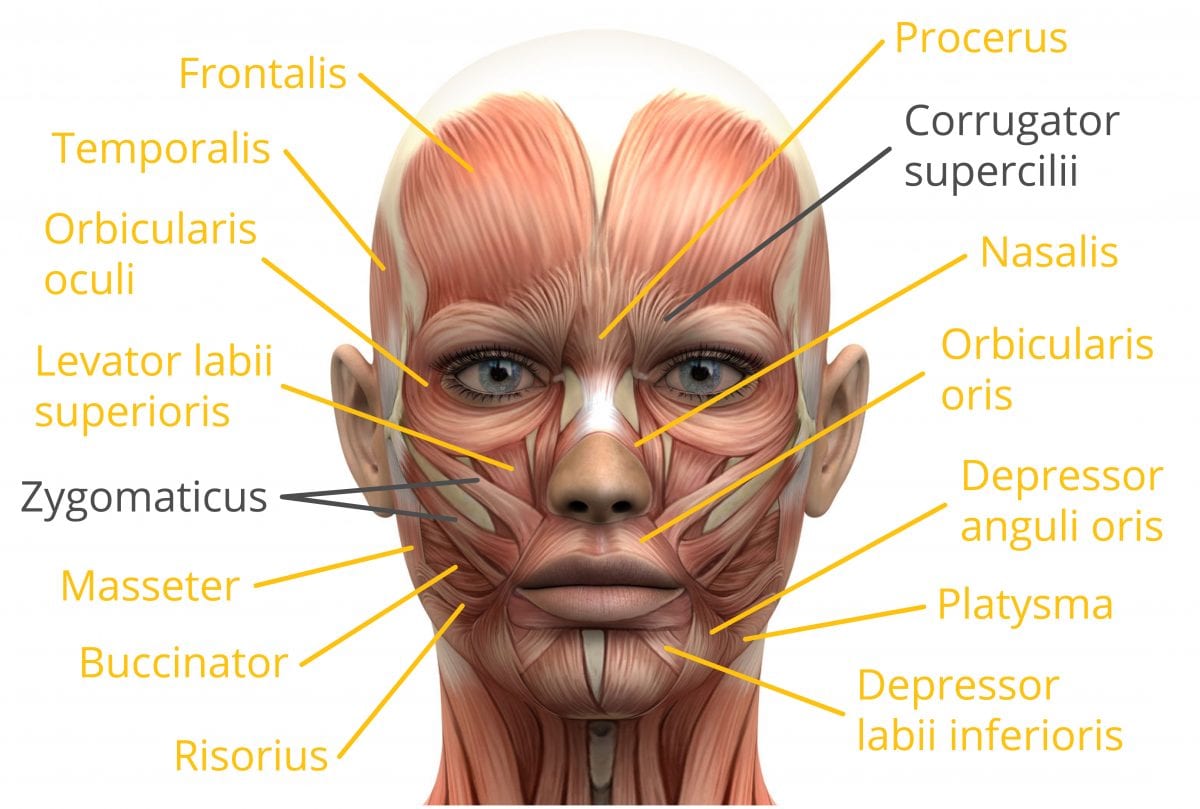

When we say “emotional expressions”, the face is the first thing people think of. Why is that? The human face consists of 43 muscles attached to the front of the skull, and these muscles have no other discernible purpose than to convey emotional information to others.

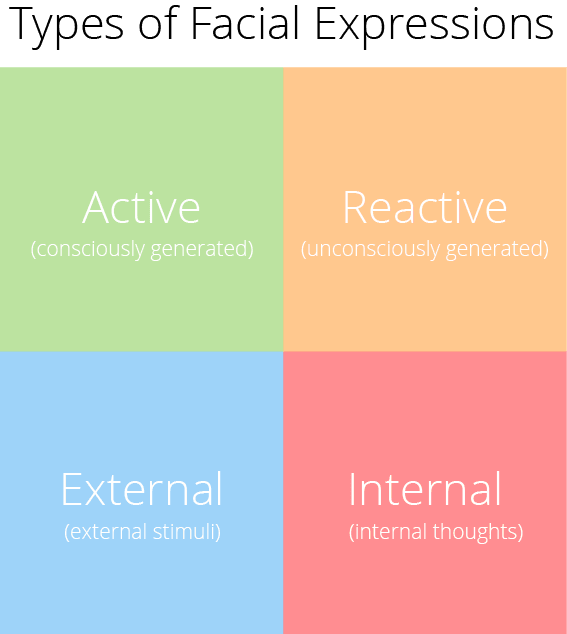

We can change our facial expressions actively (like an actor in your favorite movie), or reactively (like you laughing at an actor in your favorite movie). Facial expressions can be prompted by something external to us (like tasting your favorite food) or internal to us (like thinking about your favorite food when you’re on a diet).

Facial expressions are such an important emotional barometer to us, we’re even trying to find them in our pets.

So, let’s face it (ha!): facial expressions are an integral part of biometric research.

Now, our brains are already the best pound-for-pound facial expression analytical heavyweight there is. In a split-second we can read the atmosphere of a room, watch the joy on a child’s face, or determine whether someone is lying. In both speed and power, our brains reign supreme. Unfortunately, our brains don’t provide usable data that you can format in Excel – and that’s where we come in.

In this article, we will compare and contrast webcam-based Facial Expression Analysis (FEA) with Facial Electromyography (fEMG) in four categories:

- Breadth

- Depth

- Application

- Output

So which sensor will win this fight? Let’s get ready to research!

Round 1: Breadth

What is Facial Expression Analysis?

FEA involves the use of an automatic facial coding algorithm, like those provided by Affectiva or Emotient. This algorithm uses a simple video feed – whether it’s from a laptop, tablet, phone, GoPro, or standalone webcam.

The nitty-gritty details on how these algorithms work can be found here. But, what’s important is that the entire face is detected, and the individual movements of the face are registered by the position of facial landmarks on the eyes, brows, nose and mouth.

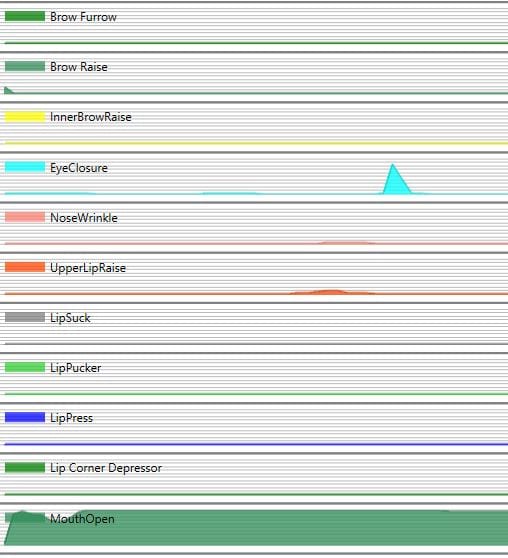

From the relative movement of these landmarks, you can derive Action Units like Brow Furrow, Eye Widen or Lip Corner Depressor. These Action Units can then provide information on the likelihood of a Core Emotion being expressed. For example, weighted scores of Nose Wrinkle and Upper Lip Raise can contribute to the overall score for Disgust.
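To make the idea of weighted Action Units concrete, here is a minimal Python sketch. The weights, the 0-100 score scale, and the function name `emotion_score` are hypothetical and chosen purely for illustration; they are not the values or the model used by Affectiva, Emotient, or iMotions.

```python
# Hypothetical weights: how strongly each Action Unit contributes to Disgust.
# Real facial coding engines use their own models; these numbers are made up.
DISGUST_WEIGHTS = {"nose_wrinkle": 0.6, "upper_lip_raise": 0.4}


def emotion_score(action_units, weights):
    """Combine Action Unit scores (0-100) into one weighted emotion score (0-100)."""
    total_weight = sum(weights.values())
    weighted_sum = sum(action_units.get(name, 0.0) * w for name, w in weights.items())
    return weighted_sum / total_weight


# Action Unit scores for a single video frame (made-up numbers).
frame = {"nose_wrinkle": 80.0, "upper_lip_raise": 50.0, "brow_furrow": 10.0}
disgust = emotion_score(frame, DISGUST_WEIGHTS)
print(f"Disgust score: {disgust:.1f}")
```

Action Units that carry no weight for a given emotion (Brow Furrow in this example) simply do not influence its score.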

Affectiva’s FEA algorithm can provide scores for 21 Action Units and 7 Core Emotions, while also providing scores on Engagement and Valence to boot, all from a simple webcam feed. That’s a ridiculous amount of breadth!

fEMG, by comparison, derives facial expressions by measuring the activation of individual muscles. This involves putting electrodes on the skin, so you can measure the electrical activity of the muscles underneath.

Now, each of the Action Units described in FEA can also be discerned using fEMG – however, the hardware you use for fEMG will limit the number of muscles you are able to record from. For example, a single Shimmer EXG will only let you record from two muscles; a BIOPAC wired EMG amplifier will only let you record from one.

You could theoretically record fEMG from every muscle to get all the Action Units, but that would involve a whole lot of stuff stuck to your face, and that’s an unpleasant experience no matter how you slice it.

WINNER: FACIAL EXPRESSION ANALYSIS

Round 2: Depth

As discussed earlier, FEA uses a simple camera feed to calculate the likelihood that a facial movement is being expressed. This likelihood is expressed as a probability. However, relying on a camera comes with one small problem:

FEA’s detection capability is limited to facial expressions that can be seen.

What about microexpressions, or those tiny facial expressions that last 1/50th of a second? Or what about really subtle facial expressions, where the underlying facial musculature is active, but not active enough to actually move the skin?

This is where fEMG really shines.

fEMG involves the placement of electrodes on the surface of the skin to measure the electrical activity of the muscle underneath. This activity is typically expressed in millivolts, but can be as small as microvolts depending on the muscle: a minuscule amount!

This means that the output of fEMG is a true indicator of facial activation, and not a probability like that produced by FEA.

It’s also worth noting that fEMG has much higher temporal resolution than FEA: good EMG data is collected at a whopping 512 Hz, compared to 30 Hz for FEA. So those super-quick microexpressions? Easily detected with fEMG, less so with FEA.
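A quick back-of-the-envelope calculation shows why the sampling rate matters. The sketch below uses the 1/50th of a second duration and the 512 Hz and 30 Hz rates quoted in this article; the helper name `samples_during` is just an illustrative choice.

```python
def samples_during(duration_s, sampling_rate_hz):
    """Expected number of samples that fall inside an event of the given duration."""
    return duration_s * sampling_rate_hz


MICROEXPRESSION_S = 1 / 50  # 20 ms, the duration quoted in this article

femg_samples = samples_during(MICROEXPRESSION_S, 512)  # fEMG at 512 Hz
fea_frames = samples_during(MICROEXPRESSION_S, 30)     # webcam at 30 Hz

print(f"fEMG samples per microexpression: {femg_samples:.2f}")
print(f"FEA frames per microexpression:   {fea_frames:.2f}")
```

At 512 Hz, roughly ten samples land inside a 20 ms expression, while a 30 Hz camera captures less than one frame on average, so the expression can fall entirely between two frames.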

This makes fEMG a perfect sensor for when you’re looking for really subtle or fleeting expressions.

So, while fEMG may not have the breadth of measurements across the spectrum of human facial expressions, if you’re interested in one or two target muscles (like those for frowning and smiling), fEMG displays exceptional sensitivity and depth of measurement in both timing and amplitude.

WINNER: FACIAL ELECTROMYOGRAPHY

Round 3: Application

What a match! We’ve seen victories on either side with regard to depth and breadth of data. But which use cases are best for FEA, and which are best for fEMG?

Well, for screen-based stimuli such as images, videos, websites, surveys and screen recordings, FEA is super easy to implement since it just involves a webcam. Personally, we like the Logitech C920, although practically any webcam will do. All it has to do is sit on top of your respondent’s screen, and voilà!

An oft-ignored perk of using FEA is the fact that you don’t even need to record people during a live collection to get facial expression data – iMotions has a convenient feature that allows you to post-import videos for retroactive FEA. This is a great option if you have multiple camera feeds through which you’d like to analyze facial expressions. You can also designate which face to analyze if there are multiple people within a video feed!

So it sounds like FEA is versatile, easy, and provides a ton of data with minimal work. However, FEA has one key limitation: the respondent being studied has to be in full view of a webcam. And when it comes to the myriad options in human behavior research, sometimes the use of a webcam isn’t possible.

In VR studies, it’s impossible to do FEA because the face is occluded by the headset. This also goes for mobile studies, not only because people are moving around in a space, and so are hard to capture on camera, but also because they may be wearing eye-tracking glasses, which also occlude the brow and can interfere with FEA. In both cases, an fEMG setup is a perfect alternative for capturing valence data.

Another unsung benefit of using EMG is that it need not be restricted to facial muscles. Depending on your research question, you could measure muscle activity from anywhere on the body. For example, neck and shoulder EMG can be used to assess stress and startle responses. Arm and hand EMG can quantify grasping behaviour. For kinesiology, ergonomic and movement science labs, EMG is an essential tool.

In summary, it’s impossible to say which has better applicability. In fact, FEA and fEMG are perfect complements to each other, and can be used in a wide variety of applications across the spectrum of human behavior research.

WINNER: DRAW

Round 4: Output

We’re neck and neck coming into the final round! We’ve seen that FEA and fEMG are equally versatile in application. But when the study is done and you’ve collected your data, what can you do with it?

Once FEA data has been post-processed within iMotions, our analysis tools offer a built-in algorithm known as Signal Thresholding. This converts continuous FEA data into a 1 (yes) or 0 (no) over time, per respondent and per stimulus.

Signal thresholding is a great tool for two reasons – a) it allows for easy quantification of data (since now you’re dealing with 0s and 1s); and b) it allows for easy aggregation of population metrics, where for a given stimulus you can see how many people showed a particular emotion at a given time, and for how long. This makes the data easy to summarize and visualize – both of which you can easily and quickly do in iMotions!
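The general idea behind thresholding and aggregation can be sketched in a few lines of Python. This is not the iMotions implementation: the threshold of 50, the function names, and the Joy scores below are all assumptions made up for illustration.

```python
def threshold_signal(scores, threshold=50.0):
    """Convert continuous scores into 1 (at or above threshold) or 0 (below)."""
    return [1 if s >= threshold else 0 for s in scores]


def count_per_sample(binary_by_respondent):
    """For each time point, count how many respondents showed the expression."""
    return [sum(column) for column in zip(*binary_by_respondent.values())]


# Made-up Joy scores (0-100) for three respondents, same stimulus, same time base.
joy = {
    "R1": [5, 20, 65, 80, 40],
    "R2": [0, 55, 70, 30, 10],
    "R3": [10, 15, 20, 60, 75],
}

binary = {rid: threshold_signal(scores) for rid, scores in joy.items()}
counts = count_per_sample(binary)
print(binary["R1"])  # [0, 0, 1, 1, 0]
print(counts)        # [0, 1, 2, 2, 1]
```

Once every respondent is reduced to 0s and 1s, population metrics become simple sums: `counts` shows how many people expressed Joy at each time point, and summing one respondent's list gives the number of samples for which they expressed it.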

fEMG, while theoretically more powerful in export, requires a bit more legwork once the data is collected. The raw data is in millivolts, and the signal must be cleaned and smoothed in order to get any quantifiable results. This means that if you want to use fEMG, you will need:

- Auxiliary software to clean the data (such as MATLAB, BIOPAC’s AcqKnowledge, Python, or R)

- Expertise in coding and signal processing

But, once the fEMG is in a usable format, you can derive powerful metrics such as exact levels of muscle activation and timing. You can also post-import the cleaned EMG data back into iMotions for ease of visualization.
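To give a feel for what "cleaned and smoothed" can involve, here is a deliberately simplified Python sketch that removes the baseline offset, rectifies the signal, and smooths it with a moving average. The function name `clean_emg`, the window size, and the sample values are assumptions for illustration; real pipelines typically also band-pass filter the raw signal first (for example with SciPy), which is omitted here to keep the example dependency-free.

```python
def clean_emg(raw_mv, window=5):
    """Baseline-correct, rectify, and smooth a raw EMG trace (values in mV)."""
    # 1. Remove the DC offset so the signal is centred on zero.
    baseline = sum(raw_mv) / len(raw_mv)
    centred = [v - baseline for v in raw_mv]

    # 2. Full-wave rectification: keep the magnitude, drop the sign.
    rectified = [abs(v) for v in centred]

    # 3. Smooth with a trailing moving average to get an amplitude envelope.
    smoothed = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        chunk = rectified[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Made-up trace: low activity, a burst of muscle activation, then low activity.
raw = [0.10, -0.10, 0.10, -0.10, 0.50, -0.50, 0.50, -0.50, 0.10, -0.10]
envelope = clean_emg(raw, window=2)
print([round(v, 2) for v in envelope])
```

The resulting envelope rises during the burst and falls afterwards, which is the kind of trace from which activation level and timing can then be read.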

However… in the final round, when it comes down to the output of a measurement, FEA delivers the knockout punch for the widespread ease with which you can derive insightful conclusions from the data.

WINNER: FACIAL EXPRESSION ANALYSIS

So there we have it, ladies and gentlemen! Here’s the scorecard for the amazing match we just witnessed:

Breadth: Facial Expression Analysis

Depth: Facial EMG

Application: DRAW

Output: Facial Expression Analysis

For a quick rundown of the differences between these two facial expression analysis tools, check out the chart below.

But, even though FEA may have more points than fEMG, is any one technique really better than the other?

Not really… because it all depends on your research question. Both FEA and fEMG can be powerful tools in the right use case. But don’t let a lack of technical expertise limit your research. After all, that’s why we’re here – to make it easier for you. If you’d like more information, please feel free to check out our Facial Expressions Pocket Guide, or reach out to your friendly neighborhood iMotions rep to see how facial expressions can elevate your research!
