iMotions has entered the field of voice and speech analysis, offering new ways to conduct multimodal voice analysis research with a new software feature and a brand-new module. This article explains how these two additions can provide deeper insights in human behavior research.


As readers of our blogs and subscribers to our newsletter will know by now, iMotions has begun to move into the voice analysis space. The human voice was the last major channel of emotional expression not yet open to analysis in iMotions, and it is now getting its place in human behavior research.


Speech-to-Text analysis

In June, iMotions took its first step toward offering speech and voice analysis when we launched our Speech-to-Text analysis feature, developed in collaboration with AssemblyAI. This feature marked a turning point for emotion analysis in iMotions, as it was our first official foray into the voice analysis space.

The Speech-to-Text feature is a versatile tool that simplifies speech analysis by automating the arduous and time-consuming process of transcribing audio and video recordings, while simultaneously attributing emotional labels to words within the content.

AssemblyAI’s Audio Intelligence software, which iMotions’ feature is built on, is a cutting-edge platform that specializes in processing and understanding audio data. Leveraging advanced machine learning algorithms, it can transcribe spoken words from audio recordings with impressive accuracy, making it an invaluable tool for industries like transcription services, content creation, and customer service.

Additionally, AssemblyAI’s Audio Intelligence software goes beyond mere transcription by offering speaker identification, sentiment analysis, and keyword extraction, providing valuable insights from spoken content.

This addition to the already extensive iMotions feature library holds immense value for researchers, businesses, and educators. Speech-to-Text analysis allows for the swift and accurate extraction of emotional insights from spoken content, while also providing a transcript divided into sections. With this, you can pinpoint and categorize emotionally charged words in an interview, lecture, or customer service interaction. This capability lets users identify emotional triggers, assess sentiment, and uncover underlying emotional dynamics within conversations and content, all within the user-friendly iMotions software.

The Voice Analysis Module

Speech-to-Text analysis was only the first step, and we didn’t rest on our laurels after its success. Instead, we took the next, and much more significant, leap into the auditory and voice space with the introduction of our new Voice Analysis Module, developed in partnership with audEERING.

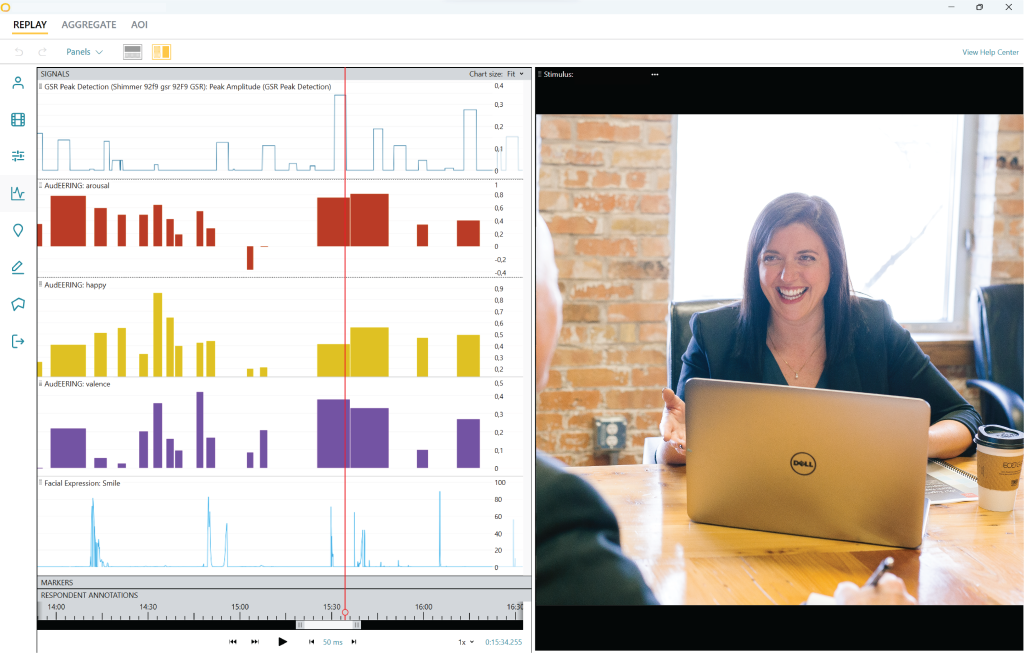

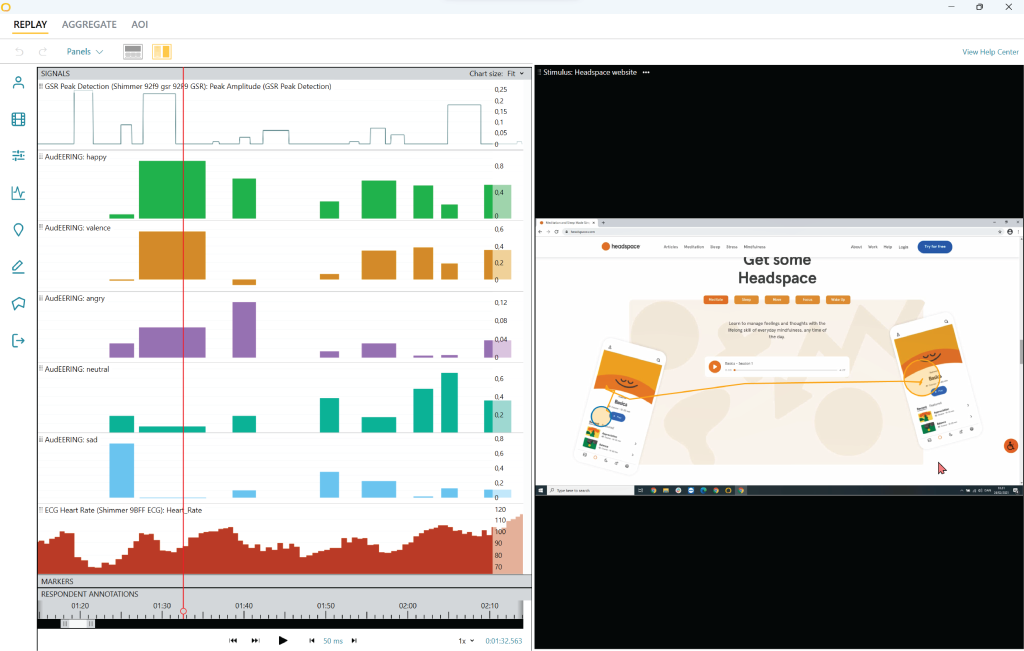

This module capitalizes on the unique acoustic characteristics of the human voice. It uses cutting-edge machine learning algorithms to analyze aspects such as pitch and stress in a speaker’s voice. These acoustic features are closely associated with the emotional valence of speech – the underlying positivity or negativity of an emotion.
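Here is what "analyzing pitch" can mean at the signal level. The minimal sketch below implements one classic technique, autocorrelation-based pitch estimation, in plain Python. It is not audEERING’s or iMotions’ implementation. The function name `estimate_pitch_autocorr`, the 50 to 500 Hz search range, and the synthetic test tone are illustrative assumptions.

```python
import math


def estimate_pitch_autocorr(samples, sample_rate, fmin=50.0, fmax=500.0):
    """Estimate the fundamental frequency (Hz) of one voiced frame.

    Picks the lag (in samples) whose autocorrelation, normalized by the
    frame energy, is highest within the plausible pitch range
    [fmin, fmax]. Returns None for silent frames or if no lag
    correlates positively.
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]                 # remove any DC offset
    energy = sum(v * v for v in x)
    if energy == 0.0:
        return None                                 # silence: no pitch to estimate

    min_lag = int(sample_rate / fmax)               # shortest period considered
    max_lag = min(int(sample_rate / fmin), n - 1)   # longest period that fits

    best_lag, best_corr = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        corr = sum(x[i] * x[i + lag] for i in range(n - lag)) / energy
        if corr > best_corr:
            best_lag, best_corr = lag, corr

    return None if best_lag is None else sample_rate / best_lag


if __name__ == "__main__":
    sr = 16000
    # 50 ms synthetic 200 Hz tone standing in for a voiced speech frame
    frame = [math.sin(2 * math.pi * 200 * t / sr) for t in range(800)]
    print(estimate_pitch_autocorr(frame, sr))
```

On the synthetic 200 Hz tone this should return a value close to 200 Hz. Real speech is messier. Plain autocorrelation can lock onto half or double the true pitch (octave errors), which is one reason production toolkits use more robust pitch trackers.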

Built on openSMILE 3.0

The new voice analysis module integrates audEERING’s devAIce platform, which is built on the acclaimed openSMILE 3.0 software.

The openSMILE software is an open-source audio and speech processing toolkit developed at the Technical University of Munich by a team that now works at audEERING. It is designed to extract a wide range of audio features and information from audio signals. These features include acoustic, prosodic, and high-level descriptors, making it a valuable tool for tasks such as speech analysis, emotion recognition, speaker identification, and more. openSMILE is widely used in academic research (cited in thousands of peer-reviewed publications) and in industry to process audio data, enabling the development of applications in fields like natural language processing, audio mining, and human-computer interaction.
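Toolkits like openSMILE typically work in two stages. They compute low-level descriptors (LLDs) frame by frame, then summarize each descriptor contour with statistical functionals such as the mean and standard deviation. The sketch below mimics that two-stage pattern in plain Python with a single LLD, RMS energy. It is not openSMILE code and does not reproduce any openSMILE feature set. The function names and the 25 ms frame and 10 ms hop defaults are illustrative assumptions.

```python
import math
import statistics


def frame_signal(samples, frame_len, hop):
    """Split a signal into overlapping frames; a trailing partial frame is dropped."""
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop)]


def rms_energy(frame):
    """Low-level descriptor: root-mean-square energy of one frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def functionals(contour):
    """Summarize a frame-level descriptor contour with simple statistics."""
    return {
        "mean": statistics.fmean(contour),
        "stddev": statistics.pstdev(contour),
        "min": min(contour),
        "max": max(contour),
    }


def extract_energy_features(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Two-stage extraction: per-frame LLD, then utterance-level functionals.

    Assumes the signal is at least one frame long (otherwise the
    statistics functions raise on an empty contour).
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    contour = [rms_energy(f) for f in frame_signal(samples, frame_len, hop)]
    return functionals(contour)


if __name__ == "__main__":
    sr = 16000
    # One second that is loud for the first half and quiet for the second
    signal = [0.5] * (sr // 2) + [0.1] * (sr // 2)
    print(extract_energy_features(signal, sr))
```

Keeping the frame-level step separate from the summary step makes it easy to add more descriptors (pitch, spectral measures) that share the same functionals.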

By integrating the Voice Analysis Module into the iMotions ecosystem, users can now gauge the emotional states of speakers in audio recordings. This adds a new layer of emotional understanding by analyzing the nuances of how words are spoken, beyond their mere textual content.

The Power of Integration: A Comprehensive Approach

iMotions offers a comprehensive method for analyzing emotions in spoken language by combining Speech-to-Text analysis with the Voice Analysis Module. Just as facial expressions can convey more than words, how we talk about certain topics can vary based on context, the person we’re conversing with, or the subject matter. These variations can lead to unexpected interpretations of data.

For instance, individuals may use humor to discuss serious subjects like death or illness, which makes accurate categorization challenging for software. In such cases, it’s beneficial to have tools at your disposal to validate data. As a researcher, you can use both the Voice Analysis Module for real-time emotion detection and the emotional valence detection in our Speech-to-Text feature. This allows you to both hear and see how participants respond to questions or approach specific topics.

Researchers and professionals now have access to a versatile toolkit for dissecting emotional content in spoken language. Speech-to-Text analysis uncovers emotional insights within the textual components of speech, while the Voice Analysis Module examines the emotional tone conveyed through the speaker’s voice, such as pitch and stress. Together, they offer a deeper and more comprehensive understanding of emotional communication.
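The two signals can validate each other. The sketch below flags transcript segments where the sentiment of the words and the valence of the voice point in opposite directions, as in the humor example above. The `Segment` structure, the label names, the [-1, 1] valence scale, and the 0.2 threshold are all hypothetical. They do not describe iMotions’ actual export format.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcript segment (hypothetical structure, not an iMotions export format)."""
    text: str
    text_sentiment: str   # "POSITIVE", "NEUTRAL" or "NEGATIVE" from the words
    voice_valence: float  # assumed score in [-1, 1] from the voice; < 0 = negative


def voice_label(valence, threshold=0.2):
    """Map a continuous vocal valence score onto the same three labels as the text."""
    if valence > threshold:
        return "POSITIVE"
    if valence < -threshold:
        return "NEGATIVE"
    return "NEUTRAL"


def flag_mismatches(segments, threshold=0.2):
    """Return (segment, vocal_label) pairs where words and voice point in opposite directions."""
    flagged = []
    for seg in segments:
        vocal = voice_label(seg.voice_valence, threshold)
        if {seg.text_sentiment, vocal} == {"POSITIVE", "NEGATIVE"}:
            flagged.append((seg, vocal))
    return flagged


if __name__ == "__main__":
    interview = [
        Segment("We joked that the hospital food would finish me off", "NEGATIVE", 0.6),
        Segment("The new app was easy to set up", "POSITIVE", 0.4),
        Segment("I was worried about the test results", "NEGATIVE", -0.5),
    ]
    for seg, vocal in flag_mismatches(interview):
        print(f"Review: {seg.text!r} (text {seg.text_sentiment}, voice {vocal})")
```

Flagged segments are candidates for a researcher to listen to again. They are not automatic verdicts on which channel is "right".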

Implications Across Industries

The implications of these integrated features are vast and span various industries:

Biomarkers: Biomarkers, subtle indicators within the body, are increasingly being explored in conjunction with voice and speech analysis to revolutionize healthcare. This innovative approach holds promise for earlier disease detection and more personalized treatment strategies.

Human-computer interaction: As healthcare steadily embraces tele-diagnostics, encompassing both physical and mental health assessments, voice analysis and speech analysis become pivotal in shaping human-computer interaction within this domain. They offer a versatile and non-invasive means of collecting health data remotely, which makes them particularly useful in telehealth and teletherapy.

UX: In UX research, especially in think-aloud protocols, where users verbalize their thoughts while interacting with a new product, voice and speech analysis offer valuable insights for UX designers. Biosensors like eye tracking and facial expression analysis go beyond verbal feedback to reveal what users focus on and whether their emotions align with what they say. Voice analysis represents the next frontier, delving into the biological aspect of think-aloud protocols.

Mental Health: Therapists can gain deeper insights into their patients’ emotional states by examining both the words spoken and the way they are spoken.

Education: Educators can better engage with students by understanding the emotional dynamics in online lectures through both textual and vocal analysis.

Communications: Academics and researchers can explore human communication with greater depth, delving into the intricate interplay of text and voice in conveying emotions.

Marketing and Advertising: Marketers can fine-tune their campaigns by not only analyzing emotionally charged words in ads but also assessing how the tone of a voiceover influences emotional impact. Marketers and advertisers can also use voice analysis and Speech-to-Text to validate the focus groups or qualitative interviews they conduct as part of their research.

Diagnostics in Healthcare: Voice analysis can serve as a powerful diagnostic tool in healthcare, using artificial intelligence and machine learning to detect subtle vocal changes linked to various medical conditions. By analyzing pitch, tone, rhythm, and speech patterns, voice biomarkers can help identify neurological disorders like Parkinson’s disease, mental health conditions such as depression and anxiety, and even respiratory illnesses.

Early detection through voice analysis enables timely intervention, improving patient outcomes. This non-invasive, cost-effective technology holds great promise for remote monitoring, telemedicine, and personalized treatment plans, making healthcare more accessible and efficient while reducing reliance on traditional diagnostic methods.
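Pitch-related voice biomarkers are often built from simple perturbation measures. A classic example is local jitter: the average absolute difference between consecutive pitch periods, divided by the average period. The sketch below computes it from a list of period durations, assuming a pitch tracker has already extracted those periods. It is a generic illustration, not the method used by the Voice Analysis Module, and the sample period values are made up.

```python
def local_jitter(periods):
    """Local jitter: mean absolute difference between consecutive pitch
    periods divided by the mean period (multiply by 100 for a percentage)."""
    if len(periods) < 2:
        raise ValueError("need at least two pitch periods")
    diffs = [abs(later - earlier) for earlier, later in zip(periods, periods[1:])]
    mean_abs_diff = sum(diffs) / len(diffs)
    mean_period = sum(periods) / len(periods)
    return mean_abs_diff / mean_period


if __name__ == "__main__":
    # Hypothetical period durations (seconds) from a pitch tracker, around 200 Hz
    steady = [0.005] * 10
    wobbly = [0.005, 0.0051, 0.005, 0.0051, 0.005]
    print(f"steady: {100 * local_jitter(steady):.2f} %")
    print(f"wobbly: {100 * local_jitter(wobbly):.2f} %")
```

Because the measure is relative to the mean period, it can be compared across speakers with different average pitch. Interpreting any particular value clinically requires validated reference data.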

Conclusion

Our journey from Speech-to-Text analysis to the Voice Analysis Module represents a pivotal moment for voice emotion analysis in iMotions. Together, these features offer a comprehensive approach to understanding emotional expression in speech, and they empower users across diverse sectors to unlock new insights, make informed decisions, and foster more effective communication. This journey underscores iMotions’ commitment to pushing the boundaries of emotion analysis technology and to providing powerful tools for our users.
