At iMotions, we are dedicated to maintaining a fairly high-velocity update schedule for our products. Customers and users continuously bring valuable feedback about the updates, fixes, and improvements they are missing or could benefit from, and we work to release these quickly. As part of our major release schedule, we are proud to announce a release with some exciting new features we think you’ll love: iMotions 9.2.

This release brings two major updates to the software suite: the implementation of Affectiva’s new AFFDEX 5.1, and a major addition to the annotation functionalities. A number of smaller updates are included as well, so please check out our full release notes here, or, if you are an existing iMotions client, go to the Help Center for the full download and a detailed explanation of the new features.

AFFDEX 5.1 integrated

The new and improved AFFDEX SDK comes with a number of long-awaited improvements and new features.

The AFFDEX SDK 2.0 relies more heavily on deep learning and has been trained using a large naturalistic data set. Eleven million recordings of people of all ages, genders, and ethnicities watching commercials were collected; a large portion of the recordings was used to train the algorithm, and the rest were used as a control group.

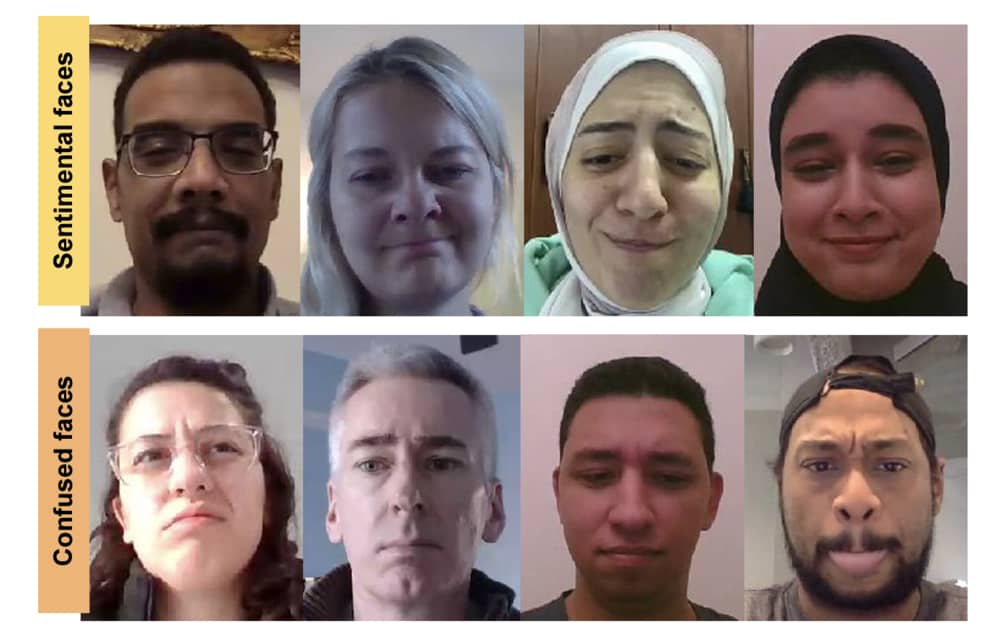

People who are used to working with Affectiva’s facial expression analysis SDK, and facial expressions in general, will know the 7 core emotions, plus neutral, that the algorithm can identify (Anger, Disgust, Fear, Joy, Sadness, Surprise, and Contempt). These core emotions are found by analyzing, in real time, the facial action units (AUs) defined by the Facial Action Coding System (FACS). Using FACS allows for a more nuanced view of facial expressions as well as greater transparency into how the core emotions are computed. In AFFDEX 5.1 two new expressions have been added: Sentimentality and Confusion.

Sentimentality and confusion are two common, but not fundamental (not classified in the facial coding system), expressions that respondents often exhibit during data collection sessions, especially in use cases involving emotional video content and user experience tasks, respectively. Because they are not fundamental expressions, Affectiva has had to create a data set of identifying markers that characterize confusion and sentimentality in the software. This implementation now gives users the ability to go much more in depth with emotionally complex material during data collection and analysis.

Image: Sample group of people displaying the facial markers for sentimentality and confusion respectively.

If you are an iMotions customer and would like to learn even more about how Affectiva trained the new SDK to work with Sentimentality and Confusion, check out our client-only webinar available on the iMotions Research Community.

Improved face trackers

On the more specific technical side, AFFDEX 5.1 has had its face trackers upgraded. The rate of correctly identifying faces in the frame is up from 91.3% in the previous version to 94.6% in the current one. The improved face tracker means that partially obstructed faces, poorer lighting conditions, and alternative head poses have a higher chance of being recognized successfully than in earlier versions.

The improved face trackers have also been trained with a more ethnically diverse data set, meaning that the face tracker has a higher successful tracking rate across a much wider demographic spread, making the software applicable in many more use cases.

To read about the full AFFDEX feature update and how Affectiva created it, you can download the entire whitepaper here.

Automated annotations

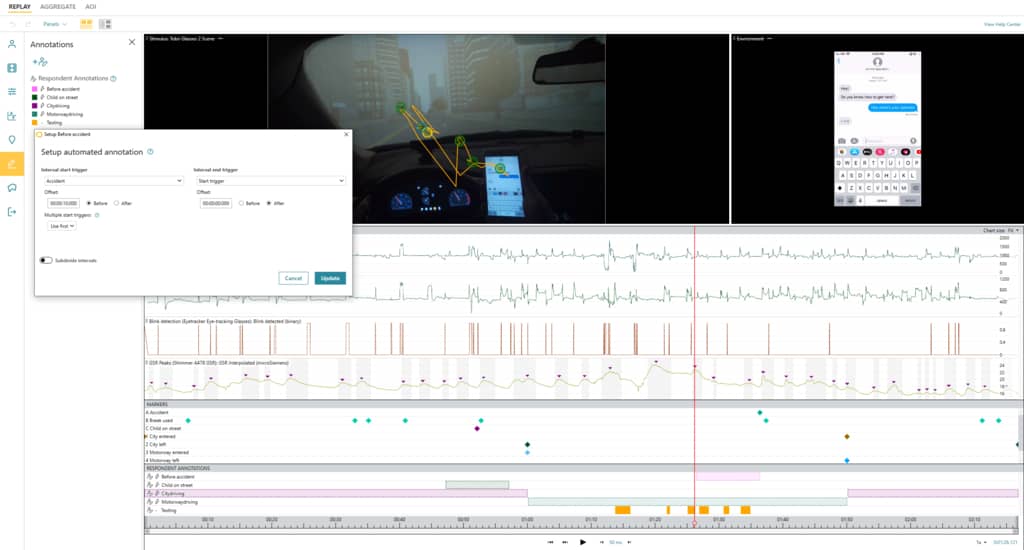

In 9.2 we have made a big improvement to our annotation options: users can now automate their annotations. Users who have tried running studies that last more than a couple of minutes, let alone hour-long studies with multiple participants, know how much work doing manual annotations can be.

Up until this latest version of iMotions, setting annotations meant that you had to apply them to each respondent manually, which, depending on study size, can be a massive undertaking in both time and budget.

We are happy to announce that from 9.2 you can automate a large part of the annotations for your analysis of respondent data. The software now allows you to define a rule stating that an annotation lasts from one event to another; the annotation is then automatically applied to all respondents across all collected stimuli. All you need for this is an event, which you can collect as a live marker or API trigger, log from a website, the mouse, or the keyboard, or even set as a point marker during the analysis.
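To make the idea of an event-to-event rule a little more concrete, here is a minimal Python sketch. It is not the iMotions implementation or API; the `Event` type, the `annotate_between` function, and the event names are all hypothetical, and the sketch simply assumes that each respondent has a time-stamped list of events.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    time_s: float  # seconds from the start of the recording (assumed unit)


def annotate_between(events, start_name, end_name):
    """Return (start, end) intervals from each start event to the next end event."""
    intervals = []
    open_start = None
    for event in sorted(events, key=lambda e: e.time_s):
        if event.name == start_name and open_start is None:
            # Open a new annotation at the first unmatched start event.
            open_start = event.time_s
        elif event.name == end_name and open_start is not None:
            # Close the open annotation at the next end event.
            intervals.append((open_start, event.time_s))
            open_start = None
    return intervals


# Hypothetical event logs for two respondents.
respondents = {
    "R1": [Event("task_start", 2.0), Event("task_end", 9.5)],
    "R2": [Event("task_end", 1.0), Event("task_start", 4.0), Event("task_end", 12.0)],
}

# One rule, applied identically to every respondent.
annotations = {
    rid: annotate_between(evts, "task_start", "task_end")
    for rid, evts in respondents.items()
}
```

In this sketch an end event with no preceding start event is ignored, which is one possible design choice; a real tool may handle unmatched events differently.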

Image: The automated annotation workflow is quick and intuitive, making it seamless and efficient to create annotation rules.

This is a fantastic new feature for two reasons: time and accuracy. Studies with a large respondent pool will be significantly streamlined and more time-effective. The risk of human error in data analysis is also minimized, so your analyzed data will be uniform and follow the same rules.

How do automated annotations work?

In studies that require a large number of respondents to perform a task with a set list of instructions, each of these tasks could be turned into a live event, which could then be turned into an annotated event that can be automated. Let’s imagine that we have set 30 people the task of driving a car in a simulator, and at one point they all have to turn their steering wheels sharply to the right and crash into a specific tree. In this instance, both the sharp turn of the steering wheel and the crash into the tree are logged in the simulation software as events. These events are then shared with the iMotions software as API triggers, which iMotions can use to create annotations.

An annotation rule could, for example, state that every time the software logs a sharp right turn, you want to register 5 seconds before and after the event. Now, instead of setting this annotation manually for every single participant, iMotions can automate it so that every time a participant makes a sharp right turn, the software registers 5 seconds before and after the event. This way all participants are annotated identically, ensuring consistent and valid data.
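The "5 seconds before and after" rule from the driving example can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how iMotions implements it: the function `annotate_around`, the participant IDs, and the event timestamps are hypothetical, and times are assumed to be in seconds from the start of each recording.

```python
def annotate_around(event_times_s, before_s=5.0, after_s=5.0, recording_end_s=None):
    """Return one (start, end) window around each event time."""
    windows = []
    for t in sorted(event_times_s):
        # Do not let a window start before the recording begins.
        start = max(0.0, t - before_s)
        end = t + after_s
        # Optionally clip the window to the end of the recording.
        if recording_end_s is not None:
            end = min(end, recording_end_s)
        windows.append((start, end))
    return windows


# Hypothetical timestamps (in seconds) of logged sharp right turns.
sharp_right_turns = {
    "P01": [42.0],
    "P02": [3.0, 57.5],
}

# The same rule is applied to every participant.
windows = {pid: annotate_around(times) for pid, times in sharp_right_turns.items()}
```

Because the rule is a single function applied to every participant, each window is computed the same way, which is the consistency benefit described above. Clipping at the recording boundaries is an assumption made for this sketch.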