Automated AOIs simplify eye-tracking analysis by tracking objects across video frames. This guide covers five best practices for using AutoAOIs: choosing an object, considering movement, placing points, choosing input frames, and checking your results. Use these insights to get precise, efficient AOI data from your eye-tracking studies.

Introduction to Automated Areas of Interest (Auto AOIs)

Areas of Interest (AOIs) are the most important analysis tool in eye tracking, allowing researchers to turn eye movements into quantitative data that answer research questions and test hypotheses about visual attention toward specific objects.

For still images, creating AOIs is as simple as outlining the object you are interested in. With video, this becomes more tedious: researchers must manually place AOIs on many frames to get meaningful data. One way to reduce the time this analysis step takes is to automate the drawing of AOIs, an approach often referred to as automated AOIs.
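To make the still-image case concrete: an AOI drawn by hand is essentially a polygon, and a gaze sample counts as a “hit” when it falls inside that polygon. The minimal Python sketch below uses the standard ray-casting test; the function name, AOI coordinates, and gaze points are hypothetical and do not reflect any iMotions API.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def point_in_polygon(pt: Point, polygon: List[Point]) -> bool:
    """Ray casting: cast a horizontal ray from `pt` to the right and count how
    many polygon edges it crosses; an odd count means `pt` is inside."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y-coordinate can be crossed.
        if (y1 > y) != (y2 > y):
            # x-coordinate where this edge meets the horizontal line through pt
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

# Hypothetical AOI outlined around an object in a still image (pixel coordinates).
aoi = [(100, 100), (300, 100), (300, 250), (100, 250)]
gaze = [(150, 120), (350, 120), (299, 249), (50, 50)]
hits = [point_in_polygon(g, aoi) for g in gaze]
print(hits)                    # [True, False, True, False]
print(sum(hits) / len(gaze))   # 0.5: share of gaze samples on the AOI
```

With video, the same test has to be repeated against an AOI that may be in a different place in every frame, which is exactly the work automated AOIs take over.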

How do Automated AOIs work?

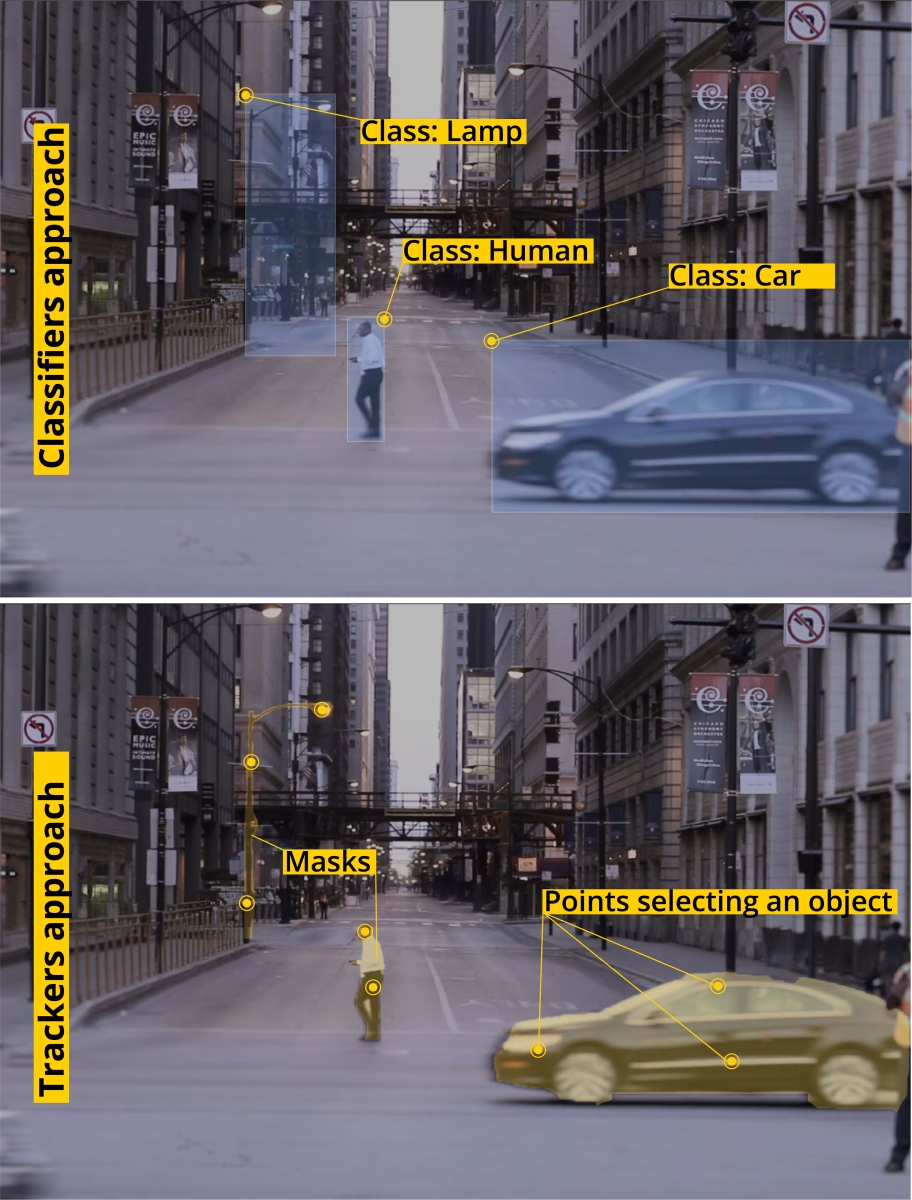

There are two main approaches to how automated AOI algorithms follow an object and create AOIs to match it, each with its own strengths and limitations.

Automated AOIs: Classifier Approach

In the classifier approach to automated AOIs, an algorithm is trained to detect various classes of objects, such as “cars” or “people”. The algorithm analyzes a video frame and identifies any objects that belong to the classes it was trained on. It then moves to the next frame and finds the objects again, and so on, drawing AOIs around each object in every frame.

The classifier approach can work well if your object is included in the classes the algorithm “knows”. It can also work well to track multiple objects simultaneously. How effective this method is varies with the algorithm. In some cases, researchers have trained the algorithms on classes they are particularly interested in so that they can be very specific about detecting objects.

This means one algorithm might recognize the specific type of car in a video frame, while another might only recognize that it is a car. Algorithms vary in the number of classes they know, ranging from twenty to tens of thousands. Either way, the classifier approach is limited to the objects included in its classes.
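As a simplified sketch of the classifier approach, assume a detector has already returned a list of (class label, bounding box) pairs for each frame. The hypothetical helper below keeps only the boxes of the requested class, which shows both how AOIs are produced frame by frame and why an object outside the trained classes never receives an AOI. The detector output is made up for illustration and does not come from any particular model.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
Detection = Tuple[str, Box]      # (class label, bounding box) from a detector

def classifier_aois(frames: List[List[Detection]], target_class: str) -> Dict[int, List[Box]]:
    """Map each frame index to the boxes detected for `target_class`.

    Frames are handled one at a time: the detector's output is simply filtered
    by class, so an object the detector was never trained on never appears.
    """
    return {
        i: [box for label, box in detections if label == target_class]
        for i, detections in enumerate(frames)
    }

# Hypothetical detector output for three frames.
frames = [
    [("car", (10, 10, 60, 40)), ("person", (80, 5, 100, 60))],
    [("car", (15, 12, 65, 42)), ("car", (120, 10, 170, 40))],  # a second car enters
    [("person", (85, 5, 105, 60))],                              # no car detected here
]
print(classifier_aois(frames, "car"))
# {0: [(10, 10, 60, 40)], 1: [(15, 12, 65, 42), (120, 10, 170, 40)], 2: []}
print(classifier_aois(frames, "octopus"))
# {0: [], 1: [], 2: []}
```

Note that this output only says which boxes are cars, not which car is which; linking a box to the same object across frames is a separate step.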

Automated AOIs: Tracker Approach

In iMotions, our AutoAOI tool uses an innovative tracker approach to automated AOIs. You choose a video frame that contains your object (called the input frame) and define your object with a few clicks, each of which adds a point to the frame.

Our algorithm uses your points to parse out your object and create a mask over it (in our software, this appears as a yellow highlight over the object). The algorithm then tracks your defined object through the frames, automatically creating AOIs that match the object’s mask.

Because the tracker approach is not limited to classes, it can parse out and track nearly any object you can define with your points. This works because the algorithm uses information from the pixels in the input frame to determine which pixels are part of your object and which are not.

Information such as contrast with the background, visible boundaries, and the object’s location in previous and subsequent frames helps the algorithm determine where the object is. Our algorithm has been trained on natural movements and uses information from previous frames to keep estimating the object’s boundaries from frame to frame, even though it does not know what the object is.
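The idea of following an object with information from previous frames can be illustrated with a deliberately simple stand-in. The sketch below is not the iMotions algorithm, which works on pixel-level masks and was trained on natural movement; it assumes hypothetical bounding boxes for candidate regions in each frame and picks the candidate that overlaps most, by intersection-over-union (IoU), with the object’s last known position.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes, from 0.0 to 1.0."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def follow(last_seen: Box, candidates: List[Box], min_iou: float = 0.3) -> Optional[Box]:
    """Return the candidate that best overlaps the object's last known box,
    or None if nothing overlaps enough (e.g. the object is hidden or off screen)."""
    best = max(candidates, key=lambda c: iou(last_seen, c), default=None)
    if best is None or iou(last_seen, best) < min_iou:
        return None
    return best

# Box marked in the (hypothetical) input frame, then candidate regions per frame.
last_seen: Box = (0.0, 0.0, 10.0, 10.0)
frame_candidates: List[List[Box]] = [
    [(1.0, 0.0, 11.0, 10.0), (40.0, 40.0, 50.0, 50.0)],  # object plus a distractor
    [(2.0, 1.0, 12.0, 11.0)],
    [],                                                  # object hidden
    [(3.0, 1.0, 13.0, 11.0)],                            # object reappears
]
track: List[Optional[Box]] = [last_seen]
for candidates in frame_candidates:
    found = follow(last_seen, candidates)
    track.append(found)
    if found is not None:
        last_seen = found  # keep searching from the last known position after a gap
print(track)
```

In this toy run the object is lost in the fourth entry and picked up again in the fifth, because the search continues from its last known position. It also hints at why near-identical objects that cross paths are hard: if two candidates overlap the last position about equally, the choice becomes a guess.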

Like any method, the tracker approach has limitations, along with workarounds that help users get the most out of it. In this blog, we will explore five best-practice tips for using our AutoAOI tool.

Automated AOI Use Case Examples with Videos

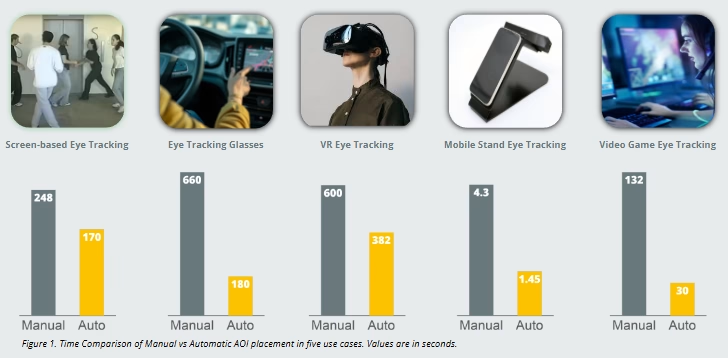

In the following examples, manual and automated AOI placement were compared on the time it took to place the AOIs for further quantitative analysis.

Below are five scenarios, each featuring a video clip that highlights the differences between manual and automated AOI placement.

1. Screen-Based Eye Tracking

2. Eye Tracking Glasses

3. VR Eye Tracking

4. Mobile Stand Eye Tracking

5. Video Game Eye Tracking

5 Important Considerations when using AutoAOIs

1. Choosing an Object

The tracker approach is not limited by classes, so it can work for nearly any moving object. We say nearly any object because people think of objects differently than algorithms understand them. Most (if not all) of the objects included in the classes of the classifier approach will work for the tracker approach because they both use information from pixels in the video frame.

Choose objects with obvious boundaries.

To find out whether your object will work well for AutoAOIs, consider whether you could easily trace it in your input frame. If another person also outlined your object, would your outlines be nearly identical, or is some boundary of the object a bit vague?

Example: Imagine a person walking in your stimulus video, and you want to track only their hand. If several people traced the hand, their outlines of the wrist would agree far less than their outlines of the fingers. However, if the person wore a glove that contrasts with the arm and the background, or a long sleeve, tracking would work much better because there is a clear boundary between the hand and the rest of the arm.

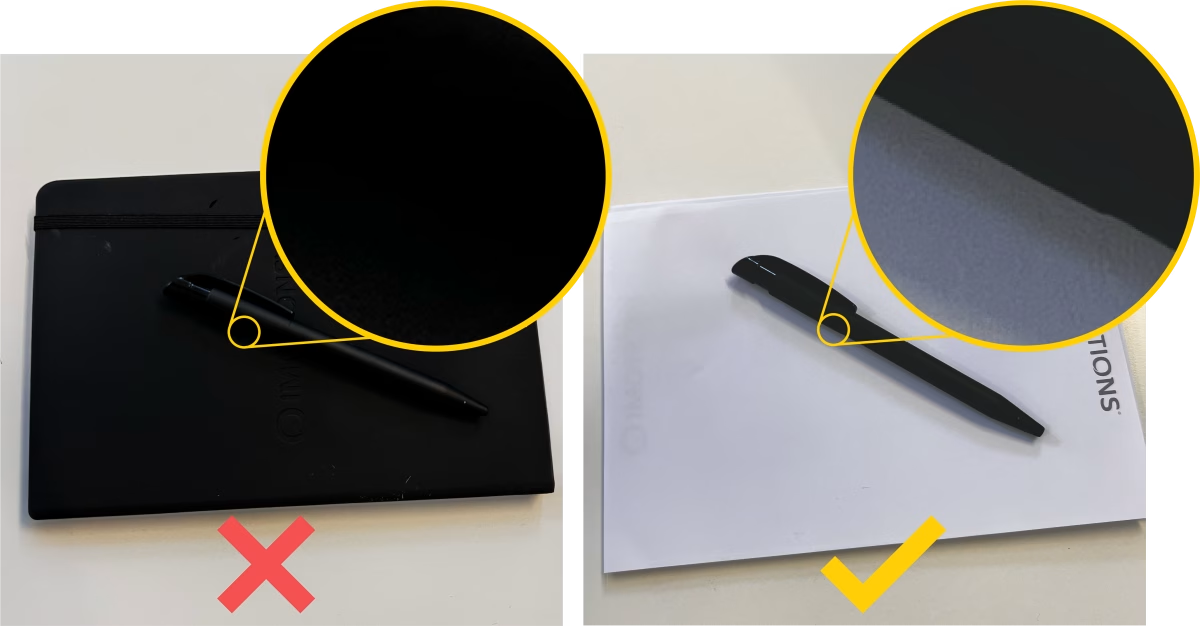

AutoAOIs work better for objects with clear boundaries separating them from the rest of the video frame. In other words, is the object easily distinguishable from the background and other objects in the frame? Keep in mind that the algorithm usually cannot see better than you can: if you have trouble making out an object’s boundaries in the video (because it is blurry or the image is dark), the algorithm will also struggle.

Choose objects you could set your hand on.

AutoAOIs and the tracker approach work very well for solid objects (as does the classifier approach). Neither approach works well for things with conceptual boundaries.

Example:

Let’s say you are conducting a study in which your participant drives a car while wearing eye tracking glasses, and you want to look at behavior related to checking blind spots.

You can use AutoAOIs to track an object in a blind spot, and even the various mirrors around the car. However, AutoAOIs cannot track the concept of a “blind spot”, because it is an empty space without clear visual boundaries that you can touch. As with the rule above, if different people tried to outline a blind spot in a video, their outlines would not be very similar.

Choose one object at a time.

AutoAOIs can be set up for different objects and the analyses run simultaneously, but each run is for only one object. AutoAOIs are not meant for groups of objects.

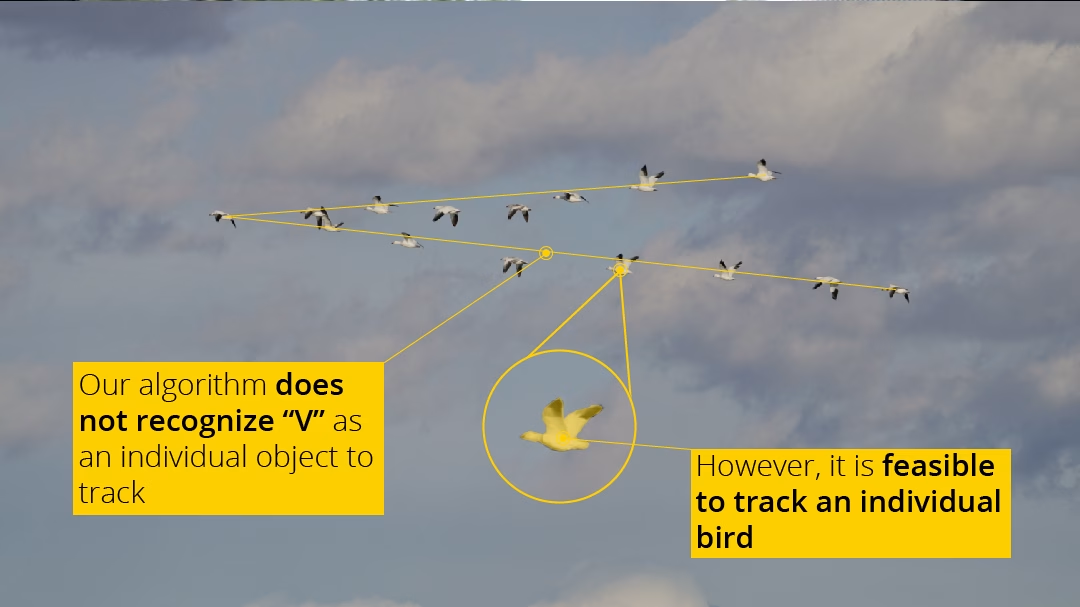

For example, AutoAOIs will struggle to track a flock of birds, a school of fish, or a formation of planes. Even though geese can make an obvious ‘V’ shape as they migrate, the algorithm does not “connect-the-dots” between birds to make the ‘V’.

Good to Know:

- You could track a single individual, or choose a few individuals and track them separately. Research suggests that our eyes track a group of objects by focusing on the center of the group, so if certain individuals are at the center of the formation, you could track some of them and aggregate the data later.

- Most classifier approaches will also struggle with following a group of individuals unless the algorithm has been trained to do this specifically.
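If you do track a few individuals separately, their per-frame AOIs can be combined afterwards. The sketch below assumes hypothetical axis-aligned boxes per individual (real AutoAOIs follow the object’s mask) and counts a gaze sample as “on the group” when it falls inside any individual’s AOI. It also computes the mean of the individuals’ box centers as a rough group center.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

def in_box(x: float, y: float, b: Box) -> bool:
    return b[0] <= x <= b[2] and b[1] <= y <= b[3]

def group_hits(gaze: List[Tuple[float, float]], aois: List[Dict[str, Box]]) -> List[bool]:
    """True for each frame whose gaze point lies inside ANY individual's AOI.

    aois[i] maps an individual's name to its AOI in frame i; an individual that
    is missing from the dict was not visible or not tracked in that frame.
    """
    return [
        any(in_box(x, y, box) for box in frame_aois.values())
        for (x, y), frame_aois in zip(gaze, aois)
    ]

def group_center(frame_aois: Dict[str, Box]) -> Tuple[float, float]:
    """Mean of the individuals' box centers, a rough proxy for the group center."""
    xs = [(b[0] + b[2]) / 2 for b in frame_aois.values()]
    ys = [(b[1] + b[3]) / 2 for b in frame_aois.values()]
    return sum(xs) / len(xs), sum(ys) / len(ys)

# Two geese tracked as separate (hypothetical) AutoAOIs over three frames.
aois = [
    {"goose_a": (0, 0, 10, 10), "goose_b": (20, 0, 30, 10)},
    {"goose_a": (2, 0, 12, 10), "goose_b": (22, 0, 32, 10)},
    {"goose_b": (24, 0, 34, 10)},  # goose_a lost in this frame
]
gaze = [(5, 5), (17, 5), (30, 5)]
print(group_hits(gaze, aois))   # [True, False, True]
print(group_center(aois[1]))    # (17.0, 5.0)
```

The second gaze sample sits exactly at the group center yet counts as a miss, because the empty space between the birds belongs to no AOI. This is the same “connect-the-dots” limitation described above, and one reason to track the individuals nearest the center.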

Choose a unique object.

It is possible to track an object that is nearly identical to other objects in the same scene, as long as they do not cross paths. Like the previous example with a flock of birds, tracking a few birds in the flock is fine, unless they start crossing over each other. Our AutoAOI tool has been tested on tracking cars that are weaving through traffic, going behind other cars and reappearing in view. This works well because the car is typically different enough from other cars on the road. Our AutoAOI tool is not limited by the classification of “car”, so your car can be distinguished from other cars based on its unique features.

NPCs (non-player characters) in video games often look extremely similar. AutoAOIs perform well when tracking an individual NPC, but if one NPC moves in front of another, the algorithm has to guess which of them is the one it was tracking in the previous frames.

Good to Know:

- If the characters are not quite identical, make sure the mask generated by the algorithm covers the defining characteristics. NPCs sometimes have an icon, health bar, or power meter floating above their heads. Try including this in the mask to see if your AutoAOIs perform better.

- Additionally, you can use multiple input frames to tell the algorithm which one is your object: after the objects cross over, create another input frame and mark your object.

- The classifier approach faces this challenge too, sometimes more so, depending on whether its classes are specific enough to differentiate between the similar objects and whether it has been trained to handle these types of movements.

2. Consider Movement and Changes

Automating the drawing of AOIs streamlines eye tracking data analysis when objects move. It is therefore important to understand how the type and speed of movement influence tools like AutoAOIs.

Are the movements “natural”?

The algorithm that powers the AutoAOI module is trained on natural movements and considers information from the previous and subsequent frames when making estimates. AutoAOIs work well with objects that move across the stimulus, growing and shrinking as they move toward or away from the viewer. The algorithm can even keep track of objects as they rotate or flip.

It tracks objects that disappear (for example, by going off screen or behind another object) and reappear, as long as the object looks similar to how it did when it disappeared. The algorithm will struggle with unnatural movements, such as when a video has been digitally “smoothed” in a way that makes it difficult to create a mask of the object frame by frame.

Consider how fast the object changes

AutoAOIs can accommodate objects that change shape or color quite dramatically, as long as the transition occurs over a few frames. If a dramatic change happens from one frame to the next, faster than the frame rate of your video can capture, the algorithm struggles to recognize the object from the previous frame. For the same reason, instantaneous changes in lighting or the sudden appearance of shadows can be problematic.
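One way to locate the moments where an object changes faster than the video can show is to compare consecutive frames inside the object’s region. The NumPy sketch below flags frames in which most masked pixels changed sharply; those frames are candidates for an extra input frame or a new instance. It assumes grayscale frames and a fixed object region (for example, a phone held fairly still), and both thresholds are arbitrary starting values, not settings from the AutoAOI tool.

```python
import numpy as np

def abrupt_change_frames(frames: np.ndarray, mask: np.ndarray,
                         pixel_thresh: int = 40, frac_thresh: float = 0.5) -> list:
    """Indices of frames whose masked pixels changed abruptly vs. the previous frame.

    frames: (n_frames, height, width) uint8 grayscale video
    mask:   (height, width) boolean region covering the object (assumed fixed)
    A pixel counts as changed if its intensity moved by more than `pixel_thresh`;
    a frame is flagged if more than `frac_thresh` of the masked pixels changed.
    """
    # Use a signed type so subtracting uint8 values cannot wrap around.
    diffs = np.abs(np.diff(frames.astype(np.int16), axis=0))  # (n_frames - 1, h, w)
    changed = diffs[:, mask] > pixel_thresh                   # (n_frames - 1, n_masked)
    fractions = changed.mean(axis=1)
    # diffs[k] compares frame k + 1 with frame k, so report k + 1.
    return [int(k) + 1 for k in np.flatnonzero(fractions > frac_thresh)]

# Synthetic 5-frame clip: the object region brightens gradually, then jumps.
frames = np.zeros((5, 4, 4), dtype=np.uint8)
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :2] = True
for i, level in enumerate([0, 30, 60, 200, 200]):
    frames[i][mask] = level
print(abrupt_change_frames(frames, mask))  # [3]
```

The gradual steps from 0 to 30 to 60 stay below the per-pixel threshold, while the jump to 200 in frame 3 is flagged. A sudden lighting change inside the region would be flagged the same way.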

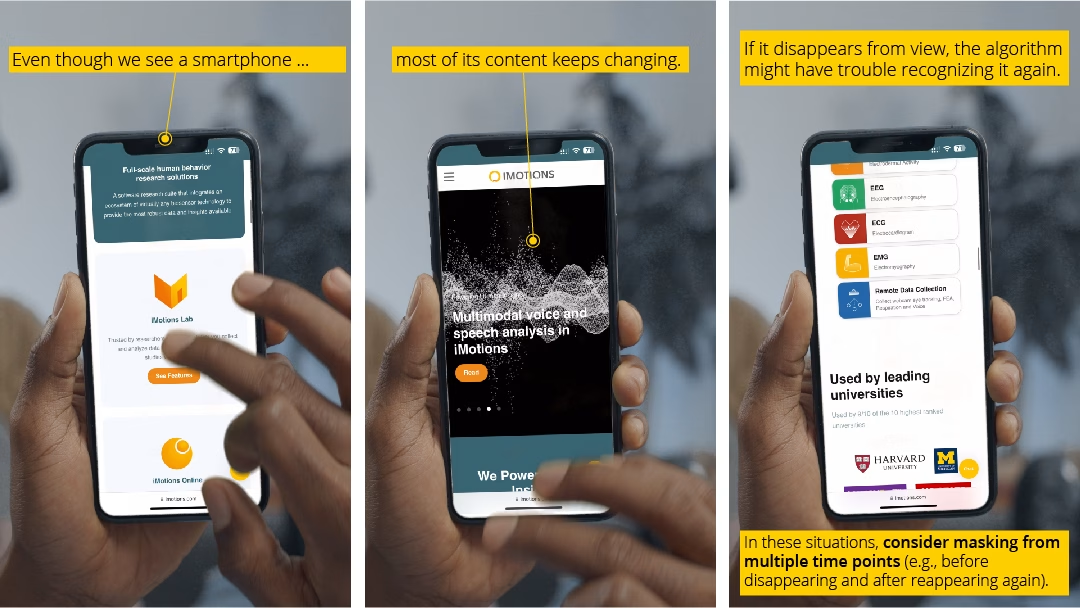

Good to Know: Tracking Screens

- Example: When tracking screened objects (like phones, computer screens, and tablets), the image on the screen can change instantly, between one video frame and the next, altering most of the pixels that make up the object. Even though the overall shape of the object does not change, most of its pixels do.

For AutoAOIs, one solution is to use multiple instances. Every time the object appears after being out of sight or changes drastically, create an input frame and define a time range in which the object remains in this state. When it changes, make a new input frame with a defined time window covering the new state (repeat as necessary). The object is then processed as one object across multiple runs, which better accommodates these cases.
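The multiple-instance workflow amounts to splitting the recording into time windows, each with its own input frame. The sketch below uses a hypothetical `Instance` record (not an iMotions data structure) to check such a plan for accidental overlaps and for uncovered stretches. It assumes each window should contain its own input frame, since that frame is meant to show the object in the state the window covers.

```python
from dataclasses import dataclass
from typing import List, Tuple

Span = Tuple[int, int]  # inclusive frame range

@dataclass
class Instance:
    """One AutoAOI run on the same object (hypothetical representation)."""
    input_frame: int  # frame where the object was marked with points
    start: int        # first frame covered by this run (inclusive)
    end: int          # last frame covered by this run (inclusive)

def check_instances(instances: List[Instance], n_frames: int) -> Tuple[List[Span], List[Span]]:
    """Return (gaps, overlaps) between the instances' time windows."""
    gaps: List[Span] = []
    overlaps: List[Span] = []
    cursor = 0  # first frame not yet covered by any window
    for inst in sorted(instances, key=lambda i: i.start):
        # Assumption: each window should contain the frame used to define it.
        if not inst.start <= inst.input_frame <= inst.end:
            raise ValueError(f"input frame {inst.input_frame} is outside {inst.start}-{inst.end}")
        if inst.start > cursor:
            gaps.append((cursor, inst.start - 1))
        elif inst.start < cursor:
            overlaps.append((inst.start, min(cursor - 1, inst.end)))
        cursor = max(cursor, inst.end + 1)
    if cursor < n_frames:
        gaps.append((cursor, n_frames - 1))
    return gaps, overlaps

# A phone screen that changes state several times in a 300-frame recording.
plan = [
    Instance(input_frame=10, start=0, end=119),
    Instance(input_frame=125, start=120, end=199),
    Instance(input_frame=195, start=190, end=210),
    Instance(input_frame=240, start=230, end=299),
]
print(check_instances(plan, n_frames=300))
# ([(211, 229)], [(190, 199)])
```

Gaps are not necessarily errors, since the object may simply be out of view; the check just makes them deliberate rather than accidental.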

3. Placing Points to Define your Object

One major advantage of having a tracker approach is that you can define your specific object by simply clicking on it. When points are placed properly, the mask should cover the entire object and only the object.

Start with a point in the center of the object and then see what is missing from the mask. Place your points on what you consider important in defining the object. Consider how the object will change over time. If the object will change drastically, be sure to include points on parts that do not change.

Example: If you want to track a person walking, start with a point on the torso and a point on the head. Next, add the limbs as they will be moving the most. If the person is wearing a distinct hat from other people in the scene, include the hat.

Less is More.

Defining the object should take only a few clicks; many objects can be defined with two or three points. If you seem to need many points to define your object, consider whether there is another frame in which your object is more visible.

Tip: In iMotions’ AutoAOI tool, if the initial mask extends beyond the boundary of your object, you can control-click to mark what is not part of the object.

4. Choose a Good Input Frame

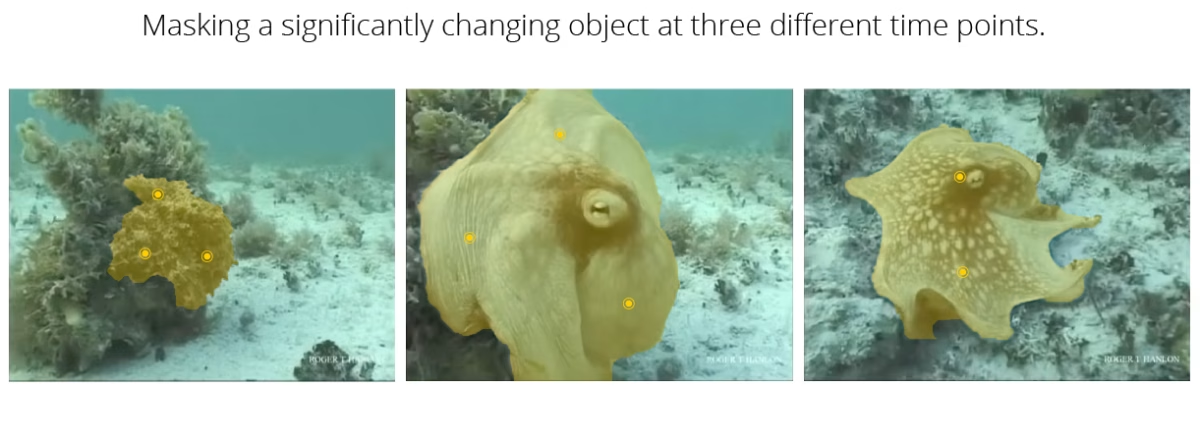

We’ve tracked a camouflaging octopus as it escapes a curious diver, drastically changing color, size, and texture twice in one video. AutoAOI had no problem, but this would have been impossible without careful selection of input frames.

An ideal input frame has good resolution and shows the object clearly. Although you typically need only one frame to define an object, try using multiple input frames if the object changes considerably, choosing a good frame for each “form” of the object. For example, when tracking the octopus, I used three frames, one for each of its color/texture states.
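When choosing among candidate input frames, a sharpness score can help narrow the options. The sketch below uses one common heuristic, the variance of a discrete Laplacian over the object’s region (higher usually means sharper detail). It is not how the AutoAOI tool evaluates frames, and the frames and boxes are hypothetical.

```python
import numpy as np

def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of a 4-neighbour Laplacian; higher values usually mean sharper detail."""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def rank_input_frames(frames, boxes):
    """Return candidate frame indices, sharpest object region first.

    frames: list of 2-D grayscale arrays
    boxes:  matching list of (x_min, y_min, x_max, y_max) object regions
    """
    scores = [
        (laplacian_variance(frame[y0:y1, x0:x1]), i)
        for i, (frame, (x0, y0, x1, y1)) in enumerate(zip(frames, boxes))
    ]
    return [i for _, i in sorted(scores, reverse=True)]

# Three hypothetical 32x32 candidates: flat gray, fine checkerboard, smooth ramp.
flat = np.full((32, 32), 128)
sharp = (np.indices((32, 32)).sum(axis=0) % 2) * 255
ramp = np.tile(np.arange(32) * 8, (32, 1))
frames = [flat, sharp, ramp]
boxes = [(4, 4, 28, 28)] * 3
print(rank_input_frames(frames, boxes)[0])  # 1
```

A score like this cannot tell whether the object is fully visible or in a representative pose, so it complements, rather than replaces, looking at the frames yourself.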

Additionally, if multiple input frames do not help, multiple instances on the same object often solve the problem (as described previously in the screened object example). We’ve tracked Mario’s kart as it drove through a question block and blasted off a ramp with flames shooting out of its exhaust pipes. The kart then did a flip, and a glider opened above it. Upon landing, the glider retracted and Mario continued racing.

In this example, multiple input frames or instances (say, one with the glider and one without it) can help. We can also make the glider a separate AutoAOI. This takes only a few more clicks, runs simultaneously with the kart AutoAOI, and enables new analyses: we can easily compare how long someone looked at the kart versus the glider while the glider was present. With this strategy, we also keep the flexibility to combine the data.
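The kart-versus-glider comparison boils down to counting gaze samples per AOI. The sketch below assumes a hypothetical per-sample export listing which AOIs each gaze sample fell inside and which AOIs were present at that moment, plus a constant sampling rate; dwell time is then approximated as the number of matching samples divided by the rate. Because each sample holds a set of AOIs, the separate kart and glider data can still be combined later.

```python
from typing import List, Optional, Set

def dwell_seconds(hits: List[Set[str]], present: List[Set[str]], aoi: str,
                  sample_rate_hz: float, only_when: Optional[str] = None) -> float:
    """Approximate dwell time on `aoi` as matching samples / sampling rate.

    hits[i]    : AOIs the i-th gaze sample fell inside
    present[i] : AOIs on screen at the i-th sample
    only_when  : if given, count only samples taken while that AOI was present
    """
    n = sum(
        1 for h, p in zip(hits, present)
        if aoi in h and (only_when is None or only_when in p)
    )
    return n / sample_rate_hz

# Hypothetical export: 8 gaze samples at 4 Hz; the glider opens halfway through.
present = [{"kart"}] * 4 + [{"kart", "glider"}] * 4
hits = [{"kart"}, {"kart"}, set(), {"kart"}, {"glider"}, {"kart"}, {"glider"}, {"glider"}]
rate = 4.0
print(dwell_seconds(hits, present, "kart", rate))                      # 1.0
print(dwell_seconds(hits, present, "kart", rate, only_when="glider"))  # 0.25
print(dwell_seconds(hits, present, "glider", rate))                    # 0.75
# Combining the two AOIs afterwards: samples on the kart OR the glider.
print(sum(1 for h in hits if h & {"kart", "glider"}) / rate)           # 1.75
```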

5. Always Check your AOIs

AutoAOIs make it much easier to add AOIs around moving objects, but regardless of which method you use, you should always check that the AOIs look the way you expect. Sometimes a segment of the recording or video does not work as well as the rest, and an alternative solution (an additional input frame or AutoAOI analysis) is needed. Even in these cases, automatic AOI placement can still save users hours of analysis.
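Part of this check can be automated by flagging frames where the AOI looks suspicious. The sketch below assumes you have the AOI’s area in pixels for each frame (None when no AOI was produced) and flags missing AOIs and sudden jumps in area; the 1.5× ratio is an arbitrary, hypothetical threshold. Flagged frames still need a human look, because an object may legitimately leave the view.

```python
from typing import List, Optional

def suspicious_frames(areas: List[Optional[float]], max_ratio: float = 1.5) -> List[int]:
    """Frames worth a manual look.

    areas[i] is the AOI's area in pixels in frame i, or None if no AOI was produced.
    A frame is flagged if its AOI is missing or if its area grew or shrank by more
    than `max_ratio` relative to the previous frame that had an AOI.
    """
    flagged: List[int] = []
    prev: Optional[float] = None
    for i, area in enumerate(areas):
        if area is None or area <= 0:
            flagged.append(i)  # no AOI here: the object may be hidden, or tracking failed
            continue
        if prev is not None and max(area / prev, prev / area) > max_ratio:
            flagged.append(i)
        prev = area
    return flagged

areas = [1000, 1050, 1100, None, 1080, 2600, 1150]
print(suspicious_frames(areas))  # [3, 5, 6]
```

Frame 5 is flagged for the sudden jump and frame 6 for the return to normal size, so a single glitch typically shows up as a pair of flags.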
