Areas of Interest (AOIs) are the key tool for quantitative eye tracking analysis. Manually creating AOIs for dynamic stimuli such as videos, eye tracking glasses data, and data from VR environments can be very inefficient and inconsistent. Gaze Mapping and Automated AOIs make it easier to extract quantitative insights from dynamic stimuli.

Areas of Interest (AOIs) are the powerhouse of eye tracking analysis. AOIs are shapes drawn on stimuli in a study so that the data can be separated by location on the screen. Eye-tracking metrics such as dwell time, time to first fixation, and revisits come from AOIs. While heatmaps and gaze paths are excellent tools that help us understand the set-up of the study and visualize some of the results, AOIs allow us to analyze attention in a quantitative way.
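To make these metrics concrete, here is a minimal sketch of how dwell time, time to first fixation, and revisits could be derived from a list of fixations and one rectangular AOI. The `Fixation` and `RectAOI` types, the sample data, and the exact metric definitions (dwell time as the sum of fixation durations inside the AOI, a revisit as any entry after the first) are assumptions made for illustration; analysis software may define them differently.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    start_ms: float  # fixation onset, relative to stimulus onset
    end_ms: float    # fixation offset
    x: float         # gaze position in stimulus pixels
    y: float


@dataclass
class RectAOI:
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def aoi_metrics(fixations, aoi):
    """Return dwell time, time to first fixation, and revisits for one AOI."""
    dwell_ms = 0.0
    ttff_ms = None      # stays None if the AOI is never fixated
    visits = 0
    was_inside = False
    for fix in sorted(fixations, key=lambda f: f.start_ms):
        inside = aoi.contains(fix.x, fix.y)
        if inside:
            dwell_ms += fix.end_ms - fix.start_ms
            if ttff_ms is None:
                ttff_ms = fix.start_ms
            if not was_inside:
                visits += 1  # gaze entered the AOI from outside
        was_inside = inside
    return {
        "dwell_ms": dwell_ms,
        "ttff_ms": ttff_ms,
        "revisits": max(visits - 1, 0),
    }


# Hypothetical fixation data for one participant
fixations = [
    Fixation(0, 200, 50, 50),        # outside the AOI
    Fixation(250, 450, 320, 240),    # inside (first visit)
    Fixation(500, 600, 330, 250),    # inside (same visit)
    Fixation(650, 800, 10, 10),      # outside
    Fixation(850, 1000, 310, 260),   # inside (revisit)
]
logo = RectAOI(left=300, top=200, right=400, bottom=300)
metrics = aoi_metrics(fixations, logo)
print(metrics)
```

Without the AOI, the same fixations could only be visualized; with it, each participant yields numbers that can be compared statistically.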

Three ways to create AOIs

Place AOIs manually. This allows you to decide exactly where the AOI should be and what shape it should have. For still images, this is straightforward: draw a shape around the object you want to collect eye tracking metrics for.

For videos with designated areas where something appears and disappears (e.g., maps and dashboards in video games or the subtitles at the bottom of a movie), AOIs can be turned on and off so that they are only present when the object is present.
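As a rough sketch of the on/off idea, an AOI can carry a list of time intervals during which it is active, and a gaze sample only counts as a hit when it falls inside the shape while the AOI is switched on. The `TimedAOI` class, the coordinates, and the timestamps below are hypothetical and not taken from any particular software.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TimedAOI:
    name: str
    left: float
    top: float
    right: float
    bottom: float
    # (start_ms, end_ms) windows during which the object is on screen
    active_intervals: List[Tuple[float, float]]

    def is_active(self, t_ms: float) -> bool:
        return any(start <= t_ms < end for start, end in self.active_intervals)

    def hit(self, t_ms: float, x: float, y: float) -> bool:
        inside = self.left <= x <= self.right and self.top <= y <= self.bottom
        return inside and self.is_active(t_ms)


# Hypothetical subtitle area that appears twice during a clip
subtitles = TimedAOI("subtitles", 100, 620, 1180, 700,
                     active_intervals=[(2000, 5000), (9000, 12000)])

# Gaze samples as (time_ms, x, y)
samples = [(1000, 600, 650), (3000, 600, 650), (6000, 600, 650), (10000, 50, 50)]
hits = [subtitles.hit(t, x, y) for t, x, y in samples]
print(hits)
```

Only the second sample counts: the first and third fall in the subtitle area while no subtitle is shown, and the fourth occurs while the AOI is active but lands elsewhere on the screen.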

The difficulty with AOIs on videos comes from freedom of movement (of the study participant, the object, or both). When movement can be in any direction, AOIs have to be adjusted frame by frame to follow the object, which is tedious work. Gaze mapping and AutoAOIs make creating AOIs easier.

Gaze mapping. When participants move during a recording, they can encounter an object from multiple angles, so the object of interest shifts from one part of the recorded scene to another. Because the object appears to move, manually placing AOIs becomes tedious. Gaze mapping "maps" the gaze points from the eye tracking data onto a reference image, which makes manual AOI placement much simpler because the AOIs are drawn on a still image.
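The article does not say how the mapping is computed. One common approach for flat objects, assumed here purely for illustration, is to estimate a planar homography (a 3x3 matrix) between each video frame and the reference image, then push every gaze point through it. The sketch below shows only that last step; the matrix `H_frame` is a made-up example, and in practice it would be estimated per frame, for instance by matching image features.

```python
def map_gaze_to_reference(H, x, y):
    """Apply a 3x3 homography H (nested lists, row-major) to a scene point.

    Returns the corresponding (x, y) position on the reference image.
    """
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("gaze point maps to infinity under this homography")
    return u / w, v / w


# Hypothetical homography for one frame: scale by 2, then shift by (100, 50).
# A real matrix would also encode perspective from the viewing angle.
H_frame = [
    [2.0, 0.0, 100.0],
    [0.0, 2.0, 50.0],
    [0.0, 0.0, 1.0],
]

# Gaze position in the scene-camera frame, in pixels
ref_x, ref_y = map_gaze_to_reference(H_frame, 320.0, 240.0)
print(ref_x, ref_y)
```

Once every gaze point lives in reference-image coordinates, a single set of static AOIs drawn on that image serves the whole recording.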

Automated AOIs. These tools create a mask over each selected moving object that precisely reflects its shape and location. In each frame, an AOI is automatically created to fit the mask, so the AOI moves and morphs as the object does.
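To illustrate the idea of an AOI that follows a mask, the sketch below assumes the tracking step has already produced one binary mask per frame (how the masks are generated is outside this example). It derives a bounding box from each mask and tests whether a gaze point lands on the masked object. The tiny 4x5 masks and the gaze coordinates are invented for illustration.

```python
import math


def mask_bounding_box(mask):
    """Return (left, top, right, bottom) of the nonzero pixels, or None if empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for row in mask for c, value in enumerate(row) if value]
    return min(cols), rows[0], max(cols), rows[-1]


def gaze_hits_mask(mask, x, y):
    """True if the gaze point (in pixel coordinates) falls on a masked pixel."""
    col, row = math.floor(x), math.floor(y)
    if row < 0 or row >= len(mask) or col < 0 or col >= len(mask[row]):
        return False
    return bool(mask[row][col])


# Two hypothetical frames: the object moves right and changes shape
frames = [
    [[0, 0, 0, 0, 0],
     [0, 1, 1, 0, 0],
     [0, 1, 1, 0, 0],
     [0, 0, 0, 0, 0]],
    [[0, 0, 0, 0, 0],
     [0, 0, 0, 1, 1],
     [0, 0, 0, 1, 1],
     [0, 0, 0, 0, 1]],
]
gaze = [(1.5, 1.2), (1.5, 1.2)]  # the participant keeps looking at the same spot

boxes = [mask_bounding_box(m) for m in frames]
hits = [gaze_hits_mask(m, x, y) for m, (x, y) in zip(frames, gaze)]
print(boxes, hits)
```

The gaze point stays put while the object moves away, so it counts as a hit in the first frame only. No one had to redraw the AOI between frames.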

Gaze mapping and AutoAOIs are analysis tools for dealing with movement during eye tracking. Without them, getting quantitative insights means creating AOIs manually, which is labor intensive: researchers often have to step through hundreds or thousands of frames to place AOIs. For eye tracking glasses, VR studies, and screen recordings, each participant's recording also has to be analyzed separately.

To complicate matters further, placing these AOIs for a single study often takes several researchers a lot of time. This introduces inconsistency, because no two researchers are likely to place AOIs in the same way or with the same size and shape. Training and strict protocols help, but they take time and the process remains error-prone. Gaze Mapping and AutoAOIs reduce both the workload and the errors of placing AOIs manually frame by frame.

So, how do you know which to choose?

First, Find The Movement

What is moving?

Gaze mapping was developed to accommodate participants who wear eye tracking glasses and move around a stationary object of interest. Whether they are moving through a lab or store, exploring a virtual environment, or operating a vehicle, study participants view the object from different angles as they approach it and move away from it, which makes drawing AOIs tedious.

AutoAOIs were developed to accommodate object movement in dynamic stimuli such as video advertisements, movie trailers, or video games. As with gaze-mapping, it is tedious to draw the AOIs in these scenarios frame-by-frame. AutoAOIs are used to track moving characters or items in videos the study participants watched.

When deciding which tool to use, thinking about what is moving is a good place to start with simple study designs, but don’t stop there! There are many exceptions.

One exception is that gaze mapping works very well for webpages, even though the respondent is usually sitting still at a computer while the webpage scrolls across their field of view. The webpage itself makes a great reference image to map gaze points onto.

Another exception is using AutoAOIs for tracking stationary objects (such as signs, benches, buildings, and billboards) as a study participant drives past with eye tracking glasses.

With so many field studies and VR experiments, what should you use when both the study participant and the object are moving? It depends!

While movement may be a good starting place for understanding when to use Gaze Mapping vs AutoAOIs, considering the study design and the research question is a more reliable approach. We will go through two critical considerations to help you decide whether gaze mapping or AutoAOIs best suit your study.

Make Sure It's Picture Perfect

Would the object make a good reference image?

Gaze-mapping puts data points on a reference image and therefore works best with objects that could be well-represented in a single image (even if it is a big one). In other words, gaze mapping works well for things you might consider kind of flat. Examples include product shelves, dashboards, websites, control panels, gallery walls and billboards. While some of these are 3D objects, the research question is often focused on one side of the object.

Whole-in-One – Capture The Scene

Are you interested in the object as a whole or features on that object?

There are cases when you could use either AutoAOIs or gaze mapping and the best choice depends on your research question.

If you are interested in collecting eye tracking metrics on whole objects, you could use AutoAOIs. In a driving experiment, you might want to see if the driver noticed a particular billboard. In a video game, the playable character might be running past or interacting with a control panel and you could measure how much time the player spent looking at the control panel. In a museum, we might be interested in seeing how many people noticed a sign or picture on a gallery wall. Billboards, control panels, and pictures can be AutoAOIs.

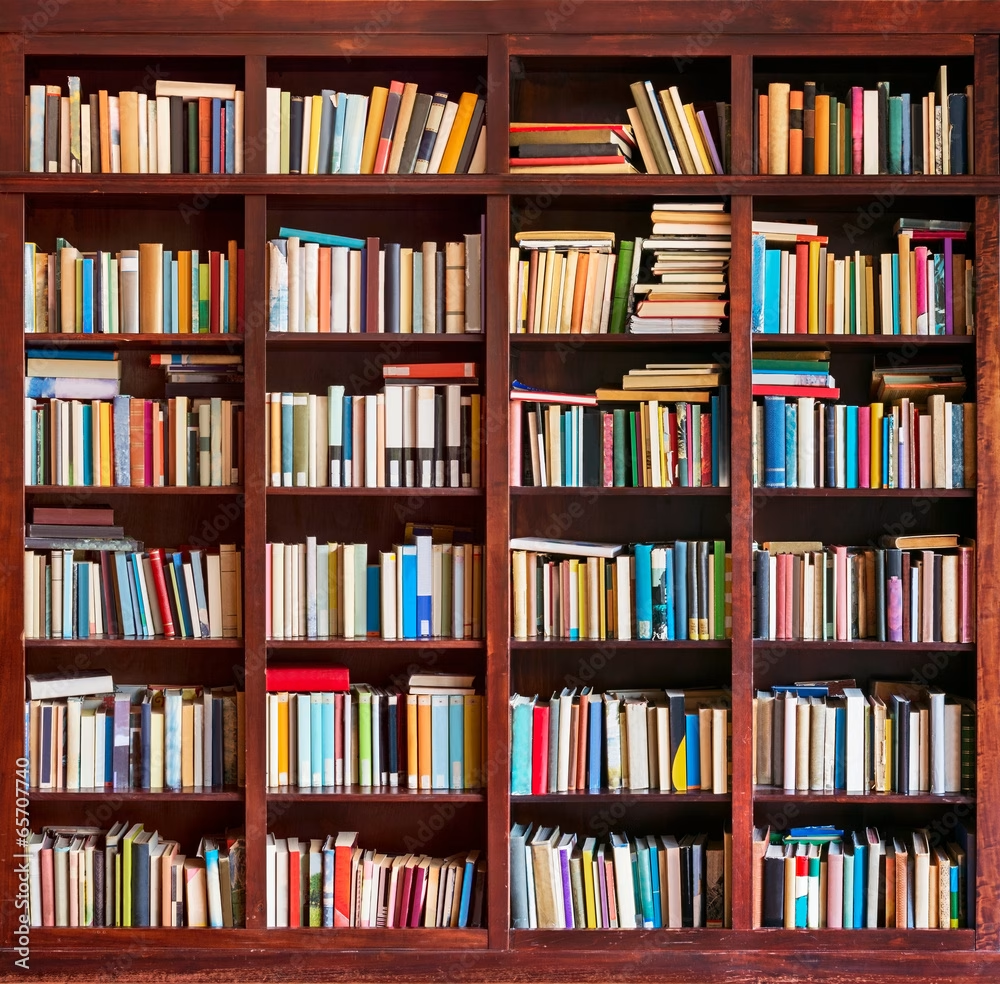

Example: Book Marketing

We are looking into book marketing and are interested in determining

- what people notice about our poster when they enter the building

- how people’s eyes scan our carefully curated display shelf

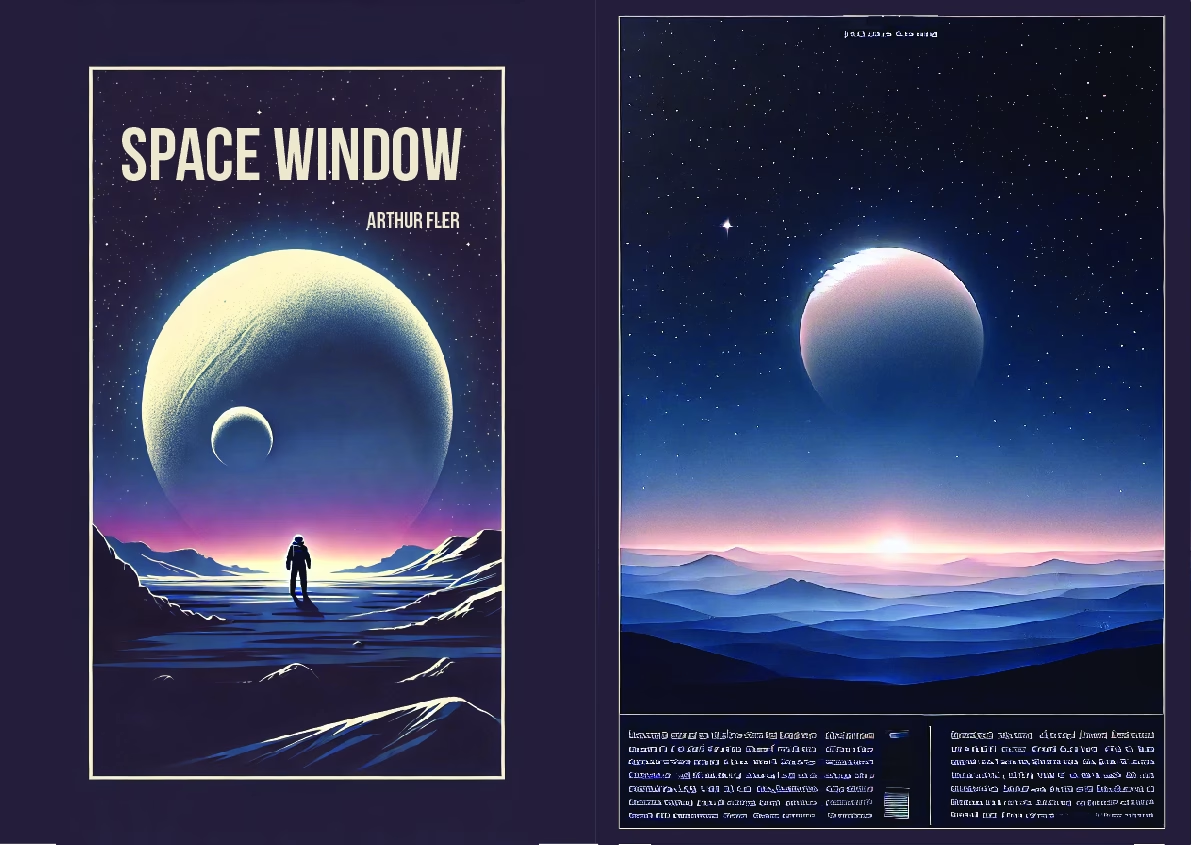

- what part of the book cover (back and front) they focus on when they hold the book.

In the first part of our study, subjects walk freely through a bookstore or library. We have a poster promoting our book by the entrance and a display shelf with our book inside. A reference image could be made of the display shelf and a separate one of the promotional poster.

In the second part of the study, people are handed the book to examine freely. Reference images could be made of the front cover and the back cover to see which features (text or pictures) caught their attention longest.

Using multiple reference images works here because all of them (the shelf, the poster, and the front and back covers of the book) show things that are relatively flat and have a clear face that can serve as a reference image. Importantly, none of these objects appears in any of the other reference images.

However, if answering your research question relies on eye tracking metrics of features of an object, it might be worth considering gaze mapping. Billboards might have a picture of the product, a tagline, and a logo or brand name. Control panels have buttons, switches, gauges or monitors.

A gallery wall might have multiple pieces of artwork and signs with information about the art. Even a single painting might have multiple subjects within it that you want to consider. All of these objects are relatively flat (make good reference images) and have components or features that could be studied using AOIs, making gaze mapping a great choice.

You are not limited to choosing one method or the other; you can do both! Just be sure your research question and hypotheses align with the analysis strategy.

Let’s take our bookstore/library sign study from before:

Conclusion

Both Gaze Mapping and AutoAOIs save researchers time during data analysis by avoiding frame-by-frame placement of AOIs. To decide which to use, consider your research question (Is the whole object an AOI, or are there multiple AOIs on the object?) and your study design (What is moving? Is your object flat?). Your research may involve using both techniques.
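As a rough summary, the questions in this article can be written down as a small helper. This is only a simplified encoding of the guidance here, not a rule built into any software. The function name and parameters are hypothetical, and real studies will have exceptions that such a checklist cannot capture.

```python
def suggest_methods(object_is_flat, need_whole_object, need_features):
    """Suggest analysis tools for one object of interest.

    object_is_flat:    could the object be well represented by one reference image?
    need_whole_object: does the research question treat the whole object as an AOI?
    need_features:     does it need separate AOIs for features on the object?
    """
    methods = set()
    if need_whole_object:
        methods.add("AutoAOIs")
    if need_features and object_is_flat:
        methods.add("gaze mapping")
    # An empty set means the simple criteria do not settle the question
    return methods


# Did the driver notice the billboard at all?
print(suggest_methods(object_is_flat=True, need_whole_object=True, need_features=False))

# Which part of the book cover held attention longest?
print(suggest_methods(object_is_flat=True, need_whole_object=False, need_features=True))
```

When both questions matter for the same object, the helper returns both tools, which matches the point that the two techniques can be combined.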

When designing your eye tracking study, plan your analysis strategy before you collect data (it can be refined in a pilot study). This is the ideal time to decide whether to use gaze mapping, AutoAOIs, or both, and to make sure you collect data that can answer your research question.
