iMotions is headquartered in Copenhagen, a city some would argue is the design capital of the world. Danes are said to be keenly attuned to how spaces and objects make people feel, and designers and architects such as Arne Jacobsen and Bjarke Ingels are household names. Even the Danish word hygge has become known internationally as the practice of creating coziness in one’s surroundings, reflecting how highly Danes prize the combination of functionality and minimalism with aesthetics and beauty.

Yet even for the most skilled designers and architects, predicting how visitors and inhabitants will react to a new public space, whether a building, an urban area, or an art exhibit, relies on established knowledge of architecture and urban planning, but also on a fair bit of guesswork. How can we take the guesswork out of this process before sinking money, resources, and labor into construction? Emerging tools in neuroarchitecture, including virtual reality, eye trackers, and other biosensors, are starting to provide some answers. Below are five studies using eye tracking and other biosensors, such as galvanic skin response (GSR) and electroencephalography (EEG), that are influencing architecture, urban planning, and evidence-based design.

Table of Contents

- 1) Where do we look when we look at buildings?

- 2) How restorative can indoor environments be?

- 3) How rewarding are certain aesthetic and functional features of homes?

- 4) How can we guide visual attention when looking at paintings?

- 5) How do we rank elements depicted in renderings of proposed urban planning initiatives?

- Conclusion

- Eye Tracking Glasses

- References

1) Where do we look when we look at buildings?

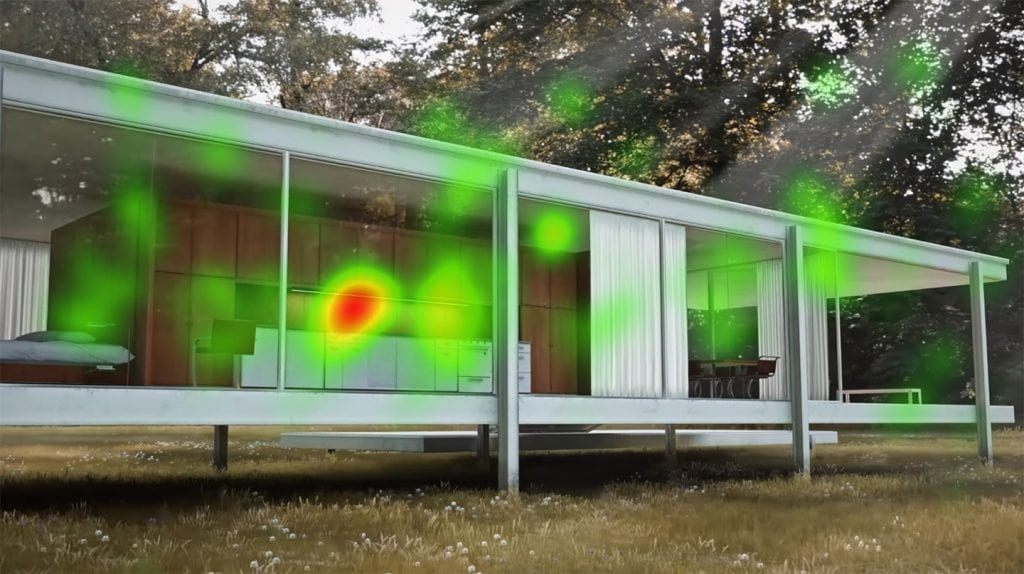

Ann Sussman and Janice M. Ward used iMotions to analyze participants’ gaze with eye tracking as they looked at pictures of buildings in New York and Massachusetts. They found that featureless or blank facades did not attract visual attention, whereas walls with high-contrast features or murals did, as did depictions of people on those facades. They liken this behavior to an attempt to latch onto something that grounds us in a space and makes us feel less anxious. [1]

2) How restorative can indoor environments be?

Zhengbo Zou and Semiha Ergan at New York University observed how participants navigated two virtual environments to determine whether certain design features affect restorativeness. One environment was optimized to be restorative, based on qualities identified in previous studies of how people experience restorativeness, and the other was non-restorative. While participants moved through these spaces, the researchers recorded EEG, eye tracking, and electrodermal activity (EDA, also known as GSR) in iMotions. They found that the presence and size of windows, the amount of natural light, and the presence of natural views were significantly related to participants’ experience of restorativeness in these virtual spaces. The authors suggest that their findings could help architects and interior designers not only design similar studies to collect user-experience data, but also steer designs toward including these features so that residents feel more at home. [2]

3) How rewarding are certain aesthetic and functional features of homes?

Similarly, Balconi, Rezk, and Leanza investigated whether aesthetic and functional attributes of domestic environments affect cognitive and emotional reward mechanisms. They showed participants videos of different domestic environments while recording eye tracking and EEG data. They posit that functional elements (light, size, and ceiling height) and aesthetic elements (colors, shapes, and design) engage and reward people differently depending on their age, gender, and spatial cognition abilities. These findings, they conclude, show the importance of using neuroarchitecture to design environments that better account for their end users’ spatial orientation. [3]

4) How can we guide visual attention when looking at paintings?

Researchers at Marche Polytechnic University in Italy wanted to understand how we perceive art in order to optimize Augmented Reality displays in museums. They recorded eye tracking data in iMotions while participants viewed three famous paintings at the National Gallery of Marche, and used Area of Interest (AOI) data to identify which visual elements in the paintings participants focused on most. They then accentuated those elements with additional Augmented Reality displays that further guided and personalized the viewing experience. This study suggests new ways to orient and guide museum-goers through their visit using Augmented Reality. [4]
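To illustrate what AOI analysis involves, here is a minimal sketch that ranks areas of interest by total dwell time. It assumes fixations have already been detected from the raw gaze data (each with an x/y position and a duration) and that each AOI is a simple rectangle. The names `Fixation`, `AOI`, and `rank_aois_by_dwell`, and the example coordinates, are hypothetical; this is not the iMotions implementation or the researchers’ exact method.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float            # gaze position in image pixels (assumed coordinate system)
    y: float
    duration_ms: float  # fixation duration in milliseconds


@dataclass
class AOI:
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, fx: Fixation) -> bool:
        # Rectangular hit test; real AOIs may be polygons
        return self.left <= fx.x <= self.right and self.top <= fx.y <= self.bottom


def rank_aois_by_dwell(fixations, aois):
    """Sum fixation durations per AOI and return (name, total_ms) pairs, highest first."""
    dwell = defaultdict(float)
    for fx in fixations:
        for aoi in aois:
            if aoi.contains(fx):
                dwell[aoi.name] += fx.duration_ms
                break  # assign each fixation to the first matching AOI only
    # Include AOIs that received no fixations, with zero dwell time
    totals = {aoi.name: dwell[aoi.name] for aoi in aois}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical AOIs for one painting, listed most specific first so that
# the catch-all "background" region only receives leftover fixations
aois = [
    AOI("face", 100, 50, 200, 150),
    AOI("hands", 120, 300, 220, 380),
    AOI("background", 0, 0, 400, 400),
]
fixations = [
    Fixation(150, 100, 300),
    Fixation(160, 340, 200),
    Fixation(10, 10, 120),
    Fixation(180, 120, 250),
]
ranking = rank_aois_by_dwell(fixations, aois)
print(ranking)  # [('face', 550.0), ('hands', 200.0), ('background', 120.0)]
```

Total dwell time is only one possible AOI metric; time to first fixation or fixation count could be ranked the same way by changing what is accumulated per AOI.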

5) How do we rank elements depicted in renderings of proposed urban planning initiatives?

Visual preference surveys ask people to rate images, often architectural renderings of proposed urban spaces, giving them an idea of how a space will look before it is built. Noland and colleagues conducted a study that supported the effectiveness of these surveys by combining participants’ stated answers with their eye tracking data. They found that in both the qualitative and quantitative responses, images of people, pedestrian features, and greenery elicited positive reactions, while cars and parking elicited more negative reactions. Interestingly, reactions to buildings in the designs were mixed. The researchers suggest that urban planners can use this technique to better understand how to make cities more pedestrian-friendly and less dominated by vehicle traffic. [5]


Conclusion

Moving toward evidence-based design with neuroarchitecture techniques paves the way for cities and buildings that are better aligned with our aesthetic, emotional, and functional preferences. Using technologies such as eye tracking in virtual reality, architects can test prototypes of these spaces before they are built, making better use of resources while designing more human-centric environments. To learn more about eye tracking, download our free pocket guide below.

Eye Tracking Glasses

The Complete Pocket Guide

- 35 pages of comprehensive eye tracking material

- Technical overview of hardware

- Learn how to take your research to the next level

References

[1] Sussman, A., & Ward, J. M. (2017, November 27). Game-Changing Eye-Tracking Studies Reveal How We Actually See Architecture. Common Edge. Retrieved from https://commonedge.org/game-changing-eye-tracking-studies-reveal-how-we-actually-see-architecture

[2] Zou, Z., & Ergan, S. (2019). A framework towards quantifying human restorativeness in virtual built environments. In EDRA 50: Sustainable Urban Environments: Research, Design and Planning for the Next 50 Years, May 22–26, 2019, Brooklyn, New York, USA.

[3] Balconi, M., Rezk, S., & Leanza, F. (2015). Environment impact based on functional and aesthetical features: What is their influence on spatial features and rewarding mechanisms? Abstract from the VI International Conference on Spatial Cognition, 16(S1), 83.

[4] Naspetti, S., Pierdicca, R., Mandolesi, S., Paolanti, M., Frontoni, E., & Zanoli, R. (2016). Automatic analysis of eye-tracking data for augmented reality applications: A prospective outlook. In L. De Paolis & A. Mongelli (Eds.), Augmented Reality, Virtual Reality, and Computer Graphics (AVR 2016), Lecture Notes in Computer Science, vol. 9769. https://doi.org/10.1007/978-3-319-40651-0_17

[5] Noland, R. B., Weiner, M. D., Gao, D., Cook, M. P., & Nelessen, A. (2017). Eye-tracking technology, visual preference surveys, and urban design: Preliminary evidence of an effective methodology. Journal of Urbanism: International Research on Placemaking and Urban Sustainability, 10(1), 98–110. https://doi.org/10.1080/17549175.2016.1187197