iMotions 11

Built on 20 years of innovation

Capturing The Full Picture of Human Behavior

In 2005, we set out with a bold vision: to give researchers the power to see the signals beneath the surface, how people truly think, feel, and act, without being tied to any single device or method.

Twenty years later, our users are living our vision with us. Today, iMotions stands as the world’s leading platform for multimodal behavioral research, trusted by scientists, educators, and businesses across the globe.

Join CEO Peter Hartzbech as he reflects on 20 years of innovation, and explore the interactive timeline to see how iMotions has redefined behavioral research – and where we’re headed next.

Built for Researchers, Refined by Experience

iMotions 11 continues our long-term goal of making behavioral research easier, faster, and more flexible for everyone.

Built on two decades of experience, this release focuses on practical improvements that help researchers work smarter, from smoother sensor integrations to better automation and visualization tools.

Every update supports the same core idea: a hardware-agnostic platform where you can connect the tools you need, analyze data efficiently, and share insights with confidence.

Watch iMotions’ VP of Engineering Jacob Ulmert explain the latest updates and walk through what’s new in iMotions 11.

See the milestones of innovation that explain why virtually every top university has used our software over the years.

- iMotions Founded: The founding vision was to build non-intrusive emotion-sensing technologies, with a strong focus on the software layer. Remote eye tracking was the first sensor modality, using pupil, blink, and gaze data to estimate emotional responses. The first round of seed money was secured to hire people and start building the product and the business. The first target market identified was neuromarketing, a newly minted field combining neuroscience, biosensors, and marketing.

- First Product Release: Emotion Tool 1.0 was released, built to cater to early adopters of biosensors in the marketing research space. It featured the patent-pending emotional response detection algorithm, allowing marketers to pretest print ads quickly and easily.

- Entrepreneurship Prize: iMotions wins the Computer World DK Edison Prize, chosen by the audience, giving the iMotions team a confidence boost and a stronger belief in the chosen direction.

- Expanding the Market During Crisis: The 2008 financial crisis also hits iMotions as venture financing gets tough. A crisis is an excellent accelerator for innovation, and iMotions expands the product to support more use cases and a wider client base.

- First Academic Client: iMotions signs up its first academic client, CBS in Denmark.

- Beyond Eye Tracking – Pivot to Multimodal Platform: iMotions partnered with Emotiv and integrated the first EEG sensor. Soon after, iMotions partnered with Affectiva to integrate their Q-Sensor, for the first time allowing users to conduct multimodal, vendor-agnostic research in a single software platform. Partnerships and integrations became a strong and defining driver of the business’s growth. iMotions signed its first large university client, Iowa State University, with Dr. Stephen Gilbert.

- Boston Office: iMotions opens an office in Boston, USA. The founder relocates from Denmark to hire people and build up the market, with a focus on academia. This is the beginning of a strong growth path in which product-market fit is sharpened. 2012 was the first year of profitable operations, proof that the company had successfully expanded beyond neuromarketing applications.

- Integrating Affectiva: After one of iMotions’ close partners in facial expression analysis was acquired by Apple, iMotions reinvigorates its partnership with Affectiva by integrating their highly acclaimed engine for detecting emotions in the face.

- Gazelle Prize 2016: iMotions wins Børsen’s Gazelle Prize 2016 for high, consistent growth.

- Gazelle Prize 2017: iMotions wins Børsen’s Gazelle Prize 2017 for high, consistent growth.

- Gazelle Prize 2018: iMotions wins Børsen’s Gazelle Prize 2018 for high, consistent growth.

- Covid-19: Never let a crisis go to waste. The pandemic made it impossible to conduct research in the lab. The solution was to expand the product to support online research, which eventually led to new innovations such as high-performance webcam eye tracking and webcam respiration.

- Acquisition: Smart Eye acquires iMotions in a deal worth SEK 400 million. iMotions operates independently and continues its hardware-agnostic strategy. With Affectiva, iMotions, and Smart Eye under the same roof, the setup is a visionary one for the automotive segment, while iMotions leads the research side of the business.

- Affectiva Becomes Part of iMotions: Affectiva becomes part of iMotions under Smart Eye’s human behavior research division. Affectiva and iMotions have been close partners since the very beginning, and joining forces is a substantial boost for both, allowing them to share technology and resources while strengthening iMotions’ AI capabilities and Affectiva’s multimodal integration.

- Advanced Data Visualization: Developed more sophisticated graphing and visualization options for research data.

- iMotions 11 Released: The result of 20 years of pure hammering. We thank all the customers and partners who helped us through thick and thin. We look forward to the next 20 years of building.

iMotions 11 — Features you don’t want to miss out on

This release marks a milestone in iMotions’ history: 20 years of continuous innovation and improvements to iMotions Lab, the all-in-one software solution for human behavior research.

fNIRS Module

We partnered with Artinis to bring you best-in-class fNIRS. You can now record fNIRS data and export it in SNIRF format.

Multi-stimulus Replay

The new multi-stimulus replay timeline lets you visualize a respondent’s full exposure, including signals, annotations, and events, in one continuous flow.

Multi-face Tracking and Emotion Analysis with Affectiva

With the new Facial Expression add-on, you can now easily track and analyze multiple faces in videos. This is ideal for video conferences and calls.

R Notebook Improvements

A new notebook for accelerometry and all-new metrics for Voice Analysis, Facial Expression Analysis, and ECG, as well as general performance improvements to metric calculations.

Webcam Respiration

Since iMotions 10, we have added a brand-new module that allows contact-free breathing measurements in remote (online) research.

Video Segment Detection

Automatically segment videos based on scene detection, and use a built-in vision language model to generate scene descriptions.

Auto AOI

Support for ellipse and polygon dynamic AOIs. Support for static stimuli. General improvements.

iMotions Lab in Action

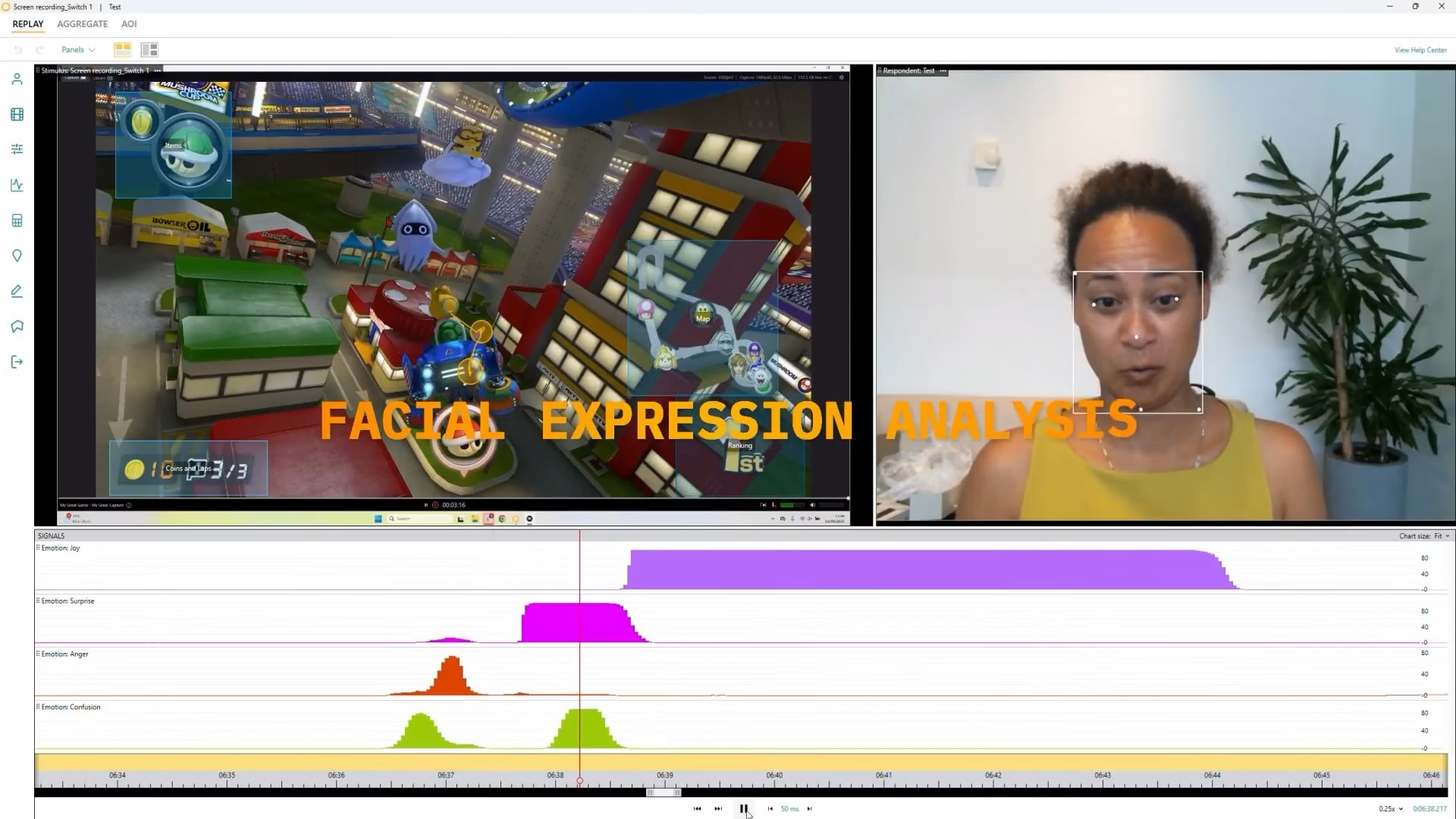

Mario Kart: From data to insights

Look at engagement through facial expression analysis and blinks.

Mario Kart: More sensors, more insights

A more complex multimodal analysis of engagement around crash events, using ECG, respiration, screen-based eye tracking (SB ET), voice, and facial expression analysis (FEA).

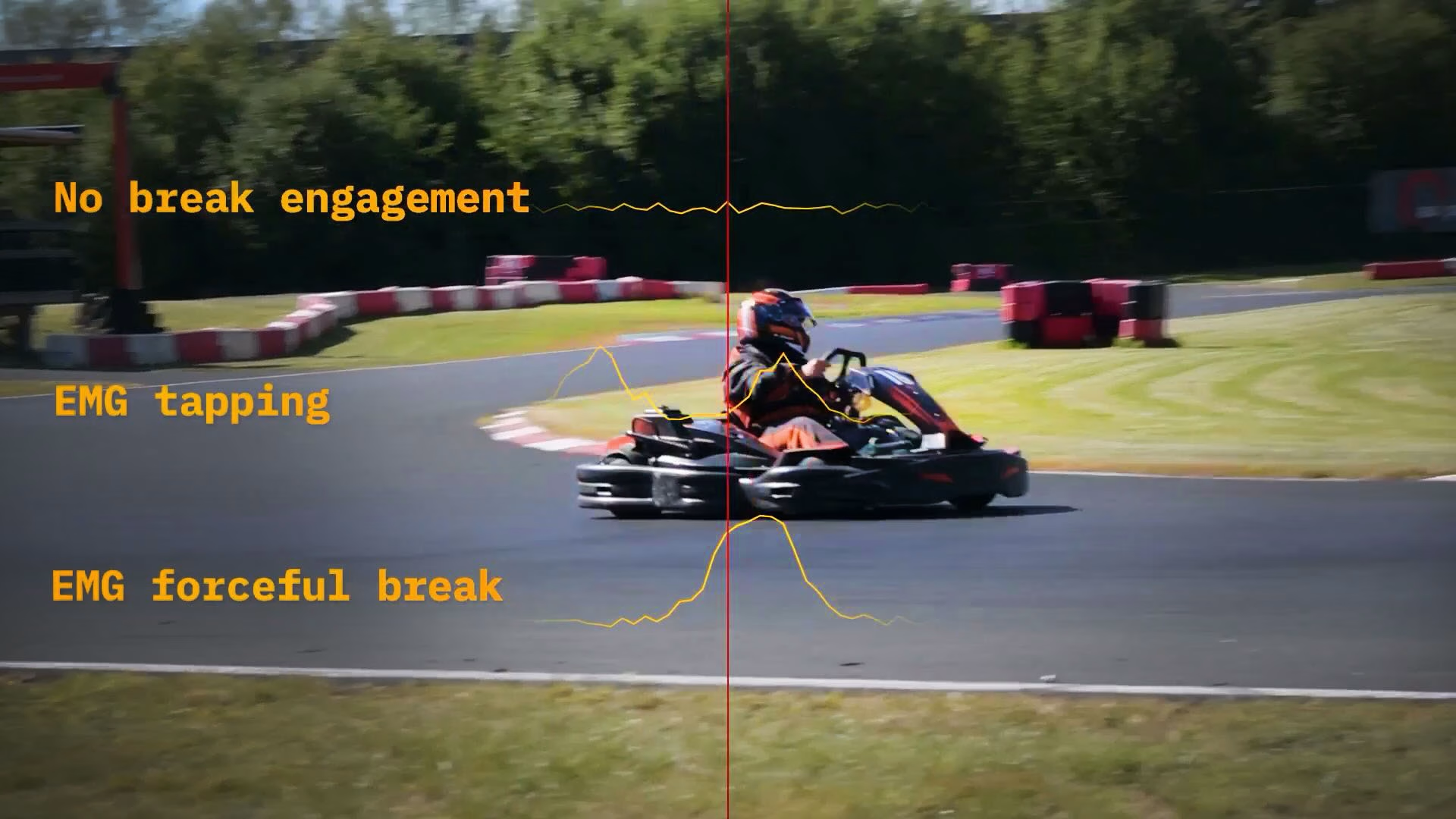

GoKart Racing: Research Outside The Lab

We took eye-tracking glasses, EMG sensors, a respiration belt, and ECG monitoring to the go-kart track to measure driver attention, stress, arousal, and braking behavior in a real driving scenario.

Ready to upgrade?

iMotions 11 is a free update for all users with an active license. Head over to my.imotions.com and grab your copy now.