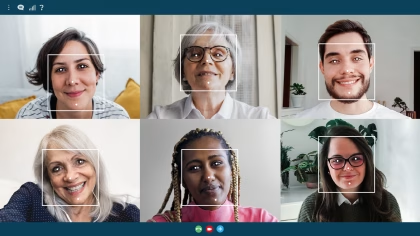

Personalization relies on user models: representations of a user's competencies, preferences, and skills that let a system adapt its behavior to optimize interaction. But the anticipated gain in productivity is offset by the effort involved in collecting and maintaining the user model. This is particularly pronounced in systems like ALeA (Adaptive Learning Assistant, https://courses.voll-ki.fau.de/), where the learner models contain competency estimates for thousands of concepts along multiple dimensions, here Bloom's learning levels. In this paper we present an exploratory study design that aims to determine whether close visual observation of learners can be used to elicit competency data automatically. Human educators perform this task routinely when teaching small groups of learners, and the study is intended to help equip adaptive learning systems to mimic it.
