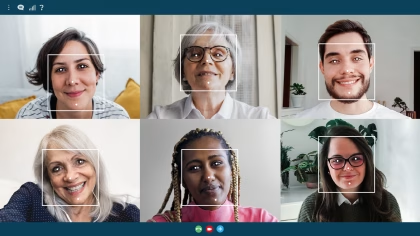

Investigating how trust is built and maintained is especially important as technological advances make scams and fraud easier and quicker to carry out. Fields such as neuroeconomics, psychology, and computer science have devoted considerable attention to the role that emotional expressions play in decision making, with many studies using paradigms such as trust games, negotiation games, and dilemma games to model real-world decision-making processes. Current research on player behavior and decision making typically isolates a single aspect, such as betrayal by a trustee or the influence of emotional facial expressions. In contrast, this paper describes a study that examines both elements jointly while incorporating automatic facial analysis as an additional source of multimodal affective data. This technology, which allows real-time, objective, and non-intrusive data collection, has been piloted in a dynamic dyadic trust game environment. The study builds a task framework grounded in three sources: current theories of the role of facial expression in decision making, current models that guide predictive decision making, and the role that automatic facial analysis plays within both. We implement this framework in a pilot study investigating human behavioral responses to affective expressions displayed by a digital agent.