AI-Powered Facial Expression Analysis

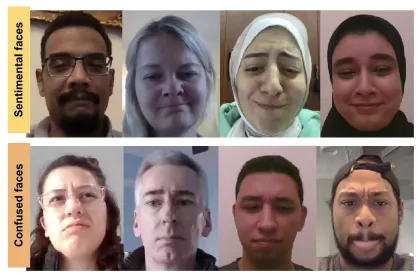

Our faces display our outward emotional expressions, giving a view of how we show our inner emotional state. These expressed emotional states are detected in real time by fully automated computer algorithms that analyze facial expressions recorded via webcam.

Tracking facial expressions can, when used in controlled contexts and in collaboration with other biosensors, be a powerful indicator of emotional experiences. While no single sensor is able to read minds, the synthesis of multiple data streams combined with strong empirical methods can begin to reach in that direction.

Live or post-recording automatic facial coding from any video

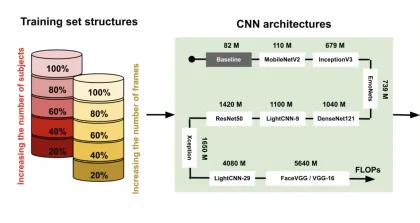

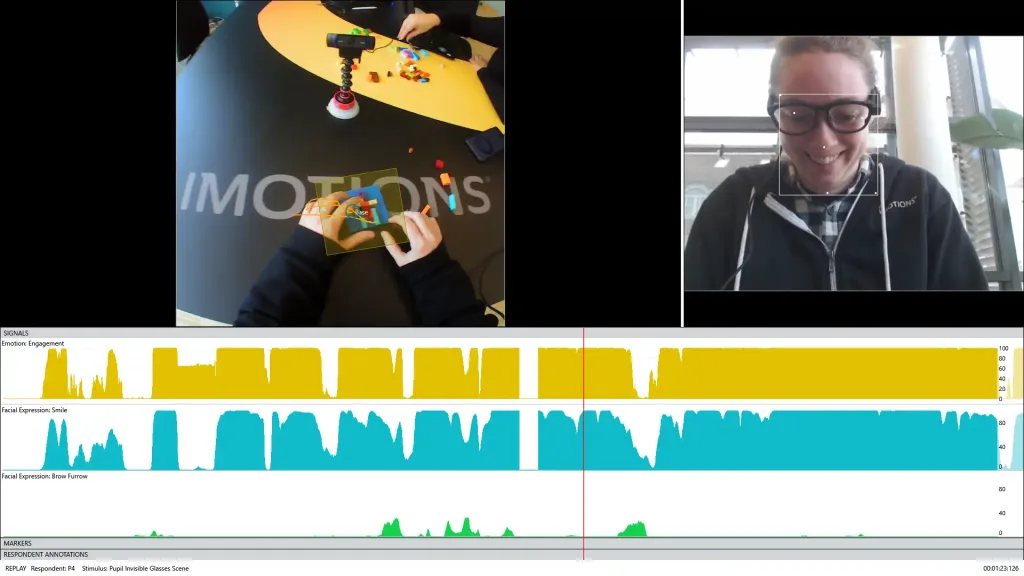

The iMotions Facial Expression Analysis Module seamlessly integrates Affectiva’s AFFDEX, a leading automated facial coding AI. Using a webcam, you can synchronize expressed facial emotions with stimuli live, directly in the iMotions software. If you have already recorded facial videos, you can simply import them and carry out the analysis. Gain insights via built-in analysis and visualization tools, or export data for additional analyses.

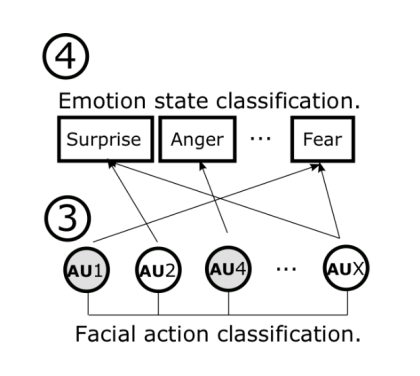

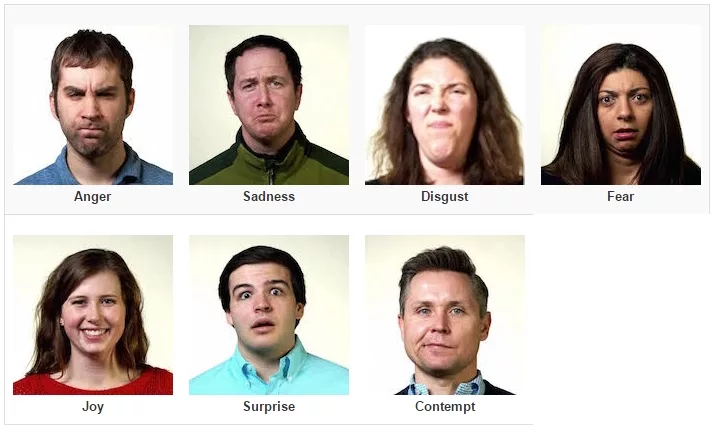

Detect 7 core emotions

iMotions’ facial expression analysis module provides real-time insights by tracking subtle facial muscle movements to identify seven core emotions: joy, anger, fear, surprise, sadness, contempt, and disgust. The methodology is built on the Facial Action Coding System (FACS), the objective framework developed by Dr. Paul Ekman and Wallace Friesen.

Researchers can leverage this data for applications such as user experience testing, market research, and mental health assessment, enhancing product design, marketing strategies, and overall well-being.

Get Valence and Engagement Statistics For Your Content

Valence and engagement are crucial metrics for understanding emotional responses. Valence represents the overall emotional tone, ranging from negative to positive. It helps researchers assess user experiences, product preferences, and brand perception. High valence indicates positive emotions, while low valence suggests negative feelings.

Engagement, on the other hand, measures the level of expressiveness and involvement. It reflects how actively an individual responds to stimuli, such as advertisements, videos, or interactive content. By tracking valence and engagement, businesses can optimize marketing strategies and improve user satisfaction, among other goals.
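For readers who export data for their own analysis, here is a minimal sketch of how per-frame valence and engagement values might be averaged per stimulus. The field names (`stimulus`, `valence`, `engagement`), the sample values, and the value ranges are all hypothetical and do not describe the actual iMotions export format.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-frame samples. Field names and value ranges are
# assumptions for illustration, not the actual iMotions export format.
frames = [
    {"stimulus": "ad_a", "valence": 40.0, "engagement": 80.0},
    {"stimulus": "ad_a", "valence": 20.0, "engagement": 60.0},
    {"stimulus": "ad_b", "valence": -30.0, "engagement": 50.0},
    {"stimulus": "ad_b", "valence": -10.0, "engagement": 30.0},
]


def summarize(frames):
    """Return the mean valence and mean engagement for each stimulus."""
    grouped = defaultdict(list)
    for frame in frames:
        grouped[frame["stimulus"]].append(frame)
    return {
        name: {
            "mean_valence": mean(f["valence"] for f in rows),
            "mean_engagement": mean(f["engagement"] for f in rows),
        }
        for name, rows in grouped.items()
    }


summary = summarize(frames)
for name, stats in summary.items():
    # Positive mean valence suggests a positive overall tone.
    tone = "positive" if stats["mean_valence"] > 0 else "negative or neutral"
    print(f"{name}: {stats} ({tone})")
```

In this sketch, a stimulus with a positive mean valence is read as eliciting a positive overall tone, while mean engagement is reported alongside it as a separate measure of expressiveness.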

Track the Movement of the Face for Facial Expression Analysis

Researchers are already using the facial expression analysis module to:

- Measure personality correlates of facial behavior

- Test affective dynamics in game-based learning

- Explore emotional responses in teaching simulations

- Assess physiological responses to driving in different conditions

- And more

Get a Demo

We’d love to learn more about you! Talk to a specialist about your research and business needs and get a live demo of the capabilities of the iMotions Research Platform.

FAQ

Here you can find some of the questions we are asked on a regular basis. If your question is not answered here or elsewhere on our website, please contact us.