iMotions has launched a series of live and recorded webinars about a broad range of topics within human behavior research. Our expert panelists include our in-house Ph.D. scientists, Product Specialists, and Customer Success Managers.

It’s been a fun team effort across our global offices and a great success. Our Part 1 series had over 1.5k total registrations! We were overwhelmed by the amount of interest we received and we thank you for your continued support.

Did you miss it? Don’t worry: the recordings are available on our Events page if you’re interested in seeing them for yourself.

Here are some of the Webinar Series’ Highlights:

- Measuring Anxiety in VR

- Bringing Neuroscience to Business Schools

- What are Emotions, and How do We Measure Them?

- Lab, real world, or phone screens – All about eye tracking setups

Webinar: Measuring Anxiety in VR

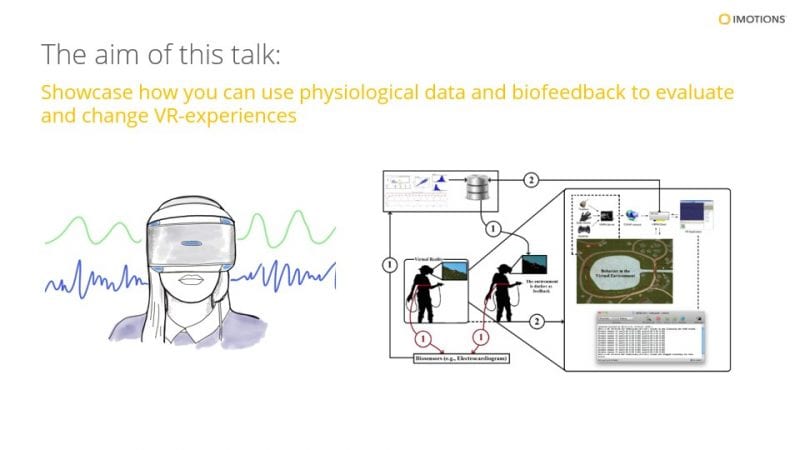

In this webinar, Tue Hvass, PhD (Senior Customer Success Manager) & Mike Thomsen (Technical Specialist) presented how biosensor data can be used to measure anxiety for healthcare applications.

In collaboration with the University of Southern Denmark’s VR8 project, researchers aim to evaluate and implement a virtual reality exposure intervention with an automated biofeedback system that provides flexible and adjustable treatment for adults with social anxiety disorder.

This innovative project uses virtual reality to let patients train in situations they would otherwise avoid in real life. Biofeedback measurements from the body are recorded during the VR experience and synchronized through iMotions. The patient’s pulse, sweat and eye movements are registered and used to adjust “the exposure.”

Picture Source Reference (1): Cipresso, Pietro. (2015).

Highlighted Question

- What is a feedback loop and what type of biosensors are you using?

Biofeedback is using a physiological response to drive the VR experience. For example, if the participant is starting to feel social anxiety, we can change the environment so it’s more calming, change the music, or turn it off completely.
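The feedback loop described above can be sketched in a few lines of Python. This is only an illustration, not the VR8 project's or iMotions' implementation: the names `SceneAdjustment`, `choose_scene_adjustment` and `run_feedback_loop`, the normalized arousal score, and both threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SceneAdjustment:
    """What the VR scene should do next (hypothetical settings)."""
    environment: str  # "unchanged" or "calming"
    music: str        # "unchanged", "softer", or "off"


def choose_scene_adjustment(arousal: float,
                            mild: float = 0.5,
                            high: float = 0.8) -> SceneAdjustment:
    """Map a normalized arousal score (0.0 to 1.0) to a scene adjustment.

    The score and both thresholds are assumptions for illustration; a real
    system would derive them from calibrated pulse, EDA, and gaze data.
    """
    if not 0.0 <= arousal <= 1.0:
        raise ValueError("arousal must be between 0.0 and 1.0")
    if arousal >= high:
        # Strong response: calm the environment and turn the music off.
        return SceneAdjustment(environment="calming", music="off")
    if arousal >= mild:
        # Moderate response: calm the environment and soften the music.
        return SceneAdjustment(environment="calming", music="softer")
    return SceneAdjustment(environment="unchanged", music="unchanged")


def run_feedback_loop(arousal_readings):
    """Apply the rule to each incoming reading, one loop iteration per sample."""
    return [choose_scene_adjustment(a) for a in arousal_readings]
```

Each new physiological reading produces a fresh decision about the scene, which is what makes it a loop: the scene changes, the participant responds, and the next reading reflects that response.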

The most common tools for VR human behavior research are:

- Eye Tracking for Visual Attention

- Electrodermal Activity/EDA for Arousal

- Electroencephalography – EEG

Further Reading: The Future of VR Exposure Therapy

Webinar: Bringing Neuroscience to Business Schools

Human behavior research has become increasingly prevalent in the study of business. Our behaviors and decisions are subject to many kinds of cognitive influences, including motivation, workload, stress and aversion to risk. Therefore, an understanding of business naturally must involve an understanding of human behavior. In this webinar, neuroscientist Dr. Jessica Wilson and Robert Christopherson provided a crash course in the most common biosensors used in business research and education.

Conventionally, researchers have tried to understand human behavior with traditional methods such as questionnaires, self-reports, and surveys, but these methods have limits. What we say is not necessarily how we feel. Our filters and biases, cultural influences, our ability to recall events, and even our emotional lexicon can affect the quality and accuracy of a participant’s self-reporting. This can limit the type of information you can get.

That’s why iMotions helps augment self-reporting questionnaires by using physiological data to help provide a more holistic view of how someone is acting in the moment.

Over the past decade, neuroscience has found new traction in business subdisciplines including consumer neuroscience, education, communication, and decision-making. But how can a business school begin to integrate neuroscience tools into its research facilities? Jessica and Robert shared examples of how schools and companies all over the United States are leveraging this technology, and provided actionable tips on how to get your laboratory off the ground if you’re ready to make the leap. Universities that have successfully integrated biosensor facilities into their business schools tend to fall into one of three use cases:

- Augmenting research output

- Ensuring student success

- Facilitating community involvement through commercial partnerships

Highlighted Questions

- What is the maximum number of biofeeds you can track; can you track 100 or more?

In a practical sense, that is restricted by the hardware on your computer. iMotions software is intended to collect data for one person at a time. The number of inputs per person depends on the hardware. For example, an EEG headset can have up to 32 different channels. You can use our API to bring in non-native feeds as well. However, the more important consideration is to aim for quality of signal, not quantity, and to pick and choose which metrics are best for your research question.

- What are the commercial applications for iMotions?

We see a breadth of industry verticals employing biosensors in their research. Examples include Media, Consumer Goods, Financial Services, Tech & Telecommunications, Automotive, Travel, and Services. Within these verticals, we see some commonality in the kinds of applications that use biosensors, including Consumer Insights, Product Testing and Usability. For students, this is especially important, as training in biosensor research techniques within a business school provides an in-demand skill set that can be immediately applicable in any industry.

Want to expand your knowledge?

Learn where The Top 10 Human Behavior Research Courses are being taught.

Check out 15 Powerful Examples of Neuromarketing in Action

Webinar: What are Emotions, and How do We Measure Them?

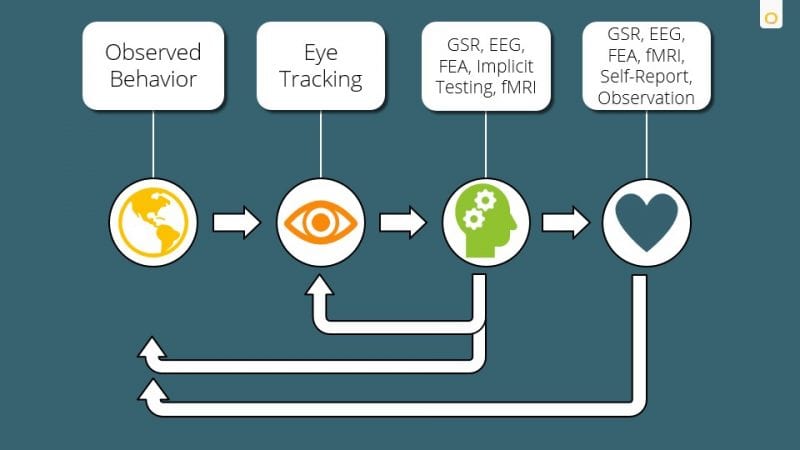

What can we reliably measure of an individual’s “emotional experience”? Brendan Murray, PhD and Jessica Wilson, PhD introduced how measuring emotions helps us understand human behavior.

What is Emotion?

Emotion is everywhere around us. Emotions are our brain’s way of tagging things that are relevant. We are bombarded by information every day and we need to filter out that information for survival, rewards, goals etc. Our emotional responses help us change our behavior and store information.

How do we measure Emotion?

We can measure the various emotional responses individuals are having in the moment to things in their environment. Many theories of emotion tend to operationalize emotion into two dimensions that generally operate independently from one another:

Emotional Arousal: the intensity of your emotional response.

Emotional Valence: whether the response is positive or negative.

Read more on how to measure Emotional Arousal with EDA (Electrodermal Activity or GSR), also known as skin conductance.

Similar to how emotions can vary in intensity, they can also vary in terms of their valence: How “positive” or “negative” they are. One way that we communicate our emotional valence to others is via our facial expressions: smiles, frowns, and furrowed brows can all communicate important information to others about what we are experiencing. By leveraging systems that can “code” a person’s facial expressions, we can gain insight into how positive or negative an experience is for that person in the moment.

Read more on how to measure Emotional Valence with Facial Expression Analysis.
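To make the two-dimensional view concrete, here is a small Python sketch that stores arousal and valence as separate values and describes a moment using both. The class name `EmotionSample`, the function `describe`, the numeric scales, and the 0.5 cutoff are assumptions chosen for illustration, not an iMotions data format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionSample:
    """One moment of emotional response on two independent dimensions."""
    arousal: float  # intensity, scaled here from 0.0 (calm) to 1.0 (intense)
    valence: float  # direction, scaled here from -1.0 (negative) to 1.0 (positive)


def describe(sample: EmotionSample, arousal_cutoff: float = 0.5) -> str:
    """Describe a sample by combining both dimensions (cutoff is hypothetical)."""
    if sample.arousal >= arousal_cutoff:
        intensity = "high arousal"
    else:
        intensity = "low arousal"

    if sample.valence > 0:
        direction = "positive valence"
    elif sample.valence < 0:
        direction = "negative valence"
    else:
        direction = "neutral valence"

    return f"{intensity}, {direction}"
```

Because the two values are stored separately, an intense negative response and an intense positive response share the same arousal but differ in valence, which is why a study typically needs a measure of each.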

Highlighted Questions

- What is the difference between Feelings and Emotion?

When we talk about emotions or emotional responses, we are typically talking about a response in a specific moment. They tend to be very rapid and very fleeting, and they serve the evolutionary purpose of helping us make momentary decisions.

Feelings or moods tend to be spread out over a long period of time – these are “lower level” and more persistent states that are not necessarily tied to a single moment in time the way that emotional responses are.

Check out: How to Measure Emotions and Feelings (And the Difference Between Them)

- What happens when biosensors tell you something different from survey results?

These can be the most interesting results. With biosensor research, you capture participants’ responses as they experience the stimulus, whereas self-report comes after the experience and offers insight into what their takeaways were or what was most memorable to them.

Biosensor and neuroscience research can give you the “what” and “how”:

- What are people responding to

- How intense are those responses

Self-report can give you insights into the “why”:

- Why do people think they prefer A vs. B.

Neuroscience and biosensor results can be good indicators of how a specific person will behave, while self-report is a good indicator of future advocacy.

Further readings:

System 1 and System 2: Facts and Fictions

Webinar: Lab, real world, or phone screens – All about eye tracking setups

Tom Baker, PhD (Customer Success Manager) and Kerstin Wolf (Product Specialist) discussed the different setup environments for eye tracking. In this webinar, they demonstrated use cases and demo studies for different setups, from screen-based to mobile testing to VR. iMotions has a suite of analysis tools that can be used with the appropriate eye tracker for each use case.

What eye tracking can tell you:

- Tool for learning about visual attention

- Insights into cognitive abilities/processes and attention

Typical Application Areas:

- Usability research/application testing

- Human factors in engineering/safety applications

- Car/airplane simulators (including our API integration)

- Athletics/sports sciences

- Psychological/cognitive research

- Teaching/training/education

- Neuromarketing

Types of Eye Tracking Setups

Screen-based eye tracking (the classic setup)

Screen-based eye tracking allows for the recording and analysis of responses to multimedia stimuli. You can perform screen-based eye tracking on images, videos, websites, games, software interfaces, 3D environments, and mobile phones to provide deeper insights into visual attention.

Eye tracking on phones or tablets

The eye tracker can be connected to, and calibrated for, virtually any mobile phone or tablet. This allows user responses to be examined regardless of the device of choice. The same stimuli types can also be recorded, even when presented on a mobile device.

Eye tracking glasses

If your research doesn’t take place in a lab with a screen, you can use eye tracking glasses to test in the real world, for example in stores, in sports, or in human factors studies.

VR Eye Tracking

The iMotions VR Eye Tracking Module allows for eye tracking data collection, visualization, and analysis in virtual environments using the HTC Vive Pro Eye and Varjo VR-1 & VR-2 eye tracking headsets. Capture and analyze visual attention using heat maps, gaze replays, and areas of interest (AOI) output metrics such as time to first fixation & time spent.
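The two AOI metrics named above, time to first fixation and time spent, can be sketched in Python as follows. This is not how iMotions computes them: it assumes a simplified 2D rectangular AOI and a list of already-detected fixations, and the names `Fixation`, `RectAOI`, `time_to_first_fixation` and `time_spent` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Fixation:
    start_ms: float     # fixation onset, on the same clock as the stimulus
    duration_ms: float  # how long the fixation lasted
    x: float            # gaze position in stimulus coordinates
    y: float


@dataclass
class RectAOI:
    """A rectangular area of interest (a 2D simplification)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def time_to_first_fixation(fixations: List[Fixation], aoi: RectAOI,
                           stimulus_onset_ms: float = 0.0) -> Optional[float]:
    """Time from stimulus onset to the earliest fixation inside the AOI.

    Returns None if the AOI was never fixated.
    """
    hits = [f.start_ms for f in fixations if aoi.contains(f.x, f.y)]
    return min(hits) - stimulus_onset_ms if hits else None


def time_spent(fixations: List[Fixation], aoi: RectAOI) -> float:
    """Total duration of all fixations that landed inside the AOI."""
    return sum(f.duration_ms for f in fixations if aoi.contains(f.x, f.y))
```

In a virtual environment the area of interest would more likely be a 3D object than a rectangle, so the `contains` check is the part of this sketch that would change most.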

Highlighted Questions

- Can you see if an individual is excited using eye tracking?

Unfortunately, there is nothing inherent about eye tracking itself that tells you about a person’s emotional state.

What you can do is combine other sensors with your eye tracker. For example, excitement shows up as physiological arousal, which can be detected with Electrodermal Activity (also known as Galvanic Skin Response). Synchronizing that signal with your eye tracking data shows what the individual was looking at when they experienced excitement.
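As a rough illustration of what synchronizing the two signals means, the Python sketch below averages the EDA samples recorded during each fixation. It assumes both streams already share one clock and that the EDA timestamps are sorted; the function name `mean_eda_per_fixation` and the input layout are hypothetical, not an iMotions export format.

```python
from bisect import bisect_left, bisect_right
from statistics import mean
from typing import List, Optional, Tuple


def mean_eda_per_fixation(
    eda_times_ms: List[float],
    eda_values: List[float],
    fixations: List[Tuple[str, float, float]],
) -> List[Tuple[str, Optional[float]]]:
    """Average the EDA samples that fall inside each fixation window.

    fixations holds (label, start_ms, end_ms) tuples, where label names
    what was being looked at. eda_times_ms must be sorted ascending.
    A fixation with no EDA samples in its window gets None.
    """
    results = []
    for label, start_ms, end_ms in fixations:
        # Binary search for the slice of samples within [start_ms, end_ms].
        first = bisect_left(eda_times_ms, start_ms)
        last = bisect_right(eda_times_ms, end_ms)
        window = eda_values[first:last]
        results.append((label, mean(window) if window else None))
    return results
```

Skin conductance typically responds a little after the event that triggers it, so a real analysis would likely shift or widen these windows rather than use the raw fixation boundaries.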

- What’s the difference between eye tracker software and iMotions?

The software that comes with a manufacturer’s hardware can typically only handle eye tracking. iMotions software lets you collect and analyze eye tracking data and add other modalities such as facial expression analysis, EDA, EEG, and ECG. They are all integrated, so the data is shown together on one PC screen.

Further readings

- How Are Simulations Used in Human Behavior Research?

- Check out 5 essentials for Eye Tracking Research

We hope you are enjoying our webinar series with our expert panelists and we are excited about the opportunity to share our knowledge with you! We look forward to seeing you in our upcoming webinars. Feel free to register for the recordings and future webinars on our Events page.

Thank you for all the great feedback and support and we look forward to helping you with your future research using biosensors.

References:

Cipresso, Pietro. (2015). Modeling Behavior Dynamics using Computational Psychometrics within Virtual Worlds. Frontiers in Psychology, 6. doi:10.3389/fpsyg.2015.01725.